Mrigank Sharad

Approximate ADCs for In-Memory Computing

Aug 11, 2024

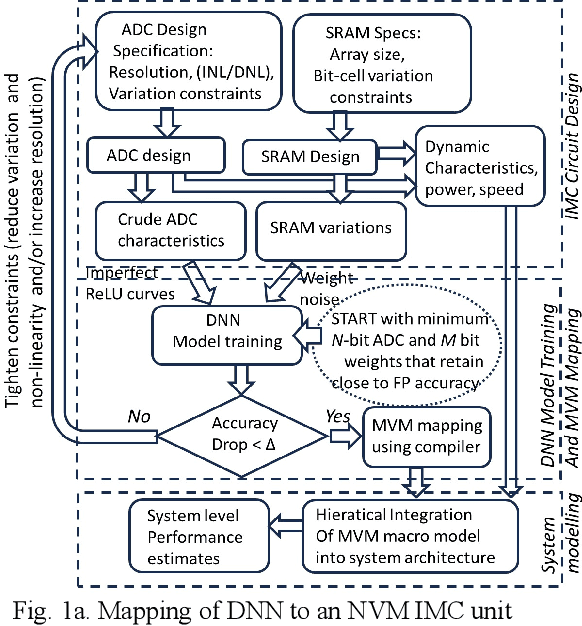

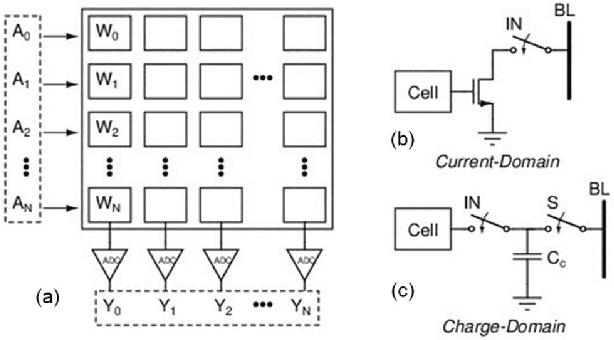

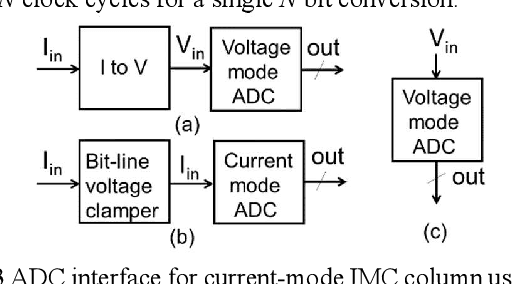

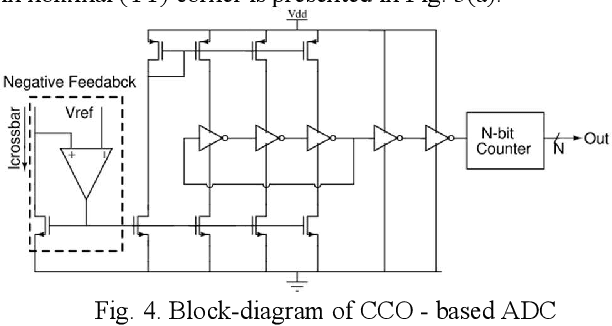

Abstract: In-memory computing (IMC) architectures for deep learning (DL) accelerators leverage energy-efficient and highly parallel matrix-vector multiplication (MVM) operations implemented directly in memory arrays. Such IMC designs have been explored using CMOS as well as emerging non-volatile memory (NVM) technologies such as RRAM. IMC architectures generally comprise a large number of cores, each consisting of memory arrays that store the trained weights of the DL model. Peripheral units such as DACs and ADCs are used to apply inputs and read out the output values. Recently reported designs reveal that the ADCs required for reading out the MVM results consume more than 85% of the total compute power and also dominate the area, thereby eroding the benefits of the IMC scheme. Mitigating ADC imperfections, namely non-linearity and variations, incurs significant design overhead because of dedicated calibration units. In this work, we present a peripheral-aware design of IMC cores to mitigate such overheads. It involves incorporating the non-idealities of the ADCs, along with those of the memory units, into the training of the DL models. The proposed approach applies equally well to the current-mode and charge-mode MVM operations demonstrated in recent years, and can significantly simplify the design of mixed-signal IMC units.
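As a rough illustration of what folding ADC non-idealities into the forward pass could look like, the sketch below models an MVM readout through an imperfect ADC. It is not the authors' model: the parabolic bow used for non-linearity, the per-column `inl` and `offset` parameters, and all function names are assumptions made for this example. In a peripheral-aware training flow, a model of this kind would stand in for the ideal quantizer during training so that the learned weights tolerate the ADC's behavior.

```python
def ideal_adc(x, bits, full_scale):
    """Uniform quantizer: map x in [0, full_scale] to an integer code."""
    levels = (1 << bits) - 1
    x = min(max(x, 0.0), full_scale)  # clip to the input range
    return round(x / full_scale * levels)


def nonideal_adc(x, bits, full_scale, inl=0.0, offset=0.0):
    """Hypothetical non-ideal ADC (assumed model, not from the paper).

    A parabolic bow of relative height `inl` and a constant `offset`
    distort the input before it is uniformly quantized.
    """
    x = min(max(x, 0.0), full_scale)
    u = x / full_scale
    # Assumed non-linearity: zero at both ends of the range, peak at mid-scale.
    u_distorted = u + inl * 4.0 * u * (1.0 - u)
    return ideal_adc(u_distorted * full_scale + offset, bits, full_scale)


def mvm(weights, inputs):
    """Plain matrix-vector product standing in for the analog array."""
    return [sum(w * v for w, v in zip(row, inputs)) for row in weights]


def imc_forward(weights, inputs, bits, full_scale, adc_params):
    """MVM followed by one non-ideal ADC per output column."""
    analog = mvm(weights, inputs)
    return [
        nonideal_adc(a, bits, full_scale, **params)
        for a, params in zip(analog, adc_params)
    ]


if __name__ == "__main__":
    weights = [[0.2, 0.2], [0.5, 0.5]]
    # First column has a bowed transfer curve, second column is ideal.
    codes = imc_forward(weights, [1.0, 1.0], bits=4, full_scale=1.0,
                        adc_params=[{"inl": 0.1}, {}])
    print(codes)
```

With `inl` and `offset` both zero, `nonideal_adc` reduces to the ideal quantizer, which makes it easy to compare a model trained with and without the non-ideality.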

Intelligent Wireless Sensor Nodes for Human Footstep Sound Classification for Security Application

Dec 23, 2019

Abstract: Sensor nodes in a wireless sensor network (WSN) for security surveillance applications should preferably be small, energy-efficient, and inexpensive, with on-sensor computational abilities. An appropriate data processing scheme in the sensor node can help reduce the power dissipation of the transceiver by compressing the information to be communicated. In this paper, we present a simulation-based study of human footstep sound classification in natural surroundings using simple time-domain features. We use a spiking neural network (SNN), a computationally lightweight classifier derived from an artificial neural network (ANN), for classification. A classification accuracy greater than 85% is achieved using the SNN, a degradation of ~5% compared to the ANN. The SNN scheme, along with the required feature extraction scheme, is amenable to low-power sub-threshold analog implementation. Results show that an all-analog implementation of the proposed SNN scheme can achieve significant power savings over a digital implementation of the same computing scheme, and also over other conventional digital architectures using frequency-domain feature extraction and ANN-based classification.

Power efficient Spiking Neural Network Classifier based on memristive crossbar network for spike sorting application

Feb 25, 2018

Abstract: In this paper, we present a power-efficient scheme for implementing a spike sorting module. Spike sorting is an important application in neural signal acquisition for implantable biomedical systems; its function is to map neural spikes (N-spikes) correctly to the neurons from which they originate. Accurate classification is a prerequisite for good performance of the subsequent stages in brain-machine interfaces (BMIs). The primary design constraint for the spike sorter module is low power with good accuracy. There is a trade-off in power consumption between on-chip and off-chip training of the N-spike features. In the former case, care has to be taken to make the computational units power efficient, whereas in the latter the data rate of wireless transmission should be minimized to reduce the power consumed by the transceivers. In this work, a 2-step shared training scheme involving a K-means sorter and a spiking neural network (SNN) is elaborated for on-chip training and classification. In addition, a low-power SNN classifier scheme using a memristive crossbar architecture is compared with a fully digital implementation. The advantage of the former classifier is that it is power efficient while providing accuracy comparable to that of the digital implementation, owing to the robustness of the SNN training algorithm, which tolerates variation in memristance well.
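The abstract does not detail how the K-means sorter feeds the SNN. As a minimal sketch of the clustering step alone, the code below runs standard K-means (Lloyd's algorithm) on small feature vectors; the 2-D features, the initial centroids, and the idea that the resulting labels could then serve as training targets for a classifier are all assumptions made for illustration, not the paper's method.

```python
def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length tuples."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points, init_centroids, iterations=10):
    """Standard Lloyd's K-means on a list of feature tuples.

    Returns (centroids, labels). Initial centroids are passed in
    explicitly so the result is deterministic.
    """
    centroids = [tuple(c) for c in init_centroids]
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point goes to its nearest centroid.
        labels = [
            min(range(len(centroids)), key=lambda k: sq_dist(p, centroids[k]))
            for p in points
        ]
        # Update step: each centroid moves to the mean of its members.
        for k in range(len(centroids)):
            members = [p for p, lab in zip(points, labels) if lab == k]
            if members:  # keep the old centroid if a cluster is empty
                centroids[k] = tuple(
                    sum(dim) / len(members) for dim in zip(*members)
                )
    return centroids, labels


if __name__ == "__main__":
    # Hypothetical 2-D spike features (e.g. two shape descriptors per spike).
    features = [(0.0, 0.0), (0.0, 1.0), (10.0, 10.0), (10.0, 11.0)]
    centroids, labels = kmeans(features, [(0.0, 0.0), (10.0, 10.0)])
    print(centroids, labels)
```

Passing the initial centroids explicitly keeps the example reproducible; a practical sorter would also need an initialization strategy and a way to choose the number of clusters.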
