Moshe Shechner

Relaxed Models for Adversarial Streaming: The Advice Model and the Bounded Interruptions Model

Jan 22, 2023

Abstract: Streaming algorithms are typically analyzed in the oblivious setting, where we assume that the input stream is fixed in advance. Recently, there has been growing interest in designing adversarially robust streaming algorithms that must maintain utility even when the input stream is chosen adaptively and adversarially as the execution progresses. While several fascinating results are known for the adversarial setting, in general, robustness comes at a very high cost in terms of the required space. Motivated by this, in this work we set out to explore intermediate models that allow us to interpolate between the oblivious and the adversarial models. Specifically, we put forward the following two models: (1) *The advice model*, in which the streaming algorithm may occasionally ask for one bit of advice. (2) *The bounded interruptions model*, in which we assume that the adversary is only partially adaptive. We present both positive and negative results for each of these two models. In particular, we present generic reductions from each of these models to the oblivious model. This allows us to design robust algorithms with significantly improved space complexity compared to what is known in the plain adversarial model.

On the Robustness of CountSketch to Adaptive Inputs

Feb 28, 2022

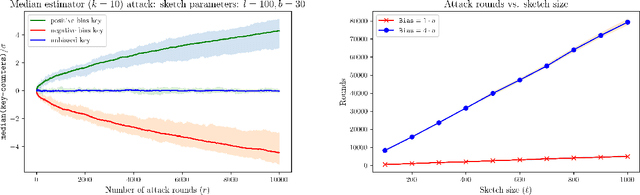

Abstract: CountSketch is a popular dimensionality reduction technique that maps vectors to a lower dimension using randomized linear measurements. The sketch supports recovering $\ell_2$-heavy hitters of a vector (entries with $v[i]^2 \geq \frac{1}{k}\|\boldsymbol{v}\|^2_2$). We study the robustness of the sketch in adaptive settings where input vectors may depend on the output from prior inputs. Adaptive settings arise in processes with feedback or with adversarial attacks. We show that the classic estimator is not robust, and can be attacked with a number of queries of the order of the sketch size. We propose a robust estimator (for a slightly modified sketch) that allows for a quadratic number of queries in the sketch size, which is an improvement factor of $\sqrt{k}$ (for $k$ heavy hitters) over prior work.
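For readers unfamiliar with the sketch, the following is a minimal illustration of a textbook CountSketch with the classic median estimator, that is, the estimator the abstract describes as not robust. It is not the robust estimator proposed in the paper. The class name `CountSketch`, its parameters (`dim`, `rows`, `width`, `seed`), and the use of explicit random tables in place of hash functions are assumptions made here for brevity.

```python
import random
import statistics


class CountSketch:
    """Textbook CountSketch over vectors of a fixed dimension (illustrative only)."""

    def __init__(self, dim, rows, width, seed=0):
        rng = random.Random(seed)
        # Assumption: explicit random tables stand in for the hash functions
        # a space-efficient implementation would use.
        self.bucket = [[rng.randrange(width) for _ in range(dim)] for _ in range(rows)]
        self.sign = [[rng.choice((-1, 1)) for _ in range(dim)] for _ in range(rows)]
        self.table = [[0.0] * width for _ in range(rows)]

    def update(self, i, delta):
        """Apply v[i] += delta; each row is a randomized linear measurement of v."""
        for r, row in enumerate(self.table):
            row[self.bucket[r][i]] += self.sign[r][i] * delta

    def estimate(self, i):
        """Classic estimator: median over rows of the signed bucket value."""
        return statistics.median(
            self.sign[r][i] * self.table[r][self.bucket[r][i]]
            for r in range(len(self.table))
        )


if __name__ == "__main__":
    sketch = CountSketch(dim=100, rows=5, width=16, seed=1)
    sketch.update(7, 5.0)      # the vector has a single nonzero entry
    print(sketch.estimate(7))  # prints 5.0: nothing else collides with entry 7
```

Because the sketch is linear in the input, an adaptive attacker who observes the estimates can choose later inputs that correlate with the fixed random signs and buckets, which is the setting the abstract studies.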

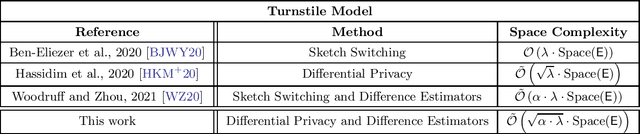

A Framework for Adversarial Streaming via Differential Privacy and Difference Estimators

Jul 30, 2021

Abstract: Streaming algorithms are algorithms for processing large data streams, using only a limited amount of memory. Classical streaming algorithms operate under the assumption that the input stream is fixed in advance. Recently, there has been growing interest in studying streaming algorithms that provide provable guarantees even when the input stream is chosen by an adaptive adversary. Such streaming algorithms are said to be *adversarially robust*. We propose a novel framework for adversarial streaming that hybridizes two recently suggested frameworks by Hassidim et al. (2020) and by Woodruff and Zhou (2021). These recently suggested frameworks rely on very different ideas, each with its own strengths and weaknesses. We combine these two frameworks (in a non-trivial way) into a single hybrid framework that gains from both approaches to obtain superior performance for turnstile streams.

Differentially Private Algorithms for Clustering with Stability Assumptions

Jun 11, 2021

Abstract: We study the problem of differentially private clustering under input-stability assumptions. Despite the ever-growing volume of work on differential privacy in general and differentially private clustering in particular, only three works (Nissim et al. 2007, Wang et al. 2015, Huang et al. 2018) looked at the problem of privately clustering "nice" k-means instances, all three relying on the sample-and-aggregate framework and all three measuring utility in terms of Wasserstein distance between the true cluster centers and the centers returned by the private algorithm. In this work we improve upon this line of work on multiple axes. We present a far simpler algorithm for clustering stable inputs (not relying on the sample-and-aggregate framework), and analyze its utility in both the Wasserstein distance and the k-means cost. Moreover, our algorithm has straightforward analogues for "nice" k-median instances and for the local model of differential privacy.
