Mohit Vohra

Visually Guided UGV for Autonomous Mobile Manipulation in Dynamic and Unstructured GPS Denied Environments

Dec 15, 2021

Abstract: A robotic solution that enables unmanned ground vehicles (UGVs) to execute the highly complex task of object manipulation autonomously is presented. This paper primarily focuses on developing an autonomous robotic system capable of assembling elementary blocks to build large 3D structures in GPS-denied environments. The key contributions of this system paper are (i) the design of a deep learning-based unified multi-task visual perception system for object detection, part detection, instance segmentation, and tracking, (ii) an electromagnetic gripper design for robust grasping, and (iii) system integration in which multiple system components are integrated to develop an optimized software stack. The entire mechatronic and algorithmic design of the UGV for the above application is detailed in this work. The performance and efficacy of the overall system are reported through several rigorous experiments.

End-To-End Real-Time Visual Perception Framework for Construction Automation

Jul 27, 2021

Abstract: In this work, we present a robotic solution to automate the task of wall construction. To that end, we present an end-to-end visual perception framework that can quickly detect and localize bricks in clutter. Further, we present a computationally light method of brick pose estimation that incorporates the above information. Unlike YOLO and SSD, which predict upright boxes, the proposed detection network predicts a rotated box, thereby maximizing the share of the predicted box that is occupied by the object. Precision (P), recall (R), and mean average precision (mAP) scores are reported to evaluate the proposed framework. We observed that, for our task, the proposed scheme outperforms the upright bounding box detectors. Further, we deploy the proposed visual perception framework on a robotic system equipped with a UR5 robot manipulator and demonstrate that the system can successfully replicate a simplified version of the wall-building task in an autonomous mode.
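The benefit of a rotated box over an upright one can be illustrated with plain geometry. The sketch below is not the paper's detection network; it only computes how much of the tightest upright (axis-aligned) box is covered by a rectangular object rotated by a given angle. The function name and the 4:1 brick proportions are assumptions made for illustration.

```python
import math

def upright_box_fill_ratio(width, height, angle_rad):
    """Fraction of the tightest upright (axis-aligned) box that is covered
    by a width x height rectangle rotated by angle_rad."""
    c, s = abs(math.cos(angle_rad)), abs(math.sin(angle_rad))
    box_w = width * c + height * s   # horizontal extent of the rotated rectangle
    box_h = width * s + height * c   # vertical extent of the rotated rectangle
    return (width * height) / (box_w * box_h)

# Hypothetical brick with a 4:1 aspect ratio, viewed at several in-plane rotations.
for deg in (0, 15, 45, 90):
    ratio = upright_box_fill_ratio(4.0, 1.0, math.radians(deg))
    print(f"{deg:>2} deg: object fills {ratio:.2f} of the upright box")
```

For this hypothetical brick at 45 degrees, the object covers only 32% of the upright box, whereas a rotated box aligned with the brick would be filled completely. The remaining area is background or neighbouring bricks, which is one reason upright boxes can be a poor fit in clutter.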

Edge and Corner Detection in Unorganized Point Clouds for Robotic Pick and Place Applications

Apr 21, 2021

Abstract: In this paper, we propose a novel edge and corner detection algorithm for unorganized point clouds. Our edge detection method classifies a query point as an edge point by evaluating the distribution of local neighboring points around the query point. The proposed technique has been tested on generic items such as dragons, bunnies, and coffee cups from the Stanford 3D scanning repository. The proposed technique can be directly applied to real and unprocessed point cloud data of random clutter of objects. To demonstrate the proposed technique's efficacy, we compare it to other solutions for 3D edge extraction in unorganized point cloud data. We observed that the proposed method could handle raw and noisy data with little variation in parameters compared to other methods. We also extend the algorithm to estimate the 6D pose of known objects in the presence of dense clutter while handling multiple instances of the object. The overall approach is tested for a warehouse application, where an actual UR5 robot manipulator is used for robotic pick and place operations in an autonomous mode.
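The abstract does not spell out how the neighbor distribution is evaluated, so the following is only a minimal sketch of one common heuristic, not the paper's algorithm. It assumes that a point lies on an edge when the centroid of its neighbors is shifted away from the point itself, because the neighbors then lie mostly on one side. The function names, the brute-force neighbor search, and the `radius` and `threshold` values are all illustrative assumptions.

```python
import math

def centroid_shift_scores(points, radius):
    """For each 3D point, return the distance between the point and the
    centroid of its neighbors within `radius`, normalized by `radius`."""
    scores = []
    for p in points:
        # Brute-force radius search; the point itself is always included.
        nbrs = [q for q in points if math.dist(p, q) <= radius]
        centroid = tuple(sum(q[i] for q in nbrs) / len(nbrs) for i in range(3))
        scores.append(math.dist(p, centroid) / radius)
    return scores

def detect_edges(points, radius, threshold):
    """Flag points whose neighborhood is lopsided (assumed edge criterion)."""
    return [s > threshold for s in centroid_shift_scores(points, radius)]

# Toy data: a flat 5 x 5 patch of points; only its border should be flagged.
grid = [(float(x), float(y), 0.0) for x in range(5) for y in range(5)]
flags = detect_edges(grid, radius=1.5, threshold=0.2)
print(sum(flags), "of", len(grid), "points flagged as edge points")
```

On real scans the brute-force search would normally be replaced by a spatial index such as a k-d tree, and the threshold would need tuning against sensor noise.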

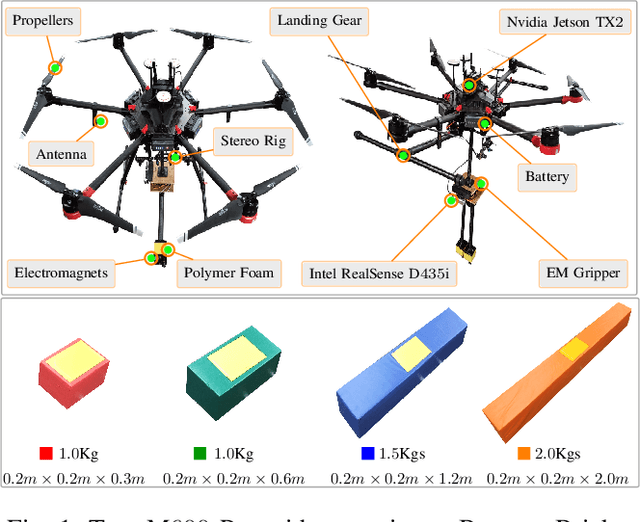

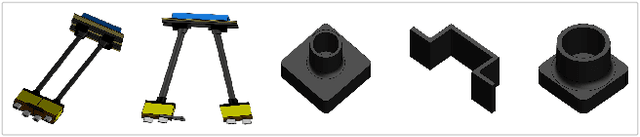

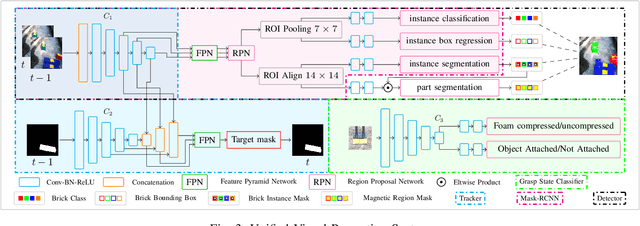

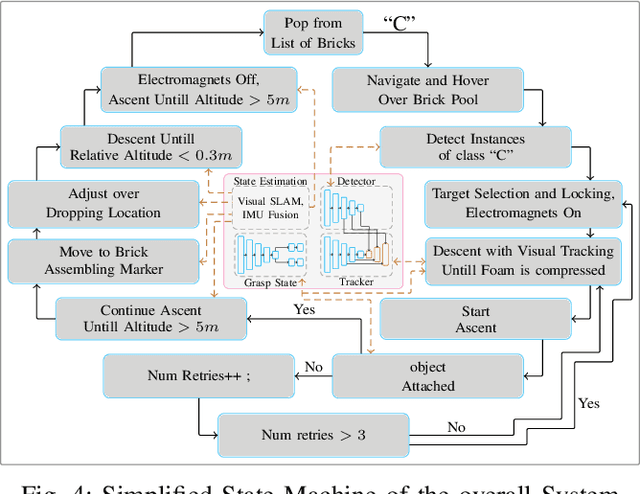

Towards Deep Learning Assisted Autonomous UAVs for Manipulation Tasks in GPS-Denied Environments

Jan 16, 2021

Abstract: In this work, we present a pragmatic approach to enable unmanned aerial vehicles (UAVs) to autonomously perform the highly complicated task of object pick and place. This paper is largely inspired by Challenge 2 of MBZIRC 2020 and is primarily focused on the task of assembling large 3D structures in outdoor, GPS-denied environments. The primary contributions of this system are: (i) a novel, computationally efficient, deep learning based unified multi-task visual perception system for target localization, part segmentation, and tracking, (ii) a novel deep learning based grasp state estimation, (iii) a retracting electromagnetic gripper design, (iv) a remote computing approach which exploits state-of-the-art MIMO based high speed (5000 Mb/s) wireless links to allow the UAVs to execute compute-intensive tasks on remote high-end compute servers, and (v) system integration in which several system components are woven together in order to develop an optimized software stack. We use a DJI Matrice-600 Pro, a hex-rotor UAV, and interface it with the custom-designed gripper. Our framework is deployed on the specified UAV in order to report the performance analysis of the individual modules. Apart from the manipulation system, we also highlight several hidden challenges associated with the UAVs in this context.

Domain Independent Unsupervised Learning to grasp the Novel Objects

Jan 09, 2020

Abstract: One of the main challenges in vision-based grasping is the selection of feasible grasp regions while interacting with novel objects. Recent approaches exploit the power of convolutional neural networks (CNNs) to achieve accurate grasping at the cost of high computational power and time. In this paper, we present a novel unsupervised learning based algorithm for the selection of feasible grasp regions. Unsupervised learning infers the patterns in a dataset without any external labels. We apply k-means clustering on the image plane to identify the grasp regions, followed by an axis assignment method. We define a novel concept, the Grasp Decide Index (GDI), to select the best grasp pose in the image plane. We have conducted several experiments in cluttered and isolated settings on standard objects from the Amazon Robotics Challenge 2017 and the Amazon Picking Challenge 2016. We compare the results with prior learning based approaches to validate the robustness and adaptive nature of our algorithm for a variety of novel objects in different domains.
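The clustering step mentioned above can be sketched with a plain k-means over pixel coordinates. This is a generic illustration, not the paper's pipeline: the features actually clustered, the axis assignment method, and the GDI are not described in the abstract and are not reproduced here. The function names and the deterministic initialization are assumptions.

```python
def sq_dist(a, b):
    """Squared Euclidean distance between two 2D points."""
    return (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2

def assign(pixels, centers):
    """Index of the nearest center for every pixel."""
    return [min(range(len(centers)), key=lambda j: sq_dist(p, centers[j]))
            for p in pixels]

def kmeans_2d(pixels, k, max_iters=50):
    """Plain k-means on (x, y) pixel coordinates.
    Initial centers are evenly spaced samples of the input (an assumption
    made so that the example is deterministic)."""
    centers = [pixels[i * len(pixels) // k] for i in range(k)]
    for _ in range(max_iters):
        labels = assign(pixels, centers)
        new_centers = []
        for j in range(k):
            members = [p for p, lab in zip(pixels, labels) if lab == j]
            if members:
                new_centers.append((sum(x for x, _ in members) / len(members),
                                    sum(y for _, y in members) / len(members)))
            else:
                new_centers.append(centers[j])  # keep an empty cluster's center
        if new_centers == centers:
            break  # assignments can no longer change
        centers = new_centers
    return centers, assign(pixels, centers)

# Two hypothetical candidate regions in the image plane.
pixels = [(0, 0), (0, 1), (1, 0), (1, 1), (10, 10), (10, 11), (11, 10), (11, 11)]
centers, labels = kmeans_2d(pixels, k=2)
print(centers, labels)
```

Each resulting cluster can then be treated as a candidate region for later scoring. How the candidates are ranked is specific to the paper and is left out of this sketch.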

Real-time Grasp Pose Estimation for Novel Objects in Densely Cluttered Environment

Jan 03, 2020

Abstract: Grasping of novel objects in pick and place applications is a fundamental and challenging problem in robotics, specifically for complex-shaped objects. It is observed that well-known strategies such as (i) grasping from the centroid of the object and (ii) grasping along the major axis of the object often fail for complex-shaped objects. In this paper, a real-time grasp pose estimation strategy for novel objects in robotic pick and place applications is proposed. The proposed technique estimates the object contour in the point cloud and predicts the grasp pose along with the object skeleton in the image plane. The technique is tested on objects such as a ball container, a hand weight, and a tennis ball, and even on complex-shaped objects such as a blower (non-convex shape). It is observed that the proposed strategy performs very well for complex-shaped objects and predicts valid grasp configurations in comparison with the above strategies. The proposed grasping technique is validated experimentally in two scenarios: when the objects are placed distinctly and when the objects are placed in dense clutter. Grasp accuracies of 88.16% and 77.03%, respectively, are reported. All the experiments are performed with a real UR10 robot manipulator along with a WSG-50 two-finger gripper for grasping of objects.
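The two baseline strategies named above, grasping at the centroid and grasping along the major axis, can be sketched from the second-order moments of an object mask. This illustrates the baselines only, not the proposed contour and skeleton method. The function name and the toy L-shaped mask are assumptions.

```python
import math

def centroid_and_major_axis(pixels):
    """Centroid and major-axis angle (radians, measured from the x axis)
    of a set of (x, y) object pixels, from their second-order moments."""
    n = len(pixels)
    cx = sum(x for x, _ in pixels) / n
    cy = sum(y for _, y in pixels) / n
    sxx = sum((x - cx) ** 2 for x, _ in pixels) / n
    syy = sum((y - cy) ** 2 for _, y in pixels) / n
    sxy = sum((x - cx) * (y - cy) for x, y in pixels) / n
    # Orientation of the principal eigenvector of the 2 x 2 covariance matrix.
    angle = 0.5 * math.atan2(2.0 * sxy, sxx - syy)
    return (cx, cy), angle

# Hypothetical non-convex (L-shaped) object mask.
l_shape = [(0, y) for y in range(5)] + [(x, 0) for x in range(1, 5)]
(cx, cy), angle = centroid_and_major_axis(l_shape)
on_object = (round(cx), round(cy)) in l_shape
print(f"centroid = ({cx:.2f}, {cy:.2f}), lies on the object: {on_object}")
```

For this L-shaped mask the centroid falls in the empty corner rather than on the object. That is one plausible way a centroid-based grasp can fail on non-convex shapes such as the blower mentioned in the abstract.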
