Mohammad Billah

Captioning Near-Future Activity Sequences

Aug 02, 2019

Abstract: Most existing work on human activity analysis focuses on recognition or early recognition of activity labels from complete or partial observations. Similarly, existing video captioning approaches focus on the observed events in videos. Predicting the labels and captions of future activities, where no frames of the predicted activities have been observed, is a challenging problem with important applications that require an anticipatory response. In this work, we propose a system that can infer the labels and captions of a sequence of future activities. Our proposed network for label prediction of a future activity sequence is similar to a hybrid Siamese network with three branches: the first branch takes visual features from the objects present in the scene, the second branch takes observed activity features, and the third branch captures the last observed activity features. The predicted labels and the observed scene context are then mapped to meaningful captions using a sequence-to-sequence learning-based method. Experiments on three challenging activity analysis datasets and a video description dataset demonstrate that both our label prediction framework and our captioning framework outperform the state of the art.

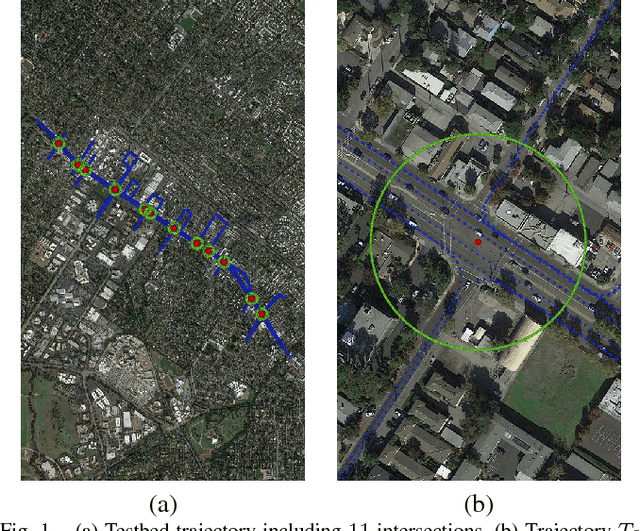

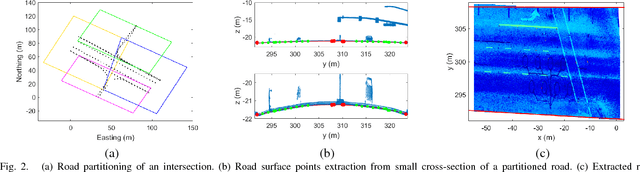

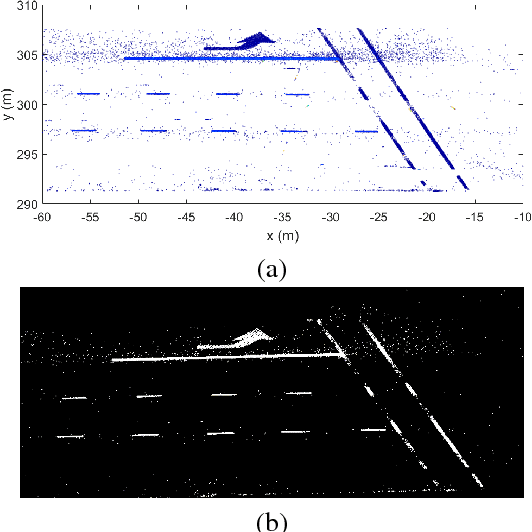

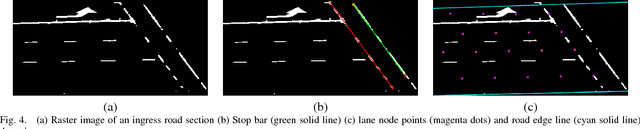

Challenges in Partially-Automated Roadway Feature Mapping Using Mobile Laser Scanning and Vehicle Trajectory Data

Feb 09, 2019

Abstract: Connected-vehicle and driver-assistance applications are greatly facilitated by Enhanced Digital Maps (EDMs) that represent roadway features (e.g., lane edges or centerlines, stop bars). Due to the large number of signalized intersections and miles of roadway, manual development of EDMs on a global basis is not feasible. Mobile Terrestrial Laser Scanning (MTLS) is the preferred data acquisition method to provide data for automated EDM development. Such systems provide an MTLS trajectory and a point cloud for the roadway environment. The challenge is to automatically convert these data into an EDM. This article presents a new processing and feature extraction method, an experimental demonstration providing SAE-J2735 map messages for eleven example intersections, and a discussion of the results that points out remaining challenges and suggests directions for future research.

Multi-View Frame Reconstruction with Conditional GAN

Sep 27, 2018

Abstract: Multi-view frame reconstruction is an important problem, particularly when multiple frames are missing and the past and future frames within the camera are far apart from the missing ones. Realistic, coherent frames can still be reconstructed using corresponding frames from other overlapping cameras. We propose an adversarial approach to learn the spatio-temporal representation of the missing frame using a conditional Generative Adversarial Network (cGAN). The conditional input to each cGAN is the preceding or following frames within the camera or the corresponding frames in other overlapping cameras, all of which are merged together using a weighted average. Representations learned from frames within the camera are given more weight than those learned from other cameras when they are close to the missing frames, and vice versa. Experiments on two challenging datasets demonstrate that our framework produces results comparable to the state-of-the-art reconstruction method in a single camera and achieves promising performance in multi-camera scenarios.
