Mohamed El Amine Elforaici

Semi-supervised ViT knowledge distillation network with style transfer normalization for colorectal liver metastases survival prediction

Nov 17, 2023

Abstract: Colorectal liver metastases (CLM) significantly impact colon cancer patients, influencing survival based on systemic chemotherapy response. Traditional methods like tumor grading scores (e.g., tumor regression grade - TRG) for prognosis suffer from subjectivity, time constraints, and expertise demands. Current machine learning approaches often focus on radiological data, yet the relevance of histological images for survival predictions, capturing intricate tumor microenvironment characteristics, is gaining recognition. To address these limitations, we propose an end-to-end approach for automated prognosis prediction using histology slides stained with H&E and HPS. We first employ a Generative Adversarial Network (GAN) for slide normalization to reduce staining variations and improve the overall quality of the images that are used as input to our prediction pipeline. We propose a semi-supervised model to perform tissue classification from sparse annotations, producing feature maps. We use an attention-based approach that weighs the importance of different slide regions in producing the final classification results. We exploit the extracted features for the metastatic nodules and surrounding tissue to train a prognosis model. In parallel, we train a vision Transformer (ViT) in a knowledge distillation framework to replicate and enhance the performance of the prognosis prediction. In our evaluation on a clinical dataset of 258 patients, our approach demonstrates superior performance with c-indexes of 0.804 (0.014) for OS and 0.733 (0.014) for TTR. Achieving 86.9% to 90.3% accuracy in predicting TRG dichotomization and 78.5% to 82.1% accuracy for the 3-class TRG classification task, our approach outperforms comparative methods. Our proposed pipeline can provide automated prognosis for pathologists and oncologists, and can greatly promote precision medicine progress in managing CLM patients.
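The c-index reported in this abstract is a ranking metric for survival models. As a minimal sketch of how such a score is typically computed (Harrell's concordance index, assumed here; the abstract does not say which variant or library the authors used), the function below counts how often the patient with the earlier observed event was also assigned the higher risk. The function name `concordance_index` and the toy data are hypothetical and only illustrate the metric.

```python
def concordance_index(times, events, risk_scores):
    """Harrell's c-index: share of comparable pairs ranked correctly.

    times       -- follow-up time per patient
    events      -- 1 if the event was observed, 0 if censored
    risk_scores -- model output, higher means higher predicted risk
    """
    concordant = 0.0
    comparable = 0
    n = len(times)
    for i in range(n):
        for j in range(n):
            # A pair is comparable when patient i had an observed event
            # strictly before patient j's follow-up time.
            if events[i] and times[i] < times[j]:
                comparable += 1
                if risk_scores[i] > risk_scores[j]:
                    concordant += 1.0   # earlier event, higher risk: correct
                elif risk_scores[i] == risk_scores[j]:
                    concordant += 0.5   # ties count as half
    if comparable == 0:
        raise ValueError("no comparable pairs")
    return concordant / comparable


# Toy data (hypothetical, not from the paper).
times = [5, 8, 12, 20]
events = [1, 1, 0, 1]
risk = [0.9, 0.4, 0.6, 0.1]
c = concordance_index(times, events, risk)
print(c)  # 4 of 5 comparable pairs are ordered correctly
```

A value of 0.5 corresponds to random ranking and 1.0 to perfect ranking, which gives context for the 0.804 and 0.733 figures above.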

Posture recognition using an RGB-D camera : exploring 3D body modeling and deep learning approaches

Oct 13, 2018

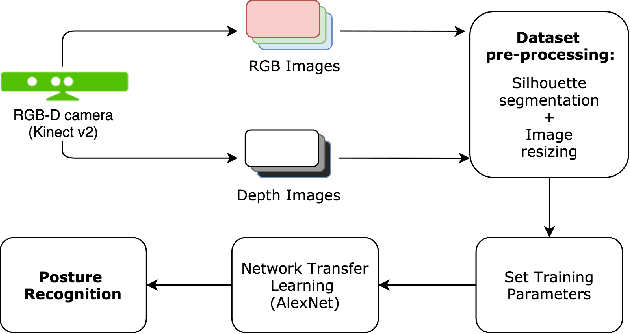

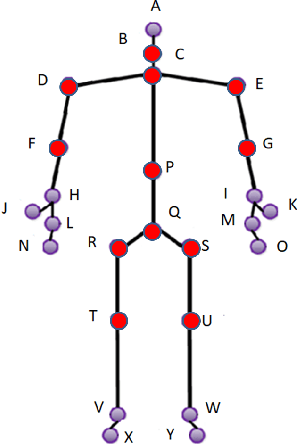

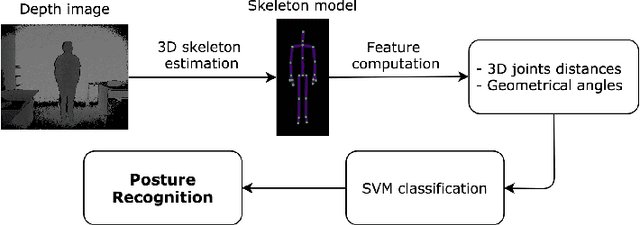

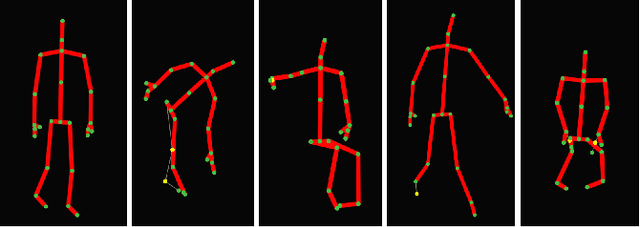

Abstract: The emergence of RGB-D sensors offered new possibilities for addressing complex artificial vision problems efficiently. Human posture recognition is among these computer vision problems, with a wide range of applications such as ambient assisted living and intelligent health care systems. In this context, our paper presents novel methods and ideas to design automatic posture recognition systems using an RGB-D camera. More specifically, we introduce two supervised methods to learn and recognize human postures using the main types of visual data provided by an RGB-D camera. The first method is based on convolutional features extracted from 2D images. Convolutional Neural Networks (CNNs) are trained to recognize human postures using transfer learning on RGB and depth images. The second method models the posture using the body joint configuration in 3D space. Posture recognition is then performed through SVM classification of 3D skeleton-based features. To evaluate the proposed methods, we created a challenging posture recognition dataset with considerable variability in the acquisition conditions. The experimental results demonstrated comparable performance and high precision for both methods in recognizing human postures, with a slight superiority for the CNN-based method when applied to depth images. Moreover, the two approaches demonstrated high robustness to several perturbation factors, such as scale and orientation change.
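The abstract does not specify which 3D skeleton-based features are fed to the SVM. As one illustrative possibility, assumed here and not taken from the paper, the sketch below builds a feature vector from pairwise joint distances divided by a reference bone length. Such features are unchanged by translation, rotation, and uniform scaling of the skeleton, which is the kind of robustness to scale and orientation change the abstract mentions. The function name `pairwise_distance_features`, the `ref` parameter, and the four-joint toy skeleton are all hypothetical.

```python
import math
from itertools import combinations


def pairwise_distance_features(joints, ref=(0, 1)):
    """Normalized pairwise joint distances for one 3D skeleton.

    joints -- list of (x, y, z) joint positions
    ref    -- indices of two joints whose distance is the unit length
              (hypothetical choice, e.g. a torso segment)
    """
    ref_len = math.dist(joints[ref[0]], joints[ref[1]])
    if ref_len == 0:
        raise ValueError("reference joints coincide")
    # Distances ignore translation and rotation; dividing by the
    # reference length removes uniform scale.
    return [
        math.dist(joints[a], joints[b]) / ref_len
        for a, b in combinations(range(len(joints)), 2)
    ]


# Toy skeleton with four joints (hypothetical coordinates).
skeleton = [(0.0, 0.0, 0.0), (0.0, 1.0, 0.0), (1.0, 1.0, 0.0), (0.0, 2.0, 1.0)]
features = pairwise_distance_features(skeleton)
print(len(features))  # 6 joint pairs for 4 joints
```

A vector like `features` could then be passed to any standard SVM implementation; the classifier itself is omitted here to keep the sketch dependency-free.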
