Mohamed Abdelkader

VECTOR: Velocity-Enhanced GRU Neural Network for Real-Time 3D UAV Trajectory Prediction

Oct 24, 2024

Abstract: This paper tackles the challenge of real-time 3D trajectory prediction for UAVs, which is critical for applications such as aerial surveillance and defense. Existing prediction models that rely primarily on position data struggle with accuracy, especially when UAV movements fall outside the position domain used in training. Our research identifies a gap in utilizing velocity estimates (first-order dynamics) to better capture the motion and to enhance prediction accuracy and generalizability in any position domain. To bridge this gap, we propose a new trajectory prediction method using Gated Recurrent Units (GRUs) within sequence-based neural networks. Unlike traditional methods that rely on RNNs or transformers, this approach forecasts future velocities and positions based on historical velocity data instead of positions. This is designed to enhance prediction accuracy and scalability, overcoming challenges faced by conventional models in handling complex UAV dynamics. The methodology employs both synthetic and real-world 3D UAV trajectory data, capturing a wide range of flight patterns, speeds, and agility. Synthetic data is generated using the Gazebo simulator and PX4 Autopilot, while real-world data comes from the UZH-FPV and Mid-Air drone racing datasets. The GRU-based models significantly outperform state-of-the-art RNN approaches, with a mean square error (MSE) as low as 2 x 10^-8. Overall, our findings confirm the effectiveness of incorporating velocity data in improving the accuracy of UAV trajectory predictions across both synthetic and real-world scenarios, both inside and outside the position distribution seen in training. Finally, we open-source our 5000-trajectory dataset and a ROS 2 package to facilitate integration with existing ROS-based UAV systems.
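The abstract's central idea, predicting from velocities rather than positions, can be illustrated with a minimal Python sketch. It is not the paper's GRU model. It only shows why a velocity history is unchanged when the whole trajectory is shifted, and how predicted velocities can be accumulated back into positions. The function names, the finite-difference velocity estimate, and the forward-Euler integration are assumptions made for illustration.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def positions_to_velocities(positions: List[Vec3], dt: float) -> List[Vec3]:
    """Estimate velocities by finite differences of consecutive positions.

    Hypothetical helper: a constant offset added to every position cancels
    in the difference, so the result does not depend on where the flight
    took place.
    """
    velocities = []
    for (x0, y0, z0), (x1, y1, z1) in zip(positions, positions[1:]):
        velocities.append(((x1 - x0) / dt, (y1 - y0) / dt, (z1 - z0) / dt))
    return velocities


def integrate_velocities(last_position: Vec3,
                         velocities: List[Vec3],
                         dt: float) -> List[Vec3]:
    """Accumulate (predicted) velocities into positions with forward Euler.

    Assumption: a fixed time step dt between samples.
    """
    x, y, z = last_position
    positions = []
    for vx, vy, vz in velocities:
        x, y, z = x + vx * dt, y + vy * dt, z + vz * dt
        positions.append((x, y, z))
    return positions
```

In this sketch a sequence model would sit between the two helpers: it would consume the output of `positions_to_velocities` and produce the velocity list passed to `integrate_velocities`, anchored at the last observed position.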

SMART-TRACK: A Novel Kalman Filter-Guided Sensor Fusion For Robust UAV Object Tracking in Dynamic Environments

Oct 14, 2024

Abstract: In the field of sensor fusion and state estimation for object detection and localization, ensuring accurate tracking in dynamic environments poses significant challenges. Traditional methods like the Kalman Filter (KF) often fail when measurements are intermittent, leading to rapid divergence in state estimates. To address this, we introduce SMART (Sensor Measurement Augmentation and Reacquisition Tracker), a novel approach that leverages high-frequency state estimates from the KF to guide the search for new measurements, maintaining tracking continuity even when direct measurements falter. This is crucial for dynamic environments where traditional methods struggle. Our contributions include: 1) Versatile Measurement Augmentation Using KF Feedback: We implement a versatile measurement augmentation system that serves as a backup when primary object detectors fail intermittently. This system is adaptable to various sensors, demonstrated using depth cameras where the KF's 3D predictions are projected into 2D depth image coordinates, integrating nonlinear covariance propagation techniques simplified to first-order approximations. 2) Open-source ROS2 Implementation: We provide an open-source ROS2 implementation of the SMART-TRACK framework, validated in a realistic simulation environment using Gazebo and ROS2, fostering broader adaptation and further research. Our results showcase significant enhancements in tracking stability, with estimation RMSE as low as 0.04 m during measurement disruptions, advancing the robustness of UAV tracking and expanding the potential for reliable autonomous UAV operations in complex scenarios. The implementation is available at https://github.com/mzahana/SMART-TRACK.
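The projection step described in contribution 1 can be sketched as follows. This is a minimal illustration under stated assumptions, not the SMART-TRACK implementation: it assumes a pinhole camera model with hypothetical intrinsics `fx`, `fy`, `cx`, `cy`, and a KF position estimate already expressed in the camera frame. The first-order approximation propagates the 3x3 position covariance through the projection Jacobian J, giving a 2x2 pixel covariance J Σ Jᵀ that could bound a search region in the depth image.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def project_with_covariance(p_cam: Tuple[float, float, float],
                            cov: Matrix,
                            fx: float, fy: float,
                            cx: float, cy: float
                            ) -> Tuple[Tuple[float, float], Matrix]:
    """Project a 3D point (camera frame) to pixels and propagate covariance.

    Assumptions (not from the source): pinhole model, Z axis pointing
    forward, cov is the 3x3 covariance of the point position.
    """
    X, Y, Z = p_cam
    if Z <= 0.0:
        raise ValueError("point must be in front of the camera (Z > 0)")

    # Pinhole projection.
    u = fx * X / Z + cx
    v = fy * Y / Z + cy

    # Jacobian of (u, v) with respect to (X, Y, Z): the first-order
    # linearization of the nonlinear projection.
    J = [[fx / Z, 0.0, -fx * X / (Z * Z)],
         [0.0, fy / Z, -fy * Y / (Z * Z)]]

    # cov_uv = J * cov * J^T, written out with plain loops.
    J_cov = [[sum(J[i][k] * cov[k][j] for k in range(3)) for j in range(3)]
             for i in range(2)]
    cov_uv = [[sum(J_cov[i][k] * J[j][k] for k in range(3)) for j in range(2)]
              for i in range(2)]
    return (u, v), cov_uv
```

The diagonal of the returned matrix gives pixel variances along the image axes, which is one plausible way to size a window around the predicted pixel when the primary detector drops out.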

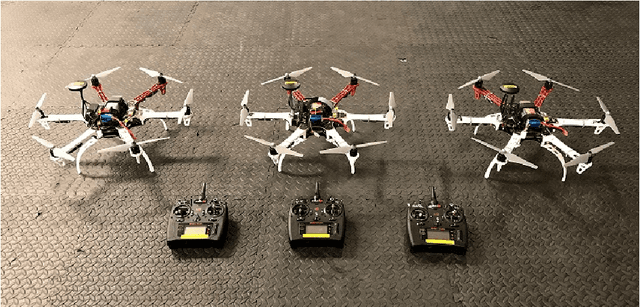

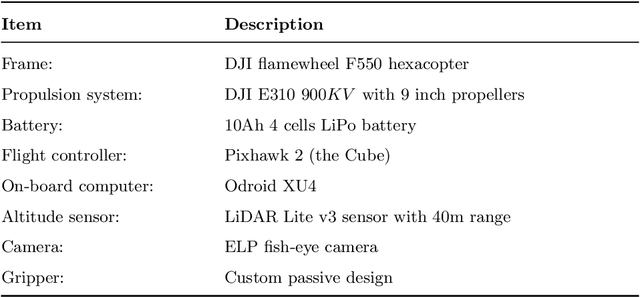

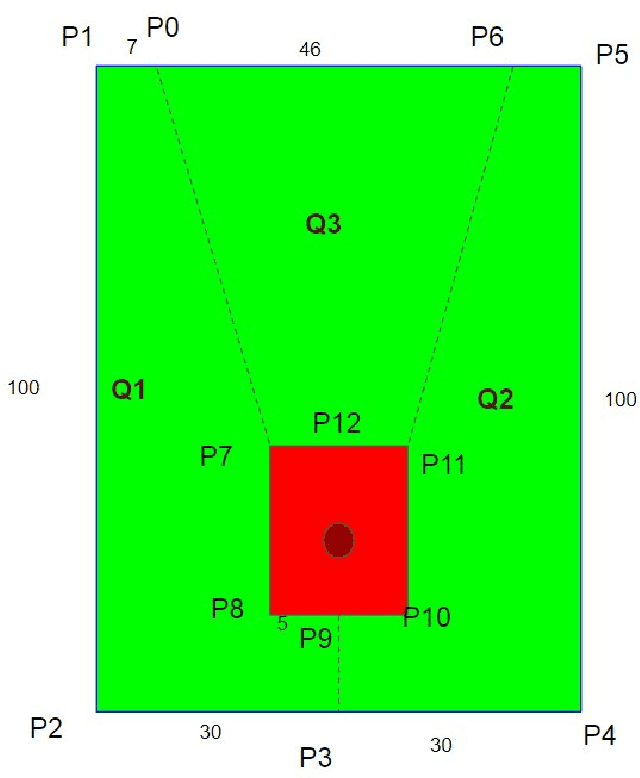

RISCuer: A Reliable Multi-UAV Search and Rescue Testbed

Jun 12, 2020

Abstract: We present the RISC Lab multi-agent testbed for reliable search and rescue and aerial transport in outdoor environments. The system consists of a team of three multi-rotor Unmanned Aerial Vehicles (UAVs), which are capable of autonomously searching for, picking up, and transporting randomly distributed objects in an outdoor field. The method involves vision-based object detection and localization, passive aerial grasping with our novel design, GPS-based UAV navigation, and safe release of the objects at the drop zone. Our cooperative strategy ensures safe spatial separation between UAVs at all times, and we prevent any conflicts at the drop zone using communication-enabled consensus. All computation is performed onboard each UAV. We describe the complete software and hardware architecture for the system and demonstrate its reliable performance using comprehensive outdoor experiments and by comparing our results with some recent, similar works.

Infrastructure-free Localization of Aerial Robots with Ultrawideband Sensors

Sep 21, 2018

Abstract: Robots in a swarm take advantage of a motion capture system or GPS sensors to obtain their global position. However, motion capture systems are environment-dependent and GPS sensors are not reliable in occluded environments. For reliable and versatile operation in a swarm, robots must sense each other and interact locally. Motivated by this requirement, here we propose an on-board localization framework for multi-robot systems. Our framework consists of an anchor robot with three ultrawideband (UWB) sensors and a tag robot with a single UWB sensor. The anchor robot utilizes the three UWB sensors as a localization infrastructure and estimates the tag robot's location solely by using its on-board sensing and computational capabilities, without explicit inter-robot communication. We utilize a dual Monte Carlo localization (MCL) approach to capture the agile maneuvers of the tag robot with acceptable precision. We validate the effectiveness of our algorithm with simulations and indoor and outdoor experiments on a two-drone setup. The proposed dual MCL algorithm yields highly accurate estimates for various speed profiles of the tag robot and demonstrates superior performance over the standard particle filter and the extended Kalman filter.
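To make the range-based setup concrete, here is a minimal Python sketch of a particle measurement update using ranges from three anchors. It is a plain Monte Carlo weight update and not the paper's dual MCL algorithm, whose details are not given in the abstract. The Gaussian range-noise model, the parameter `sigma`, the 2D simplification, and all function names are assumptions for illustration.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def range_likelihood(particle: Point,
                     anchors: Sequence[Point],
                     ranges: Sequence[float],
                     sigma: float) -> float:
    """Likelihood of the measured ranges if the tag were at `particle`.

    Assumption: independent Gaussian noise with standard deviation sigma
    on each anchor-to-tag range.
    """
    log_w = 0.0
    for anchor, measured in zip(anchors, ranges):
        predicted = math.dist(particle, anchor)
        log_w += -0.5 * ((measured - predicted) / sigma) ** 2
    return math.exp(log_w)


def update_weights(particles: Sequence[Point],
                   anchors: Sequence[Point],
                   ranges: Sequence[float],
                   sigma: float) -> List[float]:
    """Return normalized particle weights for one set of range measurements."""
    weights = [range_likelihood(p, anchors, ranges, sigma) for p in particles]
    total = sum(weights)
    if total == 0.0:
        # All likelihoods underflowed: fall back to uniform weights.
        return [1.0 / len(particles)] * len(particles)
    return [w / total for w in weights]


def weighted_mean(particles: Sequence[Point], weights: Sequence[float]) -> Point:
    """Point estimate of the tag position as the weighted particle mean."""
    dims = len(particles[0])
    return tuple(sum(w * p[d] for p, w in zip(particles, weights))
                 for d in range(dims))
```

A full filter would also need a motion model and a resampling step between updates; both are omitted here to keep the sketch focused on how three on-board ranges constrain the tag position.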
