Mingzhe Zhu

Perturbation on Feature Coalition: Towards Interpretable Deep Neural Networks

Aug 23, 2024

Abstract: The inherent "black box" nature of deep neural networks (DNNs) compromises their transparency and reliability. Explainable AI (XAI) has recently attracted increasing attention from researchers, and several perturbation-based interpretation methods have emerged. However, these methods often fail to adequately consider feature dependencies. To solve this problem, we introduce a perturbation-based interpretation guided by feature coalitions, which leverages deep information from the network to extract correlated features. We then propose a carefully designed consistency loss to guide network interpretation. Both quantitative and qualitative experiments are conducted to validate the effectiveness of the proposed method. Code is available at github.com/Teriri1999/Perturebation-on-Feature-Coalition.

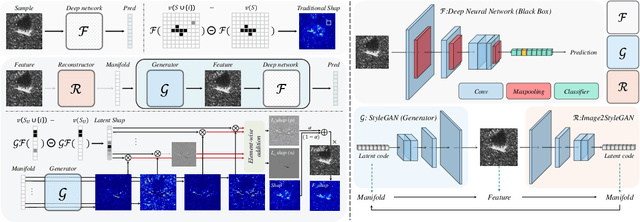

Multi-task SAR Image Processing via GAN-based Unsupervised Manipulation

Aug 02, 2024

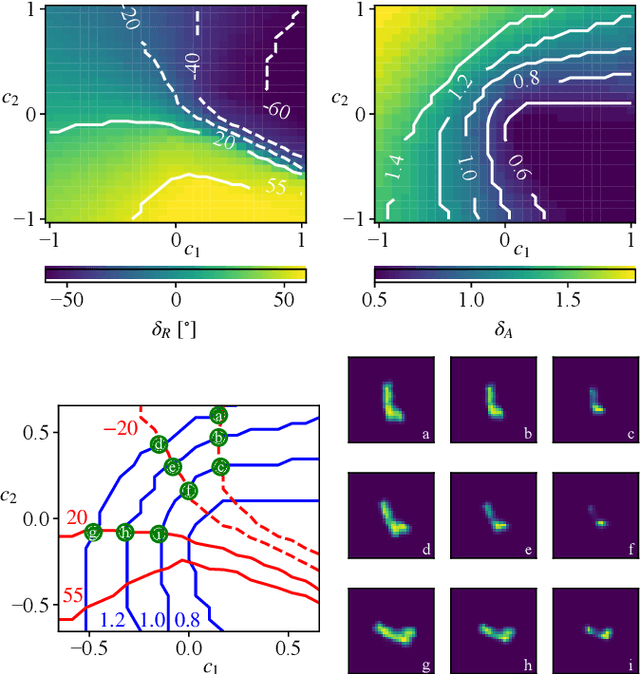

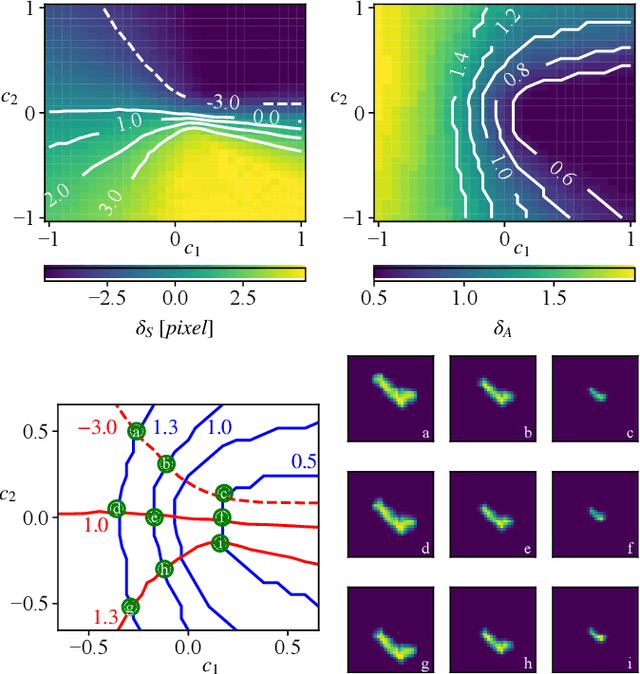

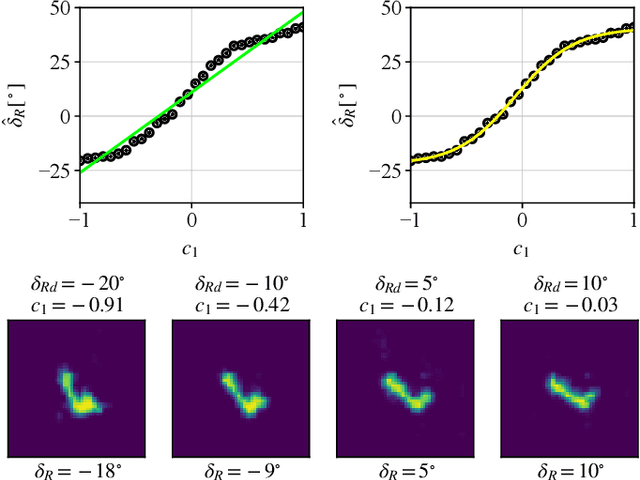

Abstract: Generative Adversarial Networks (GANs) have shown tremendous potential in synthesizing large numbers of realistic SAR images by learning patterns in the data distribution. Some GANs can achieve image editing by introducing latent codes, demonstrating significant promise in SAR image processing. Compared with traditional SAR image processing methods, editing based on GAN latent space control is entirely unsupervised, allowing image processing to be conducted without any labeled data. Additionally, the information extracted from the data is more interpretable. This paper proposes a novel SAR image processing framework called GAN-based Unsupervised Editing (GUE), which aims to address two issues: (1) disentangling semantic directions in the GAN latent space and finding meaningful directions; (2) establishing a comprehensive SAR image processing framework that supports multiple image processing functions. In the implementation of GUE, we decompose the entangled semantic directions in the GAN latent space by training a carefully designed network. Moreover, we can accomplish multiple SAR image processing tasks (including despeckling, localization, auxiliary identification, and rotation editing) in a single training process without any form of supervision. Extensive experiments validate the effectiveness of the proposed method.

SAR Despeckling via Regional Denoising Diffusion Probabilistic Model

Jan 06, 2024

Abstract: Speckle noise poses a significant challenge in maintaining the quality of synthetic aperture radar (SAR) images, so SAR despeckling techniques have drawn increasing attention. Despite the tremendous advances of deep learning in fixed-scale SAR image despeckling, these methods still struggle to deal with large-scale SAR images. To address this problem, this paper introduces a novel despeckling approach termed Region Denoising Diffusion Probabilistic Model (R-DDPM) based on generative models. R-DDPM enables versatile despeckling of SAR images across various scales, accomplished within a single training session. Moreover, artifacts in the fused SAR images can be effectively avoided by using region-guided inverse sampling. Experiments with the proposed R-DDPM on Sentinel-1 data demonstrate superior performance over existing methods.

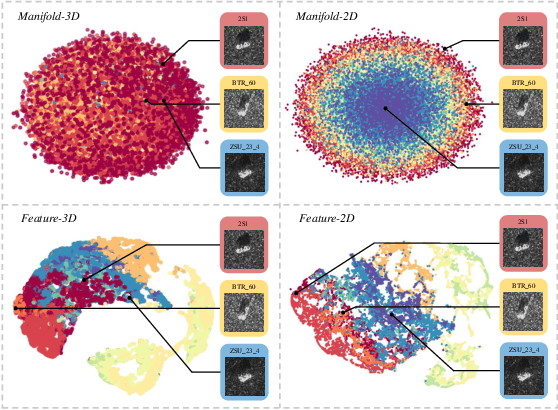

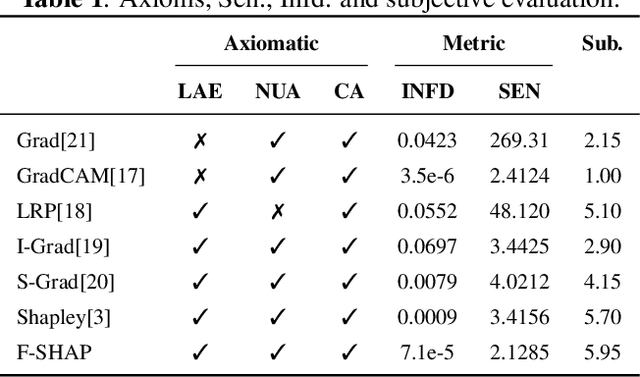

Manifold-based Shapley for SAR Recognization Network Explanation

Jan 06, 2024

Abstract: Explainable artificial intelligence (XAI) is highly significant for enhancing the transparency and credibility of deep neural networks, particularly in risky and high-cost scenarios such as synthetic aperture radar (SAR). Shapley is a game-based explanation technique with robust mathematical foundations. However, Shapley assumes that a model's features are independent, rendering Shapley explanations invalid for high-dimensional models. This study introduces a manifold-based Shapley method that projects high-dimensional features into low-dimensional manifold features and subsequently obtains Fusion-Shap, which aims at (1) addressing the issue of erroneous explanations encountered by traditional Shap; (2) resolving the challenge of interpretability that traditional Shap faces in complex scenarios.
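As background for the Shapley attribution mentioned in this abstract, the following is a minimal sketch of exact Shapley values for a tiny cooperative game. It is not the paper's manifold-based method; the function names, the three "features", and the toy value function are all hypothetical and chosen only to show how credit is split when two features contribute only jointly.

```python
from itertools import permutations


def shapley_values(players, value):
    """Exact Shapley values: average each player's marginal
    contribution over every ordering of the players."""
    totals = {p: 0.0 for p in players}
    orderings = list(permutations(players))
    for order in orderings:
        coalition = frozenset()
        for p in order:
            with_p = coalition | {p}
            totals[p] += value(with_p) - value(coalition)
            coalition = with_p
    return {p: t / len(orderings) for p, t in totals.items()}


def toy_value(coalition):
    # Hypothetical game: "a" and "b" pay off only together,
    # while "c" contributes on its own.
    v = 0.0
    if "a" in coalition and "b" in coalition:
        v += 1.0
    if "c" in coalition:
        v += 0.5
    return v


phi = shapley_values(["a", "b", "c"], toy_value)
```

In this toy game the joint payoff of "a" and "b" is shared equally between them, and the attributions sum to the value of the full coalition. Exact enumeration grows factorially with the number of players, so it is only practical for very small games.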

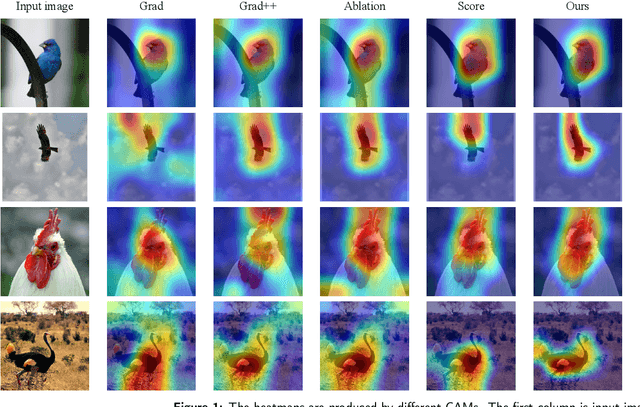

Cluster-CAM: Cluster-Weighted Visual Interpretation of CNNs' Decision in Image Classification

Feb 03, 2023

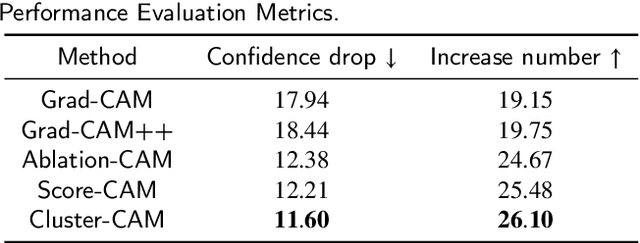

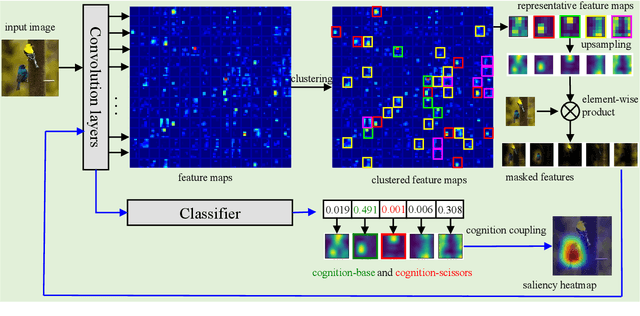

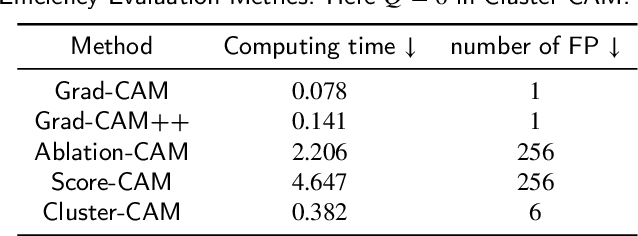

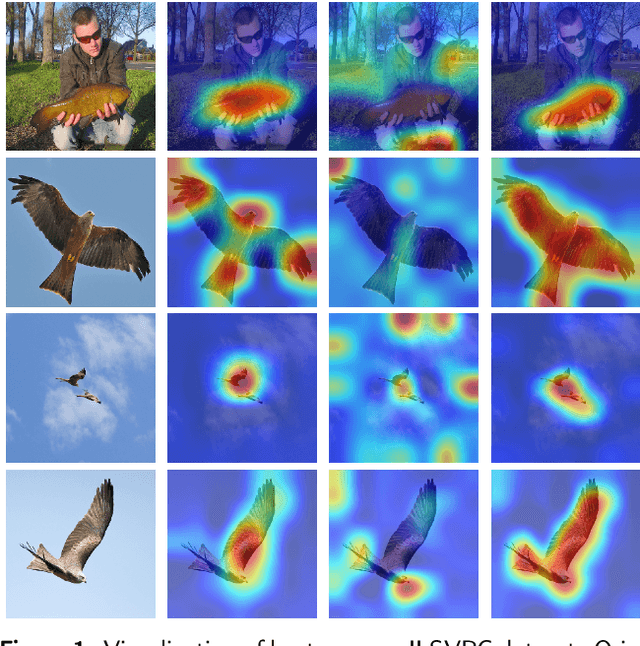

Abstract: Despite the tremendous success of convolutional neural networks (CNNs) in computer vision, the mechanism of CNNs still lacks a clear interpretation. Class activation mapping (CAM), a well-known visualization technique for interpreting CNN decisions, has drawn increasing attention. Gradient-based CAMs are efficient, but their performance is heavily affected by vanishing and exploding gradients. In contrast, gradient-free CAMs avoid computing gradients and produce more understandable results. However, existing gradient-free CAMs are quite time-consuming because hundreds of forward inferences per image are required. In this paper, we propose Cluster-CAM, an effective and efficient gradient-free CNN interpretation algorithm. Cluster-CAM can significantly reduce the number of forward propagations by splitting the feature maps into clusters in an unsupervised manner. Furthermore, we propose an artful strategy to forge a cognition-base map and cognition-scissors from clustered feature maps. The final saliency heatmap is computed by merging these cognition maps. Qualitative results show that Cluster-CAM can produce heatmaps whose highlighted regions match human cognition more precisely than those of existing CAMs. The quantitative evaluation further demonstrates the superiority of Cluster-CAM in both effectiveness and efficiency.
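To illustrate why clustering feature maps can cut the number of forward passes, here is a rough sketch that groups C feature maps into at most k clusters with plain k-means and builds one mask per cluster, so a gradient-free CAM would need about k masked forward passes instead of C. This is an assumption-laden illustration, not the Cluster-CAM algorithm itself: the abstract does not specify the clustering method, and the function name, the choice of k-means, and the min-max normalization are hypothetical.

```python
import numpy as np


def cluster_feature_maps(fmaps, k, iters=20, seed=0):
    """Group feature maps of shape (C, H, W) into k clusters with
    plain k-means; return one [0, 1] mask per non-empty cluster."""
    c, h, w = fmaps.shape
    flat = fmaps.reshape(c, -1).astype(np.float64)
    rng = np.random.default_rng(seed)
    # Initialize centers from k distinct feature maps (copied by indexing).
    centers = flat[rng.choice(c, size=k, replace=False)]
    for _ in range(iters):
        # Squared Euclidean distance from every map to every center: (C, k).
        dist = ((flat[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = dist.argmin(axis=1)
        for j in range(k):
            members = flat[labels == j]
            if len(members):
                centers[j] = members.mean(axis=0)
    masks = []
    for j in range(k):
        members = flat[labels == j]
        if len(members) == 0:
            continue  # skip clusters that ended up empty
        m = members.sum(axis=0)
        m = (m - m.min()) / (m.max() - m.min() + 1e-8)  # min-max normalize
        masks.append(m.reshape(h, w))
    return np.stack(masks), labels


# Usage with random stand-in activations (64 channels, 7x7 spatial size).
fmaps = np.random.default_rng(1).random((64, 7, 7))
masks, labels = cluster_feature_maps(fmaps, k=8)
```

Each returned mask could then be upsampled and multiplied with the input image for a single forward pass; how the resulting scores are merged into a heatmap is left out here because the abstract does not describe it in detail.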

VS-CAM: Vertex Semantic Class Activation Mapping to Interpret Vision Graph Neural Network

Sep 15, 2022

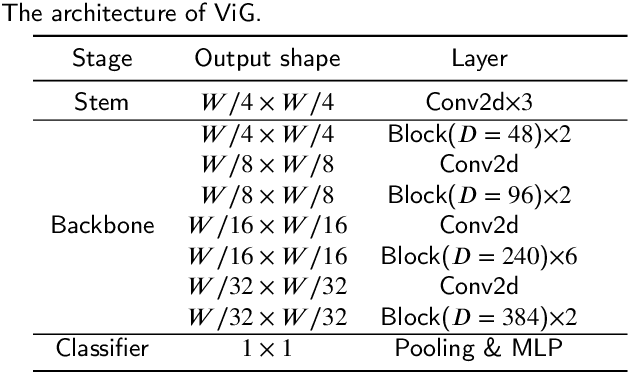

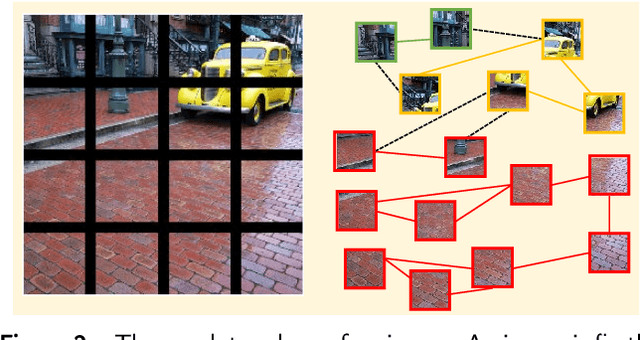

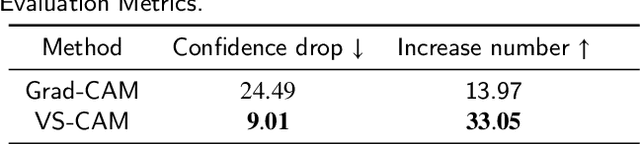

Abstract: Graph convolutional neural networks (GCNs) have drawn increasing attention and attained good performance in various computer vision tasks; however, a clear interpretation of their inner mechanism is still lacking. For standard convolutional neural networks (CNNs), class activation mapping (CAM) methods are commonly used to visualize the connection between a CNN's decision and image regions by generating a heatmap. Nonetheless, such heatmaps usually exhibit semantic chaos when these CAMs are applied to GCNs directly. In this paper, we propose a novel visualization method particularly applicable to GCNs, Vertex Semantic Class Activation Mapping (VS-CAM). VS-CAM includes two independent pipelines that produce a set of semantic-probe maps and a semantic-base map, respectively. Semantic-probe maps are used to detect the semantic information in the semantic-base map and aggregate it into a semantic-aware heatmap. Qualitative results show that VS-CAM can obtain heatmaps whose highlighted regions match the objects much more precisely than those of CNN-based CAMs. The quantitative evaluation further demonstrates the superiority of VS-CAM.

Analytical Interpretation of Latent Codes in InfoGAN with SAR Images

May 26, 2022

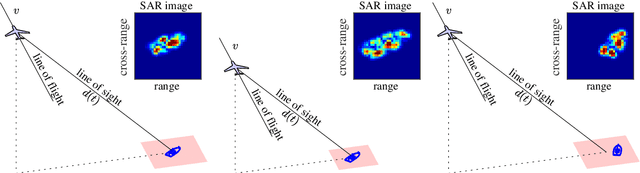

Abstract: Generative Adversarial Networks (GANs) can synthesize abundant photo-realistic synthetic aperture radar (SAR) images. Some recent GANs (e.g., InfoGAN) are even able to edit specific properties of the synthesized images by introducing latent codes. This is crucial for SAR image synthesis, since the targets in real SAR images have different properties due to the imaging mechanism. Despite the success of InfoGAN in manipulating properties, there is still no clear explanation of how these latent codes affect synthesized properties, so editing specific properties usually relies on empirical trials, which are unreliable and time-consuming. In this paper, we show that latent codes are disentangled to affect the properties of SAR images in a non-linear manner. By introducing property estimators for latent codes, we are able to provide a completely analytical nonlinear model to decompose the entangled causality between latent codes and different properties. The qualitative and quantitative experimental results further reveal that the properties can be calculated from latent codes and, conversely, that latent codes satisfying desired properties can be estimated. In this way, properties can be manipulated by latent codes as expected.
