Ming-Chun Huang

HSTFormer: Hierarchical Spatial-Temporal Transformers for 3D Human Pose Estimation

Jan 18, 2023

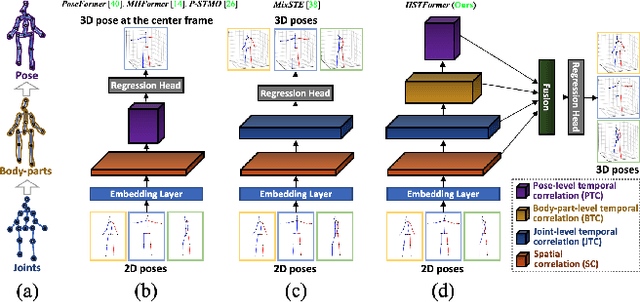

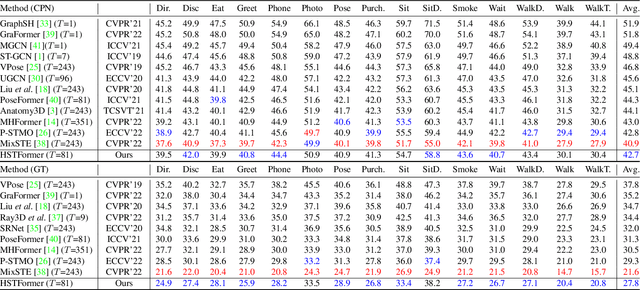

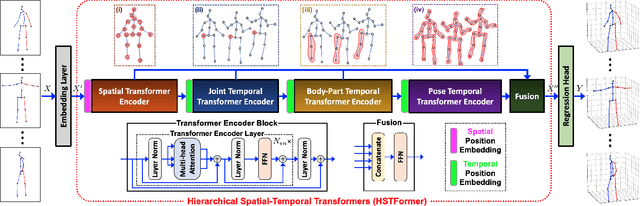

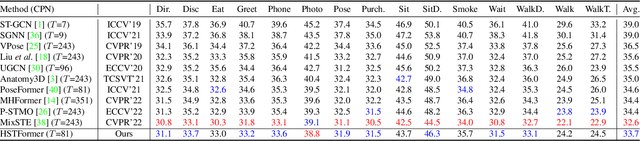

Abstract: Transformer-based approaches have been successfully proposed for 3D human pose estimation (HPE) from 2D pose sequences and have achieved state-of-the-art (SOTA) performance. However, current SOTAs have difficulty modeling the spatial-temporal correlations of joints at different levels simultaneously. This is due to the poses' spatial-temporal complexity: poses move at various speeds temporally, with varied joint and body-part movements spatially. Hence, a cookie-cutter transformer is non-adaptable and can hardly meet the "in-the-wild" requirement. To mitigate this issue, we propose Hierarchical Spatial-Temporal transFormers (HSTFormer) to capture multi-level spatial-temporal correlations of joints, gradually from local to global, for accurate 3D HPE. HSTFormer consists of four transformer encoders (TEs) and a fusion module. To the best of our knowledge, HSTFormer is the first to study hierarchical TEs with multi-level fusion. Extensive experiments on three datasets (i.e., Human3.6M, MPI-INF-3DHP, and HumanEva) demonstrate that HSTFormer achieves competitive and consistent performance on benchmarks of various scales and difficulties. Specifically, it surpasses recent SOTAs on the challenging MPI-INF-3DHP dataset and the small-scale HumanEva dataset, with a highly generalized systematic approach. The code is available at: https://github.com/qianxiaoye825/HSTFormer.

Soft Sensing Model Visualization: Fine-tuning Neural Network from What Model Learned

Nov 12, 2021

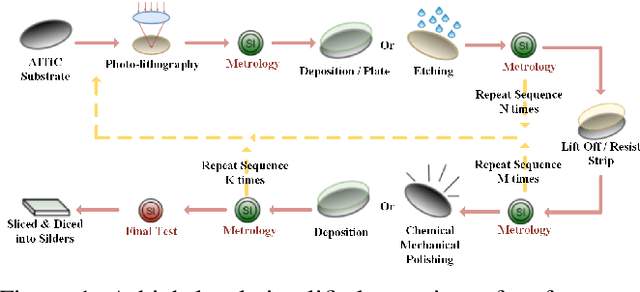

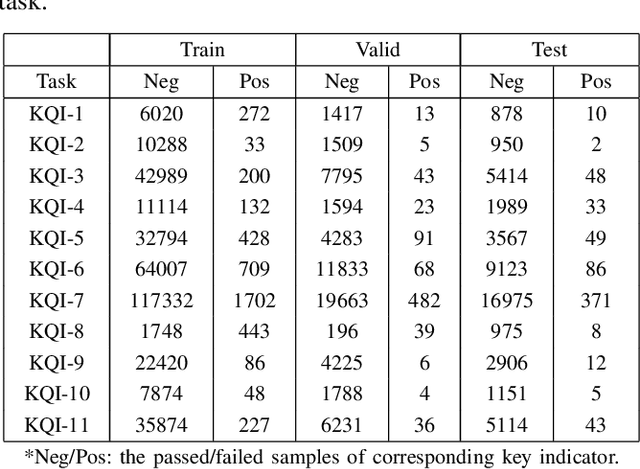

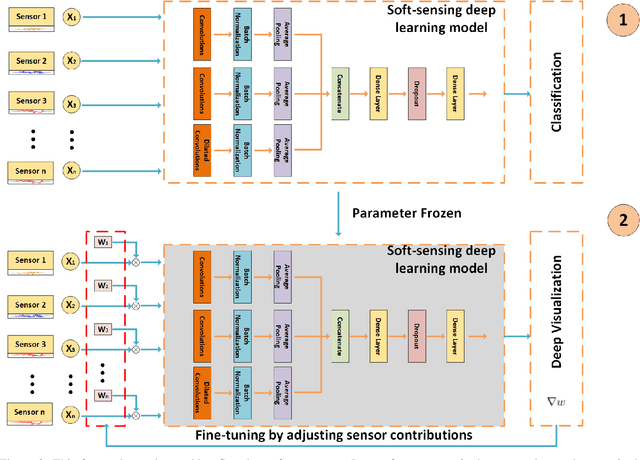

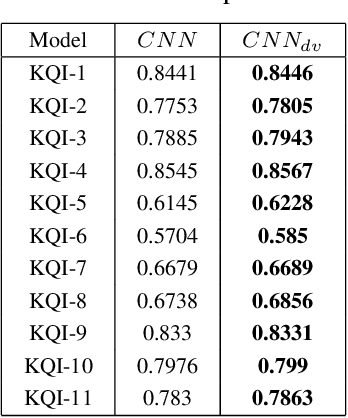

Abstract: The growing availability of data collected from smart manufacturing is changing the paradigms of production monitoring and control. The increasing complexity and content of the wafer manufacturing process, together with time-varying unexpected disturbances and uncertainties, make it infeasible to control the process with model-based approaches. As a result, data-driven soft-sensing modeling has become more prevalent in wafer process diagnostics. Recently, deep learning has been applied to soft-sensing systems, with promising performance on highly nonlinear and dynamic time-series data. Despite these successes, however, the underlying logic of the deep learning framework is hard to understand. In this paper, we propose a deep learning-based model for defective wafer detection using a highly imbalanced dataset. To understand how the proposed model works, a deep visualization approach is applied. The model is then fine-tuned under the guidance of the deep visualization. Extensive experiments are performed to validate the effectiveness of the proposed system. The results provide an interpretation of how the model works and an instructive fine-tuning method based on that interpretation.
