Miklas Strøm Kristoffersen

Deep Sound Field Reconstruction in Real Rooms: Introducing the ISOBEL Sound Field Dataset

Feb 12, 2021

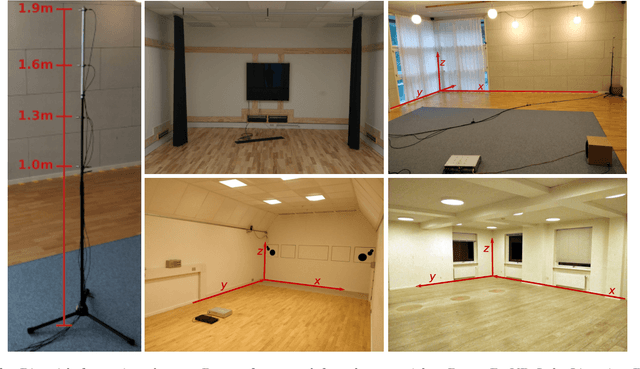

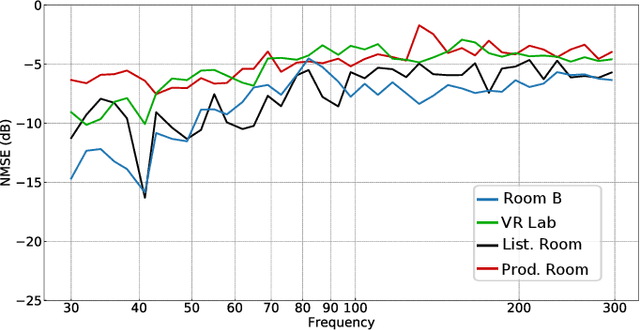

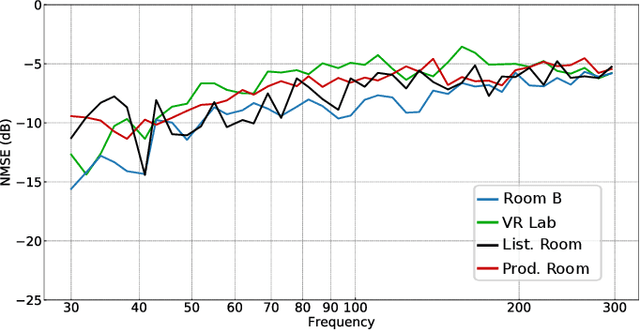

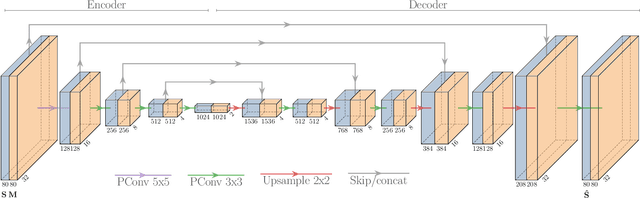

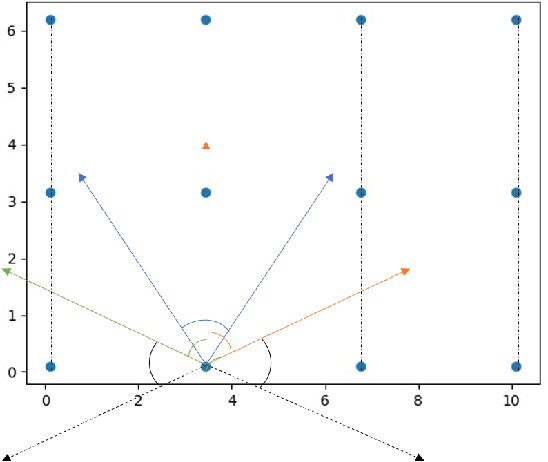

Abstract: Knowledge of loudspeaker responses is useful in a number of applications where a sound system is located inside a room that alters the listening experience depending on position within the room. Acquisition of sound fields for sound sources located in reverberant rooms can be achieved through labor-intensive measurements of impulse response functions covering the room, or alternatively by means of reconstruction methods, which can potentially require significantly fewer measurements. This paper extends evaluations of sound field reconstruction at low frequencies by introducing a dataset with measurements from four real rooms. The ISOBEL Sound Field dataset is publicly available and aims to bridge the gap between synthetic and real-world sound fields in rectangular rooms. Moreover, the paper advances a recent deep learning-based method for sound field reconstruction using a very low number of microphones, and proposes an approach for modeling both magnitude and phase response in a U-Net-like neural network architecture. The complex-valued sound field reconstruction demonstrates that the estimated room transfer functions are accurate enough to allow for personalized sound zones with contrast ratios comparable to those obtained with ideal room transfer functions, using 15 microphones at frequencies below 150 Hz.

Multiview Based 3D Scene Understanding On Partial Point Sets

Nov 30, 2018

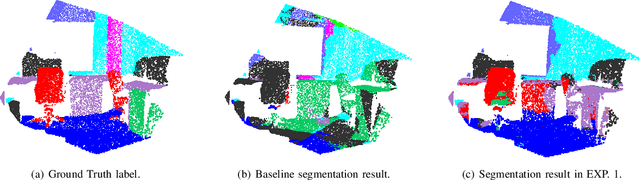

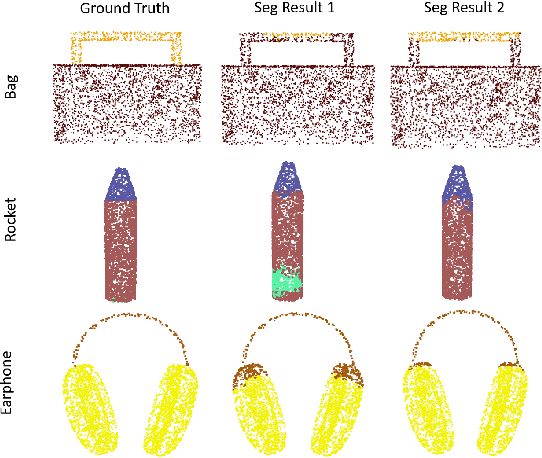

Abstract: Deep learning within the context of point clouds has gained much research interest in recent years, mostly due to the promising results that have been achieved on a number of challenging benchmarks, such as 3D shape recognition and scene semantic segmentation. In many realistic settings, however, snapshots of the environment are often taken from a single view, which only contains a partial set of the scene due to the field of view restriction of commodity cameras. 3D scene semantic understanding on partial point clouds is considered a challenging task. In this work, we propose a processing approach for 3D point cloud data based on a multiview representation of the existing 360° point clouds. By fusing the original 360° point clouds and their corresponding 3D multiview representations as input data, a neural network is able to recognize partial point sets while improving the general performance on complete point sets, resulting in an overall increase of 31.9% and 4.3% in segmentation accuracy for partial and complete scene semantic understanding, respectively. This method can also be applied in a wider 3D recognition context such as 3D part segmentation.
