Mickael Laine

Soft Gripping System for Space Exploration Legged Robots

Nov 08, 2024

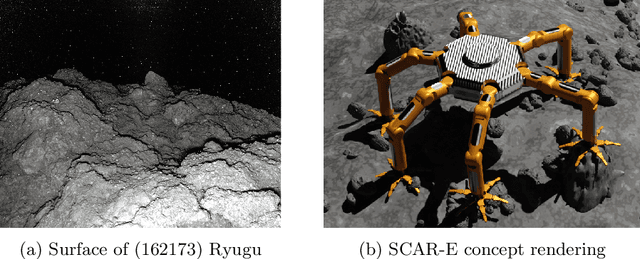

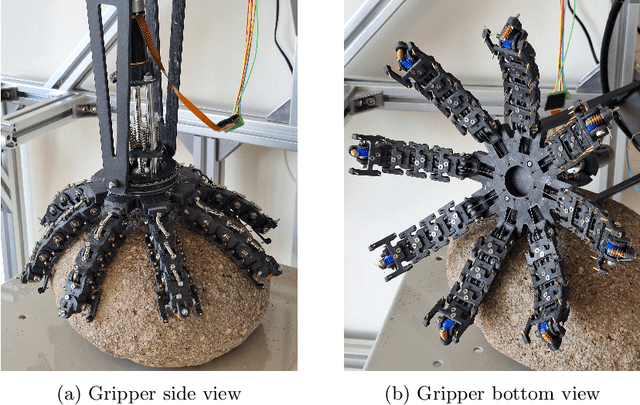

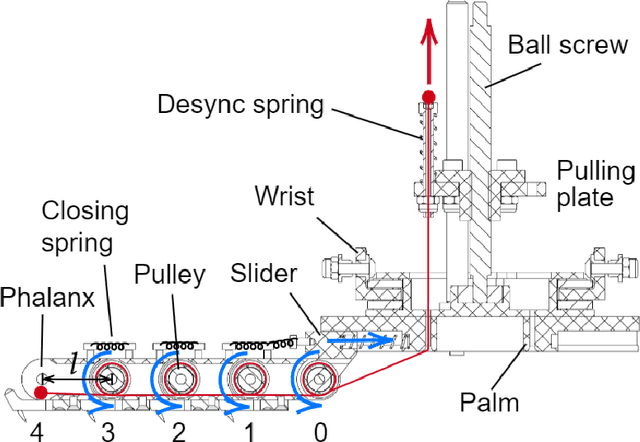

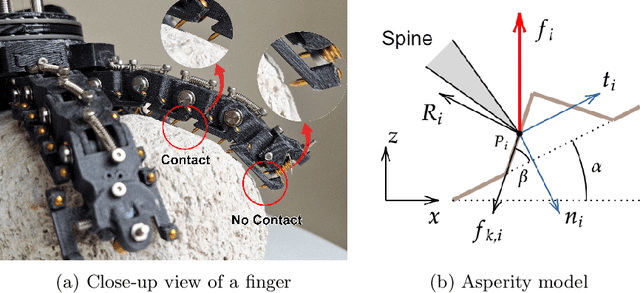

Abstract: Although wheeled robots have been predominant for planetary exploration, their geometry limits their capabilities when traveling over steep slopes, through rocky terrain, and in microgravity. Legged robots equipped with grippers are a viable alternative to overcome these obstacles. This paper proposes a gripping system that can provide legged space exploration robots with a reliable anchor on uneven rocky terrain. The gripper provides the benefits of soft gripping technology by using segmented tendon-driven fingers to adapt to the target shape, and creates strong adhesion to rocky surfaces with the help of microspines. The gripping performance is showcased, and multiple experiments demonstrate the impact of the pulling angle, target shape, spine configuration, and actuation power on that performance. The results show that the proposed gripper can be a suitable solution for advanced space exploration, including climbing, lunar cave exploration, and exploration of asteroid surfaces.

Sinkage Study in Granular Material for Space Exploration Legged Robot Gripper

Nov 08, 2024

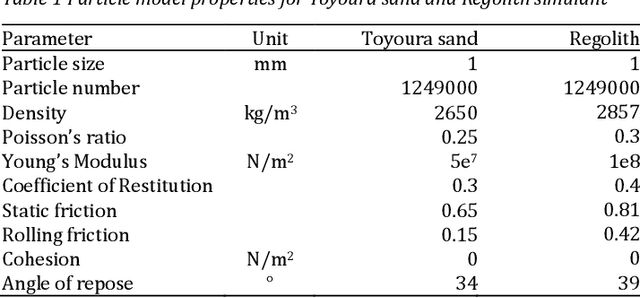

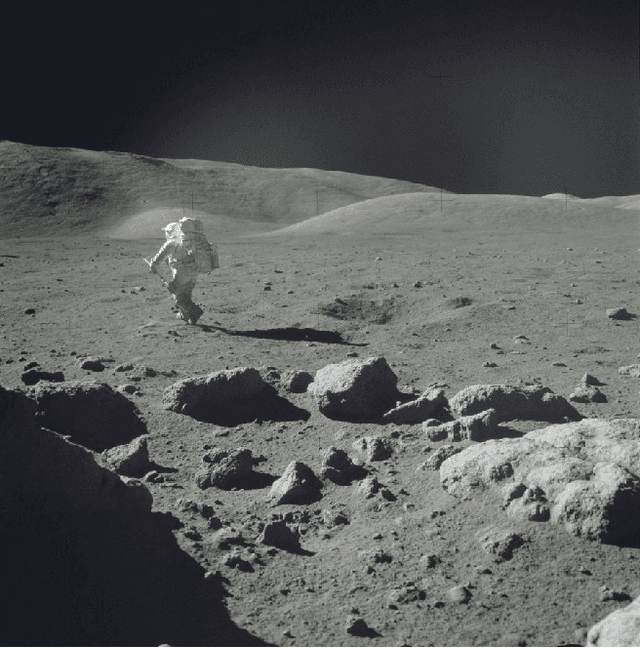

Abstract: Wheeled rovers have been the primary choice for lunar exploration due to their speed and efficiency. However, deeper areas, such as lunar caves and craters, require the mobility of legged robots. To make this possible, appropriate end effectors must be designed to enable climbing and walking on the granular surface of the Moon. This paper investigates the behavior of an underactuated soft gripper on deformable granular material when a legged robot is walking in soft soil. A modular test bench and a simulation model were developed to observe the gripper sinkage behavior under load. The gripper uses tendon-driven fingers to match its target shape and grasps the target surface using multiple micro-spines. The sinkage of the gripper in silica sand was measured by comparing the axial displacement of the gripper with the nominal load of the robot mass. Multiple experiments were performed to observe the sinkage of the gripper over a range of slope angles. A simulation model accounting for the degrees of compliance of the gripper fingers was created using Altair MotionSolve software and coupled to Altair EDEM to compute the gripper interaction with particles using the discrete element method. After validation of the model, complementary simulations using lunar gravity and a regolith particle model were performed. The results show that a satisfactory gripper model with accurate freedom of motion can be created in simulation using the Altair simulation packages, and that the expected sinkage under load in a particle-filled environment can be estimated using this model. By computing the sinkage of the end effector of legged robots, the results can be directly integrated into the motion control algorithm and improve the accuracy of mobility in a granular material environment.

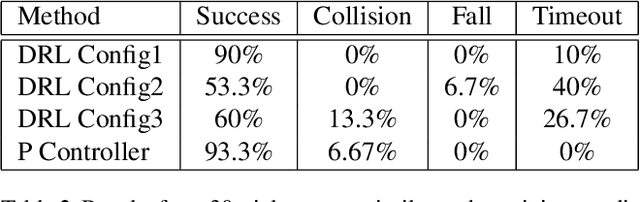

SegVisRL: Visuomotor Development for a Lunar Rover for Hazard Avoidance using Camera Images

Mar 26, 2021

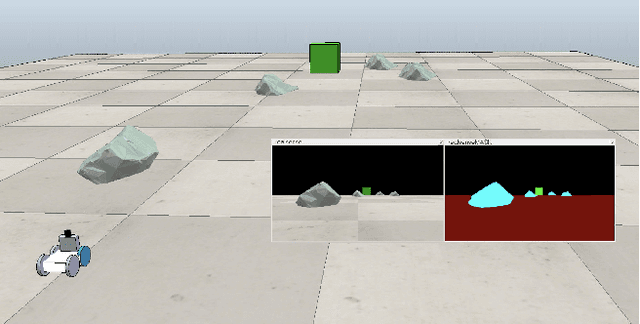

Abstract: The visuomotor system of any animal is critical for its survival, and the development of a complex one within humans is a large factor in our success as a species on Earth. This system is an essential part of our ability to adapt to our environment. We use this system continuously throughout the day, when picking something up, or walking around while avoiding bumping into objects. Equipping robots with such capabilities will help produce more intelligent locomotion, with the ability to more easily understand their surroundings and to move safely. In particular, such capabilities are desirable for traversing the lunar surface, as it is full of hazardous obstacles, such as rocks. These obstacles need to be identified and avoided in real time. This paper seeks to demonstrate the development of a visuomotor system within a robot for navigation and obstacle avoidance, with complex rock-shaped objects representing hazards. Our approach uses deep reinforcement learning with only image data. In this paper, we compare the results from several neural network architectures and a preprocessing methodology which includes producing a segmented image and downsampling.
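The abstract does not say how the segmented image is produced or how it is downsampled. As a rough illustration only, the sketch below assumes a simple brightness threshold as a stand-in for segmentation and max pooling as the downsampling step, so that any hazard pixel inside a block survives the size reduction. The function names `segment` and `downsample`, the threshold value, and the pooling factor are all hypothetical and are not taken from the paper.

```python
from typing import List

Image = List[List[float]]
Mask = List[List[int]]


def segment(image: Image, threshold: float) -> Mask:
    """Label a pixel 1 (assumed hazard) if it is brighter than threshold, else 0.

    A plain threshold is an assumption made for illustration; the paper's
    segmentation method is not described in the abstract.
    """
    return [[1 if px > threshold else 0 for px in row] for row in image]


def downsample(mask: Mask, factor: int) -> Mask:
    """Max-pool the mask over factor x factor blocks.

    Rows and columns that do not fill a complete block are dropped.
    """
    height = len(mask) - len(mask) % factor
    width = len(mask[0]) - len(mask[0]) % factor
    return [
        [
            max(mask[r + dr][c + dc]
                for dr in range(factor)
                for dc in range(factor))
            for c in range(0, width, factor)
        ]
        for r in range(0, height, factor)
    ]


# Toy 4x4 grayscale frame with two bright "rock" pixels.
frame = [
    [0.1, 0.2, 0.9, 0.1],
    [0.0, 0.3, 0.2, 0.1],
    [0.1, 0.1, 0.1, 0.1],
    [0.6, 0.1, 0.1, 0.1],
]
observation = downsample(segment(frame, threshold=0.5), factor=2)
```

A reduced mask like `observation` is the kind of compact input that could be passed to a reinforcement learning policy in place of the raw camera frame.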

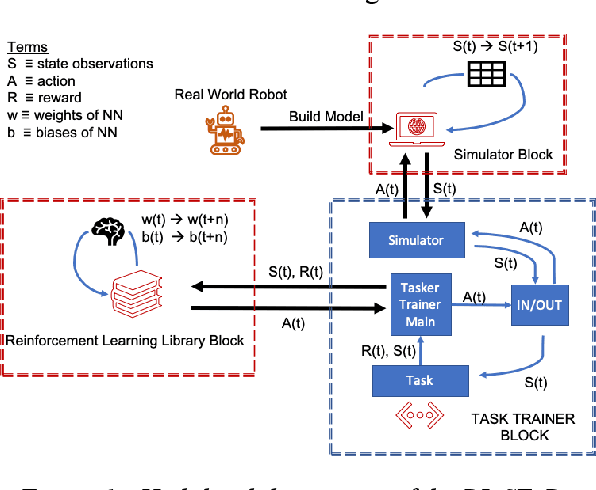

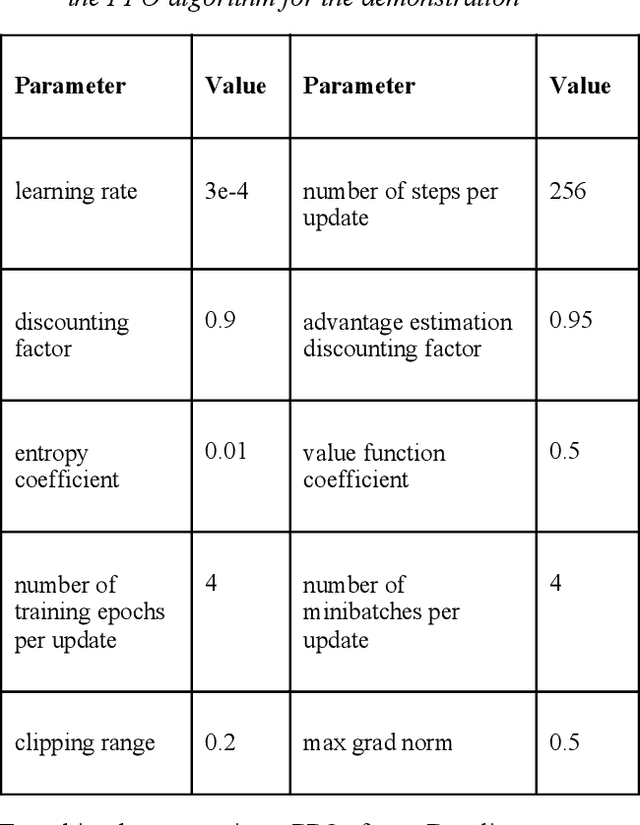

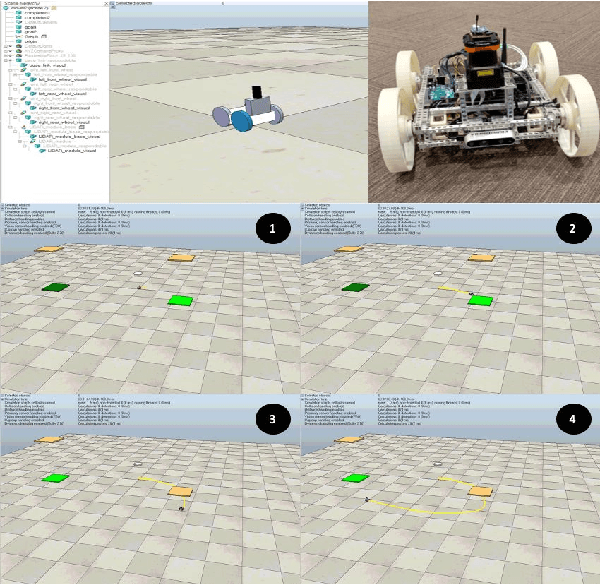

RL STaR Platform: Reinforcement Learning for Simulation based Training of Robots

Sep 21, 2020

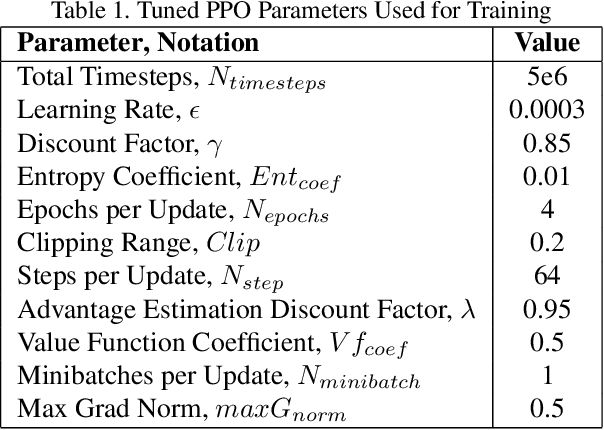

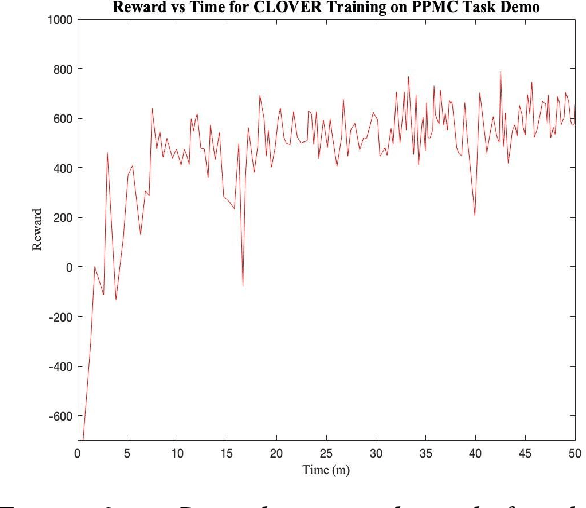

Abstract: Reinforcement learning (RL) is a promising field to enhance robotic autonomy and decision-making capabilities for space robotics, something which is challenging with traditional techniques due to stochasticity and uncertainty within the environment. RL can be used to enable lunar cave exploration with infrequent human feedback, faster and safer lunar surface locomotion, or the coordination and collaboration of multi-robot systems. However, there are many hurdles making research challenging for space robotic applications using RL and machine learning, particularly due to insufficient resources for traditional robotics simulators like CoppeliaSim. Our solution to this is an open source modular platform called Reinforcement Learning for Simulation based Training of Robots, or RL STaR, that helps to simplify and accelerate the application of RL to the space robotics research field. This paper introduces the RL STaR platform and demonstrates how researchers can use it.
