Tamir Blum

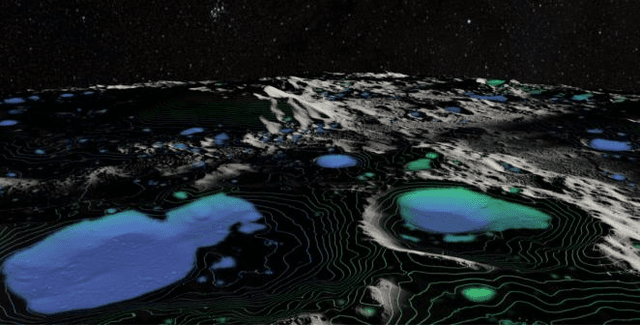

SegVisRL: Visuomotor Development for a Lunar Rover for Hazard Avoidance using Camera Images

Mar 26, 2021

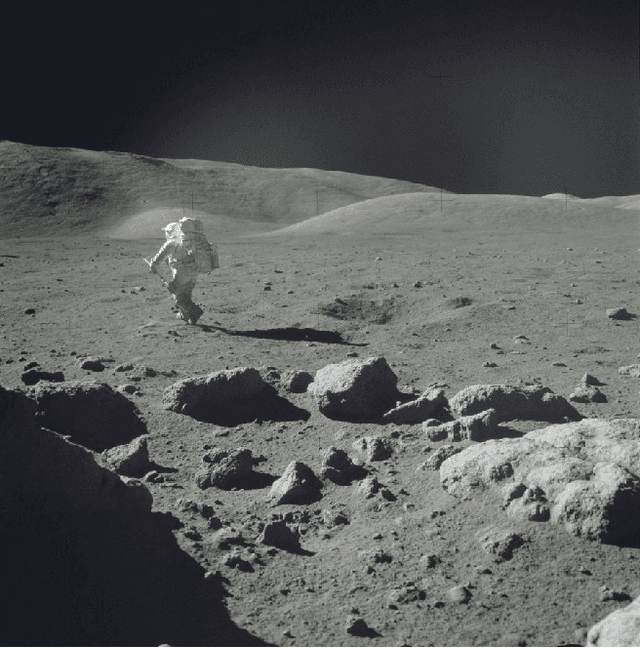

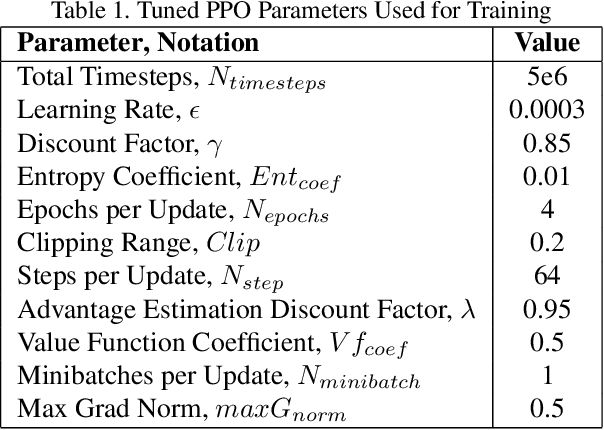

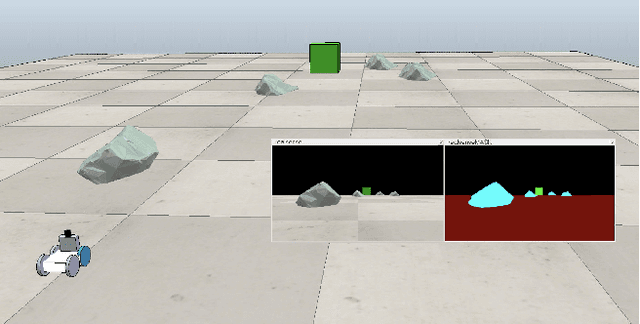

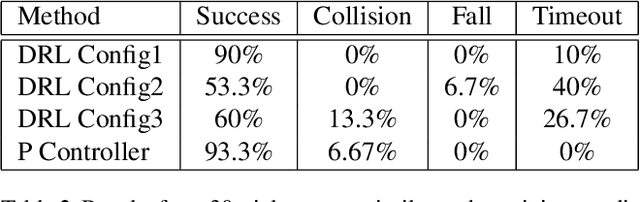

Abstract: The visuomotor system of any animal is critical for its survival, and the development of a complex one within humans is a large factor in our success as a species on Earth. This system is an essential part of our ability to adapt to our environment. We use this system continuously throughout the day, when picking something up, or walking around while avoiding bumping into objects. Equipping robots with such capabilities will help produce more intelligent locomotion, with the ability to more easily understand their surroundings and to move safely. In particular, such capabilities are desirable for traversing the lunar surface, as it is full of hazardous obstacles, such as rocks. These obstacles need to be identified and avoided in real time. This paper seeks to demonstrate the development of a visuomotor system within a robot for navigation and obstacle avoidance, with complex rock-shaped objects representing hazards. Our approach uses deep reinforcement learning with only image data. In this paper, we compare the results from several neural network architectures and a preprocessing methodology that includes producing a segmented image and downsampling.
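The preprocessing idea mentioned at the end of the abstract (segment the camera image, then downsample it) might be sketched as follows. This is only an illustration under stated assumptions: the abstract does not say how the segmented image is produced, so a plain intensity threshold stands in for it here, and the function names, the threshold value, and the block-averaging downsampler are all hypothetical.

```python
import numpy as np


def segment_by_threshold(gray, threshold=0.5):
    """Hypothetical stand-in for segmentation: 1.0 marks a hazard pixel, 0.0 background."""
    return (gray > threshold).astype(np.float32)


def downsample(mask, factor):
    """Block-average downsampling; height and width must be divisible by factor."""
    h, w = mask.shape
    if h % factor or w % factor:
        raise ValueError("image size must be divisible by factor")
    # Split the image into factor x factor tiles and average each tile.
    return mask.reshape(h // factor, factor, w // factor, factor).mean(axis=(1, 3))


def preprocess(gray, threshold=0.5, factor=4):
    """Segment first, then shrink, so the policy network sees a small hazard map."""
    return downsample(segment_by_threshold(gray, threshold), factor)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    frame = rng.random((64, 64))  # synthetic grayscale frame, values in [0, 1)
    observation = preprocess(frame)
    print(observation.shape)
```

Averaging each tile rather than picking one pixel keeps a rough measure of how much of the tile is hazard, which is one plausible reason to segment before shrinking the image.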

RL STaR Platform: Reinforcement Learning for Simulation based Training of Robots

Sep 21, 2020

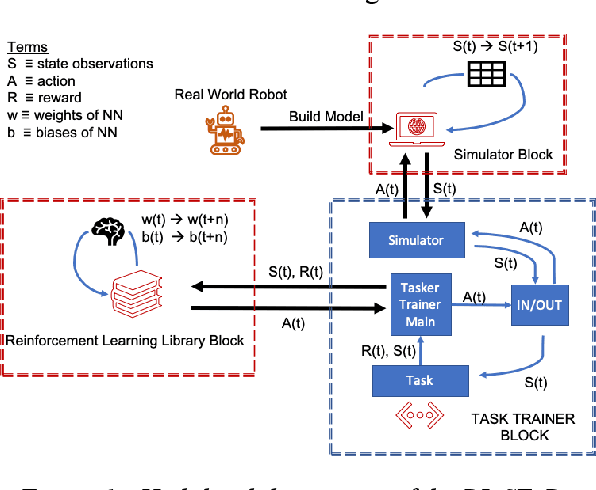

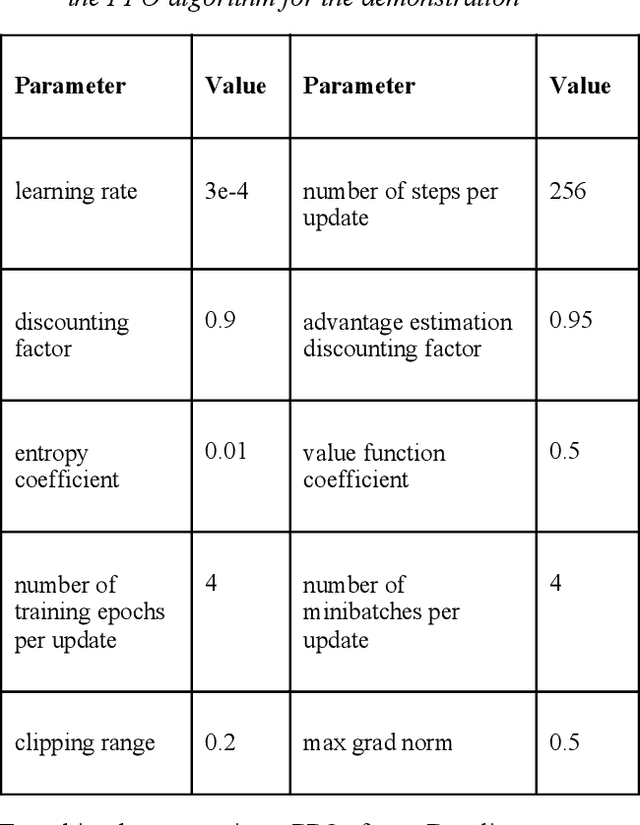

Abstract: Reinforcement learning (RL) is a promising field to enhance robotic autonomy and decision-making capabilities for space robotics, which is challenging with traditional techniques due to stochasticity and uncertainty within the environment. RL can be used to enable lunar cave exploration with infrequent human feedback, faster and safer lunar surface locomotion, or the coordination and collaboration of multi-robot systems. However, there are many hurdles making research challenging for space robotic applications using RL and machine learning, particularly due to insufficient resources for traditional robotics simulators like CoppeliaSim. Our solution to this is an open-source modular platform called Reinforcement Learning for Simulation based Training of Robots, or RL STaR, that helps to simplify and accelerate the application of RL to the space robotics research field. This paper introduces the RL STaR platform and shows, through a demonstration, how researchers can use it.

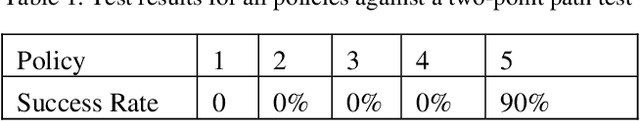

PPMC RL Training Algorithm: Rough Terrain Intelligent Robots through Reinforcement Learning

Mar 13, 2020

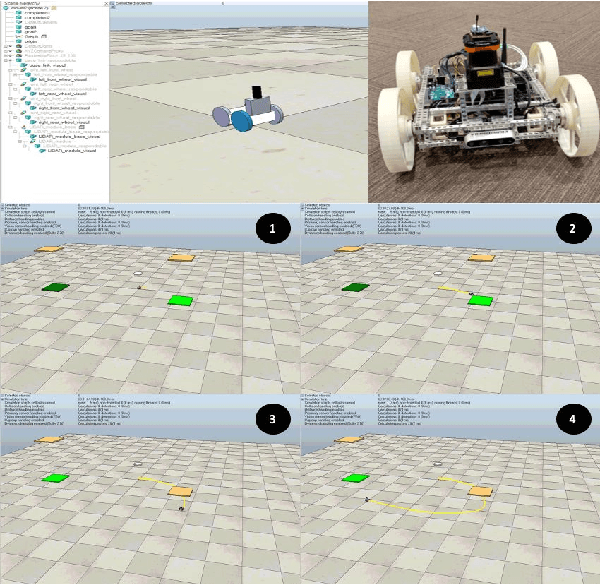

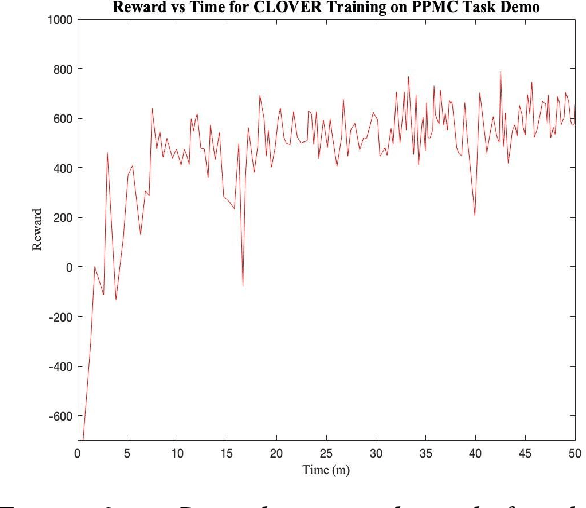

Abstract: Robots can now learn how to make decisions and control themselves, generalizing learned behaviors to unseen scenarios. In particular, AI-powered robots show promise in rough environments like the lunar surface, due to the environmental uncertainties. We address this critical generalization aspect for robot locomotion in rough terrain through a training algorithm we have created called the Path Planning and Motion Control (PPMC) Training Algorithm. This algorithm is coupled with any generic reinforcement learning algorithm to teach robots how to respond to user commands and to travel to designated locations on a single neural network. In this paper, we show that the algorithm works independently of the robot structure, demonstrating that it works on a wheeled rover in addition to the past results on a quadruped walking robot. Further, we take several big steps towards real-world practicality by introducing a rough, highly uneven terrain. Critically, we show through experiments that the robot learns to generalize to new rough terrain maps, retaining a 100% success rate. To the best of our knowledge, this is the first paper to introduce a generic training algorithm teaching generalized PPMC in rough environments to any robot, with just the use of reinforcement learning.

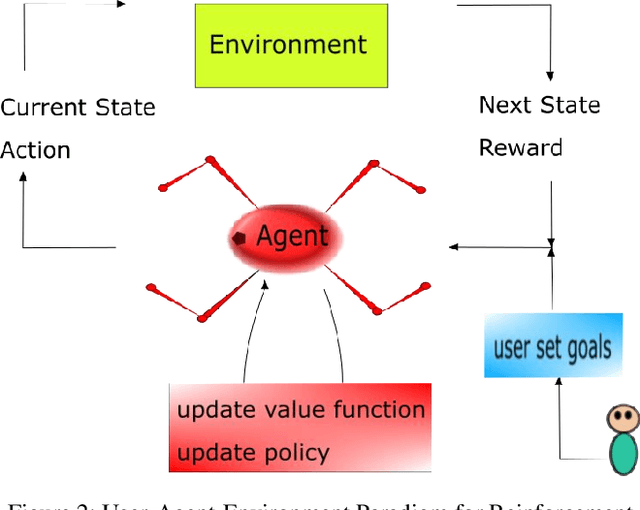

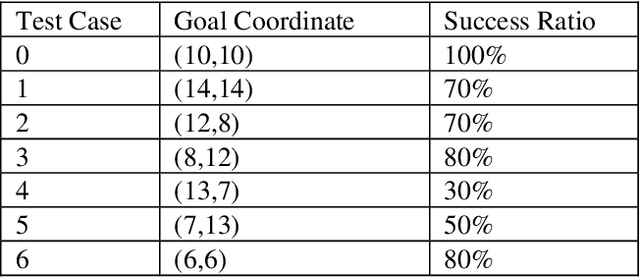

Deep Learned Path Planning via Randomized Reward-Linked-Goals and Potential Space Applications

Sep 13, 2019

Abstract: Space exploration missions have seen use of increasingly sophisticated robotic systems with ever more autonomy. Deep learning promises to take this a step further, and has applications for high-level tasks, like path planning, as well as low-level tasks, like motion control, which are critical components for mission efficiency and success. Using end-to-end deep reinforcement learning with randomized reward function parameters during training, we teach a simulated 8-degree-of-freedom quadruped ant-like robot to travel anywhere within a perimeter, conducting path planning and motion control on a single neural network, without any system model or prior knowledge of the terrain or environment. Our approach also allows for user-specified waypoints, which could translate well to either fully autonomous or semi-autonomous/teleoperated space applications that encounter delay times. We trained the agent using randomly generated waypoints linked to the reward function and passed waypoint coordinates as inputs to the neural network. Such applications show promise on a variety of space exploration robots, including high-speed rovers for fast locomotion and legged cave robots for rough terrain.
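The idea of reward-linked goals described in this abstract can be illustrated with a minimal sketch: each episode draws a random waypoint, the reward is computed relative to that waypoint, and the waypoint coordinates are appended to the policy input. The abstract does not give the reward formula, so the distance-progress reward, the square perimeter, and every function name below are assumptions made for illustration only.

```python
import math
import random


def sample_waypoint(rng, half_width):
    """Draw a goal uniformly inside a square perimeter centred on the origin (assumed shape)."""
    return (rng.uniform(-half_width, half_width),
            rng.uniform(-half_width, half_width))


def distance(a, b):
    """Euclidean distance between two 2D points."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def progress_reward(prev_pos, new_pos, goal):
    """Assumed reward: positive when the step reduces the distance to the goal."""
    return distance(prev_pos, goal) - distance(new_pos, goal)


def build_observation(robot_state, goal):
    """Append the goal coordinates to the robot state so the policy can condition on them."""
    return list(robot_state) + [goal[0], goal[1]]


if __name__ == "__main__":
    rng = random.Random(0)
    goal = sample_waypoint(rng, half_width=5.0)  # new random goal each episode
    obs = build_observation([0.0, 0.0], goal)    # policy input includes the goal
    print(len(obs), progress_reward((0.0, 0.0), (0.1, 0.0), goal))
```

Because the same sampled goal drives both the reward and the observation, a single network can in principle learn to reach arbitrary waypoints rather than one fixed target, which is what makes user-specified waypoints possible at run time.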
