Michelle G. Newman

Unlocking the Emotional World of Visual Media: An Overview of the Science, Research, and Impact of Understanding Emotion

Jul 25, 2023

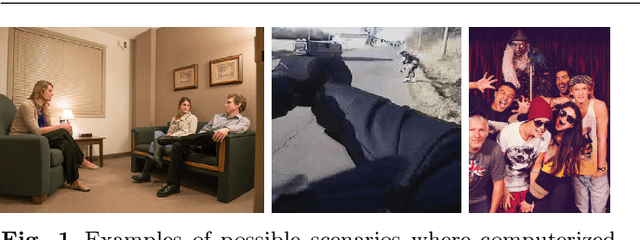

Abstract: The emergence of artificial emotional intelligence technology is revolutionizing the fields of computers and robotics, allowing for a new level of communication and understanding of human behavior that was once thought impossible. While recent advancements in deep learning have transformed the field of computer vision, automated understanding of evoked or expressed emotions in visual media remains in its infancy. This foundering stems from the absence of a universally accepted definition of "emotion", coupled with the inherently subjective nature of emotions and their intricate nuances. In this article, we provide a comprehensive, multidisciplinary overview of the field of emotion analysis in visual media, drawing on insights from psychology, engineering, and the arts. We begin by exploring the psychological foundations of emotion and the computational principles that underpin the understanding of emotions from images and videos. We then review the latest research and systems within the field, accentuating the most promising approaches. We also discuss the current technological challenges and limitations of emotion analysis, underscoring the necessity for continued investigation and innovation. We contend that this represents a "Holy Grail" research problem in computing and delineate pivotal directions for future inquiry. Finally, we examine the ethical ramifications of emotion-understanding technologies and contemplate their potential societal impacts. Overall, this article endeavors to equip readers with a deeper understanding of the domain of emotion analysis in visual media and to inspire further research and development in this captivating and rapidly evolving field.

ARBEE: Towards Automated Recognition of Bodily Expression of Emotion In the Wild

Aug 28, 2018

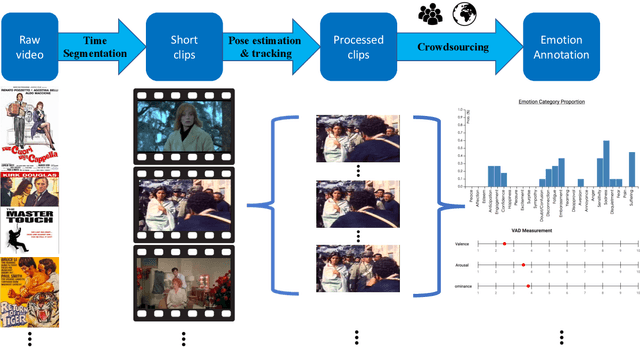

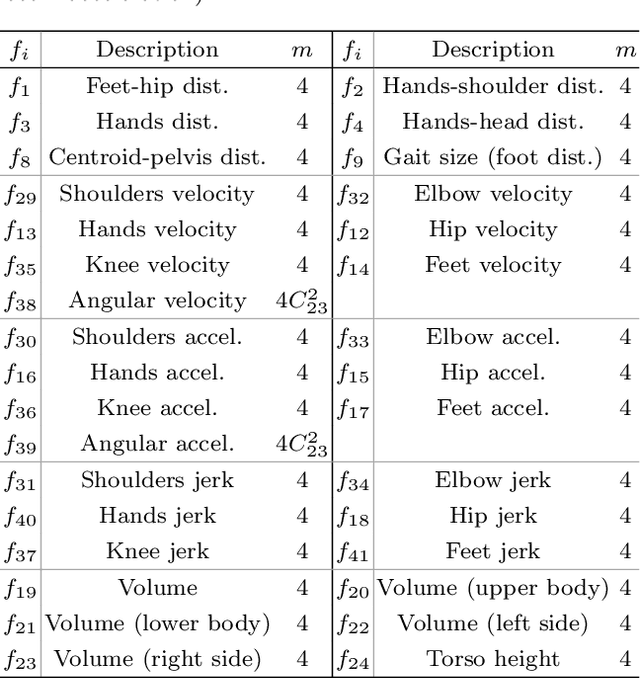

Abstract: Humans are arguably innately prepared to comprehend others' emotional expressions from subtle body movements. A number of robotic applications become possible if robots or computers can be empowered with this capability. Recognizing human bodily expression automatically in unconstrained situations, however, is daunting due to the lack of a full understanding of the relationship between body movements and emotional expressions. The current research, as a multidisciplinary effort among computer and information sciences, psychology, and statistics, proposes a scalable and reliable crowdsourcing approach for collecting in-the-wild perceived emotion data for computers to learn to recognize human body language. To do this, a large and growing annotated dataset with 9,876 video clips of body movements and 13,239 human characters, named BoLD (Body Language Dataset), has been created. Comprehensive statistical analysis revealed many interesting insights from the dataset. A system to model the emotional expressions based on bodily movements, named ARBEE (Automated Recognition of Bodily Expression of Emotion), has also been developed and evaluated. Our feature analysis shows the effectiveness of Laban Movement Analysis (LMA) features in characterizing arousal. Our experiments using a deep model further demonstrate computability of bodily expression. The dataset and findings presented in this work will likely serve as a launchpad for multiple future discoveries in body language understanding that will make future robots more useful as they interact and collaborate with humans.

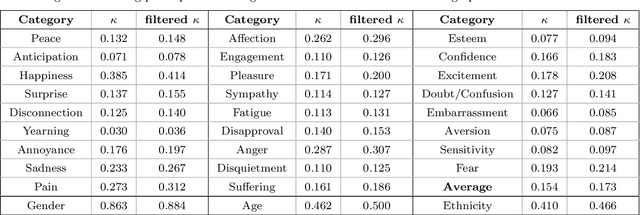

Probabilistic Multigraph Modeling for Improving the Quality of Crowdsourced Affective Data

Jan 06, 2017

Abstract: We propose a probabilistic approach to joint modeling of participants' reliability and humans' regularity in crowdsourced affective studies. Reliability measures how likely a subject is to respond to a question seriously, and regularity measures how often a human will agree with other seriously entered responses coming from a targeted population. Crowdsourcing-based studies or experiments, which rely on human self-reported affect, pose additional challenges as compared with typical crowdsourcing studies that attempt to acquire concrete non-affective labels of objects. The reliability of participants has been extensively studied for typical non-affective crowdsourcing studies, whereas the regularity of humans in an affective experiment in its own right has not been thoroughly considered. It has often been observed that different individuals exhibit different feelings on the same test question, which does not have a sole correct response in the first place. High reliability of responses from one individual thus cannot conclusively result in high consensus across individuals. Instead, globally testing consensus of a population is of interest to investigators. Built upon the agreement multigraph among tasks and workers, our probabilistic model differentiates subject regularity from population reliability. We demonstrate the method's effectiveness for in-depth robust analysis of large-scale crowdsourced affective data, including emotion and aesthetic assessments collected by presenting visual stimuli to human subjects.
