Michele Tonutti

Pacmed

Uncertainty estimation for classification and risk prediction in medical settings

Apr 13, 2020

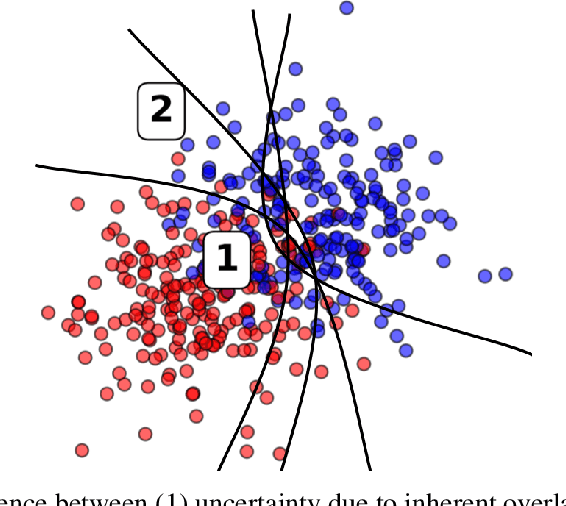

Abstract: In a data-scarce field such as healthcare, where models often deliver predictions on patients with rare conditions, the ability to measure the uncertainty of a model's prediction could potentially lead to improved effectiveness of decision support tools and increased user trust. This work advances the understanding of uncertainty estimation for classification and risk prediction on medical tabular data, in a three-fold way. First, we analyze two families of promising methods and discuss the preferred approach for uncertainty estimation for classification and risk prediction. Second, these remarks are enriched by considerations of the interplay of uncertainty estimation with class imbalance, post-modeling calibration and other modeling procedures. Finally, we expand and refine the set of heuristics to select an uncertainty estimation technique, introducing tests for clinically-relevant scenarios such as generalization to uncommon pathologies, changes in clinical protocol and simulations of corrupted data. These findings are supported by an array of experiments on toy and real-world data.

Bayesian Modelling in Practice: Using Uncertainty to Improve Trustworthiness in Medical Applications

Jun 20, 2019

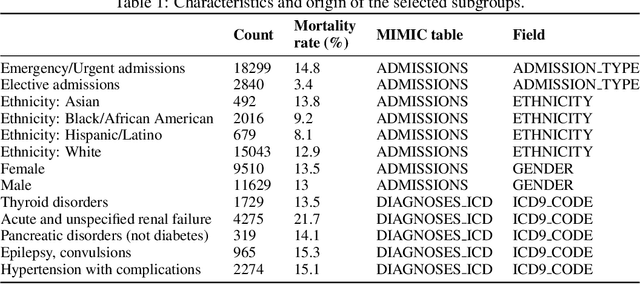

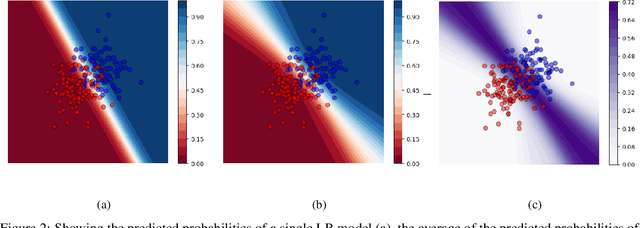

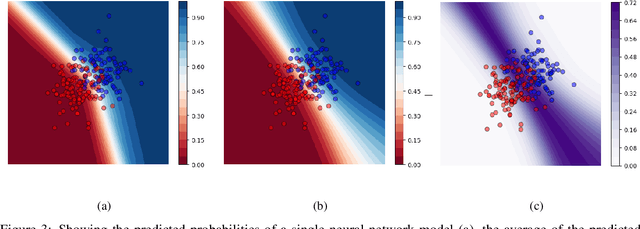

Abstract: The Intensive Care Unit (ICU) is a hospital department where machine learning has the potential to provide valuable assistance in clinical decision making. Classical machine learning models usually only provide point-estimates and no uncertainty of predictions. In practice, uncertain predictions should be presented to doctors with extra care in order to prevent potentially catastrophic treatment decisions. In this work we show how Bayesian modelling and the predictive uncertainty that it provides can be used to mitigate risk of misguided prediction and to detect out-of-domain examples in a medical setting. We derive analytically a bound on the prediction loss with respect to predictive uncertainty. The bound shows that uncertainty can mitigate loss. Furthermore, we apply a Bayesian Neural Network to the MIMIC-III dataset, predicting risk of mortality of ICU patients. Our empirical results show that uncertainty can indeed prevent potential errors and reliably identifies out-of-domain patients. These results suggest that Bayesian predictive uncertainty can greatly improve trustworthiness of machine learning models in high-risk settings such as the ICU.
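
As a rough illustration of how predictive uncertainty might be used to flag predictions for extra care, the sketch below averages risk predictions over several sampled models, computes the entropy of that mean, and defers to a clinician when the entropy exceeds a threshold. The function names, the sample values, and the 0.6-nat threshold are assumptions chosen for illustration; none of them are taken from the paper.

```python
from __future__ import annotations

import math
from statistics import mean


def binary_entropy(p: float) -> float:
    """Entropy (in nats) of a Bernoulli distribution with success probability p."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log(p) + (1.0 - p) * math.log(1.0 - p))


def predictive_uncertainty(samples: list[float]) -> tuple[float, float]:
    """Return (mean risk, entropy of the mean risk) over sampled predictions.

    `samples` stands in for mortality-risk predictions obtained from repeated
    draws of the model weights (hypothetical values, not MIMIC-III output).
    """
    risk = mean(samples)
    return risk, binary_entropy(risk)


def should_defer(samples: list[float], threshold: float = 0.6) -> bool:
    """Flag a prediction for clinician review when its entropy exceeds the threshold."""
    _, entropy = predictive_uncertainty(samples)
    return entropy > threshold
```

With these assumed values, `should_defer([0.02, 0.03, 0.01])` leaves a confident low-risk prediction unflagged, while `should_defer([0.3, 0.7, 0.5])` flags a prediction whose samples disagree. In practice the threshold would need to be chosen on validation data.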

Robust and Subject-Independent Driving Manoeuvre Anticipation through Domain-Adversarial Recurrent Neural Networks

Feb 26, 2019

Abstract: Through deep learning and computer vision techniques, driving manoeuvres can be predicted accurately a few seconds in advance. Even though adapting a learned model to new drivers and different vehicles is key for robust driver-assistance systems, this problem has received little attention so far. This work proposes to tackle this challenge through domain adaptation, a technique closely related to transfer learning. A proof of concept for the application of a Domain-Adversarial Recurrent Neural Network (DA-RNN) to multi-modal time series driving data is presented, in which domain-invariant features are learned by maximizing the loss of an auxiliary domain classifier. Our implementation is evaluated using a leave-one-driver-out approach on individual drivers from the Brain4Cars dataset, as well as using a new dataset acquired through driving simulations, yielding an average increase in performance of 30% and 114% respectively compared to no adaptation. We also show the importance of fine-tuning sections of the network to optimise the extraction of domain-independent features. The results demonstrate the applicability of the approach to driver-assistance systems as well as training and simulation environments.
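
Domain-adversarial training of this kind is often implemented with a gradient reversal layer: it passes features through unchanged on the forward pass and negates (and optionally scales) the gradient on the backward pass, so the feature extractor is pushed to increase the domain classifier's loss. The abstract does not say whether the paper uses exactly this mechanism, so the framework-free sketch below is only an assumption-laden illustration; the class name `GradientReversal` and the scaling factor `lam` are hypothetical.

```python
class GradientReversal:
    """Toy gradient reversal layer (illustrative, not the paper's code)."""

    def __init__(self, lam=1.0):
        # lam scales how strongly the reversed gradient affects the features.
        self.lam = lam

    def forward(self, features):
        # Identity on the forward pass: the domain classifier sees the features as-is.
        return list(features)

    def backward(self, grad_output):
        # Negate and scale the gradient flowing back into the feature extractor,
        # so that descending on it increases the domain classifier's loss.
        return [-self.lam * g for g in grad_output]
```

In a deep learning framework the same idea would be expressed as a custom autograd operation placed between the feature extractor and the domain classifier.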

* 40 pages, 4 figures. Published online in Robotics and Autonomous Systems
