Michele Mazza

Detection and Characterization of Coordinated Online Behavior: A Survey

Aug 02, 2024

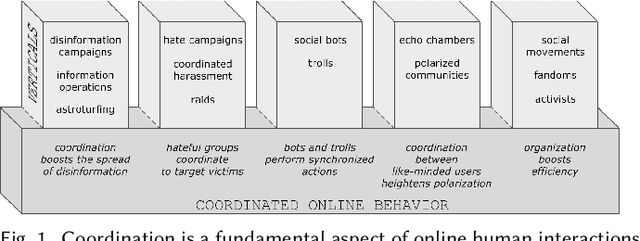

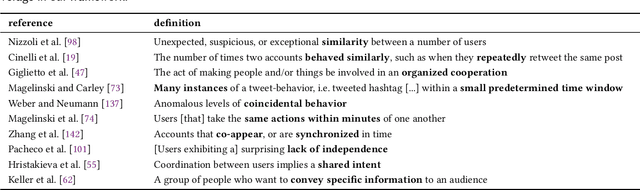

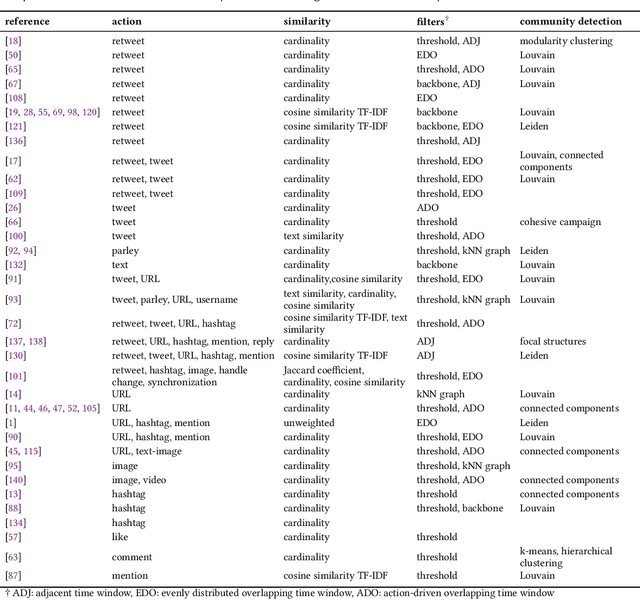

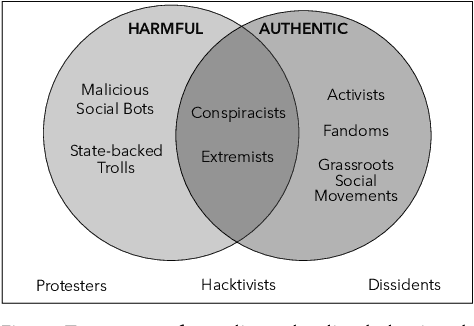

Abstract: Coordination is a fundamental aspect of life. The advent of social media has also made it integral to online human interactions, such as those that characterize thriving online communities and social movements. At the same time, coordination is core to effective disinformation, manipulation, and hate campaigns. This survey collects, categorizes, and critically discusses the body of work produced as a result of the growing interest in coordinated online behavior. We reconcile industry and academic definitions, propose a comprehensive framework to study coordinated online behavior, and review and critically discuss the existing detection and characterization methods. Our analysis identifies open challenges and promising directions of research, serving as a guide for scholars, practitioners, and policymakers in understanding and addressing the complexities inherent to online coordination.

Modularity-based approach for tracking communities in dynamic social networks

Feb 24, 2023

Abstract: Community detection is a fundamental task in social network analysis. Online social networks have dramatically increased the volume and speed of interactions among users, enabling advanced analysis of these dynamics. Despite a growing interest in tracking the evolution of groups of users in real-world social networks, most community detection efforts focus on communities within static networks. Here, we describe a framework for tracking communities over time in a dynamic network, where a series of significant events is identified for each community. To this end, a modularity-based strategy is proposed to effectively detect and track dynamic communities. The potential of our framework is shown by conducting extensive experiments on synthetic networks containing embedded events. Results indicate that our framework outperforms other state-of-the-art methods. In addition, we briefly explore how the proposed approach can identify dynamic communities in a Twitter network composed of more than 60,000 users who posted over 5 million tweets throughout 2020. The proposed framework can be applied to different social networks and provides a valuable tool to understand the evolution of communities in dynamic social networks.
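The abstract does not spell out the modularity-based strategy itself, so the following is only a minimal sketch of the standard Newman modularity score that such strategies typically build on, computed for a single static snapshot of an undirected, unweighted graph. The function name `modularity`, the toy edge list, and the community labels are hypothetical and are not taken from the paper.

```python
from collections import defaultdict


def modularity(edges, community_of):
    """Newman modularity Q of a partition of an undirected, unweighted graph.

    Q = sum over communities c of  L_c / m - (d_c / (2 m))^2
    where m is the number of edges, L_c the number of edges with both
    endpoints in c, and d_c the sum of degrees of the nodes in c.
    This is the textbook definition, not the paper's tracking procedure.
    """
    m = len(edges)
    if m == 0:
        raise ValueError("modularity is undefined for a graph with no edges")

    internal_edges = defaultdict(int)  # L_c
    degree_sum = defaultdict(int)      # d_c
    for u, v in edges:
        cu, cv = community_of[u], community_of[v]
        degree_sum[cu] += 1
        degree_sum[cv] += 1
        if cu == cv:
            internal_edges[cu] += 1

    return sum(
        internal_edges[c] / m - (degree_sum[c] / (2 * m)) ** 2
        for c in degree_sum
    )


# Hypothetical toy snapshot: two triangles joined by a single bridge edge.
edges = [(0, 1), (1, 2), (0, 2), (3, 4), (4, 5), (3, 5), (2, 3)]
two_groups = {0: "A", 1: "A", 2: "A", 3: "B", 4: "B", 5: "B"}
one_group = {node: "A" for node in range(6)}

q_split = modularity(edges, two_groups)
q_merged = modularity(edges, one_group)
```

On this toy graph, splitting the two triangles scores higher than merging all nodes into one community, which is the kind of signal a modularity-based method can use when deciding how to group users in each time window.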

RTbust: Exploiting Temporal Patterns for Botnet Detection on Twitter

Feb 12, 2019

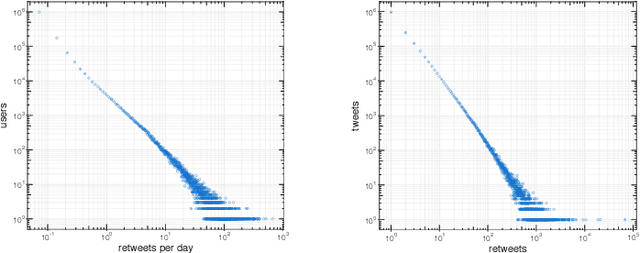

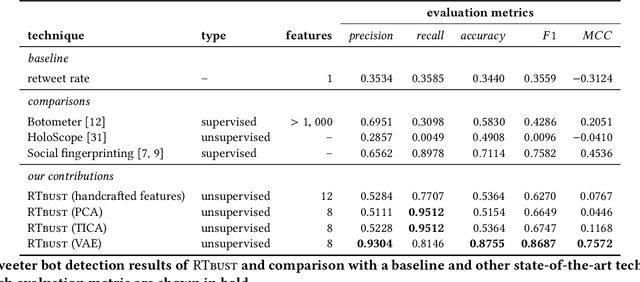

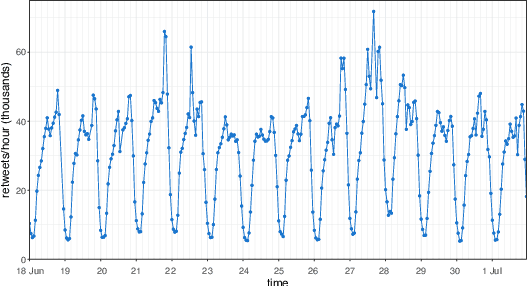

Abstract: Within online social networks (OSNs), many of our supposedly online friends may instead be fake accounts called social bots, part of large groups that purposely re-share targeted content. Here, we study retweeting behaviors on Twitter, with the ultimate goal of detecting retweeting social bots. We collect a dataset of 10M retweets. We design a novel visualization that we leverage to highlight benign and malicious patterns of retweeting activity. In this way, we uncover a 'normal' retweeting pattern that is peculiar to human-operated accounts, and 3 suspicious patterns related to bot activities. Then, we propose a bot detection technique that stems from the previous exploration of retweeting behaviors. Our technique, called Retweet-Buster (RTbust), leverages unsupervised feature extraction and clustering. An LSTM autoencoder converts the retweet time series into compact and informative latent feature vectors, which are then clustered with a hierarchical density-based algorithm. Accounts belonging to large clusters characterized by malicious retweeting patterns are labeled as bots. RTbust obtains excellent detection results, with F1 = 0.87, whereas competitors achieve F1 < 0.76. Finally, we apply RTbust to a large dataset of retweets, uncovering 2 previously unknown active botnets with hundreds of accounts.
