Michele Colledanchise

Towards Confidence-guided Shape Completion for Robotic Applications

Sep 09, 2022

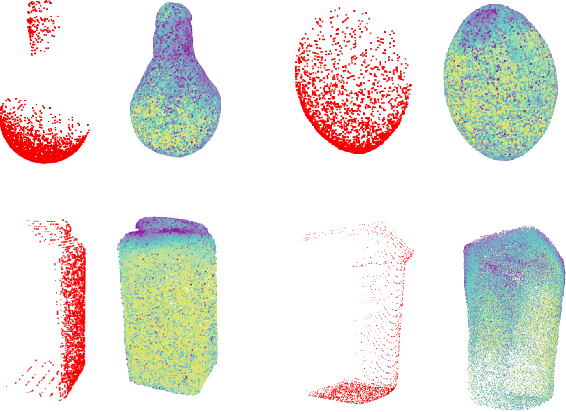

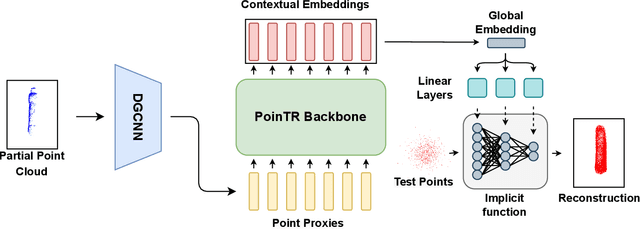

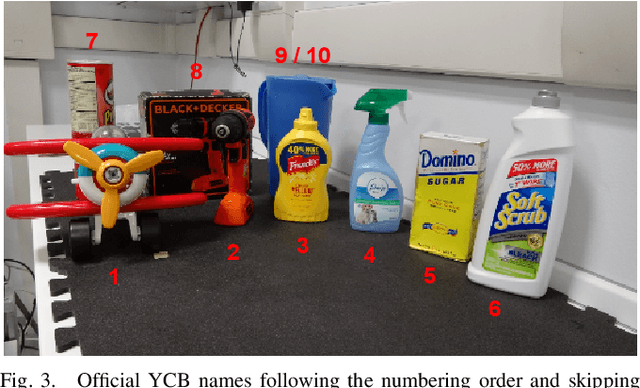

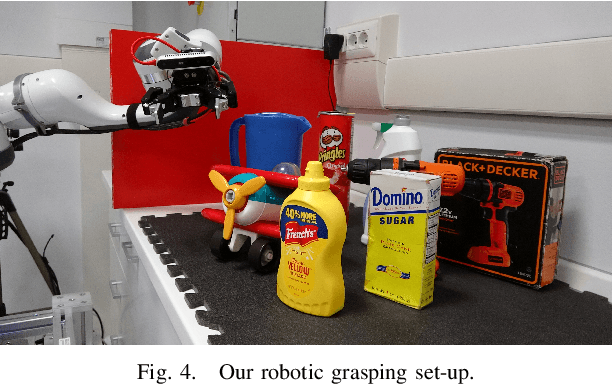

Abstract: Many robotic tasks involving some form of 3D visual perception greatly benefit from a complete knowledge of the working environment. However, robots often have to tackle unstructured environments and their onboard visual sensors can only provide incomplete information due to limited workspaces, clutter or object self-occlusion. In recent years, deep learning architectures for shape completion have begun gaining traction as an effective means of inferring a complete 3D object representation from partial visual data. Nevertheless, most of the existing state-of-the-art approaches provide a fixed output resolution in the form of voxel grids, strictly related to the size of the neural network output stage. While this is enough for some tasks, e.g. obstacle avoidance in navigation, grasping and manipulation require finer resolutions and simply scaling up the neural network outputs is computationally expensive. In this paper, we address this limitation by proposing an object shape completion method based on an implicit 3D representation providing a confidence value for each reconstructed point. As a second contribution, we propose a gradient-based method for efficiently sampling such an implicit function at an arbitrary resolution, tunable at inference time. We experimentally validate our approach by comparing reconstructed shapes with ground truths, and by deploying our shape completion algorithm in a robotic grasping pipeline. In both cases, we compare results with a state-of-the-art shape completion approach.

One-Shot Open-Set Skeleton-Based Action Recognition

Sep 09, 2022

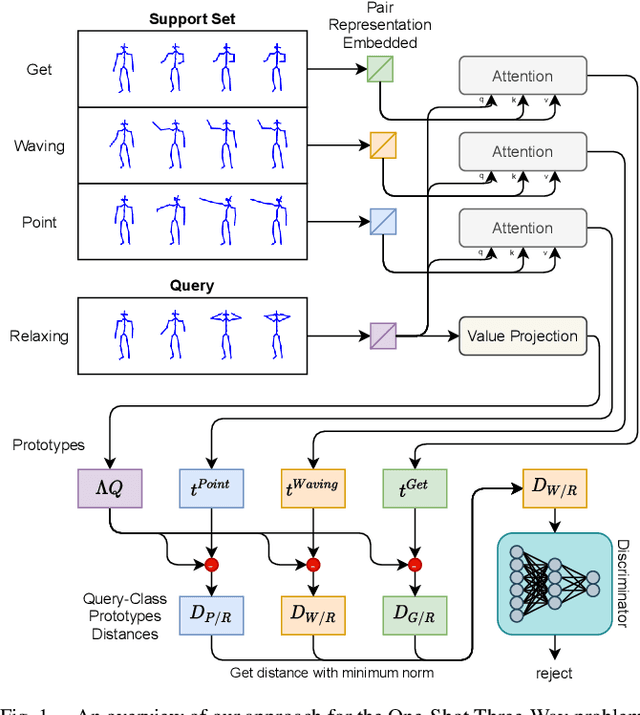

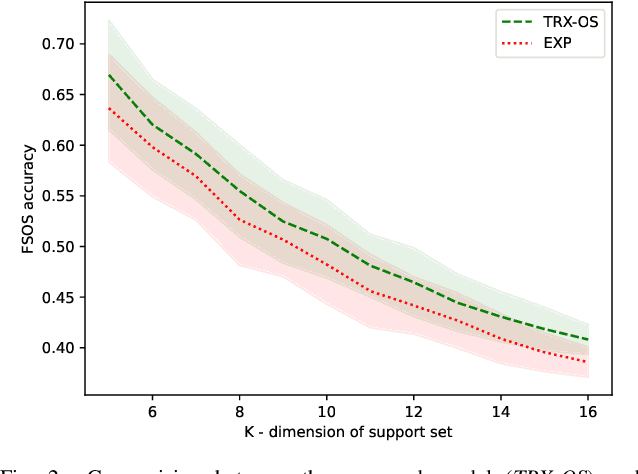

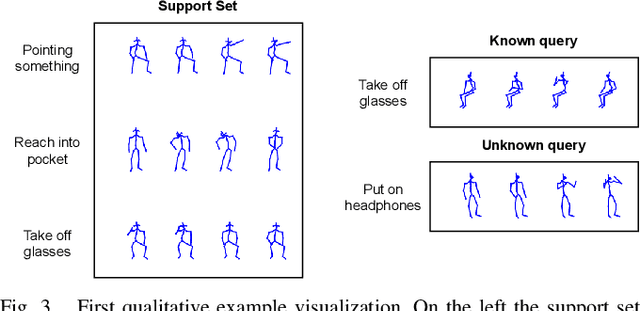

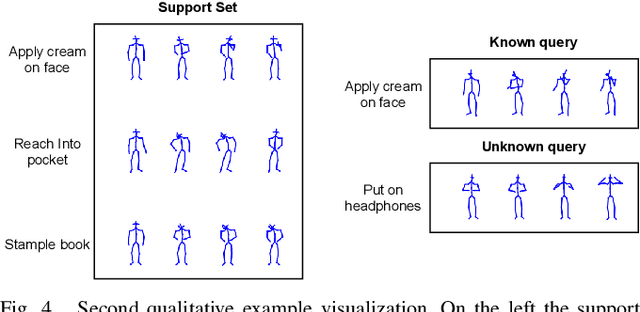

Abstract: Action recognition is a fundamental capability for humanoid robots to interact and cooperate with humans. This application requires the action recognition system to be designed so that new actions can be easily added, while unknown actions are identified and ignored. In recent years, deep-learning approaches have represented the principal solution to the Action Recognition problem. However, most models require a large dataset of manually-labeled samples. In this work we target One-Shot deep-learning models, because they can deal with just a single instance per class. Unfortunately, One-Shot models assume that, at inference time, the action to recognize falls into the support set and they fail when the action lies outside the support set. Few-Shot Open-Set Recognition (FSOSR) solutions attempt to address that flaw, but current solutions consider only static images and not sequences of images. Static images remain insufficient to discriminate actions such as sitting-down and standing-up. In this paper we propose a novel model that addresses the FSOSR problem with a One-Shot model that is augmented with a discriminator that rejects unknown actions. This model is useful for applications in humanoid robotics, because it allows new classes to be added easily and determines whether an input sequence is among the ones that are known to the system. We show how to train the whole model in an end-to-end fashion and we perform quantitative and qualitative analyses. Finally, we provide real-world examples.
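The open-set behavior described in this abstract can be illustrated with a minimal sketch. It is not the paper's model: the learned discriminator is replaced here by a plain distance threshold, and the embeddings, the `classify` function, and the threshold value are all hypothetical. The sketch only shows the decision rule, which picks the closest class in the support set and rejects the query when nothing is close enough.

```python
import math
from typing import Dict, Optional, Sequence


def classify(
    query: Sequence[float],
    support: Dict[str, Sequence[float]],
    threshold: float,
) -> Optional[str]:
    """Return the nearest support label, or None if the query is rejected.

    `support` holds one embedding per known action (the one-shot setting).
    The distance threshold is a stand-in for a learned discriminator.
    """
    best_label, best_dist = None, math.inf
    for label, embedding in support.items():
        dist = math.dist(query, embedding)  # Euclidean distance
        if dist < best_dist:
            best_label, best_dist = label, dist
    # Open-set rejection: an action far from every support sample is unknown.
    return best_label if best_dist <= threshold else None


# Hypothetical 2D embeddings, one sample per known action.
support_set = {"wave": [1.0, 0.0], "sit_down": [0.0, 1.0]}

known = classify([0.9, 0.1], support_set, threshold=0.5)
unknown = classify([5.0, 5.0], support_set, threshold=0.5)
```

Adding a new action in this sketch only requires inserting one more entry into `support_set`, which mirrors the requirement that new classes be easy to add.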

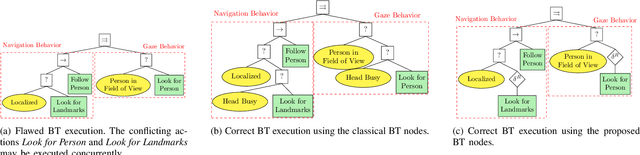

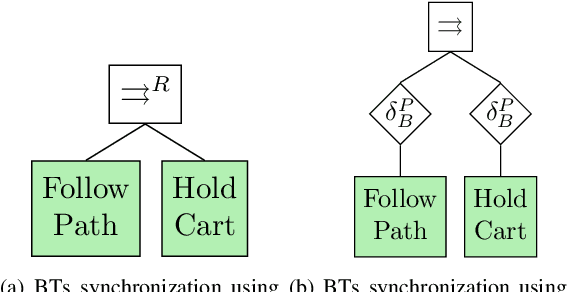

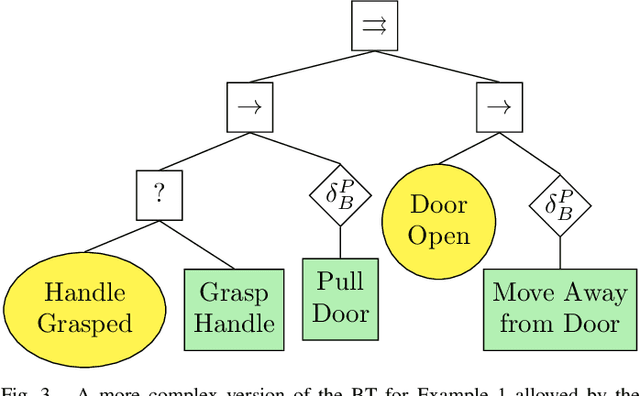

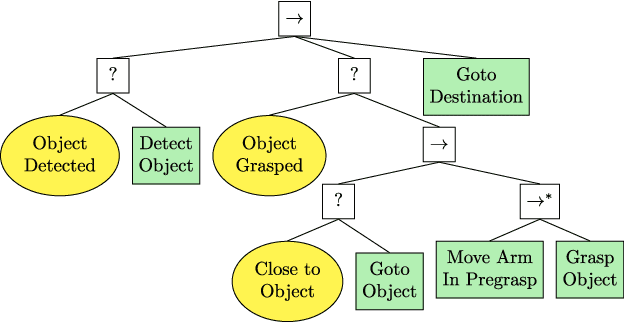

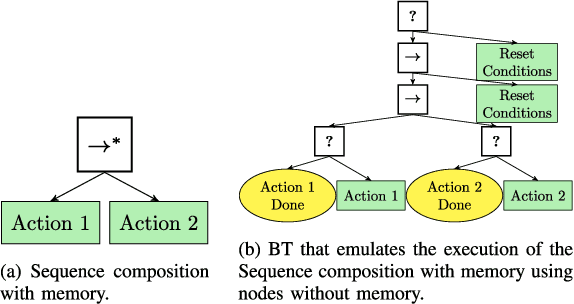

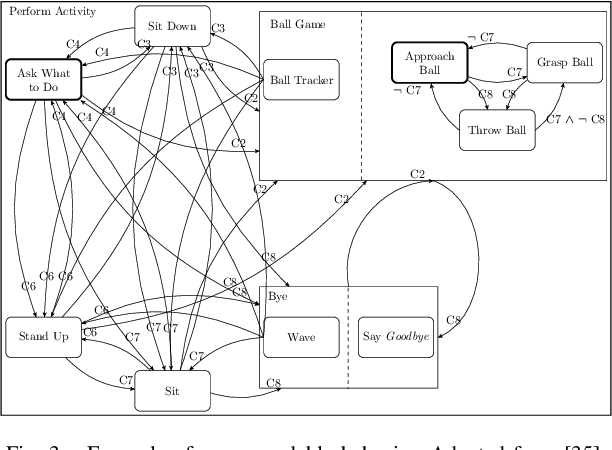

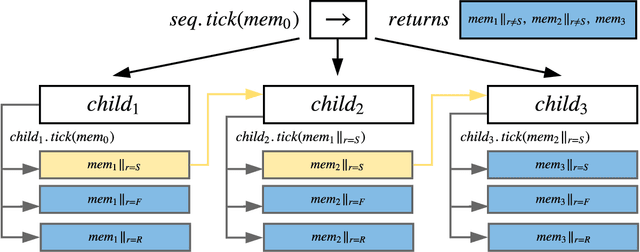

Handling Concurrency in Behavior Trees

Oct 22, 2021

Abstract: This paper addresses the concurrency issues affecting Behavior Trees (BTs), a popular tool to model the behaviors of autonomous agents in the video game and robotics industries. BT designers can easily build complex behaviors by composing simpler ones, which represents a key advantage of BTs. The parallel composition of BTs offers a way to combine concurrent behaviors that has high potential, since composing pre-existing BTs in parallel is easier than composing classical control architectures in parallel, such as finite state machines or teleo-reactive programs. However, BT designers rarely use such composition due to underlying concurrency problems similar to the ones faced in concurrent programming. As a result, the parallel composition, despite its potential, finds application only in the composition of simple behaviors or where the designer can guarantee the absence of conflicts by design. In this paper, we define two new BT nodes to tackle the concurrency problems in BTs and we show how to exploit them to create predictable behaviors. In addition, we introduce measures to assess execution performance and show how different design choices affect them. We validate our approach in both simulations and the real world. Simulated experiments provide statistically significant data, whereas real-world experiments show the applicability of our method on real robots. We provided an open-source implementation of the novel BT formulation and published all the source code to reproduce the numerical examples and experiments.

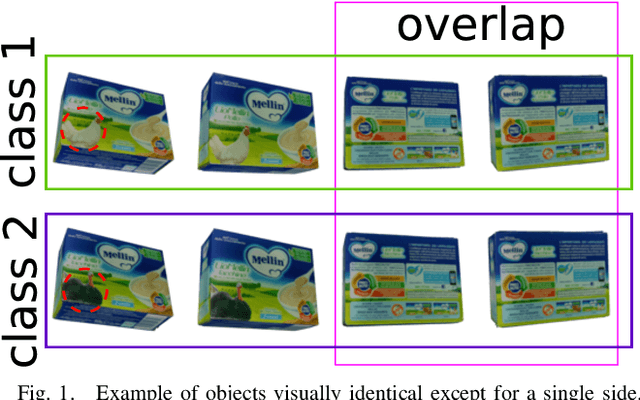

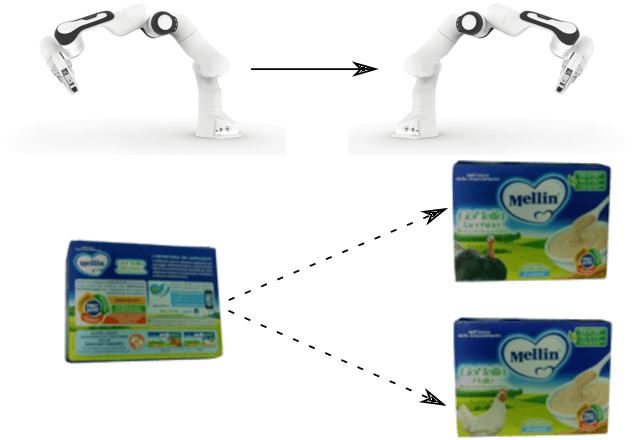

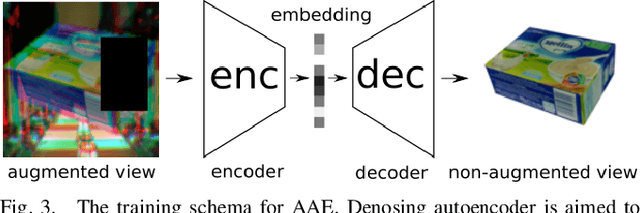

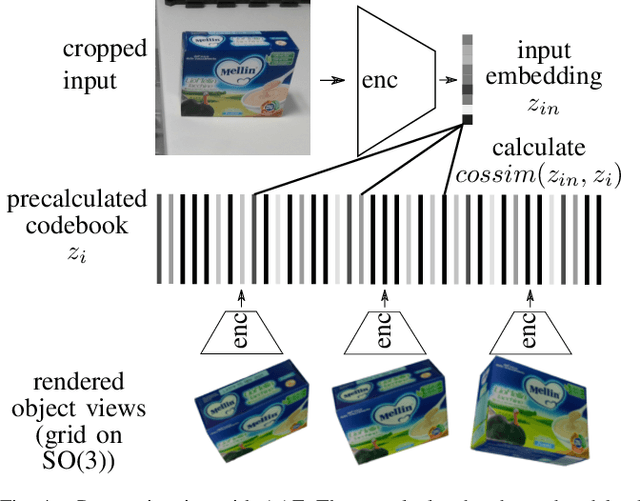

Active Perception for Ambiguous Objects Classification

Aug 02, 2021

Abstract: Recent visual pose estimation and tracking solutions provide notable results on popular datasets such as T-LESS and YCB. However, in the real world, we can find ambiguous objects that do not allow exact classification and detection from a single view. In this work, we propose a framework that, given a single view of an object, provides the coordinates of a next viewpoint to discriminate the object from similar ones, if any, and eliminate ambiguities. We also describe a complete pipeline from a real object's scans to the viewpoint selection and classification. We validate our approach with a Franka Emika Panda robot and common household objects that exhibit ambiguities. We released the source code to reproduce our experiments.

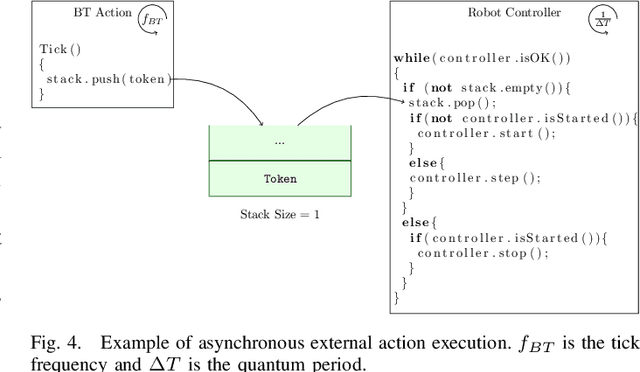

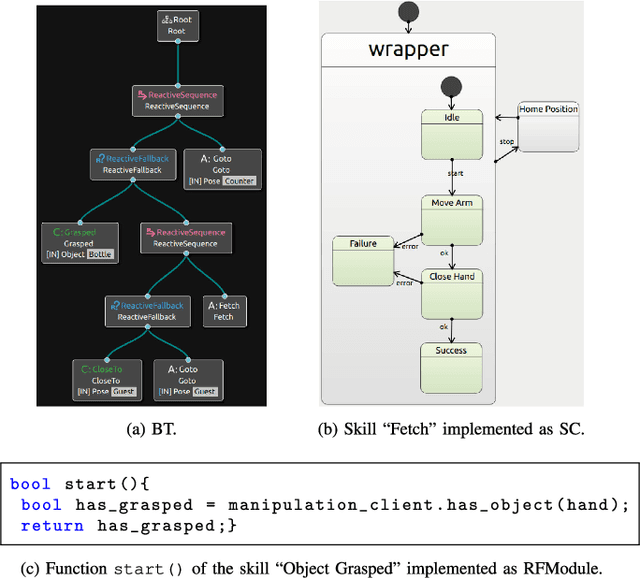

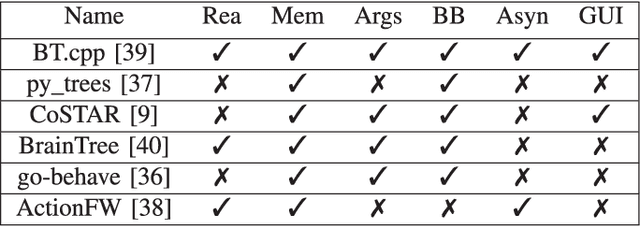

On the Implementation of Behavior Trees in Robotics

Jun 29, 2021

Abstract: There is a growing interest in Behavior Trees (BTs) as a tool to describe and implement robot behaviors. BTs were devised in the video game industry and their adoption in robotics resulted in the development of ad-hoc libraries to design and execute BTs that fit complex robotics software architectures. While there is broad consensus on how BTs work, some characteristics rely on the implementation choices made in the specific software library used. In this letter, we outline practical aspects in the adoption of BTs and the solutions devised by the robotics community to fully exploit the advantages of BTs in real robots. We also give an overview of the solutions proposed in open-source libraries used in robotics, we show how BTs fit in robotic software architecture, and we present a use case example.

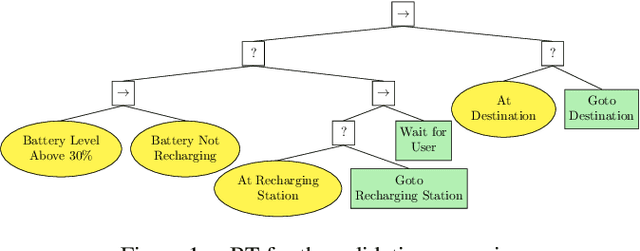

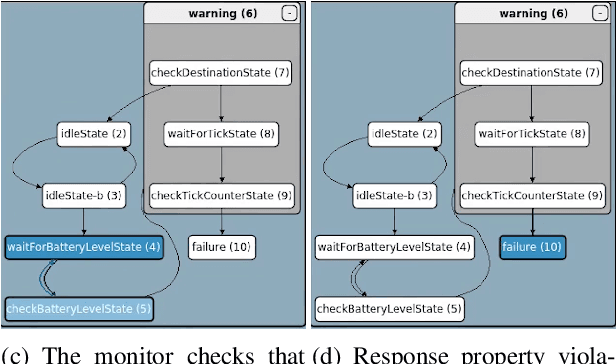

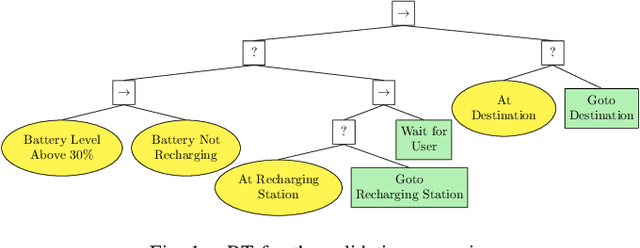

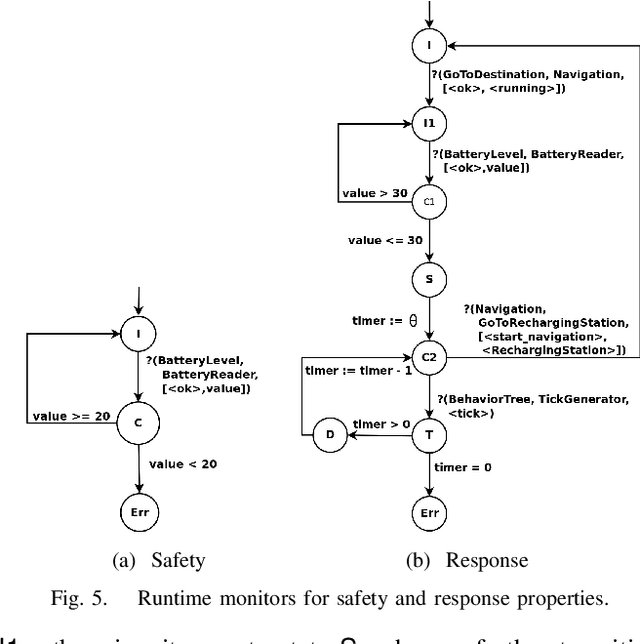

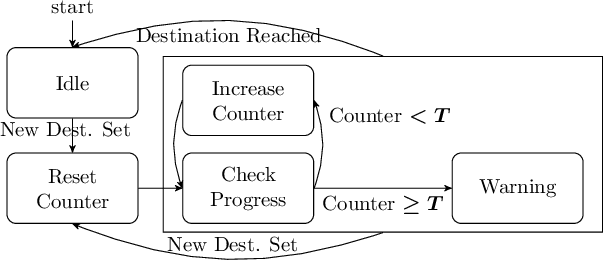

A Toolchain to Design, Execute, and Monitor Robots Behaviors

Jun 29, 2021

Abstract: In this paper, we present a toolchain to design, execute, and verify robot behaviors. The toolchain follows the guidelines defined by the EU H2020 project RobMoSys and encodes the robot deliberation as a Behavior Tree (BT), a directed tree where the internal nodes model behavior composition and leaf nodes model action or measurement operations. Such leaf nodes take the form of a statechart (SC), which runs in separate threads, whose states perform basic arithmetic operations and send commands to the robot. The toolchain provides the ability to define a runtime monitor for a given system specification that warns the user whenever a given specification is violated. We validated the toolchain in a simulated experiment that we made reproducible in an OS-virtualization environment.
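As a rough illustration of the tree structure described in this abstract, the sketch below models internal nodes as composition operators and leaves as action or measurement callables. It follows the commonly used Sequence and Fallback semantics of Behavior Trees. The class names and the example tree are assumptions made for illustration, not the toolchain's API, and the leaves here are plain functions rather than statecharts running in threads.

```python
from enum import Enum
from typing import Callable, List


class Status(Enum):
    SUCCESS = "success"
    FAILURE = "failure"
    RUNNING = "running"


class Leaf:
    """Leaf node: wraps a callable that performs an action or a measurement."""

    def __init__(self, name: str, fn: Callable[[], Status]):
        self.name = name
        self.fn = fn

    def tick(self) -> Status:
        return self.fn()


class Sequence:
    """Internal node: ticks children in order, stops at the first non-success."""

    def __init__(self, children: List):
        self.children = children

    def tick(self) -> Status:
        for child in self.children:
            status = child.tick()
            if status != Status.SUCCESS:
                return status
        return Status.SUCCESS


class Fallback:
    """Internal node: ticks children in order, stops at the first non-failure."""

    def __init__(self, children: List):
        self.children = children

    def tick(self) -> Status:
        for child in self.children:
            status = child.tick()
            if status != Status.FAILURE:
                return status
        return Status.FAILURE


# Hypothetical behavior: if not yet at the goal, check the battery, then move.
tree = Fallback([
    Leaf("at_goal", lambda: Status.FAILURE),
    Sequence([
        Leaf("battery_ok", lambda: Status.SUCCESS),
        Leaf("move_to_goal", lambda: Status.RUNNING),
    ]),
])

result = tree.tick()
```

Here the measurement `at_goal` fails, so the Fallback moves on to the Sequence, whose last leaf is still running; the root therefore reports `Status.RUNNING` for this tick.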

* arXiv admin note: text overlap with arXiv:2106.12474

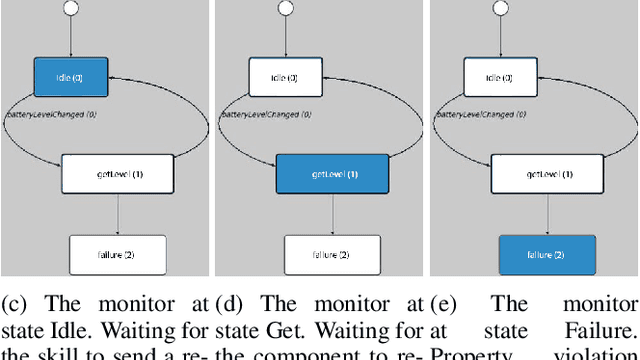

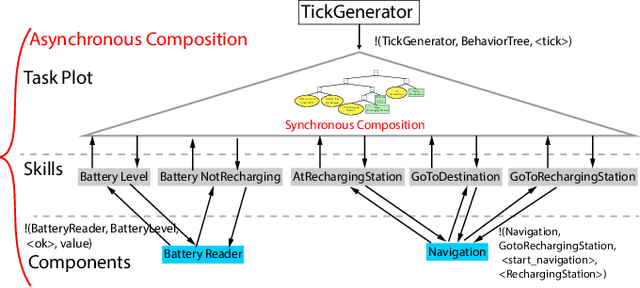

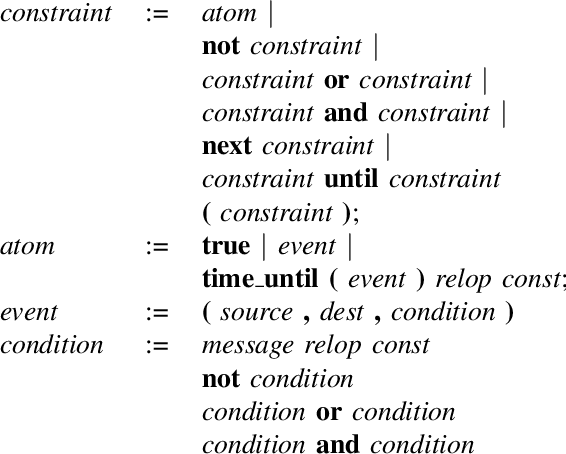

Formalizing the Execution Context of Behavior Trees for Runtime Verification of Deliberative Policies

Jun 23, 2021

Abstract: Our research aims to enable automated property verification of deliberative components in robot control architectures. We focus on a formalization of the execution context of Behavior Trees (BTs) to provide a scalable, yet formally grounded, methodology to enable runtime verification and prevent unexpected robot behaviors from hampering deployment. To this end, we consider a message-passing model that accommodates both synchronous and asynchronous composition of parallel components, in which BTs and other components execute and interact according to the communication patterns commonly adopted in robotic software architectures. We introduce a formal property specification language to encode requirements and build runtime monitors. We performed a set of experiments both in simulation and on a real robot, demonstrating the feasibility of our approach in a realistic application, and its integration in a typical robot software architecture. We also provide an OS-level virtualization environment to reproduce the experiments in the simulated scenario.

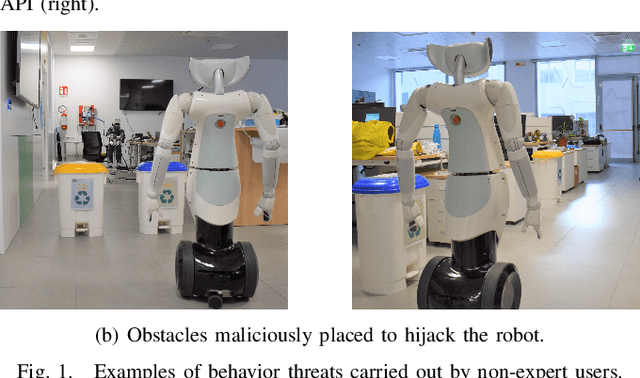

A New Paradigm of Threats in Robotics Behaviors

Mar 24, 2021

Abstract: Robot applications in our daily life increase at an unprecedented pace. As robots will soon operate "out in the wild", we must identify the safety and security vulnerabilities they will face. Robotics researchers and manufacturers focus their attention on new, cheaper, and more reliable applications. Still, they often disregard the operability in adversarial environments where a trusted or untrusted user can jeopardize or even alter the robot's task. In this paper, we identify a new paradigm of security threats in the next generation of robots. These threats fall beyond the known hardware or network-based ones, and we must find new solutions to address them. These new threats include malicious use of the robot's privileged access, tampering with the robot's sensor system, and tricking the robot's deliberation into harmful behaviors. We provide a taxonomy of attacks that exploit these vulnerabilities with realistic examples, and we outline effective countermeasures to better prevent, detect, and mitigate them.

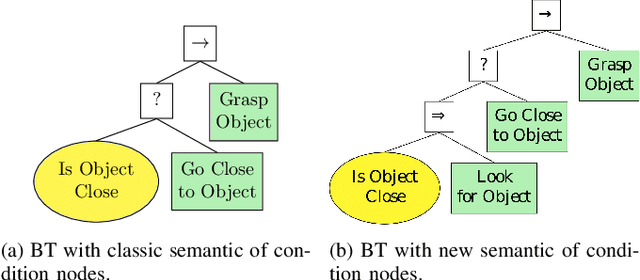

Task Planning with Belief Behavior Trees

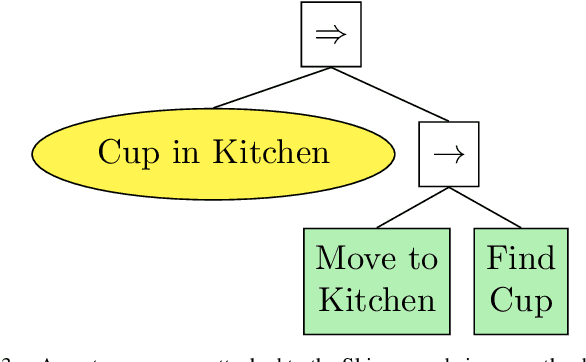

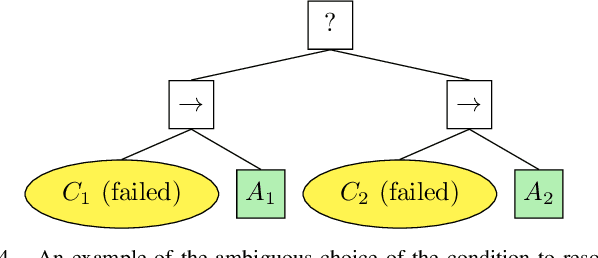

Aug 21, 2020

Abstract: In this paper, we propose Belief Behavior Trees (BBTs), an extension to Behavior Trees (BTs) that allows the automatic creation of a policy that controls a robot in partially observable environments. We extend the semantics of BTs to account for the uncertainty that affects both the condition and action nodes of the BT. The tree gets synthesized following a planning strategy for BTs proposed recently: from a set of goal conditions we iteratively select a goal and find the action, or in general the subtree, that satisfies it. Such an action may have preconditions that do not hold. For those preconditions, we find an action or subtree in the same fashion. We extend this approach by including, in the planner, actions whose purpose is to reduce the uncertainty that affects the value of a condition node in the BT (for example, turning on the lights to have better lighting conditions). We demonstrate that BBTs allow task planning with non-deterministic outcomes for actions. We provide experimental validation of our approach in a real robotic scenario and - for the sake of reproducibility - in a simulated one.
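The goal-driven synthesis described in this abstract can be sketched as a simple backward-chaining routine. This is a deterministic simplification under stated assumptions: it ignores belief states and uncertainty entirely, it expands every precondition eagerly instead of only those that do not hold, and the action table and the `expand` function are hypothetical. Each condition is replaced by a fallback between the condition itself and a sequence that first achieves the action's preconditions and then runs the action.

```python
from typing import Dict, List, Tuple

# Hypothetical action model: action name -> (preconditions, effects).
ACTIONS: Dict[str, Tuple[List[str], List[str]]] = {
    "turn_on_light": ([], ["light_on"]),
    "move_to_cup": ([], ["near_cup"]),
    "grasp_cup": (["light_on", "near_cup"], ["holding_cup"]),
}


def expand(condition, actions, depth=0, max_depth=10):
    """Return a nested-tuple subtree that tries to achieve `condition`.

    The result is either the bare condition (no action achieves it) or
    ("fallback", condition, ("sequence", <precondition subtrees>, action)).
    """
    if depth > max_depth:
        raise RecursionError("precondition chain too deep (possible cycle)")
    for name, (preconditions, effects) in actions.items():
        if condition in effects:
            # Recursively expand each precondition in the same fashion.
            subtrees = tuple(
                expand(p, actions, depth + 1, max_depth) for p in preconditions
            )
            return ("fallback", condition, ("sequence",) + subtrees + (name,))
    return condition


plan = expand("holding_cup", ACTIONS)
```

In this toy model, `turn_on_light` plays the role of the uncertainty-reducing action mentioned in the abstract, although here it is treated as an ordinary action with a deterministic effect.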

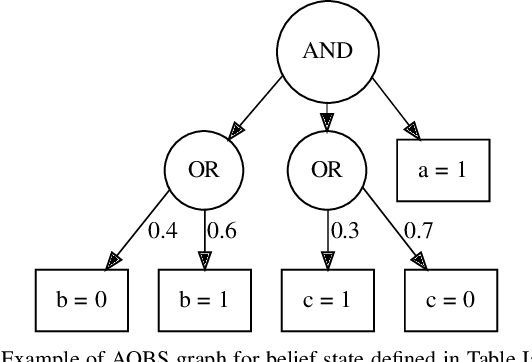

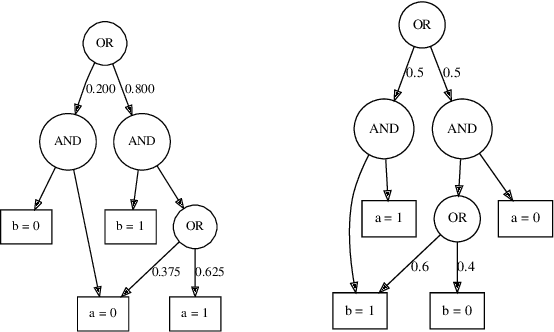

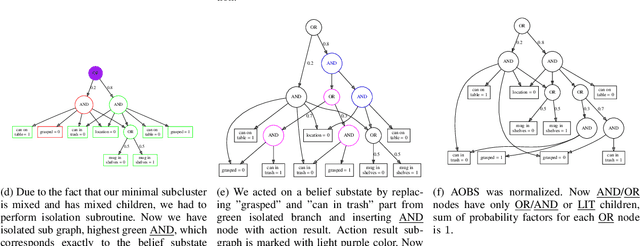

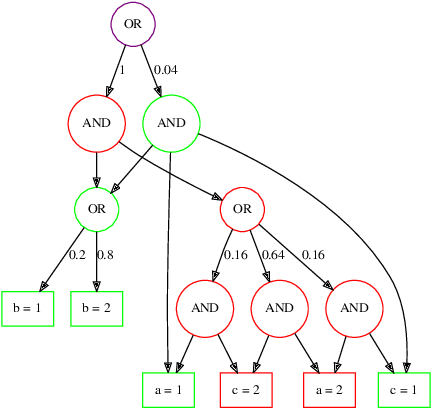

Compact Belief State Representation for Task Planning

Aug 21, 2020

Abstract: Task planning in probabilistic belief state domains allows generating complex and robust execution policies in those domains affected by state uncertainty. The performance of a task planner relies on the belief state representation. However, current belief state representations easily become intractable as the number of variables and execution time grow. To address this problem, we developed a novel belief state representation based on cartesian product and union operations over belief substates. These two operations and single variable assignment nodes form an And-Or directed acyclic graph of Belief State (AOBS). We show how to apply actions with probabilistic outcomes and measure the probability of conditions holding over a belief state. We evaluated AOBS performance in simulated forward state space exploration. We compared the size of AOBS with the size of Binary Decision Diagrams (BDD) that were previously used to represent belief state. We show that the AOBS representation is not only much more compact than a full belief state but it also scales better than BDD for most of the cases.
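The compactness argument in this abstract can be illustrated with a small sketch of an And-Or structure. This is one possible reading of the abstract, not the paper's data structure: `And` stands for a cartesian product of independent substates, `Or` for a weighted union of alternatives, and `Assign` for a single variable assignment. All names are hypothetical, the structure is treated as a tree (shared DAG nodes would be counted more than once), and `prob` assumes the queried variable appears in the belief state and that each variable occurs in at most one child of any `And` node.

```python
from dataclasses import dataclass
from typing import Tuple, Union


@dataclass(frozen=True)
class Assign:
    var: str
    value: object


@dataclass(frozen=True)
class Or:
    branches: Tuple[Tuple[float, "Node"], ...]  # (probability, child) pairs


@dataclass(frozen=True)
class And:
    children: Tuple["Node", ...]  # independent substates


Node = Union[Assign, Or, And]


def prob(node: Node, var: str, value: object) -> float:
    """Probability that var == value in the belief state rooted at node."""
    if isinstance(node, Assign):
        # Assignments to other variables do not constrain the condition.
        return 1.0 if node.var != var or node.value == value else 0.0
    if isinstance(node, Or):
        return sum(p * prob(child, var, value) for p, child in node.branches)
    result = 1.0
    for child in node.children:
        result *= prob(child, var, value)
    return result


def count_states(node: Node) -> int:
    """Number of explicit states in the equivalent flat belief state."""
    if isinstance(node, Assign):
        return 1
    if isinstance(node, Or):
        return sum(count_states(child) for _, child in node.branches)
    total = 1
    for child in node.children:
        total *= count_states(child)
    return total


def count_nodes(node: Node) -> int:
    """Number of nodes in the And-Or structure (tree view)."""
    if isinstance(node, Assign):
        return 1
    if isinstance(node, Or):
        return 1 + sum(count_nodes(child) for _, child in node.branches)
    return 1 + sum(count_nodes(child) for child in node.children)


# Ten independent binary variables, each true with probability 0.5.
belief = And(tuple(
    Or(((0.5, Assign(f"x{i}", True)), (0.5, Assign(f"x{i}", False))))
    for i in range(10)
))
```

For this example the flat belief state enumerates 1024 states, while the And-Or structure needs 31 nodes, and `prob(belief, "x3", True)` evaluates to 0.5.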
