Michelangelo Paci

Feature transforms for image data augmentation

Jan 24, 2022

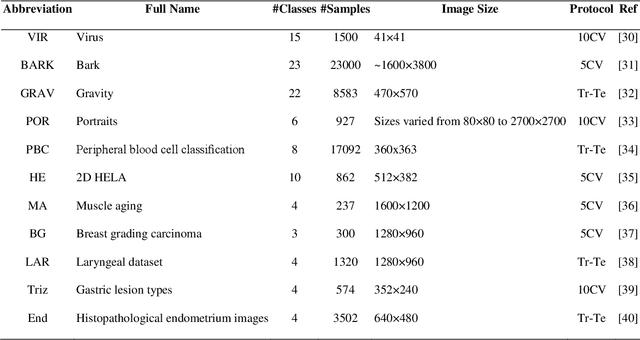

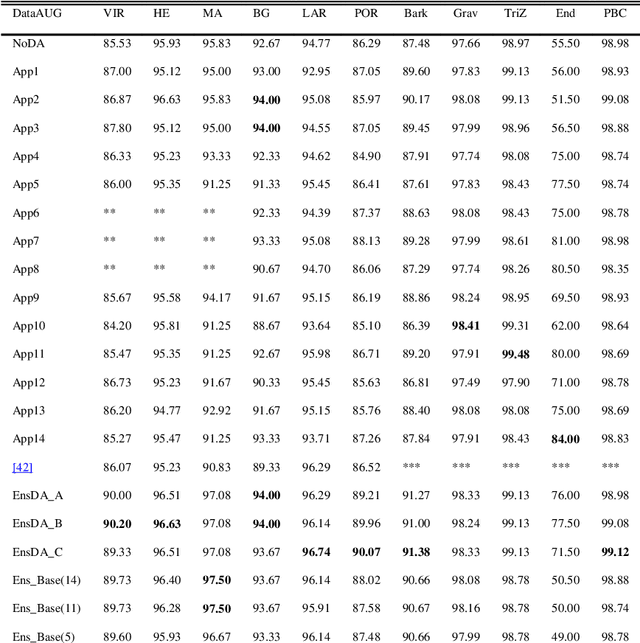

Abstract: A problem with Convolutional Neural Networks (CNNs) is that they require large datasets to obtain adequate robustness; on small datasets, they are prone to overfitting. Many methods have been proposed to overcome this shortcoming. In cases where additional samples cannot easily be collected, a common approach is to generate more data points from existing data using an augmentation technique. In image classification, many augmentation approaches utilize simple image manipulation algorithms. In this work, we build ensembles on the data level by adding images generated by combining fourteen augmentation approaches, three of which are proposed here for the first time. These novel methods are based on the Fourier Transform (FT), the Radon Transform (RT) and the Discrete Cosine Transform (DCT). Pretrained ResNet50 networks are finetuned on training sets that include images derived from each augmentation method. These networks and several fusions are evaluated and compared across eleven benchmarks. Results show that building ensembles on the data level by combining different data augmentation methods produces classifiers that are not only competitive with the state of the art but often surpass the best approaches reported in the literature.
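The abstract does not spell out how the transform-based augmentations work, so the following is only a minimal sketch of one plausible DCT-based variant, not the authors' method. It assumes the image is moved to the DCT domain, each coefficient is scaled by random multiplicative noise (leaving the DC term untouched so average brightness is roughly preserved), and the result is transformed back. The function name `dct_augment` and the parameter `noise_scale` are hypothetical.

```python
import numpy as np
from scipy.fft import dctn, idctn


def dct_augment(image, noise_scale=0.05, seed=None):
    """Return a perturbed copy of an 8-bit image (H x W or H x W x C).

    Assumption: augmentation = multiplicative Gaussian noise on the
    2-D DCT coefficients; this is an illustration, not the paper's recipe.
    """
    rng = np.random.default_rng(seed)
    img = np.asarray(image, dtype=np.float64)

    # Forward 2-D DCT over the spatial axes only (channels stay separate).
    coeffs = dctn(img, axes=(0, 1), norm="ortho")

    # Random scaling factor per coefficient, centred on 1.
    noise = rng.normal(1.0, noise_scale, size=coeffs.shape)
    noise[0, 0, ...] = 1.0  # keep the DC coefficient unchanged

    # Back to the pixel domain, then round and clip to the valid range.
    out = idctn(coeffs * noise, axes=(0, 1), norm="ortho")
    return np.clip(np.rint(out), 0, 255).astype(np.uint8)
```

With `noise_scale=0.0` the function reduces to a DCT round trip and returns the input image unchanged, which is a convenient sanity check before using larger perturbations.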

Comparison of different convolutional neural network activation functions and methods for building ensembles

Apr 02, 2021

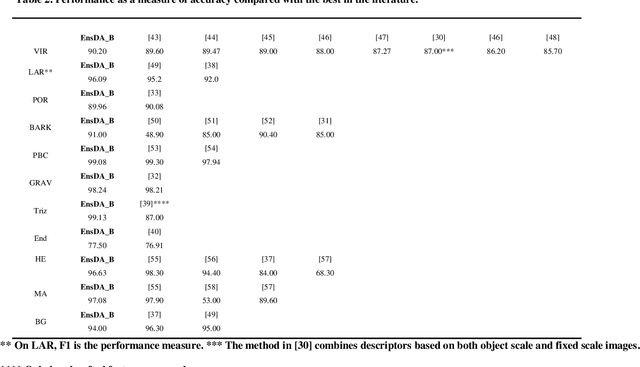

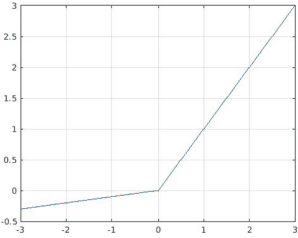

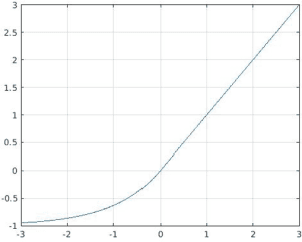

Abstract: Recently, much attention has been devoted to finding highly efficient and powerful activation functions for CNN layers. Because activation functions inject different nonlinearities between layers that affect performance, varying them is one method for building robust ensembles of CNNs. The objective of this study is to examine the performance of CNN ensembles made with different activation functions, including six new ones presented here: 2D Mexican ReLU, TanELU, MeLU+GaLU, Symmetric MeLU, Symmetric GaLU, and Flexible MeLU. The highest performing ensemble was built with CNNs having different activation layers that randomly replaced the standard ReLU. A comprehensive evaluation of the proposed approach was conducted across fifteen biomedical data sets representing various classification tasks. The proposed method was tested on two basic CNN architectures: Vgg16 and ResNet50. Results demonstrate the superior performance of this approach. The MATLAB source code for this study will be available at https://github.com/LorisNanni.
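The idea of randomly replacing ReLU layers to diversify ensemble members can be sketched as follows. This is an illustrative outline only: the pool contains three standard activations (ReLU, leaky ReLU, ELU) as stand-ins, since the definitions of the six new functions are not given in the abstract, and the helper names `sample_activation_plan` and `build_ensemble_plans` are hypothetical.

```python
import numpy as np

# Stand-in pool of standard activations (assumption: the paper's new
# functions such as MeLU or GaLU would be added here in a real setup).
ACTIVATIONS = {
    "relu": lambda x: np.maximum(x, 0.0),
    "leaky_relu": lambda x: np.where(x > 0, x, 0.01 * x),
    "elu": lambda x: np.where(x > 0, x, np.expm1(np.minimum(x, 0.0))),
}


def sample_activation_plan(n_layers, rng):
    """Pick one activation name at random for each activation layer."""
    names = sorted(ACTIVATIONS)
    return [names[i] for i in rng.integers(0, len(names), size=n_layers)]


def build_ensemble_plans(n_members, n_layers, seed=0):
    """Draw an independent activation plan for every ensemble member."""
    rng = np.random.default_rng(seed)
    return [sample_activation_plan(n_layers, rng) for _ in range(n_members)]
```

Each plan would then drive the construction of one network, with the activation at position `k` of the plan substituted for the `k`-th ReLU layer before fine-tuning.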

An Ensemble of Convolutional Neural Networks for Audio Classification

Jul 15, 2020

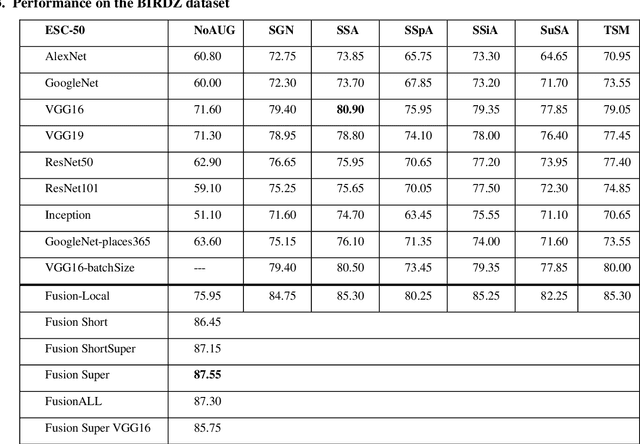

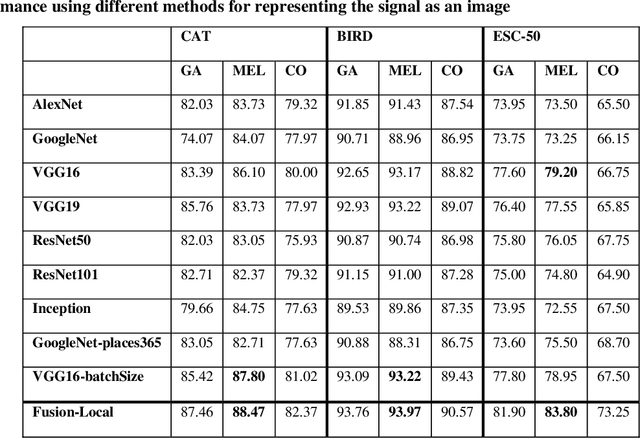

Abstract: In this paper, ensembles of classifiers that exploit several data augmentation techniques and four signal representations for training Convolutional Neural Networks (CNNs) for audio classification are presented and tested on three freely available audio classification datasets: i) bird calls, ii) cat sounds, and iii) the Environmental Sound Classification dataset. The best performing ensembles combining data augmentation techniques with different signal representations are compared and shown to outperform the best methods reported in the literature on these datasets. The approach proposed here obtains state-of-the-art results on the widely used ESC-50 dataset. To the best of our knowledge, this is the most extensive study investigating ensembles of CNNs for audio classification. Results demonstrate not only that CNNs can be trained for audio classification but also that their fusion using different techniques works better than the stand-alone classifiers.
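The abstract does not name the fusion rule, so the sketch below assumes the common sum rule: each classifier's scores are turned into class probabilities with a softmax, the probabilities are averaged across classifiers, and the class with the highest average wins. The function names are hypothetical.

```python
import numpy as np


def softmax(logits):
    """Row-wise softmax with the usual max-subtraction for stability."""
    z = logits - logits.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def sum_rule_fusion(logits_per_model):
    """Average class probabilities over models (assumed fusion rule).

    logits_per_model: list of arrays, each of shape (n_samples, n_classes).
    Returns the fused probabilities and the predicted class per sample.
    """
    probs = [softmax(np.asarray(l, dtype=np.float64)) for l in logits_per_model]
    fused = np.mean(probs, axis=0)
    return fused, fused.argmax(axis=1)
```

Averaging probabilities rather than raw scores keeps one over-confident network from dominating the ensemble when the models produce outputs on different scales.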

Data augmentation approaches for improving animal audio classification

Dec 16, 2019

Abstract: In this paper we present ensembles of classifiers for automated animal audio classification, exploiting different data augmentation techniques for training Convolutional Neural Networks (CNNs). The specific animal audio classification problems are i) birds and ii) cat sounds, whose datasets are freely available. We train five different CNNs on the original datasets and on their versions augmented by four augmentation protocols, working on the raw audio signals or their representations as spectrograms. We compared our best approaches with the state of the art, showing that we obtain the best recognition rate on the same datasets, without ad hoc parameter optimization. Our study shows that different CNNs can be trained for the purpose of animal audio classification and that their fusion works better than the stand-alone classifiers. To the best of our knowledge this is the largest study on data augmentation for CNNs in animal audio classification using the same set of classifiers and parameters. Our MATLAB code is available at https://github.com/LorisNanni.

Audiogmenter: a MATLAB Toolbox for Audio Data Augmentation

Dec 11, 2019

Abstract: Audio data augmentation is a key step in training deep neural networks for solving audio classification tasks. In this paper, we introduce Audiogmenter, a novel audio data augmentation library in MATLAB. We provide 15 different augmentation algorithms for raw audio data and 8 for spectrograms. We integrate the MATLAB built-in audio data augmenter with other methods that have proved effective in the literature. To the best of our knowledge, this is the largest MATLAB audio data augmentation library freely available. The toolbox and its documentation can be downloaded at https://github.com/LorisNanni/Audiogmenter
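To give a sense of what raw-audio augmentation involves, here is a minimal Python sketch of two generic techniques: additive noise at a target signal-to-noise ratio and a random circular time shift. It is not the Audiogmenter API (the toolbox is written in MATLAB, and the abstract does not list its 15 algorithms); the function names and parameters are hypothetical.

```python
import numpy as np


def add_noise(signal, snr_db, rng):
    """Add white Gaussian noise so the result has roughly the given SNR (dB)."""
    signal = np.asarray(signal, dtype=np.float64)
    signal_power = np.mean(signal ** 2)
    noise_power = signal_power / (10 ** (snr_db / 10))
    noise = rng.normal(0.0, np.sqrt(noise_power), size=signal.shape)
    return signal + noise


def time_shift(signal, max_shift, rng):
    """Circularly shift the signal by a random offset in [-max_shift, max_shift]."""
    shift = int(rng.integers(-max_shift, max_shift + 1))
    return np.roll(signal, shift)
```

A typical use would be to apply one or both functions to each training clip with a fresh random generator state, for example `time_shift(add_noise(x, 20, rng), 1600, rng)` for a 16 kHz clip shifted by up to 0.1 s.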
