Michel Jezequel

Efficient Hardware Implementation of Incremental Learning and Inference on Chip

Nov 18, 2019

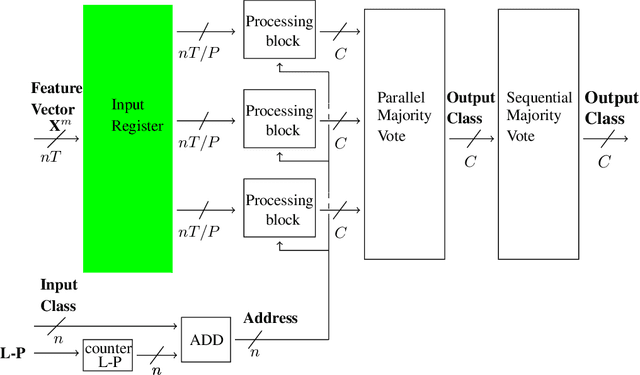

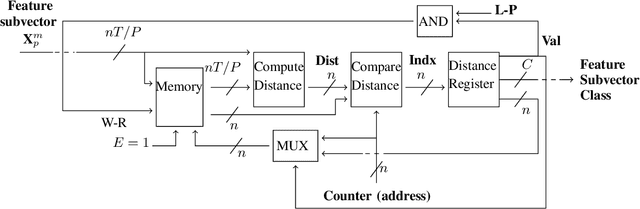

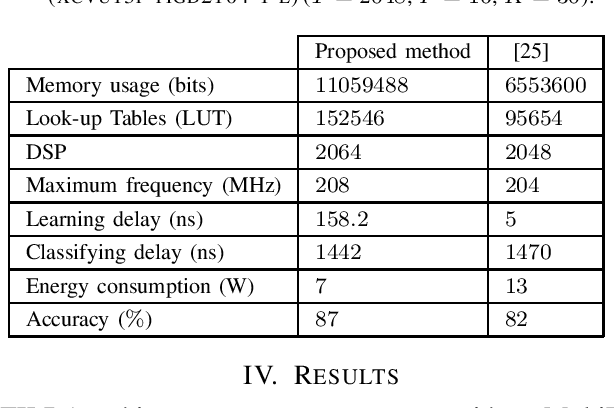

Abstract: In this paper, we tackle the problem of incrementally learning a classifier, one example at a time, directly on chip. To this end, we propose an efficient hardware implementation of a recently introduced incremental learning procedure that achieves state-of-the-art performance by combining transfer learning with majority votes and quantization techniques. The proposed design can accommodate both new examples and new classes directly on the chip. We detail the hardware implementation of the method (on an FPGA target) and show that it requires limited resources while providing a significant acceleration compared to using a CPU.

Transfer Incremental Learning using Data Augmentation

Oct 04, 2018

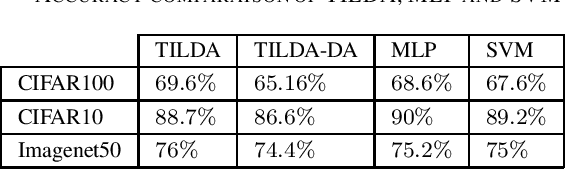

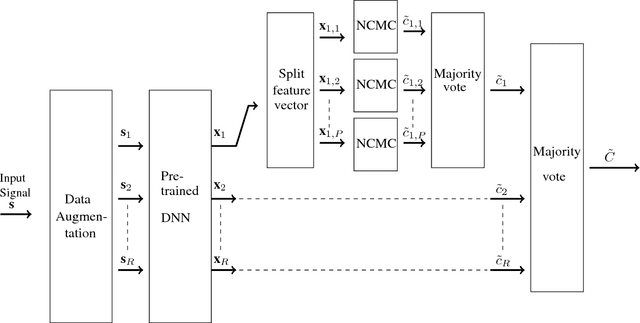

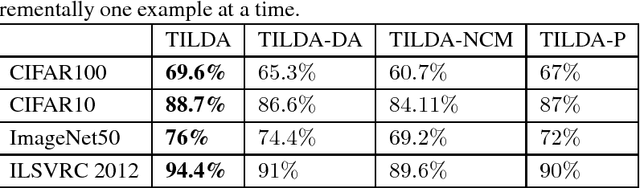

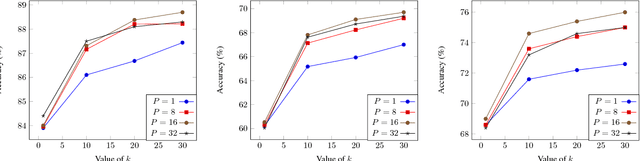

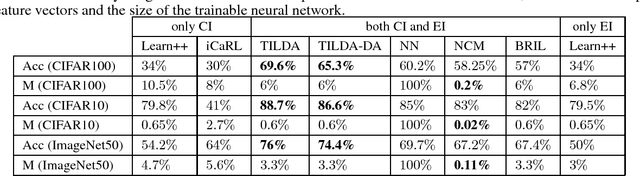

Abstract: Deep learning-based methods have reached state-of-the-art performance, relying on large quantities of available data and computational power. Such methods remain poorly suited to a major open machine learning problem: incrementally learning new classes and examples over time. Combining the outstanding performance of Deep Neural Networks (DNNs) with the flexibility of incremental learning techniques is a promising avenue of research. In this contribution, we introduce Transfer Incremental Learning using Data Augmentation (TILDA). TILDA is based on pre-trained DNNs used as feature extractors, robust selection of feature vectors in subspaces using a nearest-class-mean based technique, majority votes, and data augmentation at both the training and the prediction stages. Experiments on challenging vision datasets demonstrate that the proposed method enables low-complexity incremental learning while achieving significantly better accuracy than existing incremental counterparts.
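The ingredients named in this abstract (nearest-class-mean classification in feature subspaces, majority votes, and prediction over several augmented views) can be illustrated with a minimal sketch. This is not the authors' implementation: the class name `SubspaceNCM`, the equal-width split of the feature vector into subspaces, and the way votes are pooled are all assumptions made for illustration. Feature extraction by a pre-trained DNN is left out, so the inputs are assumed to be feature vectors already.

```python
from collections import Counter


class SubspaceNCM:
    """Hypothetical incremental nearest-class-mean classifier with voting."""

    def __init__(self, dim, n_subspaces):
        if dim % n_subspaces != 0:
            raise ValueError("dim must be divisible by n_subspaces")
        self.dim = dim
        self.n_subspaces = n_subspaces
        self.sub_dim = dim // n_subspaces  # assumption: equal-width subspaces
        self.sums = {}    # label -> running sum of feature vectors
        self.counts = {}  # label -> number of examples seen

    def learn(self, feature, label):
        """Add one example; an unseen label creates a new class on the fly."""
        if label not in self.sums:
            self.sums[label] = [0.0] * self.dim
            self.counts[label] = 0
        acc = self.sums[label]
        for i, x in enumerate(feature):
            acc[i] += x
        self.counts[label] += 1

    def _nearest(self, feature, s):
        """Label whose class mean is closest within subspace s."""
        lo, hi = s * self.sub_dim, (s + 1) * self.sub_dim
        best_label, best_dist = None, float("inf")
        for label, acc in self.sums.items():
            n = self.counts[label]
            dist = sum((feature[i] - acc[i] / n) ** 2 for i in range(lo, hi))
            if dist < best_dist:
                best_label, best_dist = label, dist
        return best_label

    def predict(self, features):
        """Majority vote over every subspace of every given feature vector.

        `features` is a list of vectors, e.g. one per augmented view of the
        same input (an assumption about how augmentation feeds the vote).
        """
        votes = Counter()
        for f in features:
            for s in range(self.n_subspaces):
                votes[self._nearest(f, s)] += 1
        return votes.most_common(1)[0][0]


clf = SubspaceNCM(dim=4, n_subspaces=2)
clf.learn([0.0, 0.1, 0.0, 0.2], "cat")
clf.learn([1.0, 0.9, 1.0, 1.1], "dog")
clf.learn([5.0, 5.0, 5.0, 5.0], "bird")  # a new class added after the others
pred = clf.predict([[0.9, 1.0, 1.2, 0.8], [1.1, 0.8, 0.9, 1.0]])
```

Because only a running sum and a count are stored per class, adding an example or a new class costs one pass over the feature vector and needs no retraining, which is the kind of low-complexity update the abstract refers to.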
