Michal Ptaszynski

Cyberbullying Detection for Low-resource Languages and Dialects: Review of the State of the Art

Aug 30, 2023

Abstract: The struggle of social media platforms to moderate content in a timely manner encourages users to abuse such platforms to spread vulgar or abusive language, which, when performed repeatedly, becomes cyberbullying: a social problem taking place in virtual environments, yet with real-world consequences, such as depression, withdrawal, or even suicide attempts of its victims. Systems for the automatic detection and mitigation of cyberbullying have been developed but, unfortunately, the vast majority of them are for the English language, with only a handful available for low-resource languages. To estimate the present state of research and recognize the needs for further development, in this paper we present a comprehensive systematic survey of studies done so far for automatic cyberbullying detection in low-resource languages. We analyzed all studies on this topic that were available. We investigated more than seventy published studies on automatic detection of cyberbullying or related language in low-resource languages and dialects that were published between around 2017 and January 2023. There are 23 low-resource languages and dialects covered by this paper, including Bangla, Hindi, Dravidian languages and others. In the survey, we identify some of the research gaps of previous studies, which include the lack of reliable definitions of cyberbullying and its relevant subcategories, and biases in the acquisition and annotation of data. Based on recognizing those research gaps, we provide some suggestions for improving the general research conduct in cyberbullying detection, with a primary focus on low-resource languages. Based on those proposed suggestions, we collect and release a cyberbullying dataset in the Chittagonian dialect of Bangla and propose a number of initial ML solutions trained on that dataset. In addition, the pre-trained transformer-based BanglaBERT model was also attempted.

* 52 pages

Vulgar Remarks Detection in Chittagonian Dialect of Bangla

Aug 29, 2023

Abstract: The negative effects of online bullying and harassment are increasing with Internet popularity, especially in social media. One solution is using natural language processing (NLP) and machine learning (ML) methods for the automatic detection of harmful remarks, but these methods are limited in low-resource languages like the Chittagonian dialect of Bangla. This study focuses on detecting vulgar remarks in social media using supervised ML and deep learning algorithms. Logistic Regression achieved promising accuracy (0.91), while a simple RNN with Word2vec and fastText had lower accuracy (0.84-0.90), highlighting the issue that NN algorithms require more data.

* 5 pages

Improving Polish to English Neural Machine Translation with Transfer Learning: Effects of Data Volume and Language Similarity

Jun 01, 2023

Abstract: This paper investigates the impact of data volume and the use of similar languages on transfer learning in a machine translation task. We find that having more data generally leads to better performance, as it allows the model to learn more patterns and generalizations from the data. However, related languages can also be particularly effective when there is limited data available for a specific language pair, as the model can leverage the similarities between the languages to improve performance. To demonstrate, we fine-tune the mBART model for a Polish-English translation task using the OPUS-100 dataset. We evaluate the performance of the model under various transfer learning configurations, including different transfer source languages and different shot levels for Polish, and report the results. Our experiments show that a combination of related languages and larger amounts of data outperforms the model trained on related languages or larger amounts of data alone. Additionally, we show the importance of related languages in zero-shot and few-shot configurations.

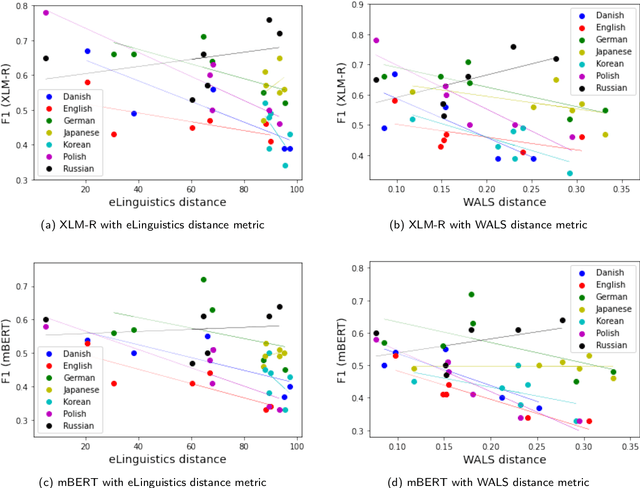

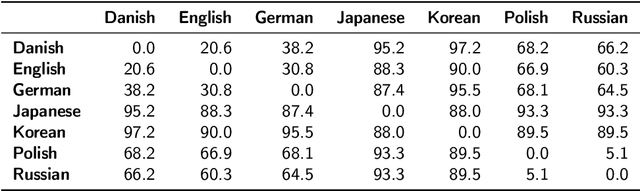

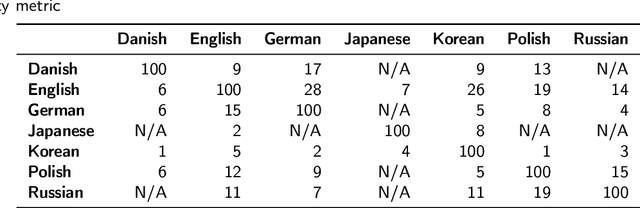

Zero-shot cross-lingual transfer language selection using linguistic similarity

Jan 31, 2023

Abstract: We study the selection of transfer languages for different Natural Language Processing tasks, specifically sentiment analysis, named entity recognition and dependency parsing. In order to select an optimal transfer language, we propose to utilize different linguistic similarity metrics to measure the distance between languages and make the choice of transfer language based on this information instead of relying on intuition. We demonstrate that linguistic similarity correlates with cross-lingual transfer performance for all of the proposed tasks. We also show that there is a statistically significant difference in choosing the optimal language as the transfer source instead of English. This allows us to select a more suitable transfer language which can be used to better leverage knowledge from high-resource languages in order to improve the performance of language applications lacking data. For the study, we used datasets from eight different languages from three language families.
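The claim that linguistic similarity correlates with transfer performance can be illustrated with a small correlation check. The abstract does not say which correlation statistic was used, so Pearson's r is an assumption here, and the numbers below are made-up placeholders, not results from the paper.

```python
from math import sqrt
from typing import Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equally long sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / sqrt(var_x * var_y)


# Hypothetical placeholder values: one entry per candidate transfer language.
similarity = [0.9, 0.7, 0.5, 0.2]          # similarity to the target language
transfer_score = [0.80, 0.72, 0.65, 0.50]  # zero-shot score on the target

r = pearson(similarity, transfer_score)
print(f"Pearson r = {r:.3f}")
```

A value of r close to 1 on real measurements would support choosing the transfer language by similarity rather than defaulting to English.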

Adapting Multilingual Speech Representation Model for a New, Underresourced Language through Multilingual Fine-tuning and Continued Pretraining

Jan 18, 2023

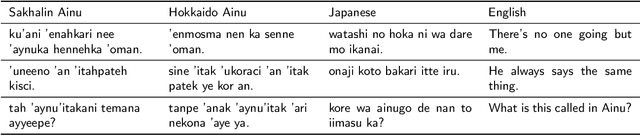

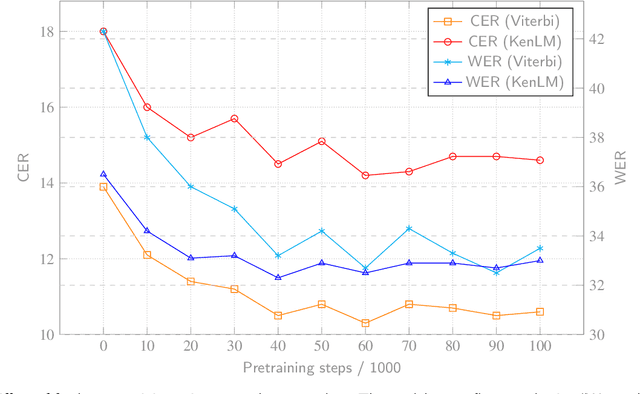

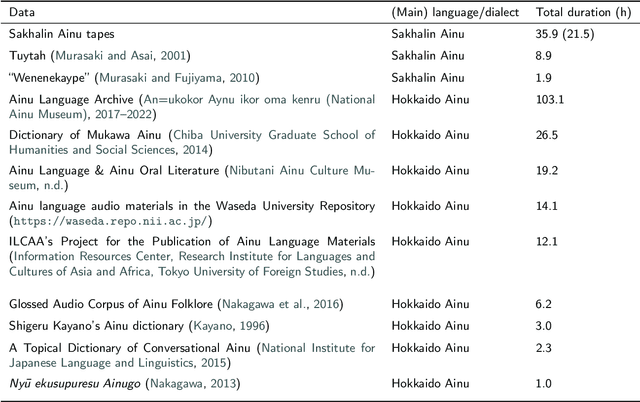

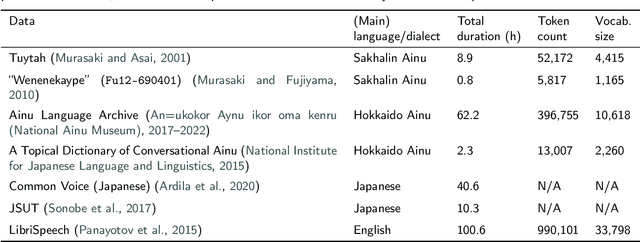

Abstract: In recent years, neural models learned through self-supervised pretraining on large-scale multilingual text or speech data have exhibited promising results for underresourced languages, especially when a relatively large amount of data from related language(s) is available. While the technology has a potential for facilitating tasks carried out in language documentation projects, such as speech transcription, pretraining a multilingual model from scratch for every new language would be highly impractical. We investigate the possibility for adapting an existing multilingual wav2vec 2.0 model for a new language, focusing on actual fieldwork data from a critically endangered tongue: Ainu. Specifically, we (i) examine the feasibility of leveraging data from similar languages also in fine-tuning; (ii) verify whether the model's performance can be improved by further pretraining on target language data. Our results show that continued pretraining is the most effective method to adapt a wav2vec 2.0 model for a new language and leads to considerable reduction in error rates. Furthermore, we find that if a model pretrained on a related speech variety or an unrelated language with similar phonological characteristics is available, multilingual fine-tuning using additional data from that language can have positive impact on speech recognition performance when there is very little labeled data in the target language.

* 14 pages

Can You Fool AI by Doing a 180? – A Case Study on Authorship Analysis of Texts by Arata Osada

Jul 19, 2022

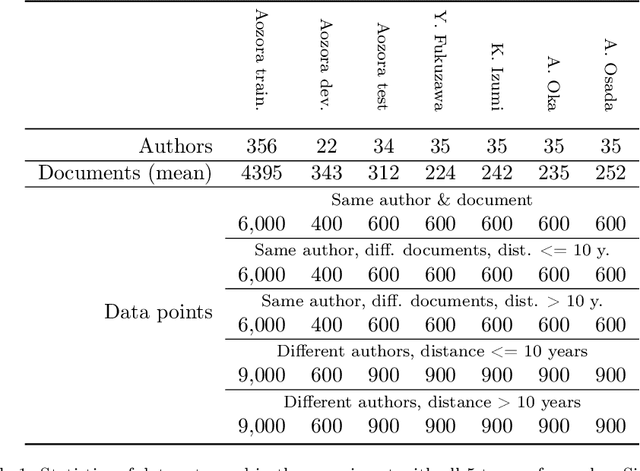

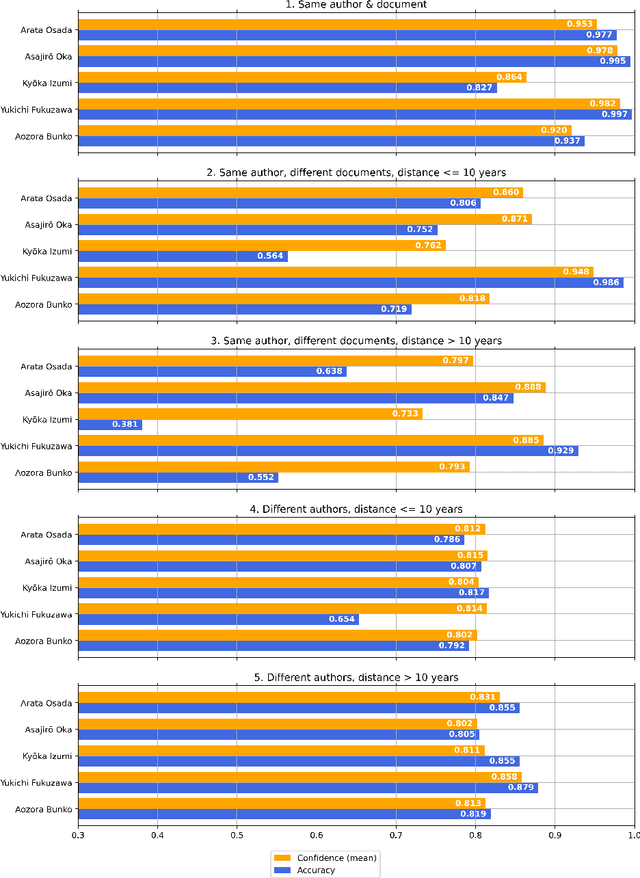

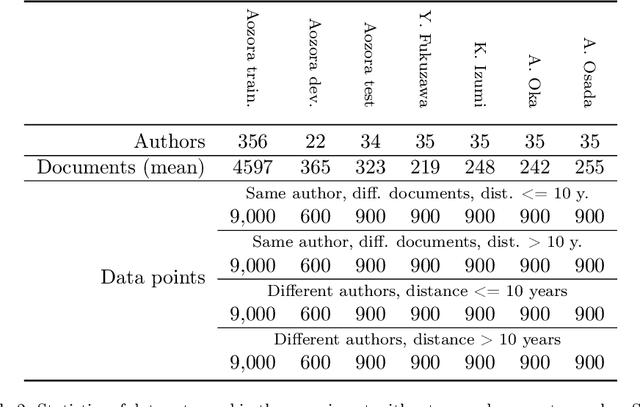

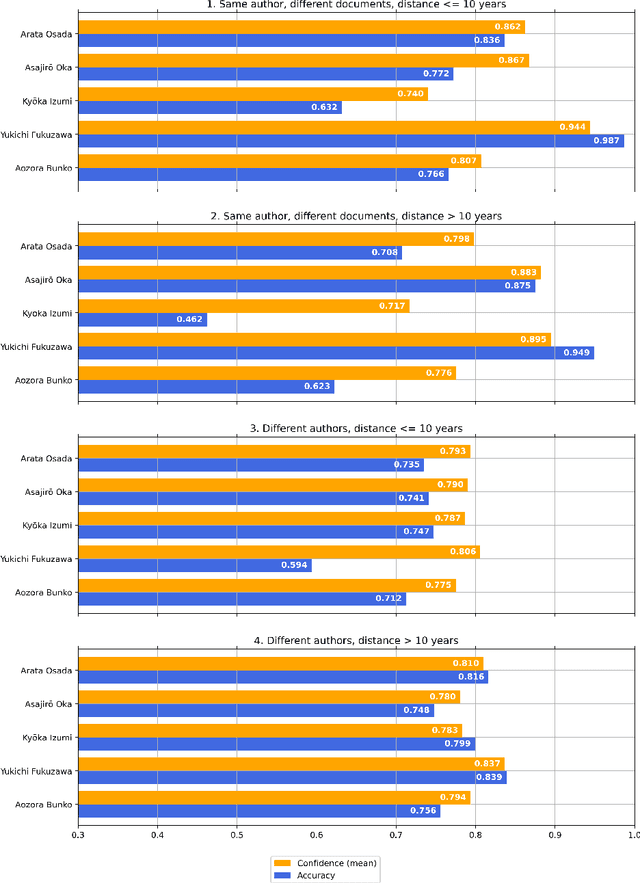

Abstract: This paper is our attempt at answering a twofold question covering the areas of ethics and authorship analysis. Firstly, since the methods used for performing authorship analysis imply that an author can be recognized by the content he or she creates, we were interested in finding out whether it would be possible for an author identification system to correctly attribute works to authors if in the course of years they have undergone a major psychological transition. Secondly, and from the point of view of the evolution of an author's ethical values, we checked what it would mean if the authorship attribution system encounters difficulties in detecting single authorship. We set out to answer those questions through performing a binary authorship analysis task using a text classifier based on a pre-trained transformer model and a baseline method relying on conventional similarity metrics. For the test set, we chose works of Arata Osada, a Japanese educator and specialist in the history of education, with half of them being books written before World War II and the other half in the 1950s, in between which he underwent a transformation in terms of political opinions. As a result, we were able to confirm that in the case of texts authored by Arata Osada in a time span of more than 10 years, while the classification accuracy drops by a large margin and is substantially lower than for texts by other non-fiction writers, confidence scores of the predictions remain at a similar level as in the case of a shorter time span. This indicates that the classifier was in many instances tricked into deciding that texts written over a time span of multiple years were actually written by two different people, which in turn leads us to believe that such a change can affect authorship analysis, and that historical events have great impact on a person's ethical outlook as expressed in their writings.

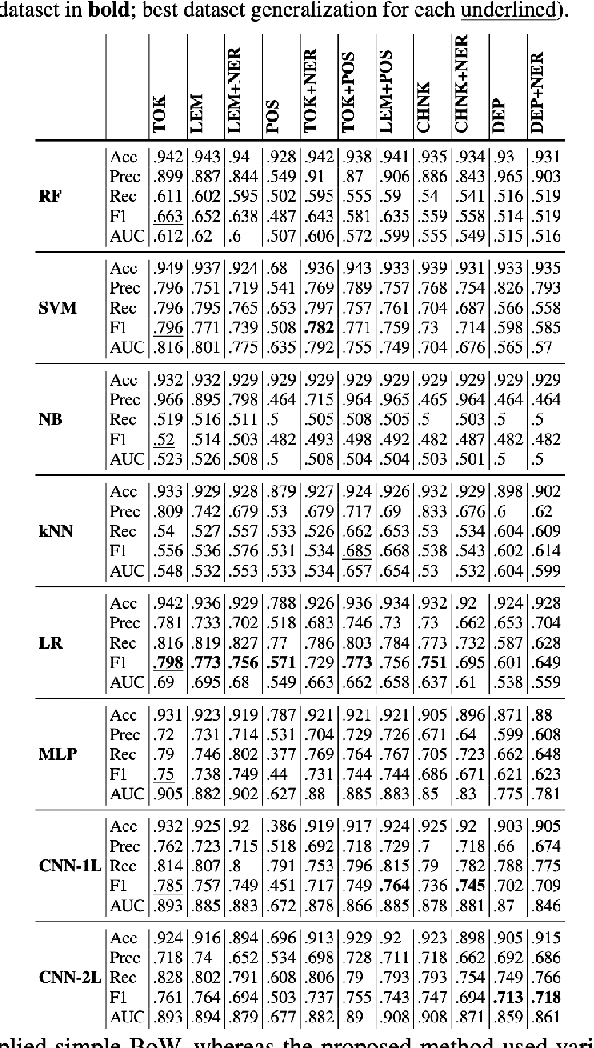

Comparing Performance of Different Linguistically-Backed Word Embeddings for Cyberbullying Detection

Jun 04, 2022

Abstract: In most cases, word embeddings are learned only from raw tokens or, in some cases, lemmas. This includes pre-trained language models like BERT. To investigate the potential of capturing deeper relations between lexical items and structures and to filter out redundant information, we propose to preserve the morphological, syntactic and other types of linguistic information by combining them with the raw tokens or lemmas. This means, for example, including parts-of-speech or dependency information within the used lexical features. The word embeddings can then be trained on the combinations instead of just raw tokens. It is also possible to later apply this method to the pre-training of huge language models and possibly enhance their performance. This would aid in tackling problems which are more sophisticated from the point of view of linguistic representation, such as detection of cyberbullying.
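The idea of combining linguistic information with raw tokens can be sketched as a small preprocessing step. This is a minimal illustration, not the authors' pipeline: the `combine_features` helper, the underscore separator, and the hand-assigned part-of-speech tags are all assumptions, and in practice the tags would come from a tagger or parser.

```python
from typing import List, Tuple


def combine_features(tagged: List[Tuple[str, str]], sep: str = "_") -> List[str]:
    """Join each token with its linguistic tag into a single feature string."""
    return [f"{token.lower()}{sep}{tag}" for token, tag in tagged]


# Hand-tagged toy sentence (hypothetical input, tags assigned manually).
sentence = [("The", "DET"), ("cat", "NOUN"), ("sleeps", "VERB")]

features = combine_features(sentence)
print(features)
```

The resulting strings can be fed to any embedding trainer in place of raw tokens, so that the same surface form with different tags receives different vectors.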

Exploring the Potential of Feature Density in Estimating Machine Learning Classifier Performance with Application to Cyberbullying Detection

Jun 04, 2022

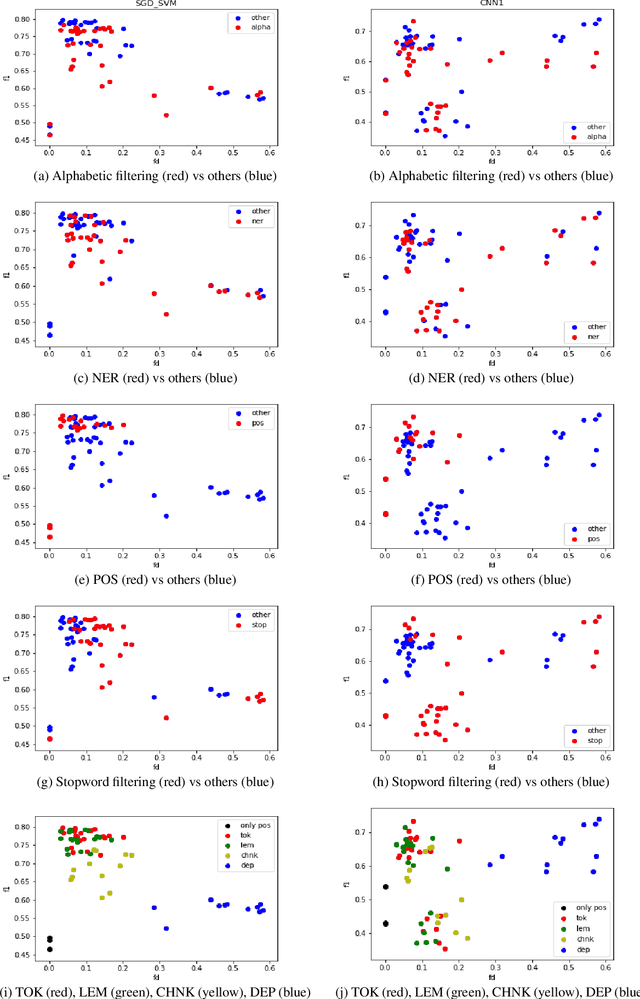

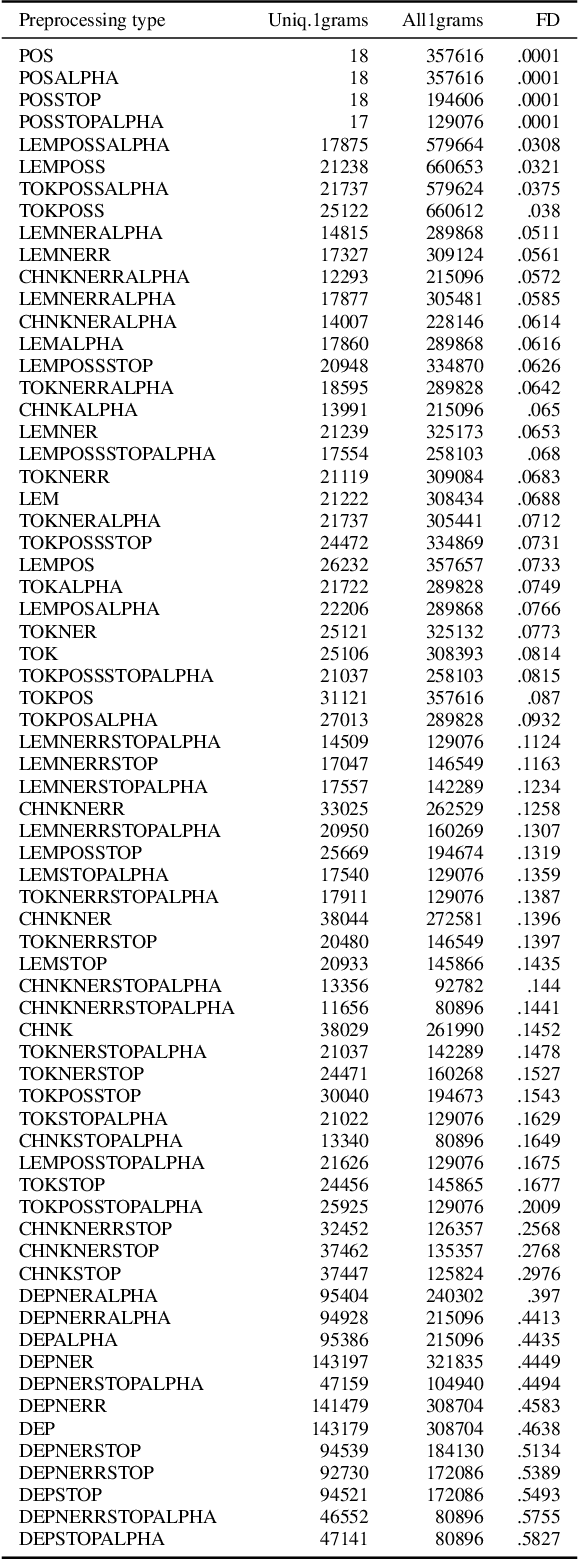

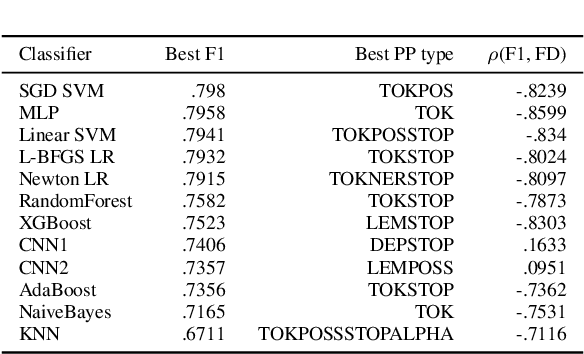

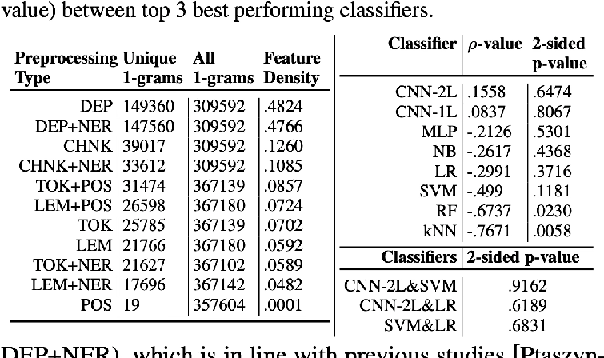

Abstract: In this research, we analyze the potential of Feature Density (FD) as a way to comparatively estimate machine learning (ML) classifier performance prior to training. The goal of the study is to aid in solving the problem of resource-intensive training of ML models, which is becoming a serious issue due to continuously increasing dataset sizes and the ever-rising popularity of Deep Neural Networks (DNN). The issue of constantly increasing demands for more powerful computational resources is also affecting the environment, as training large-scale ML models is causing alarmingly growing amounts of CO2 emissions. Our approach is to optimize the resource-intensive training of ML models for Natural Language Processing to reduce the number of required experiment iterations. We expand on previous attempts at improving classifier training efficiency with FD while also providing an insight into the effectiveness of various linguistically-backed feature preprocessing methods for dialog classification, specifically cyberbullying detection.

* arXiv admin note: substantial text overlap with arXiv:2111.01689
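The abstract above does not define Feature Density. As a rough sketch, assume FD is the number of unique features divided by the total number of feature occurrences in a dataset. That definition, the `feature_density` helper, and the toy corpora are assumptions for illustration only.

```python
from typing import Iterable, List


def feature_density(documents: Iterable[List[str]]) -> float:
    """Unique features divided by total feature occurrences (assumed definition)."""
    total = 0
    unique = set()
    for features in documents:
        total += len(features)
        unique.update(features)
    if total == 0:
        raise ValueError("corpus contains no features")
    return len(unique) / total


# The same toy corpus under two preprocessing variants (hypothetical data).
tokens = [["the", "cat", "sat"], ["the", "cat", "ran"]]
lemmas = [["the", "cat", "sit"], ["the", "cat", "sit"]]

fd_tokens = feature_density(tokens)
fd_lemmas = feature_density(lemmas)
print(f"tokens: {fd_tokens:.3f}, lemmas: {fd_lemmas:.3f}")
```

Because the score needs only one pass over the preprocessed data, it can be computed for every preprocessing variant before any classifier is trained.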

Initial Study into Application of Feature Density and Linguistically-backed Embedding to Improve Machine Learning-based Cyberbullying Detection

Jun 04, 2022

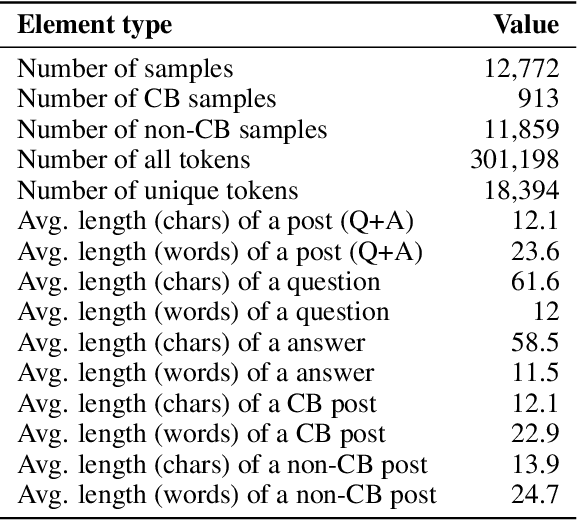

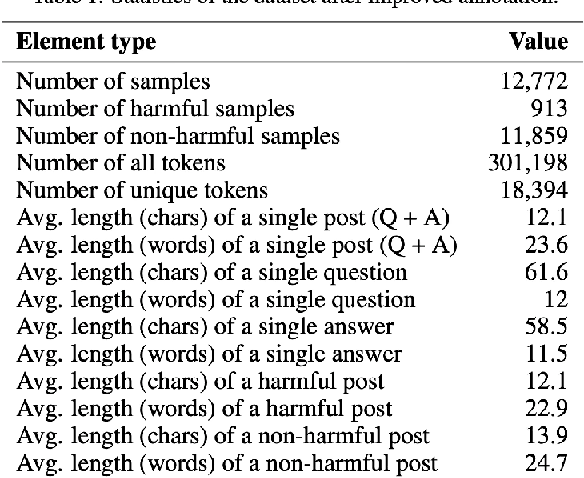

Abstract: In this research, we study the change in the performance of machine learning (ML) classifiers when various linguistic preprocessing methods of a dataset were used, with a specific focus on linguistically-backed embeddings in Convolutional Neural Networks (CNN). Moreover, we study the concept of Feature Density and confirm its potential to comparatively predict the performance of ML classifiers, including CNN. The research was conducted on a Formspring dataset provided in a Kaggle competition on automatic cyberbullying detection. The dataset was re-annotated by objective experts (psychologists), as the importance of professional annotation in cyberbullying research has been indicated multiple times. The study confirmed the effectiveness of Neural Networks in cyberbullying detection and the correlation between classifier performance and Feature Density while also proposing a new approach of training various linguistically-backed embeddings for Convolutional Neural Networks.

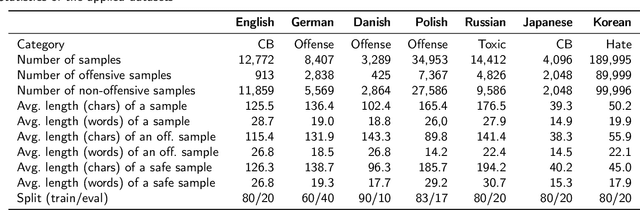

Transfer Language Selection for Zero-Shot Cross-Lingual Abusive Language Detection

Jun 02, 2022

Abstract: We study the selection of transfer languages for automatic abusive language detection. Instead of preparing a dataset for every language, we demonstrate the effectiveness of cross-lingual transfer learning for zero-shot abusive language detection. This way we can use existing data from higher-resource languages to build better detection systems for low-resource languages. Our datasets are from seven different languages from three language families. We measure the distance between the languages using several language similarity measures, especially by quantifying the World Atlas of Language Structures. We show that there is a correlation between linguistic similarity and classifier performance. This discovery allows us to choose an optimal transfer language for zero-shot abusive language detection.
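Choosing a transfer language by typological distance can be sketched as a nearest-neighbour lookup over feature vectors. The abstract does not specify how the World Atlas of Language Structures was quantified, so the binary encoding, the normalized Hamming distance, and the placeholder languages below are assumptions, not real WALS values.

```python
from typing import Dict, List, Tuple


def hamming_distance(a: List[int], b: List[int]) -> float:
    """Fraction of typological features on which two languages differ."""
    if len(a) != len(b) or not a:
        raise ValueError("feature vectors must be non-empty and equally long")
    return sum(x != y for x, y in zip(a, b)) / len(a)


def rank_transfer_languages(
    target: List[int], candidates: Dict[str, List[int]]
) -> List[Tuple[str, float]]:
    """Sort candidate source languages from closest to farthest."""
    scored = [(name, hamming_distance(target, vec)) for name, vec in candidates.items()]
    return sorted(scored, key=lambda item: item[1])


# Toy binary feature vectors for placeholder languages (hypothetical data).
target_language = [1, 0, 1, 1, 0, 0]
candidates = {
    "lang_a": [1, 0, 1, 0, 0, 0],
    "lang_b": [0, 1, 0, 1, 1, 0],
    "lang_c": [1, 1, 0, 1, 0, 0],
}

ranking = rank_transfer_languages(target_language, candidates)
best_language, best_distance = ranking[0]
print(best_language, round(best_distance, 3))
```

The top-ranked candidate would then serve as the source language whose labeled data trains the zero-shot classifier.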
