Michal Lukáč

ReGroup: Recursive Neural Networks for Hierarchical Grouping of Vector Graphic Primitives

Nov 23, 2021

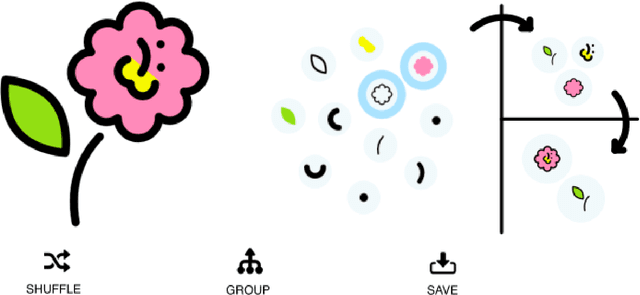

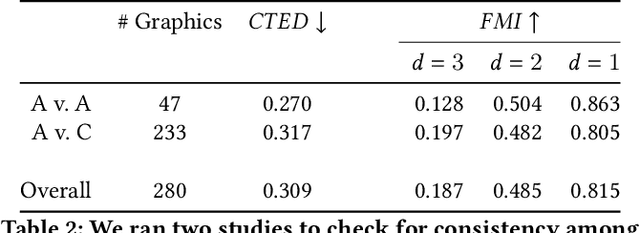

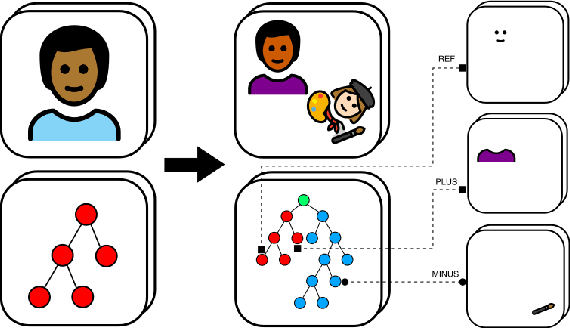

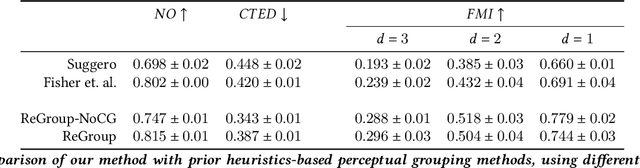

Abstract: Selection functionality is as fundamental to vector graphics as it is to raster data. But vector selection is quite different: instead of pixel-level labeling, we make a binary decision to include or exclude each vector primitive. In the absence of intelligible metadata, this becomes a perceptual grouping problem. Previous approaches to such problems have relied on heuristics derived from empirical principles like Gestalt Theory, but since these principles are ill-defined and subjective, the heuristics often result in ambiguity. Here we take a data-centric approach to the problem. By exploiting the recursive nature of perceptual grouping, we interpret the task as constructing a hierarchy over the primitives of a vector graphic, which is amenable to learning with recursive neural networks from few human annotations. We verify this by building a dataset of these hierarchies on which we train a hierarchical grouping network. We then demonstrate how this can underpin a prototype selection tool.
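To illustrate what "constructing a hierarchy over the primitives" can look like, here is a minimal sketch of greedy bottom-up grouping. It is not the network from the paper: `merge_score` is a hypothetical hand-written stand-in for a learned scorer, and primitives are assumed to be described only by axis-aligned bounding boxes `(x0, y0, x1, y1)`.

```python
from itertools import combinations


def bbox_union(a, b):
    """Smallest axis-aligned box (x0, y0, x1, y1) containing both boxes."""
    return (min(a[0], b[0]), min(a[1], b[1]), max(a[2], b[2]), max(a[3], b[3]))


def area(box):
    return (box[2] - box[0]) * (box[3] - box[1])


def merge_score(a, b):
    # Hypothetical stand-in for a learned scorer (assumption, not from the
    # paper): prefer merges whose combined bounding box is small.
    return -area(bbox_union(a, b))


def build_hierarchy(primitives, score=merge_score):
    """Greedily merge the best-scoring pair until a single tree remains.

    `primitives` maps a primitive name to its bounding box. The result is a
    nested tuple of names; every internal node is a candidate group.
    """
    nodes = list(primitives.items())  # (subtree, bounding box) pairs
    while len(nodes) > 1:
        i, j = max(
            combinations(range(len(nodes)), 2),
            key=lambda p: score(nodes[p[0]][1], nodes[p[1]][1]),
        )
        (tree_a, box_a), (tree_b, box_b) = nodes[i], nodes[j]
        merged = ((tree_a, tree_b), bbox_union(box_a, box_b))
        nodes = [n for k, n in enumerate(nodes) if k not in (i, j)] + [merged]
    return nodes[0][0]


def leaves(tree):
    """List the primitive names under a node, i.e. one selectable group."""
    if isinstance(tree, str):
        return [tree]
    return [name for child in tree for name in leaves(child)]


shapes = {
    "eye_l": (0, 0, 1, 1),
    "eye_r": (2, 0, 3, 1),
    "wheel": (10, 10, 12, 12),
}
tree = build_hierarchy(shapes)
```

In a selection tool built on such a tree, selecting a primitive could offer each ancestor node as a progressively larger group; in this toy input the two eyes are grouped before the distant wheel joins them.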

STALP: Style Transfer with Auxiliary Limited Pairing

Oct 20, 2021

Abstract: We present an approach to example-based stylization of images that uses a single pair of a source image and its stylized counterpart. We demonstrate how to train an image translation network that can perform real-time, semantically meaningful style transfer to a set of target images whose content is similar to that of the source image. A key added value of our approach is that it also considers the consistency of target images during training. Although the target images have no stylized counterparts, we constrain the translation to keep the statistics of neural responses compatible with those extracted from the stylized source. In contrast to concurrent techniques that use a similar input, our approach better preserves important visual characteristics of the source style and can deliver temporally stable results without the need to explicitly handle temporal consistency. We demonstrate its practical utility on various applications including video stylization and style transfer to panoramas, faces, and 3D models.
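The abstract does not say which statistics of neural responses are matched. As an illustration only, the sketch below assumes Gram matrices of feature channels, a common choice in neural style transfer; `gram_matrix` and `statistics_loss` are hypothetical names, and plain lists stand in for real network activations.

```python
def gram_matrix(features):
    """Channel-by-channel correlations of a feature map.

    `features` is a list of C channels, each a flat list of N spatial
    responses. The result is a C x C matrix that ignores where in the
    image a response occurred.
    """
    n = len(features[0])
    return [
        [sum(a * b for a, b in zip(ci, cj)) / n for cj in features]
        for ci in features
    ]


def statistics_loss(target_features, style_features):
    """Mean squared difference between the two Gram matrices."""
    g_target = gram_matrix(target_features)
    g_style = gram_matrix(style_features)
    c = len(g_target)
    total = sum(
        (g_target[i][j] - g_style[i][j]) ** 2
        for i in range(c)
        for j in range(c)
    )
    return total / (c * c)


# Two channels with four spatial positions each (toy values).
stylized_source = [[1.0, 2.0, 3.0, 4.0], [0.0, 1.0, 0.0, 1.0]]
# Same responses at different positions: the statistics are unchanged.
rearranged = [[3.0, 1.0, 4.0, 2.0], [0.0, 0.0, 1.0, 1.0]]
# Different responses: the statistics differ.
flat = [[1.0, 1.0, 1.0, 1.0], [0.0, 0.0, 0.0, 0.0]]
```

Because such a loss compares statistics rather than pixels, it can be evaluated on target images that have no stylized counterpart, which is the situation the abstract describes.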

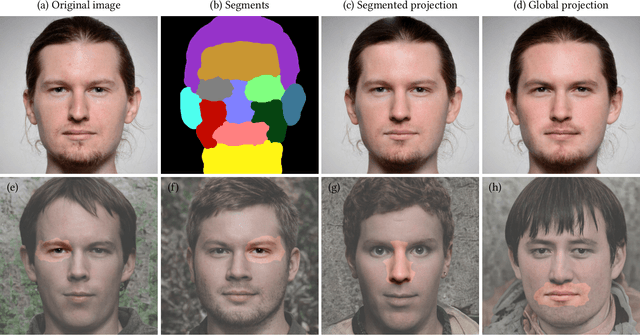

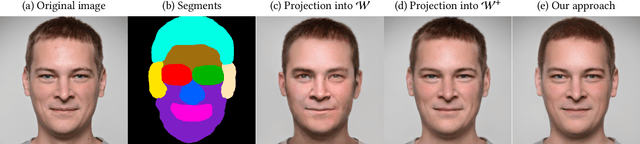

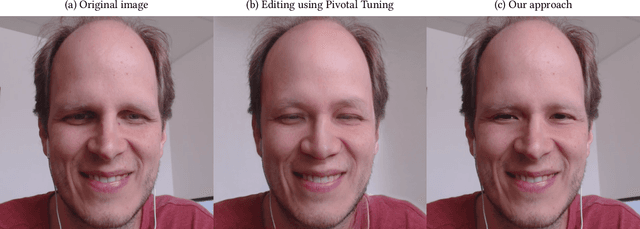

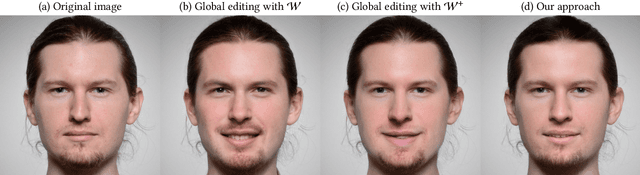

Real Image Inversion via Segments

Oct 12, 2021

Abstract: In this short report, we present a simple yet effective approach to editing real images via generative adversarial networks (GANs). Unlike previous techniques, which treat every editing task as an operation that affects pixel values across the entire image, our approach cuts the image up into a set of smaller segments. For these segments, the corresponding latent codes of a generative network can be estimated with greater accuracy due to the lower number of constraints. When the user alters the codes, the content of the image is manipulated locally while the rest of it remains unaffected. Thanks to this property, the final edited image better retains the original structures and thus preserves a natural look.
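The locality property described above can be sketched without a generative network. In the toy example below, square grid tiles are an assumption (the abstract does not specify the shape of the segments), and the `edit` callback is a hypothetical placeholder for "estimate the segment's latent code, alter it, and regenerate the segment".

```python
def split_into_tiles(image, tile):
    """Map (row, col) of each tile's top-left corner to that tile's pixels.

    `image` is a 2D list whose height and width are multiples of `tile`.
    """
    height, width = len(image), len(image[0])
    return {
        (r, c): [row[c:c + tile] for row in image[r:r + tile]]
        for r in range(0, height, tile)
        for c in range(0, width, tile)
    }


def assemble(tiles, height, width):
    """Paste the tiles back into a full image."""
    image = [[None] * width for _ in range(height)]
    for (r, c), pixels in tiles.items():
        for dr, row in enumerate(pixels):
            image[r + dr][c:c + len(row)] = row
    return image


def edit_segment(image, tile, key, edit):
    """Apply `edit` to one tile only; all other tiles are copied unchanged."""
    tiles = split_into_tiles(image, tile)
    tiles[key] = edit(tiles[key])
    return assemble(tiles, len(image), len(image[0]))


def brighten(pixels):
    # Placeholder edit (assumption): stands in for regenerating the
    # segment from an altered latent code.
    return [[value + 100 for value in row] for row in pixels]


image = [[4 * r + c for c in range(4)] for r in range(4)]
edited = edit_segment(image, tile=2, key=(0, 2), edit=brighten)
```

Only the top-right 2x2 tile of `edited` differs from `image`; every other pixel is identical, mirroring the claim that the rest of the image remains unaffected.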
