Michael Schober

Fast and Robust Shortest Paths on Manifolds Learned from Data

Jan 22, 2019

Abstract: We propose a fast, simple and robust algorithm for computing shortest paths and distances on Riemannian manifolds learned from data. This amounts to solving a system of ordinary differential equations (ODEs) subject to boundary conditions. Standard solvers perform poorly here because they require well-behaved Jacobians of the ODE, and manifolds learned from data usually imply unstable and ill-conditioned Jacobians. Instead, we propose a fixed-point iteration scheme for solving the ODE that avoids Jacobians. This enhances the stability of the solver while reducing the computational cost. In experiments involving both Riemannian metric learning and deep generative models, we demonstrate significant improvements in speed and stability over both general-purpose state-of-the-art solvers and specialized solvers.
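
To illustrate what a Jacobian-free fixed-point scheme for a boundary value problem can look like, here is a minimal sketch. It is not the algorithm from the paper: it assumes a scalar problem c'' = f(t, c) on [0, 1] (the geodesic ODE also depends on c'), uses a plain finite-difference grid, and the function name `solve_bvp_fixed_point` and its parameters are hypothetical. Each sweep evaluates f only at the previous iterate and then solves a fixed tridiagonal system, so no derivative of f is ever needed.

```python
def solve_bvp_fixed_point(f, a, b, n=50, iters=100, tol=1e-12):
    """Solve c'' = f(t, c) on [0, 1] with c(0) = a, c(1) = b.

    Hypothetical illustration: the right-hand side is frozen at the
    previous iterate, so only evaluations of f are needed (no Jacobian).
    """
    h = 1.0 / n
    ts = [i * h for i in range(n + 1)]
    # Initial guess: the straight line between the boundary values.
    c = [a + (b - a) * t for t in ts]
    m = n - 1  # number of interior grid points
    for _ in range(iters):
        # Right-hand side of c[i-1] - 2 c[i] + c[i+1] = h^2 f(t_i, c_i),
        # with the known boundary values moved to the right.
        rhs = [h * h * f(ts[i], c[i]) for i in range(1, n)]
        rhs[0] -= a
        rhs[-1] -= b
        # Thomas algorithm for the constant matrix tridiag(1, -2, 1).
        cp = [0.0] * m
        dp = [0.0] * m
        cp[0] = 1.0 / -2.0
        dp[0] = rhs[0] / -2.0
        for i in range(1, m):
            denom = -2.0 - cp[i - 1]
            cp[i] = 1.0 / denom
            dp[i] = (rhs[i] - dp[i - 1]) / denom
        x = [0.0] * m
        x[-1] = dp[-1]
        for i in range(m - 2, -1, -1):
            x[i] = dp[i] - cp[i] * x[i + 1]
        new = [a] + x + [b]
        change = max(abs(u - v) for u, v in zip(new, c))
        c = new
        if change < tol:
            break
    return ts, c
```

For example, `solve_bvp_fixed_point(lambda t, c: -c, 0.0, 1.0)` approximates the solution c(t) = sin(t)/sin(1). The iteration converges only when the update is a contraction, which holds for this mild test problem but is not guaranteed in general.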

Bayesian Filtering for ODEs with Bounded Derivatives

Sep 25, 2017

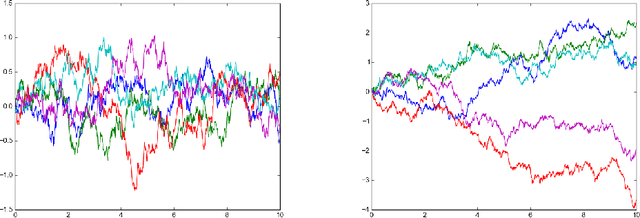

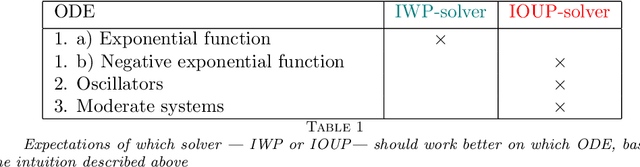

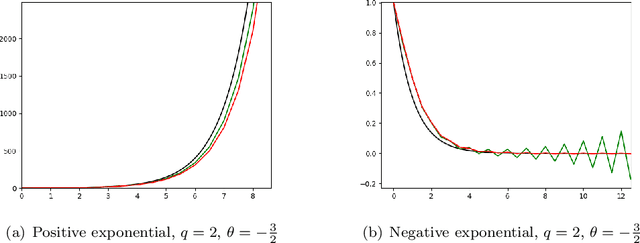

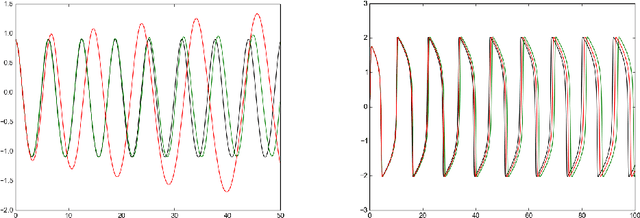

Abstract: Recently there has been increasing interest in probabilistic solvers for ordinary differential equations (ODEs) that return full probability measures, instead of point estimates, over the solution and can incorporate uncertainty over the ODE at hand, e.g. if the vector field or the initial value is only approximately known or evaluable. The ODE filter proposed in recent work models the solution of the ODE by a Gauss-Markov process which serves as a prior in the sense of Bayesian statistics. While previous work employed a Wiener process prior on the (possibly multiple times) differentiated solution of the ODE and established equivalence of the corresponding solver with classical numerical methods, this paper raises the question of whether other priors also yield practically useful solvers. To this end, we discuss a range of possible priors which enable fast filtering and propose a new prior, the Integrated Ornstein-Uhlenbeck Process (IOUP), that complements the existing Integrated Wiener Process (IWP) filter by encoding the property that a time derivative of the solution is bounded, in the sense that it tends to drift back to zero. We provide experiments comparing IWP and IOUP filters which support the belief that IWP better approximates divergent ODE solutions, whereas IOUP is a better prior for trajectories with bounded derivatives.
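
The difference between the two priors can be seen in how each propagates its mean. The sketch below assumes the simplest case, a once-integrated process with state (x, x'), and a hypothetical drift rate `theta` for the Ornstein-Uhlenbeck part; the paper covers more general settings. Under the IWP the derivative estimate stays constant between observations, while under the IOUP it decays toward zero at rate `theta`.

```python
import math

def iwp_transition(h):
    """Mean transition matrix of a once-integrated Wiener process over step h."""
    return [[1.0, h],
            [0.0, 1.0]]

def ioup_transition(h, theta):
    """Mean transition matrix of a once-integrated Ornstein-Uhlenbeck process.

    The derivative follows dx' = -theta * x' dt + noise, so its mean decays
    by exp(-theta * h) over one step; theta > 0 is an assumed drift rate.
    """
    decay = math.exp(-theta * h)
    return [[1.0, (1.0 - decay) / theta],
            [0.0, decay]]

def propagate_mean(A, m):
    """Apply a 2x2 transition matrix A to the state mean m = [x, x']."""
    return [A[0][0] * m[0] + A[0][1] * m[1],
            A[1][0] * m[0] + A[1][1] * m[1]]

def rollout(A, m0, steps):
    """Propagate the prior mean for a number of steps without observations."""
    m = list(m0)
    for _ in range(steps):
        m = propagate_mean(A, m)
    return m
```

Starting from `[0.0, 1.0]`, `rollout(iwp_transition(0.5), [0.0, 1.0], 10)` keeps the derivative at 1 and lets x grow linearly, whereas `rollout(ioup_transition(0.5, 2.0), [0.0, 1.0], 10)` drives the derivative close to zero and keeps x below `1 / theta`.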

A probabilistic model for the numerical solution of initial value problems

Aug 10, 2017

Abstract: Like many numerical methods, solvers for initial value problems (IVPs) on ordinary differential equations estimate an analytically intractable quantity, using the results of tractable computations as inputs. This structure is closely connected to the notion of inference on latent variables in statistics. We describe a class of algorithms that formulate the solution to an IVP as inference on a latent path that is a draw from a Gaussian process probability measure (or equivalently, the solution of a linear stochastic differential equation). We then show that certain members of this class are connected precisely to generalized linear methods for ODEs, a number of Runge-Kutta methods, and Nordsieck methods. This probabilistic formulation of classic methods is valuable in two ways: analytically, it highlights implicit prior assumptions favoring certain approximate solutions to the IVP over others, and gives a precise meaning to the old observation that these methods act like filters. Practically, it endows the classic solvers with "docking points" for notions of uncertainty and prior information about the initial value, the value of the ODE itself, and the solution of the problem.
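
The filtering view can be sketched as a single Kalman predict/update step. This is a minimal illustration under stated assumptions, not the paper's construction: a scalar ODE x' = f(t, x), a once-integrated Wiener process prior on the state (x, x'), a noise-free observation of the derivative, and a hypothetical function name `filter_step` with an assumed diffusion parameter `sigma2`.

```python
def filter_step(f, t, m, P, h, sigma2=1.0):
    """One predict/update step of a Kalman filter for x' = f(t, x).

    m = [x, x'] is the state mean, P its symmetric 2x2 covariance.
    Prior: once-integrated Wiener process with diffusion sigma2 (assumed).
    """
    # Predict: mean under the transition matrix [[1, h], [0, 1]].
    m_pred = [m[0] + h * m[1], m[1]]
    # Predict: covariance A P A^T + Q for the integrated Wiener process.
    p00 = P[0][0] + 2.0 * h * P[0][1] + h * h * P[1][1] + sigma2 * h ** 3 / 3.0
    p01 = P[0][1] + h * P[1][1] + sigma2 * h ** 2 / 2.0
    p11 = P[1][1] + sigma2 * h
    # "Observe" the derivative by evaluating f at the predicted solution.
    z = f(t + h, m_pred[0])
    residual = z - m_pred[1]
    # Kalman gain for a noise-free observation of the second component.
    k0 = p01 / p11
    k1 = 1.0
    m_new = [m_pred[0] + k0 * residual, m_pred[1] + k1 * residual]
    # Posterior covariance: only the uncertainty in x itself remains.
    P_new = [[p00 - k0 * p01, 0.0],
             [0.0, 0.0]]
    return m_new, P_new
```

Starting from `m = [x0, f(0, x0)]` with zero covariance, the gain on x works out to `h / 2`, so the first posterior mean equals `x0 + h/2 * (f(0, x0) + f(h, x0 + h * f(0, x0)))`, which is the explicit trapezoidal (Heun) update. Later steps of this simple sketch differ from Heun's method because the stored derivative is the one evaluated at the predicted state.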

Probabilistic ODE Solvers with Runge-Kutta Means

Oct 24, 2014

Abstract: Runge-Kutta methods are the classic family of solvers for ordinary differential equations (ODEs), and the basis for the state of the art. Like most numerical methods, they return point estimates. We construct a family of probabilistic numerical methods that instead return a Gauss-Markov process defining a probability distribution over the ODE solution. In contrast to prior work, we construct this family such that posterior means match the outputs of the Runge-Kutta family exactly, thus inheriting their proven good properties. Remaining degrees of freedom not identified by the match to Runge-Kutta are chosen such that the posterior probability measure fits the observed structure of the ODE. Our results shed light on the structure of Runge-Kutta solvers from a new direction, provide a richer, probabilistic output, have low computational cost, and raise new research questions.
