Michael Schäfer

Optimizing Learned Image Compression on Scalar and Entropy-Constraint Quantization

Jun 10, 2025

Abstract: The continuous improvements in image compression with variational autoencoders have led to learned codecs competitive with conventional approaches in terms of rate-distortion efficiency. Nonetheless, taking the quantization into account during the training process remains a problem, since it produces zero derivatives almost everywhere and needs to be replaced with a differentiable approximation which allows end-to-end optimization. Though there are different methods for approximating the quantization, none of them models the quantization noise correctly, and thus they result in suboptimal networks. Hence, we propose an additional finetuning training step: After conventional end-to-end training, parts of the network are retrained on quantized latents obtained at the inference stage. For entropy-constraint quantizers like Trellis-Coded Quantization, the impact of the quantizer is particularly difficult to approximate by rounding or adding noise, as the quantized latents are interdependently chosen through a trellis search based on both the entropy model and a distortion measure. We show that retraining on correctly quantized data consistently yields additional coding gain for both uniform scalar and especially for entropy-constraint quantization, without increasing inference complexity. For the Kodak test set, we obtain average savings between 1% and 2%, and for the TecNick test set up to 2.2% in terms of Bjøntegaard-Delta bitrate.

* Accepted at ICIP2024, the IEEE International Conference on Image Processing
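
The mismatch between training-time quantization proxies and the quantizer used at inference, as described in the abstract above, can be illustrated with a minimal sketch. This is not the authors' code: it assumes NumPy, a uniform scalar quantizer with step size 1, and additive uniform noise as the differentiable proxy. The function names and the Gaussian stand-in for encoder outputs are hypothetical.

```python
import numpy as np

def quantize_round(y):
    """Hard uniform scalar quantization (step size 1), as applied at inference."""
    return np.round(y)

def quantize_noise(y, rng):
    """Training-time proxy: add uniform noise in [-0.5, 0.5) instead of rounding."""
    return y + rng.uniform(-0.5, 0.5, size=y.shape)

rng = np.random.default_rng(0)
# Hypothetical stand-in for the latents produced by an encoder network.
latents = rng.normal(0.0, 2.0, size=10_000)

hard = quantize_round(latents)       # what the decoder sees at inference
soft = quantize_noise(latents, rng)  # what the decoder sees during training

# Both errors stay within half a quantization step ...
hard_err = hard - latents
soft_err = soft - latents

# ... but only the hard quantizer yields integer-valued latents, and its
# error is a deterministic function of the input rather than independent noise.
hard_is_integer = bool(np.all(hard == np.round(hard)))
soft_is_integer = bool(np.all(soft == np.round(soft)))
```

A decoder trained only on `soft` never sees the integer-valued inputs it receives at inference, which is the motivation the abstract gives for a finetuning step on actually quantized latents.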

TwinLab: a framework for data-efficient training of non-intrusive reduced-order models for digital twins

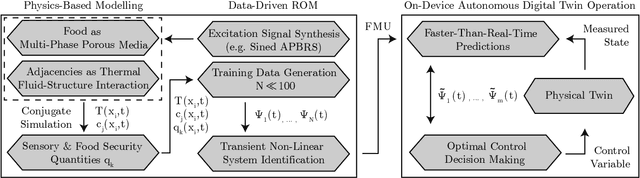

Jul 04, 2024

Abstract: Purpose: Simulation-based digital twins represent an effort to provide high-accuracy real-time insights into operational physical processes. However, the computation time of many multi-physical simulation models is far from real-time. It might even exceed sensible time frames to produce sufficient data for training data-driven reduced-order models. This study presents TwinLab, a framework for data-efficient, yet accurate training of neural-ODE type reduced-order models with only two data sets. Design/methodology/approach: Correlations between test errors of reduced-order models and distinct features of corresponding training data are investigated. Having found the single best data sets for training, a second data set is sought with the help of similarity and error measures to enrich the training process effectively. Findings: Adding a suitable second training data set in the training process reduces the test error by up to 49% compared to the best base reduced-order model trained only with one data set. Such a second training data set should at least yield a good reduced-order model on its own and exhibit higher levels of dissimilarity to the base training data set regarding the respective excitation signal. Moreover, the base reduced-order model should have elevated test errors on the second data set. The relative error of the time series ranges from 0.18% to 0.49%. Prediction speed-ups of up to a factor of 36,000 are observed. Originality: The proposed computational framework facilitates the automated, data-efficient extraction of non-intrusive reduced-order models for digital twins from existing simulation models, independent of the simulation software.

Neuromorphic hardware for sustainable AI data centers

Feb 04, 2024

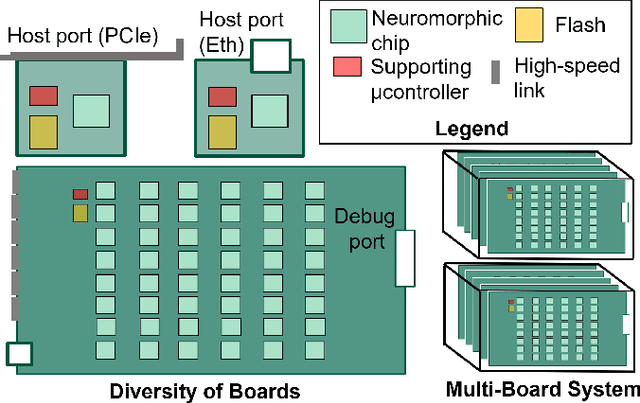

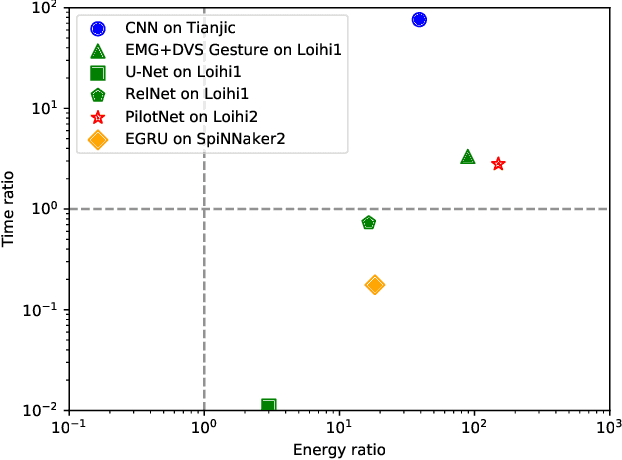

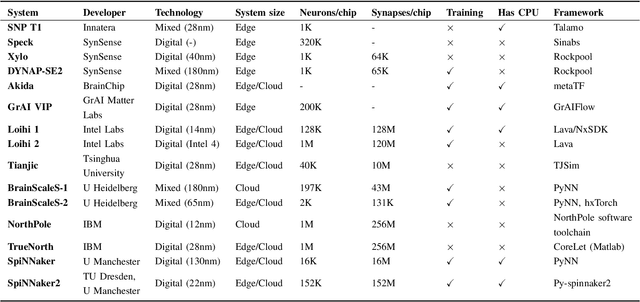

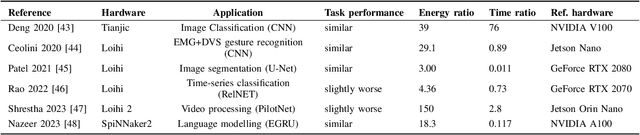

Abstract: As humans advance toward a higher level of artificial intelligence, it is always at the cost of escalating computational resource consumption, which requires developing novel solutions to meet the exponential growth of AI computing demand. Neuromorphic hardware takes inspiration from how the brain processes information and promises energy-efficient computing of AI workloads. Despite its potential, neuromorphic hardware has not found its way into commercial AI data centers. In this article, we try to analyze the underlying reasons for this and derive requirements and guidelines to promote neuromorphic systems for efficient and sustainable cloud computing: We first review currently available neuromorphic hardware systems and collect examples where neuromorphic solutions outperform conventional AI processing on CPUs and GPUs. Next, we identify applications, models and algorithms which are commonly deployed in AI data centers as further directions for neuromorphic algorithms research. Last, we derive requirements and best practices for the hardware and software integration of neuromorphic systems into data centers. With this article, we hope to increase awareness of the challenges of integrating neuromorphic hardware into data centers and to guide the community to enable sustainable and energy-efficient AI at scale.

Autonomous Cooking with Digital Twin Methodology

Sep 07, 2022

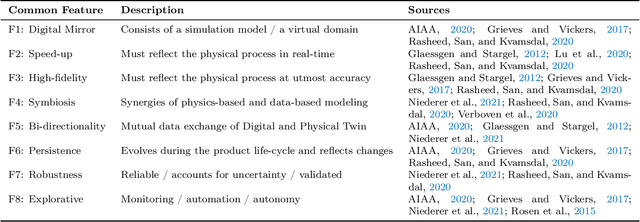

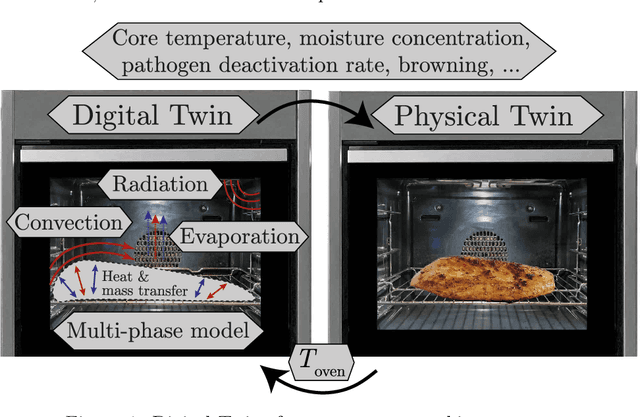

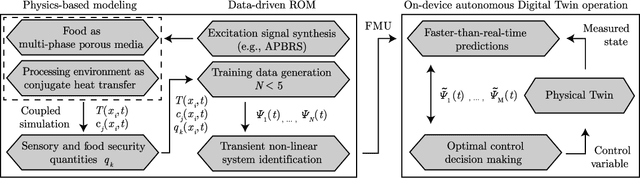

Abstract: This work introduces the concept of an autonomous cooking process based on Digital Twin methodology. It proposes a hybrid approach of physics-based full order simulations followed by a data-driven system identification process with low errors. It makes faster-than-real-time simulations of Digital Twins feasible on a device level, without the need for cloud or high-performance computing. The concept is universally applicable to various physical processes.

Physics-based Digital Twins for Autonomous Thermal Food Processing: Efficient, Non-intrusive Reduced-order Modeling

Sep 07, 2022

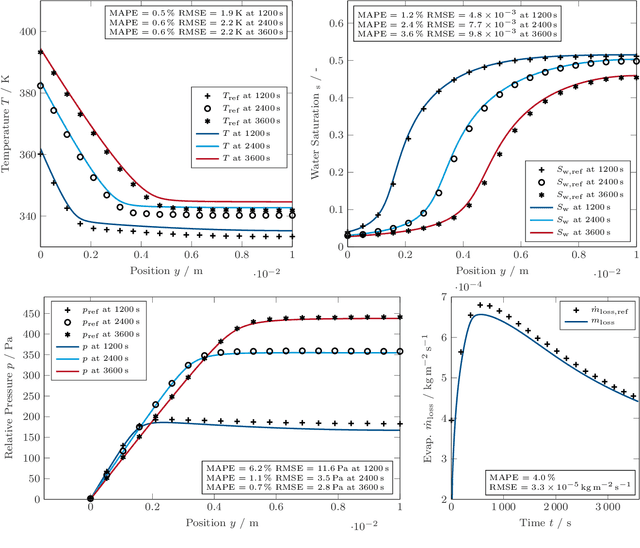

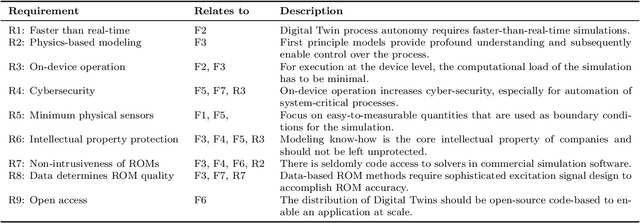

Abstract: One possible way of making thermal processing controllable is to gather real-time information on the product's current state. Often, sensory equipment cannot capture all relevant information easily or at all. Digital Twins close this gap with virtual probes in real-time simulations, synchronized with the process. This paper proposes a physics-based, data-driven Digital Twin framework for autonomous food processing. We suggest a lean Digital Twin concept that is executable at the device level, entailing minimal computational load, data storage, and sensor data requirements. This study focuses on a parsimonious experimental design for training non-intrusive reduced-order models (ROMs) of a thermal process. A correlation ($R=-0.76$) between a high standard deviation of the surface temperatures in the training data and a low root mean square error in ROM testing enables efficient selection of training data. The mean test root mean square error of the best ROM is less than 1 Kelvin (0.2% mean average percentage error) on representative test sets. Simulation speed-ups of $S_p \approx 1.8 \times 10^4$ allow on-device model predictive control. The proposed Digital Twin framework is designed to be applicable within the industry. Typically, non-intrusive reduced-order modeling is required as soon as the modeling of the process is performed in software where root-level access to the solver is not provided, such as commercial simulation software. The data-driven training of the reduced-order model is achieved with only one data set, as correlations are utilized to predict the training success a priori.
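
The a priori selection idea in the abstract above (prefer training data whose surface temperatures have a high standard deviation, because that correlates with a low test error) can be sketched as follows. This is only an illustration under stated assumptions, not the authors' implementation: it uses NumPy, and the function names, candidate data set names and temperature traces are hypothetical placeholders rather than data from the paper.

```python
import numpy as np

def rank_by_temperature_spread(candidates):
    """Sort candidate data set names by descending std of their surface temperatures."""
    spread = {name: float(np.std(temps)) for name, temps in candidates.items()}
    return sorted(spread, key=spread.get, reverse=True)

def pearson_r(x, y):
    """Pearson correlation coefficient between two equally long sequences."""
    return float(np.corrcoef(x, y)[0, 1])

# Hypothetical surface-temperature traces in Kelvin, one per candidate data set.
candidates = {
    "constant": np.full(100, 350.0),
    "ramp": np.linspace(300.0, 400.0, 100),
    "step": np.concatenate([np.full(50, 300.0), np.full(50, 420.0)]),
}

# The data set with the largest temperature spread is tried first for training.
ranking = rank_by_temperature_spread(candidates)
```

Given per-data-set spreads and measured test errors from earlier experiments, `pearson_r` would quantify how strongly the two are related; a clearly negative value is what justifies ranking by spread before any model is trained.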
