Michael E. Walker

TOKCS: Tool for Organizing Key Characteristics of VAM-HRI Systems

Aug 07, 2021

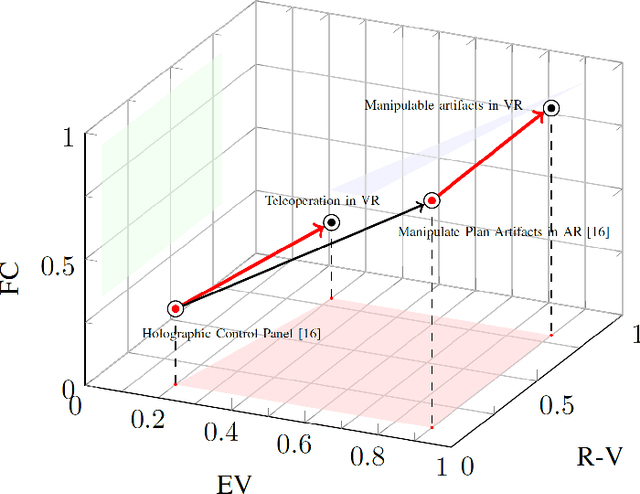

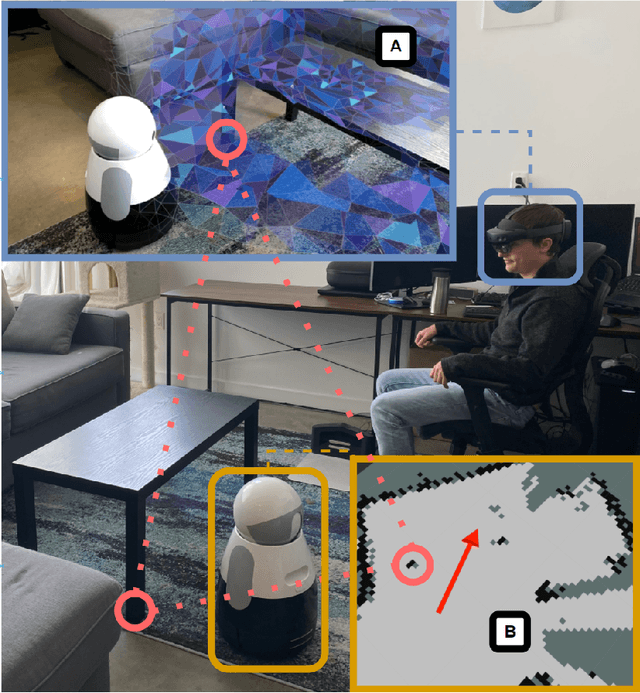

Abstract: Frameworks have begun to emerge to categorize Virtual, Augmented, and Mixed Reality (VAM) technologies that provide immersive, intuitive interfaces to facilitate Human-Robot Interaction. These frameworks, however, fail to capture key characteristics of the growing subfield of VAM-HRI and can be difficult to apply consistently. This work builds upon these prior frameworks through the creation of a Tool for Organizing Key Characteristics of VAM-HRI Systems (TOKCS). TOKCS discretizes the continuous scales used within prior works for more consistent classification and adds characteristics related to a robot's internal model, anchor locations, manipulability, and the system's software and hardware. To showcase the tool's capability, TOKCS is applied to find trends and takeaways from the fourth VAM-HRI workshop. These trends highlight the expressive capability of TOKCS while also helping frame newer trends and future work recommendations for VAM-HRI research.

Virtual-to-Real-World Transfer Learning for Robots on Wilderness Trails

Jan 17, 2019

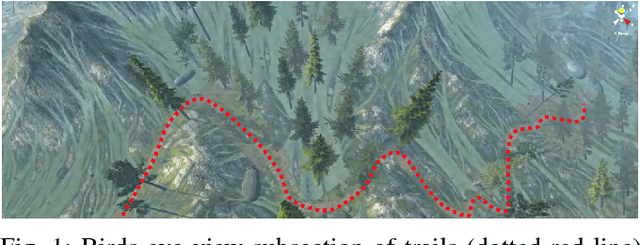

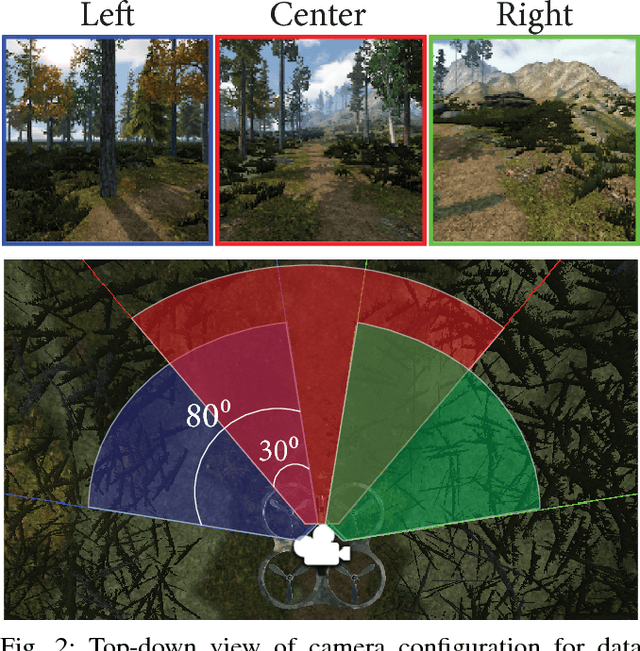

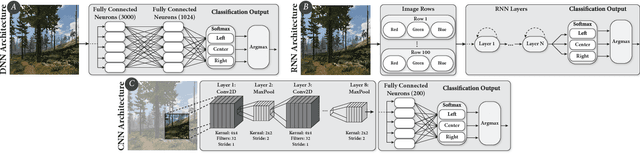

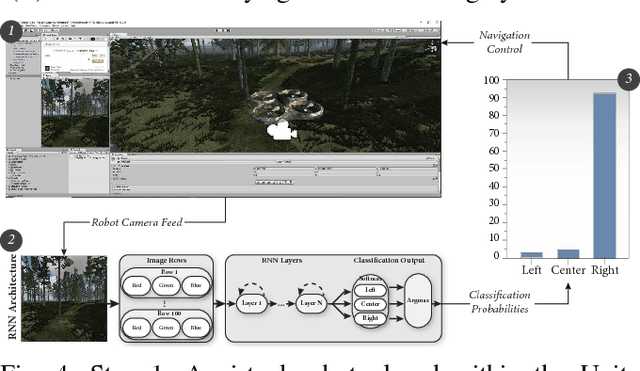

Abstract: Robots hold promise in many scenarios involving outdoor use, such as search-and-rescue, wildlife management, and collecting data to improve environment, climate, and weather forecasting. However, autonomous navigation of outdoor trails remains a challenging problem. Recent work has sought to address this issue using deep learning. Although this approach has achieved state-of-the-art results, the deep learning paradigm may be limited due to a reliance on large amounts of annotated training data. Collecting and curating training datasets may not be feasible or practical in many situations, especially as trail conditions may change due to seasonal weather variations, storms, and natural erosion. In this paper, we explore an approach to address this issue through virtual-to-real-world transfer learning using a variety of deep learning models trained to classify the direction of a trail in an image. Our approach utilizes synthetic data gathered from virtual environments for model training, bypassing the need to collect a large number of real images of the outdoors. We validate our approach in three main ways. First, we demonstrate that our models achieve classification accuracies upwards of 95% on our synthetic dataset. Next, we utilize our classification models in the control system of a simulated robot to demonstrate feasibility. Finally, we evaluate our models on real-world trail data and demonstrate the potential of virtual-to-real-world transfer learning.

* IROS 2018
