Mengchao Bai

External Prompt Features Enhanced Parameter-efficient Fine-tuning for Salient Object Detection

Apr 23, 2024

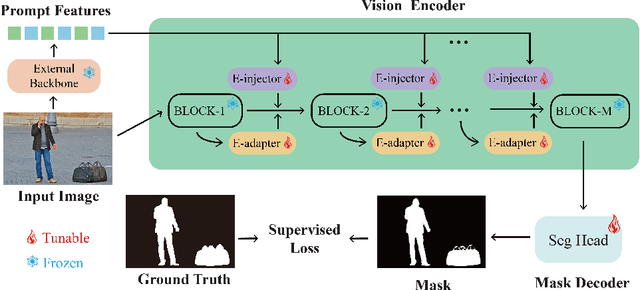

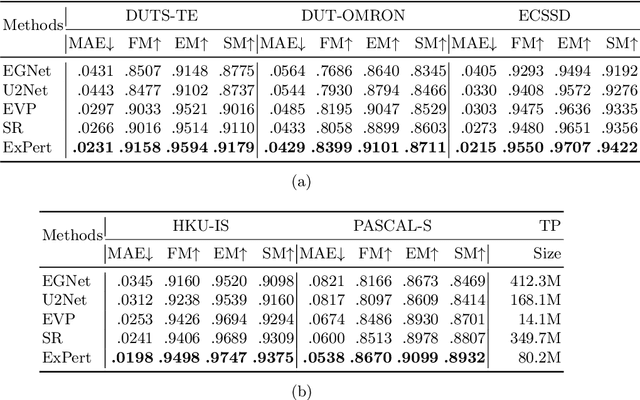

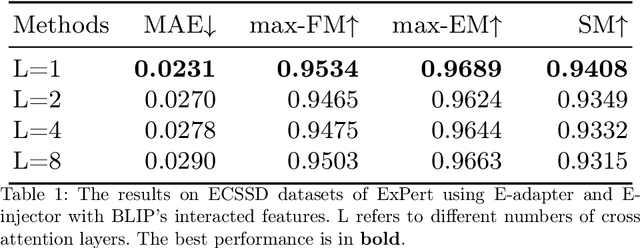

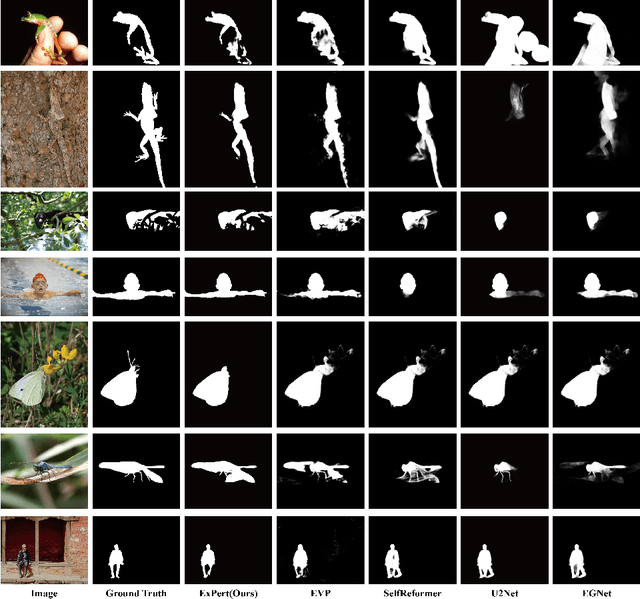

Abstract:Salient object detection (SOD) aims at finding the most salient objects in images and outputs pixel-level binary masks. Transformer-based methods achieve promising performance due to their global semantic understanding, crucial for identifying salient objects. However, these models tend to be large and require numerous training parameters. To better harness the potential of transformers for SOD, we propose a novel parameter-efficient fine-tuning method aimed at reducing the number of training parameters while enhancing the salient object detection capability. Our model, termed EXternal Prompt features Enhanced adapteR Tuning (ExPert), features an encoder-decoder structure with adapters and injectors interspersed between the layers of a frozen transformer encoder. The adapter modules adapt the pre-trained backbone to SOD while the injector modules incorporate external prompt features to enhance the awareness of salient objects. Comprehensive experiments demonstrate the superiority of our method. Surpassing former state-of-the-art (SOTA) models across five SOD datasets, ExPert achieves 0.215 mean absolute error (MAE) in ECSSD dataset with 80.2M trained parameters, 21% better than transformer-based SOTA model and 47% better than CNN-based SOTA model.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge