Md Sirajul Islam

FedClust: Tackling Data Heterogeneity in Federated Learning through Weight-Driven Client Clustering

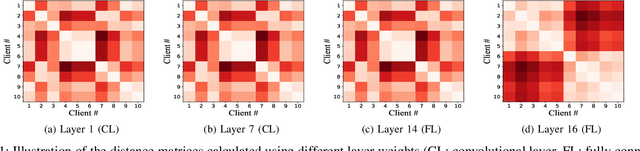

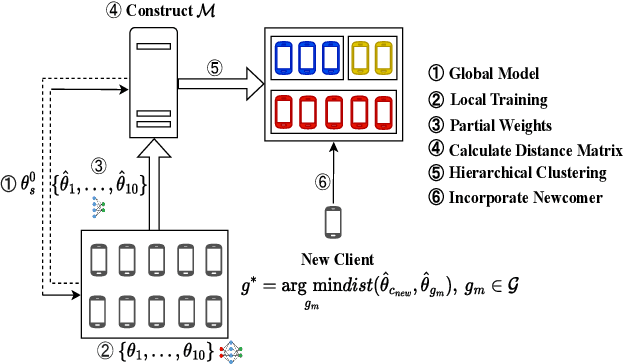

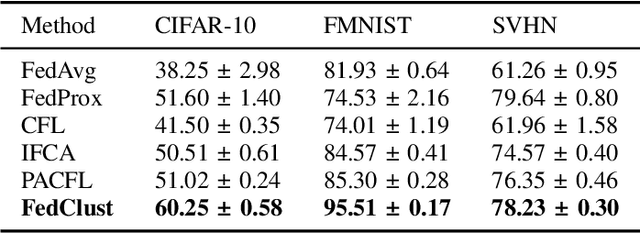

Jul 09, 2024

Abstract: Federated learning (FL) is an emerging distributed machine learning paradigm that enables collaborative training of machine learning models over decentralized devices without exposing their local data. One of the major challenges in FL is the presence of uneven data distributions across client devices, which violates the well-known assumption of independent and identically distributed (IID) training samples in conventional machine learning. To address the performance degradation caused by such data heterogeneity, clustered federated learning (CFL) shows promise by grouping clients into separate learning clusters based on the similarity of their local data distributions. However, state-of-the-art CFL approaches require a large number of communication rounds to learn the distribution similarities during training until cluster formation stabilizes. Moreover, some of these algorithms rely heavily on a predefined number of clusters, which limits their flexibility and adaptability. In this paper, we propose FedClust, a novel approach for CFL that leverages the correlation between local model weights and the data distribution of clients. FedClust groups clients into clusters in a one-shot manner by measuring the similarity among clients based on strategically selected partial weights of locally trained models. We conduct extensive experiments on four benchmark datasets with different non-IID data settings. Experimental results demonstrate that FedClust achieves up to ~45% higher model accuracy as well as faster convergence, with communication cost reduced by up to 2.7× compared to its state-of-the-art counterparts.
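The one-shot grouping idea can be illustrated with a minimal sketch. The abstract does not say which weights are selected, which distance is used, or how clusters are formed, so everything below is an assumption: clients are represented by a short hypothetical weight vector, similarity is Euclidean distance, and clients are merged whenever their distance falls under a hypothetical `threshold` (single-linkage grouping via union-find). It is not the authors' implementation; it only shows how a cluster count can emerge from weight similarity instead of being fixed in advance.

```python
from itertools import combinations
from math import dist


def one_shot_cluster(partial_weights, threshold):
    """Group clients whose partial weight vectors lie within `threshold`.

    partial_weights: dict mapping client id -> list of floats (hypothetical
    stand-in for the selected subset of a locally trained model's weights).
    threshold: hypothetical distance cutoff; the number of clusters falls out
    of it rather than being chosen beforehand.
    """
    parent = {cid: cid for cid in partial_weights}

    def find(cid):
        # Follow parent links to the cluster representative (path halving).
        while parent[cid] != cid:
            parent[cid] = parent[parent[cid]]
            cid = parent[cid]
        return cid

    # Single pass over all client pairs: merge the clusters of similar clients.
    for a, b in combinations(partial_weights, 2):
        if dist(partial_weights[a], partial_weights[b]) <= threshold:
            parent[find(a)] = find(b)

    clusters = {}
    for cid in partial_weights:
        clusters.setdefault(find(cid), []).append(cid)
    return sorted(sorted(members) for members in clusters.values())


# Made-up weights: clients 0-1 and clients 2-3 behave as if trained on similar data.
weights = {
    0: [0.10, 0.90, 0.00],
    1: [0.12, 0.88, 0.01],
    2: [0.80, 0.05, 0.40],
    3: [0.79, 0.07, 0.42],
}
clusters = one_shot_cluster(weights, threshold=0.1)
print(clusters)
```

Because the grouping needs only one upload of weights per client, no further communication rounds are spent on discovering clusters; in this sketch a larger `threshold` yields fewer, coarser clusters.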

Skin Cancer Images Classification using Transfer Learning Techniques

Jun 18, 2024

Abstract: Skin cancer is one of the most common and deadliest types of cancer. Early diagnosis of skin cancer at a benign stage is critical to reducing cancer mortality. Detecting skin cancer at an earlier stage requires an automated system, which can save the lives of many patients. Many previous studies have addressed the problem of skin cancer diagnosis using various deep learning and transfer learning models. However, existing approaches are limited in accuracy and rely on time-consuming procedures. In this work, we applied five different pre-trained transfer learning approaches to the binary classification of skin cancer into benign and malignant stages. To increase the accuracy of these models, we fine-tuned different layers and activation functions. We used a publicly available ISIC dataset to evaluate the transfer learning approaches. For model stability, data augmentation techniques were applied to improve the randomness of the input dataset. The approaches were evaluated using different hyperparameters such as batch sizes, epochs, and optimizers. The experimental results show that the ResNet-50 model provides an accuracy of 0.935, an F1-score of 0.86, and a precision of 0.94.

FedClust: Optimizing Federated Learning on Non-IID Data through Weight-Driven Client Clustering

Mar 07, 2024

Abstract: Federated learning (FL) is an emerging distributed machine learning paradigm enabling collaborative model training on decentralized devices without exposing their local data. A key challenge in FL is the uneven data distribution across client devices, which violates the well-known assumption of independent and identically distributed (IID) training samples in conventional machine learning. Clustered federated learning (CFL) addresses this challenge by grouping clients based on the similarity of their data distributions. However, existing CFL approaches require a large number of communication rounds for stable cluster formation and rely on a predefined number of clusters, which limits their flexibility and adaptability. This paper proposes FedClust, a novel CFL approach leveraging correlations between local model weights and client data distributions. FedClust groups clients into clusters in a one-shot manner using strategically selected partial model weights and dynamically accommodates newcomers in real time. Experimental results demonstrate that FedClust outperforms baseline approaches in terms of accuracy and communication costs.

FedFair^3: Unlocking Threefold Fairness in Federated Learning

Jan 29, 2024

Abstract: Federated Learning (FL) is an emerging machine learning paradigm that trains models without exposing clients' raw data. In practical scenarios with numerous clients, encouraging fair and efficient client participation in federated learning is of utmost importance, and it is also challenging given the heterogeneity in data distribution and device properties. Existing works have proposed different client-selection methods that consider fairness; however, they fail to select clients with high utilities while simultaneously achieving fair accuracy levels. In this paper, we propose a fair client-selection approach that unlocks threefold fairness in federated learning. In addition to having a fair client-selection strategy, we enforce an equitable number of rounds for client participation and ensure a fair accuracy distribution over the clients. The experimental results demonstrate that FedFair^3, in comparison to the state-of-the-art baselines, achieves 18.15% less accuracy variance on the IID data and 54.78% less on the non-IID data, without decreasing the global accuracy. Furthermore, it shows 24.36% less wall-clock training time on average.
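A figure such as "less accuracy variance" can be read as a relative reduction in the variance of per-client accuracies. The sketch below shows that arithmetic under stated assumptions: the abstract does not give the exact formula, the function name `variance_reduction` is hypothetical, population variance is assumed, and the accuracy lists are made-up numbers that do not come from the paper.

```python
from statistics import pvariance


def variance_reduction(baseline_acc, method_acc):
    """Percent reduction in per-client accuracy variance relative to a baseline."""
    base_var = pvariance(baseline_acc)
    method_var = pvariance(method_acc)
    return 100.0 * (base_var - method_var) / base_var


# Made-up per-client accuracies, for illustration only.
baseline = [0.60, 0.80, 0.70, 0.90]
fairer = [0.70, 0.80, 0.75, 0.85]
reduction = variance_reduction(baseline, fairer)
print(f"{reduction:.1f}% less accuracy variance")
```

When both lists have the same number of clients, using sample variance instead of population variance gives the same percentage, since the normalizing factor cancels in the ratio.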
