Mauricio Perez

Skeleton-based Relational Reasoning for Group Activity Analysis

Nov 11, 2020

Abstract: Research on group activity recognition mostly relies on the standard two-stream approach (RGB and optical flow) for input features. Few works have explored explicit pose information, and none has used it directly to reason about the interactions between individuals. In this paper, we leverage skeleton information to learn the interactions between individuals directly from it. With our proposed method, GIRN, multiple relationship types are inferred by independent modules that describe the relations between joints pair by pair. In addition to the joint relations, we also experiment with the previously unexplored relationship between individuals and relevant objects (e.g. the volleyball). The distinct relations of the individuals are then merged through an attention mechanism that gives more importance to those most relevant for distinguishing the group activity. We evaluate our method on the Volleyball dataset, obtaining results competitive with the state of the art while using only a single modality, which demonstrates the potential of skeleton-based approaches for modeling multi-person interactions.
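The attention-based merging step mentioned in the abstract can be illustrated with a minimal sketch. This is not the GIRN implementation: the function names `softmax` and `attention_pool` are hypothetical, and the relevance scores are passed in by hand here, whereas in a trained model they would presumably be produced by a learned layer.

```python
import math


def softmax(scores):
    """Turn raw relevance scores into weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(features, scores):
    """Merge per-individual feature vectors into one group descriptor.

    features: list of equal-length vectors, one per individual (assumed input)
    scores:   one raw relevance score per individual (assumed input)
    """
    weights = softmax(scores)
    dim = len(features[0])
    # Weighted sum over individuals, computed dimension by dimension.
    return [sum(w * f[d] for w, f in zip(weights, features)) for d in range(dim)]


if __name__ == "__main__":
    # Two individuals with 2-D descriptors; the second has a higher score.
    group = attention_pool([[1.0, 0.0], [0.0, 1.0]], [0.0, 2.0])
    print(group)
```

With equal scores the pooling reduces to a plain average; raising one individual's score shifts the merged descriptor toward that individual's features.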

Interaction Relational Network for Mutual Action Recognition

Oct 11, 2019

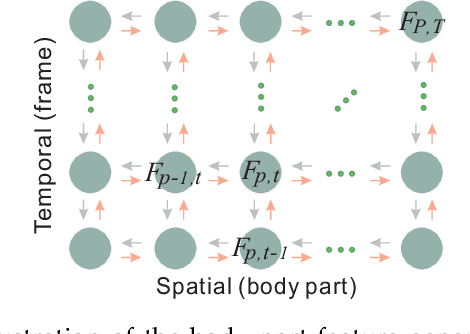

Abstract: Person-person mutual action recognition (also referred to as interaction recognition) is an important research branch of human activity analysis. Current solutions in the field are dominated by CNNs, GCNs and LSTMs. These approaches often rely on complicated architectures and mechanisms that embed the relationships between the two persons in the architecture itself, to ensure the interaction patterns can be properly learned. In this paper, we propose a simpler yet very powerful architecture, named Interaction Relational Network (IRN), which utilizes minimal prior knowledge about the structure of the human body. We drive the network to identify by itself how to relate the body parts of the interacting individuals. To better represent the interaction, we define two different relationships, leading to specialized architectures and models for each. These multiple relationship models are then fused into a single architecture, in order to leverage both streams of information and further enhance the relational reasoning capability. Furthermore, we define structured pair-wise operations to extract meaningful extra information from each pair of joints: distance and motion. Finally, by coupling it with an LSTM, our IRN becomes capable of sequential relational reasoning. These extensions to our network can also be valuable for other problems that require sophisticated relational reasoning. Our solution achieves state-of-the-art performance on the traditional interaction recognition datasets SBU and UT, and also on the mutual actions from the large-scale NTU RGB+D and NTU RGB+D 120 datasets.
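The pair-wise distance and motion information described in the abstract can be sketched roughly as follows. This is an illustrative assumption, not the IRN code: joints are taken to be 3D coordinate tuples, "motion" is approximated here as the frame-to-frame change in each pair-wise distance (the paper may define it differently), and the function names are hypothetical.

```python
import math


def pairwise_distances(person_a, person_b):
    """Euclidean distance between every joint of A and every joint of B.

    person_a, person_b: lists of (x, y, z) joint coordinates (assumed layout).
    Returns a matrix with one row per joint of A and one column per joint of B.
    """
    return [[math.dist(ja, jb) for jb in person_b] for ja in person_a]


def pairwise_distance_change(prev_a, prev_b, cur_a, cur_b):
    """Change in each pair-wise distance between two consecutive frames.

    Used here as a simple stand-in for a pair-wise motion cue (an assumption).
    """
    prev = pairwise_distances(prev_a, prev_b)
    cur = pairwise_distances(cur_a, cur_b)
    return [[c - p for c, p in zip(cur_row, prev_row)]
            for cur_row, prev_row in zip(cur, prev)]


if __name__ == "__main__":
    # One joint per person, two frames; person B moves away from person A.
    a_prev, b_prev = [(0.0, 0.0, 0.0)], [(0.0, 3.0, 4.0)]
    a_cur, b_cur = [(0.0, 0.0, 0.0)], [(0.0, 6.0, 8.0)]
    print(pairwise_distances(a_cur, b_cur))
    print(pairwise_distance_change(a_prev, b_prev, a_cur, b_cur))
```

In a relational network, features like these would typically be attached to each joint pair before the pair is passed to a shared learned function; that part is omitted here.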

NTU RGB+D 120: A Large-Scale Benchmark for 3D Human Activity Understanding

May 12, 2019

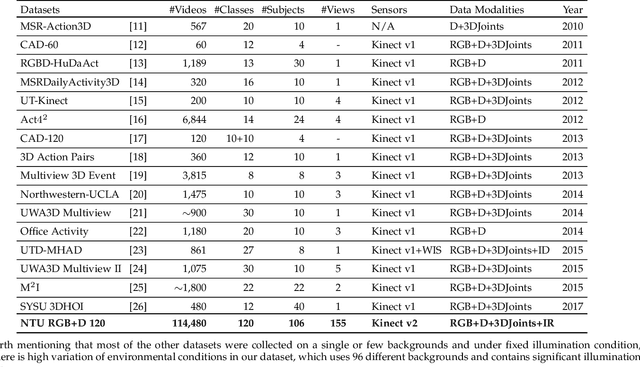

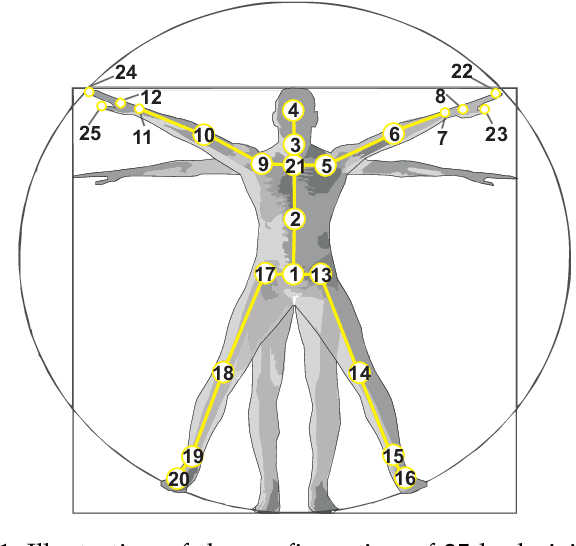

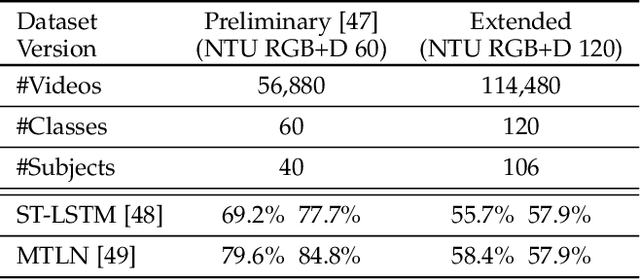

Abstract: Research on depth-based human activity analysis has achieved outstanding performance and demonstrated the effectiveness of 3D representation for action recognition. The existing depth-based and RGB+D-based action recognition benchmarks have a number of limitations, including the lack of large-scale training samples, a realistic number of distinct class categories, diversity in camera views, varied environmental conditions, and variety of human subjects. In this work, we introduce a large-scale dataset for RGB+D human action recognition, which is collected from 106 distinct subjects and contains more than 114 thousand video samples and 8 million frames. This dataset contains 120 different action classes including daily, mutual, and health-related activities. We evaluate the performance of a series of existing 3D activity analysis methods on this dataset, and show the advantage of applying deep learning methods for 3D-based human action recognition. Furthermore, we investigate a novel one-shot 3D activity recognition problem on our dataset, and propose a simple yet effective Action-Part Semantic Relevance-aware (APSR) framework for this task, which yields promising results for recognition of the novel action classes. We believe the introduction of this large-scale dataset will enable the community to apply, adapt, and develop various data-hungry learning techniques for depth-based and RGB+D-based human activity understanding. [The dataset is available at: http://rose1.ntu.edu.sg/Datasets/actionRecognition.asp]
