Maura Casadio

DIBRIS, University of Genoa, Genoa, Italy

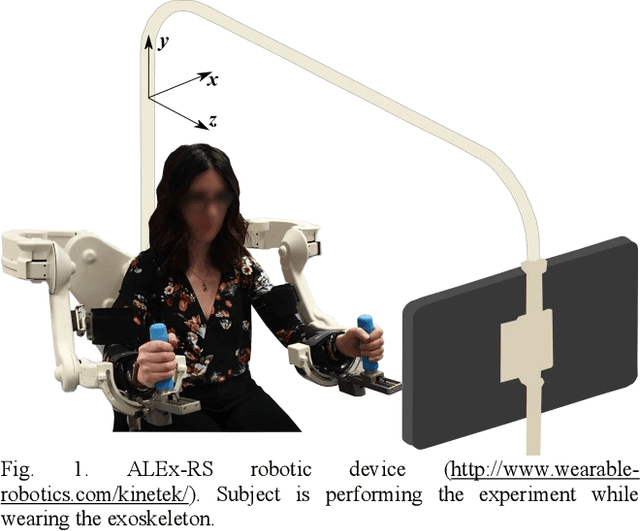

Bimanual Motor Strategies and Handedness Role During Human-Exoskeleton Haptic Interaction

Nov 22, 2022

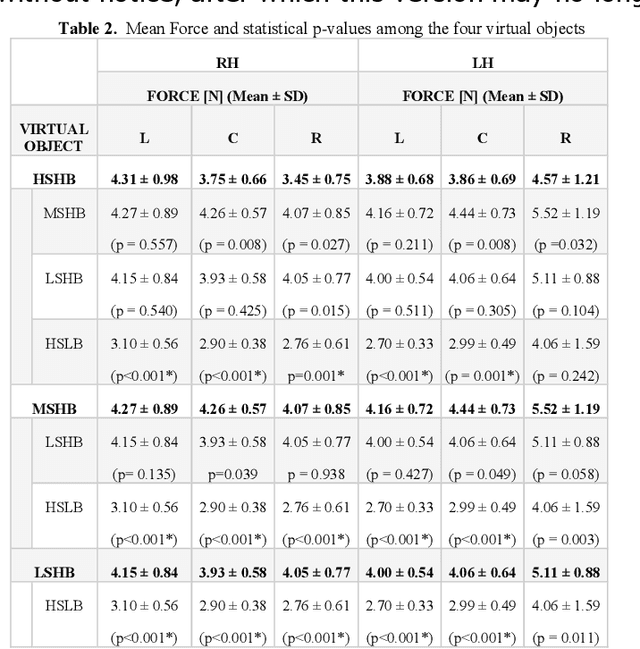

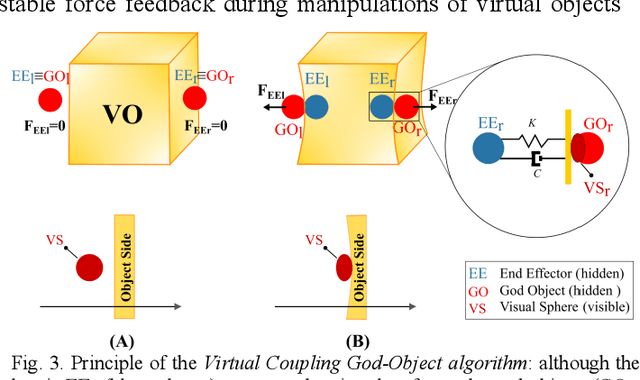

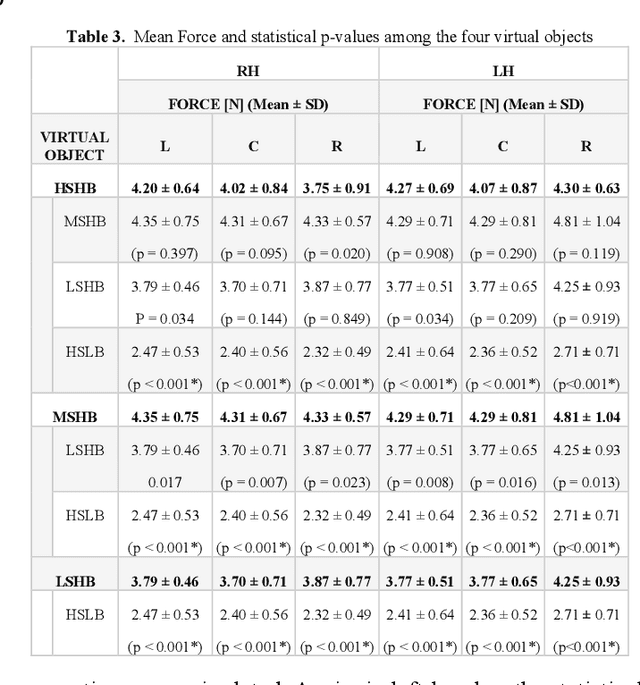

Abstract: Bimanual object manipulation involves multiple visuo-haptic sensory feedback signals, arising from interaction with the environment, that are managed by the central nervous system and consequently translated into motor commands. The kinematic strategies that occur during bimanual coupled tasks are still a matter of scientific debate, despite modern advances in haptics and robotics. Current technologies have the potential to provide realistic scenarios involving the entire upper limbs during multi-joint movements, but they are not yet exploited to their full potential. The present study explores how the hands dynamically interact when manipulating a shared object, using two impedance-controlled exoskeletons programmed to simulate bimanually coupled manipulation of virtual objects. We enrolled twenty-six participants in two groups (right-handed and left-handed), who were asked to use both hands to grab simulated objects across the robot workspace and place them in specific locations. The virtual objects were rendered with different dynamic properties and textures, which influenced the manipulation strategies used to complete the tasks. Results revealed that the roles of the hands are related to the movement direction, the haptic features, and handedness. Outcomes suggested that haptic feedback affects bimanual strategies depending on the movement direction. Notably, left-handers showed better control of the force applied between the two hands, probably owing to environmental pressure toward right-handed manipulation.

Automated Classification of General Movements in Infants Using a Two-stream Spatiotemporal Fusion Network

Jul 04, 2022

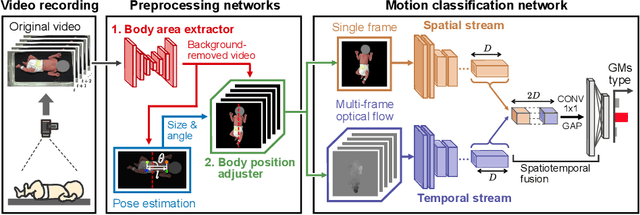

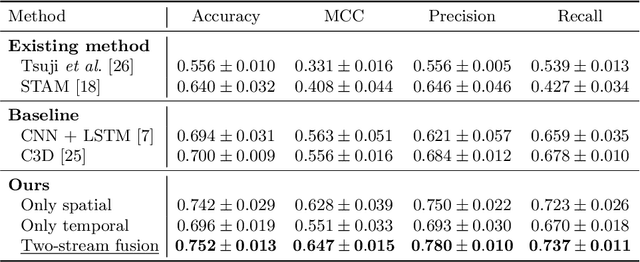

Abstract: The assessment of general movements (GMs) in infants is a useful tool for the early diagnosis of neurodevelopmental disorders. However, its evaluation in clinical practice relies on visual inspection by experts, and an automated solution is eagerly awaited. Recently, video-based GMs classification has attracted attention, but this approach can be strongly affected by irrelevant information, such as background clutter in the video. Furthermore, reliable classification requires properly extracting the spatiotemporal features of infants during GMs. In this study, we propose an automated GMs classification method consisting of preprocessing networks that remove unnecessary background information from GMs videos and adjust the infant's body position, followed by a motion classification network based on a two-stream structure. The proposed method can efficiently extract the essential spatiotemporal features for GMs classification while preventing overfitting to information that is irrelevant across different recording environments. We validated the proposed method using videos obtained from 100 infants. The experimental results demonstrate that the proposed method outperforms several baseline models and existing methods.

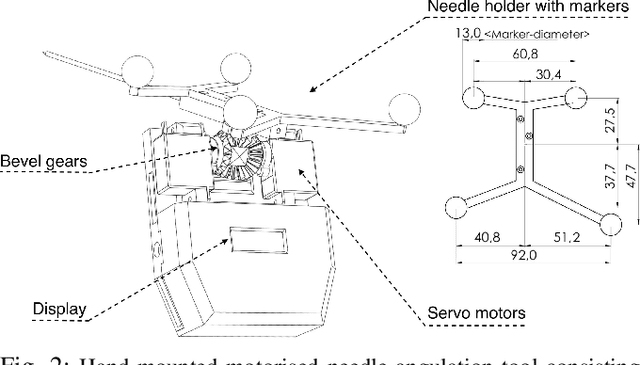

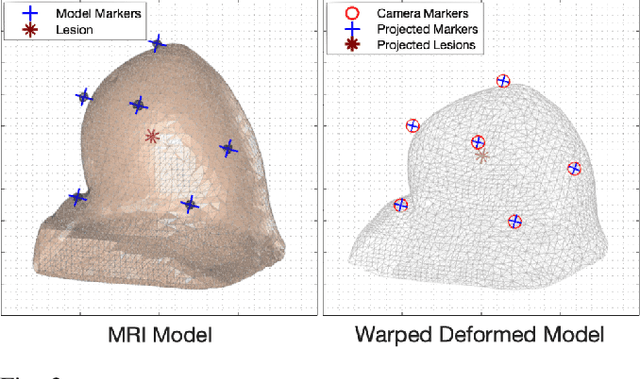

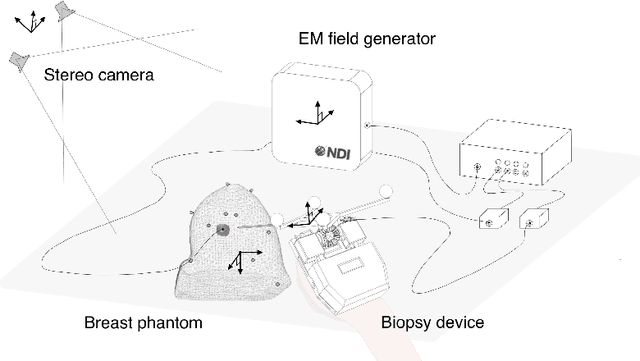

Image-guided Breast Biopsy of MRI-visible Lesions with a Hand-mounted Motorised Needle Steering Tool

Jun 20, 2021

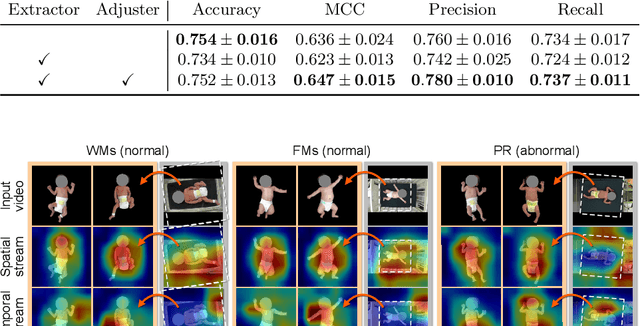

Abstract: A biopsy is the only diagnostic procedure that provides accurate histological confirmation of breast cancer. When sonographic placement is not feasible, a Magnetic Resonance Imaging (MRI)-guided biopsy is often preferred. The lack of real-time imaging information and the deformation of the breast make it challenging to bring the needle precisely to the tumour detected in pre-interventional Magnetic Resonance (MR) images. The current manual MRI-guided biopsy workflow is inaccurate and would benefit from a technique that allows real-time tracking and localisation of the tumour lesion during needle insertion. This paper proposes a robotic setup and software architecture to assist the radiologist in targeting MR-detected suspicious tumours. The approach relies on fusing preoperative images with intraoperative optical tracking of markers attached to the patient's skin. A hand-mounted biopsy device has been constructed with an actuated needle base that steers the tip in the desired direction. Steering commands can be provided either by user input or by computer guidance. The workflow is validated through phantom experiments. On average, the suspicious breast lesion is targeted with a radius down to 2.3 mm. The results suggest that robotic systems that account for breast deformation have the potential to tackle this clinical challenge.