Matthias Frey

Low-Rank-Based Approximate Computation with Memristors

Oct 06, 2025

Abstract: Memristor crossbars enable vector-matrix multiplication (VMM), and are promising for low-power applications. However, it can be difficult to write the memristor conductance values exactly. To improve the accuracy of VMM, we propose a scheme based on low-rank matrix approximation. Specifically, singular value decomposition (SVD) is first applied to obtain a low-rank approximation of the target matrix, which is then factored into a pair of smaller matrices. Subsequently, a two-step serial VMM is executed, where the stochastic write errors are mitigated through step-wise averaging. To evaluate the performance of the proposed scheme, we derive a general expression for the resulting computation error and provide an asymptotic analysis under a prescribed singular-value profile, which reveals how the error scales with matrix size and rank. Both analytical and numerical results confirm the superiority of the proposed scheme compared with the benchmark scheme.
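The factor-then-multiply idea in this abstract can be illustrated with a minimal NumPy sketch. It is not the paper's implementation: the function names, the additive Gaussian write-error model, and the choice to split the singular values evenly between the two factors are all assumptions made for illustration, and the sketch does not reproduce the paper's error analysis or its comparison with the benchmark scheme.

```python
import numpy as np

def low_rank_factors(W, r):
    """Factor the best rank-r approximation of W as A @ B (hypothetical helper)."""
    U, s, Vt = np.linalg.svd(W, full_matrices=False)
    root = np.sqrt(s[:r])            # assumption: split singular values evenly
    A = U[:, :r] * root              # shape (m, r)
    B = root[:, None] * Vt[:r]       # shape (r, n)
    return A, B

def noisy_write(M, sigma, rng):
    """Assumed write-error model: additive Gaussian noise on every stored entry."""
    return M + sigma * rng.standard_normal(M.shape)

def two_step_vmm(x, A, B, sigma, rng):
    """Two serial VMMs, x @ A followed by (x @ A) @ B, each on a noisily written factor."""
    h = x @ noisy_write(A, sigma, rng)
    return h @ noisy_write(B, sigma, rng)

rng = np.random.default_rng(0)
W = rng.standard_normal((64, 48))    # target matrix (arbitrary example data)
x = rng.standard_normal(64)          # input row vector
A, B = low_rank_factors(W, r=8)
y_approx = two_step_vmm(x, A, B, sigma=0.01, rng=rng)
y_exact = x @ W
print(np.linalg.norm(y_approx - y_exact) / np.linalg.norm(y_exact))
```

With `sigma=0` and `r` equal to the full rank, the two-step product reproduces `x @ W` up to floating-point error; for smaller `r`, the spectral-norm gap between `W` and `A @ B` equals the first discarded singular value.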

RIS-assisted Physical Layer Security

Jan 30, 2025

Abstract: We propose a reconfigurable intelligent surface (RIS)-assisted wiretap channel, where the RIS is strategically deployed to provide a spatial separation to the transmitter, and orthogonal combiners are employed at the legitimate receiver to extract the data streams from the direct and RIS-assisted links. We then derive the achievable secrecy rate under semantic security for the RIS-assisted channel and design an algorithm for the secrecy rate optimization problem. The simulation results show the effects of the total transmit power and of the location and number of eavesdroppers on the security performance.

An Age of Information Characterization of SPS for V2X Applications

Dec 19, 2024

Abstract: We derive a closed-form approximation of the stationary distribution of the Age of Information (AoI) of the semi-persistent scheduling (SPS) protocol, which is a core part of NR-V2X, an important standard for vehicular communications. While prior works have studied the average AoI under similar assumptions, in this work we provide a full statistical characterization of the AoI by deriving an approximation of its probability mass function. As a result, besides the average AoI, we are able to evaluate the age-violation probability, which is of particular relevance for safety-critical applications in vehicular domains, where the priority is to ensure that the AoI does not exceed a predefined threshold during system operation. The study reveals complementary behavior of the age-violation probability compared to the average AoI and highlights the role of the duration of the reservation as a key parameter in the SPS protocol. We use this to demonstrate how this crucial parameter should be tuned according to the performance requirements of the application.

Inverse Solvability and Security with Applications to Federated Learning

Nov 28, 2022

Abstract: We introduce the concepts of inverse solvability and security for a generic linear forward model and demonstrate how they can be applied to models used in federated learning. We provide examples of such models which differ in the resulting inverse solvability and security as defined in this paper. We also show how the large number of users participating in a given iteration of federated learning can be leveraged to increase both solvability and security. Finally, we discuss possible extensions of the presented concepts including the nonlinear case.

A Learning-Based Approach to Approximate Coded Computation

May 19, 2022

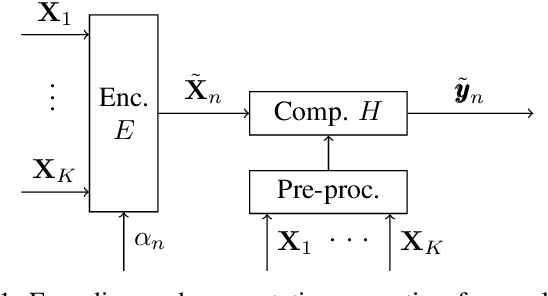

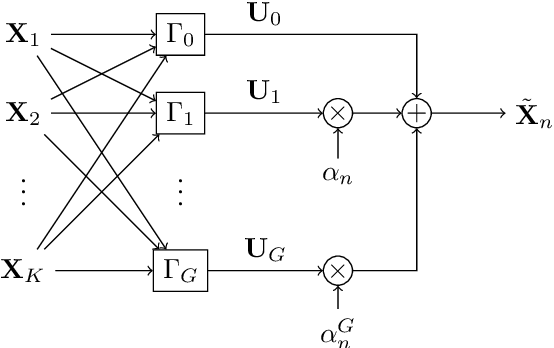

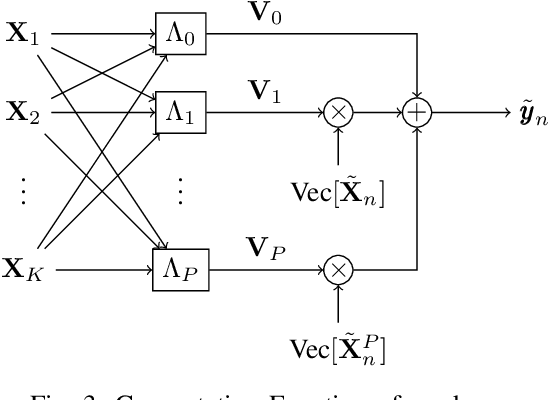

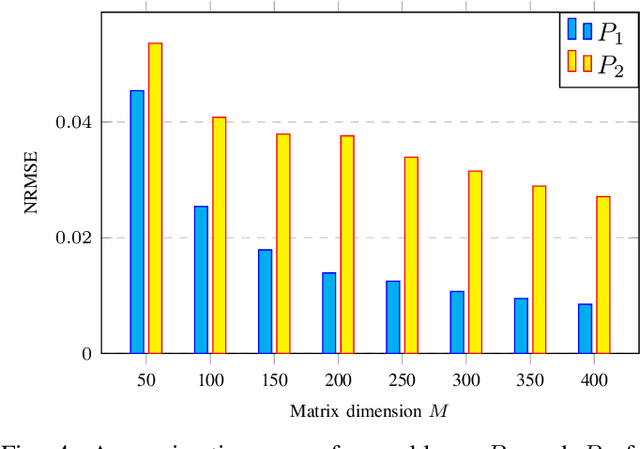

Abstract: Lagrange coded computation (LCC) is essential to solving problems about matrix polynomials in a coded distributed fashion; nevertheless, it can only solve the problems that are representable as matrix polynomials. In this paper, we propose AICC, an AI-aided learning approach that is inspired by LCC but also uses deep neural networks (DNNs). It is appropriate for coded computation of more general functions. Numerical simulations demonstrate the suitability of the proposed approach for the coded computation of different matrix functions that are often utilized in digital signal processing.
