Matthew J. Hoffman

Uncertainty-enabled machine learning for emulation of regional sea-level change caused by the Antarctic Ice Sheet

Jun 21, 2024

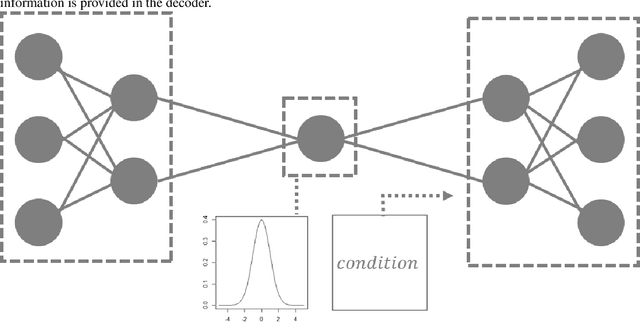

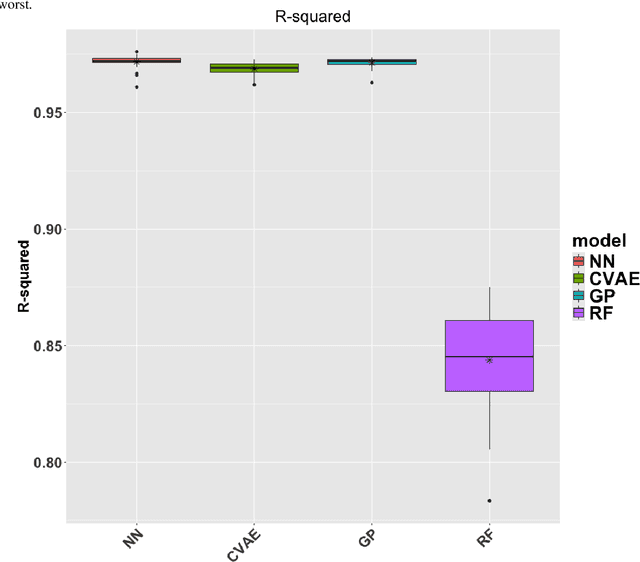

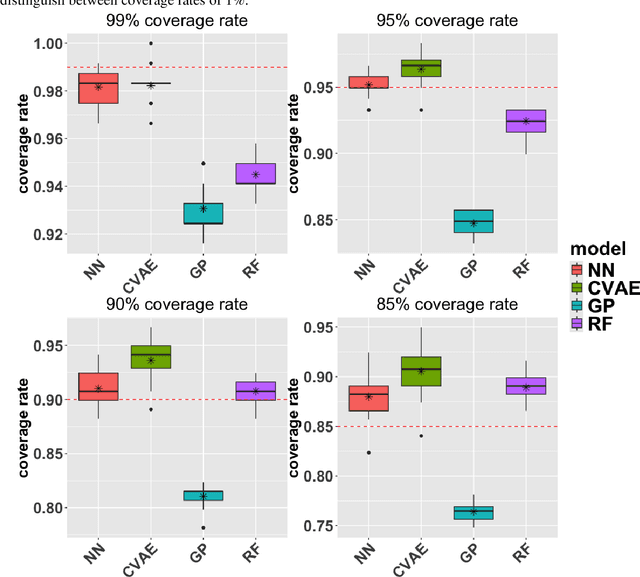

Abstract: Projecting sea-level change in various climate-change scenarios typically involves running forward simulations of the Earth's gravitational, rotational and deformational (GRD) response to ice mass change, which is computationally expensive and time-consuming. Here we build neural-network emulators of sea-level change at 27 coastal locations due to the GRD effects associated with future Antarctic Ice Sheet mass change over the 21st century. The emulators are based on datasets produced using a numerical solver for the static sea-level equation and published ISMIP6-2100 ice-sheet model simulations referenced in the IPCC AR6 report. We show that the neural-network emulators have an accuracy that is competitive with baseline machine learning emulators. To quantify uncertainty, we derive well-calibrated prediction intervals for simulated sea-level change via a linear regression postprocessing technique that uses (nonlinear) machine learning model outputs, a technique that has previously been applied to numerical climate models. We also demonstrate substantial gains in computational efficiency: a feedforward neural-network emulator exhibits a speedup on the order of 100 times compared with the numerical sea-level equation solver that is used for training.
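The abstract does not spell out the postprocessing step, so the following is only a minimal sketch of one common way to turn point predictions into prediction intervals with a linear regression. It assumes a held-out calibration set of emulator outputs paired with solver values, Gaussian residuals, and a normal quantile for the interval width. The function names (`fit_linear_postprocessor`, `prediction_interval`, `empirical_coverage`) are hypothetical and are not taken from the paper.

```python
import numpy as np
from statistics import NormalDist


def fit_linear_postprocessor(emulator_pred, simulator_truth):
    """Fit truth = a + b * emulator_pred + noise by ordinary least squares."""
    x = np.asarray(emulator_pred, dtype=float)
    y = np.asarray(simulator_truth, dtype=float)
    n = x.size
    x_mean = x.mean()
    sxx = np.sum((x - x_mean) ** 2)
    b = np.sum((x - x_mean) * (y - y.mean())) / sxx
    a = y.mean() - b * x_mean
    residuals = y - (a + b * x)
    # Residual standard deviation with two fitted parameters.
    sigma = np.sqrt(np.sum(residuals ** 2) / (n - 2))
    return {"a": a, "b": b, "sigma": sigma, "n": n, "x_mean": x_mean, "sxx": sxx}


def prediction_interval(fit, new_pred, level=0.95):
    """Interval for the simulator value given new emulator outputs.

    Assumption: a normal quantile is used instead of a Student-t quantile,
    which is reasonable only when the calibration set is large.
    """
    x = np.asarray(new_pred, dtype=float)
    center = fit["a"] + fit["b"] * x
    z = NormalDist().inv_cdf(0.5 + level / 2.0)
    spread = np.sqrt(1.0 + 1.0 / fit["n"] + (x - fit["x_mean"]) ** 2 / fit["sxx"])
    half_width = z * fit["sigma"] * spread
    return center - half_width, center + half_width


def empirical_coverage(lower, upper, truth):
    """Fraction of true values that fall inside their intervals."""
    truth = np.asarray(truth, dtype=float)
    return float(np.mean((truth >= lower) & (truth <= upper)))
```

Under these assumptions, intervals are well calibrated when `empirical_coverage` on held-out data is close to the nominal `level`.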

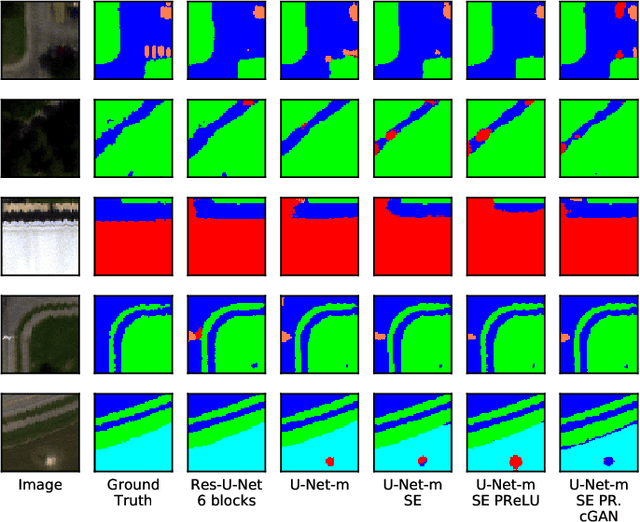

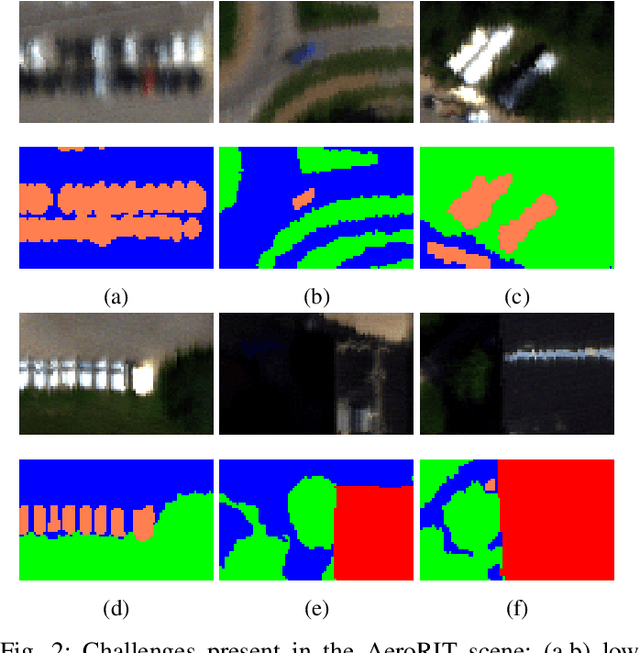

AeroRIT: A New Scene for Hyperspectral Image Analysis

Dec 17, 2019

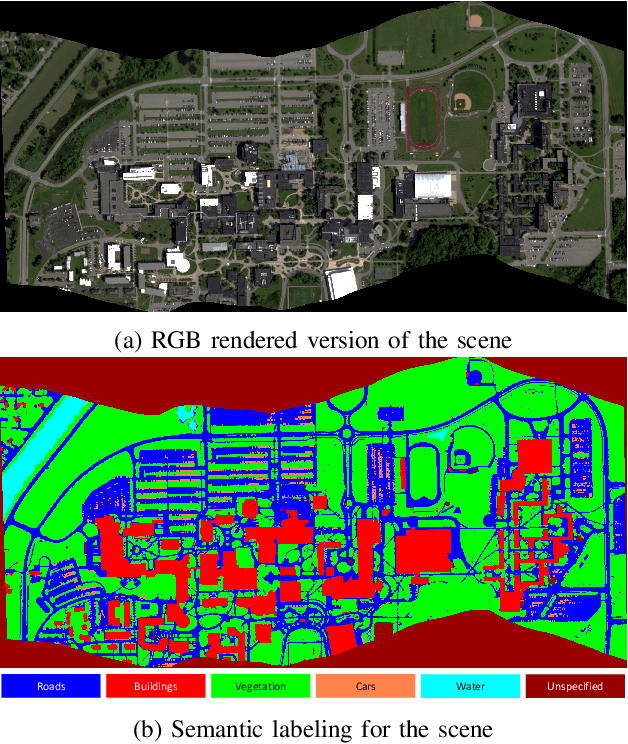

Abstract: Hyperspectral imagery research, such as image super-resolution and image fusion, is often conducted on open-source datasets captured via point-and-shoot camera setups (ICVL, CAVE) that have a high signal-to-noise ratio. In contrast, spectral images captured from aircraft have low spatial resolution and suffer from higher noise interference due to atmospheric conditions. This makes it challenging to extract contextual information from the captured data, as convolutional neural networks are very noise-sensitive and slight atmospheric changes can often lead to a large spread in the spectral values observed for the same object. To understand the challenges faced with aerial spectral data, we collect and label a flight line over the university campus, AeroRIT, and explore the task of semantic segmentation. To the best of our knowledge, this is the first comprehensive large-scale hyperspectral scene with nearly seven million semantic annotations for identifying cars, roads and buildings. We compare the performance of three popular architectures (SegNet, U-Net and Res-U-Net) for scene understanding and object identification. To date, aerial hyperspectral image analysis has been restricted to small datasets with limited capacity for train/test splits. We believe AeroRIT will help advance research in the field with a more complex object distribution.

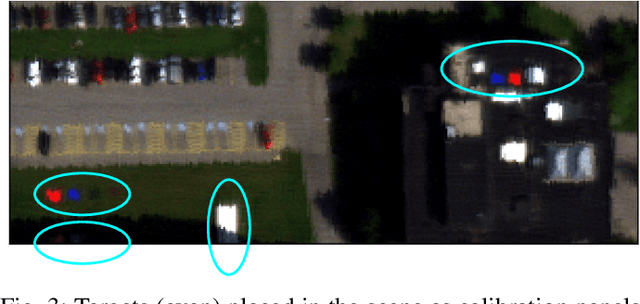

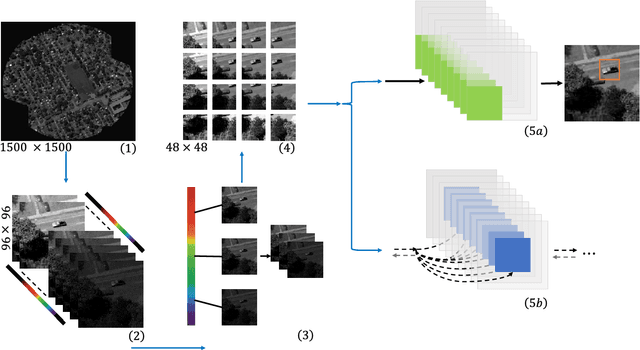

Tracking in Aerial Hyperspectral Videos using Deep Kernelized Correlation Filters

May 06, 2018

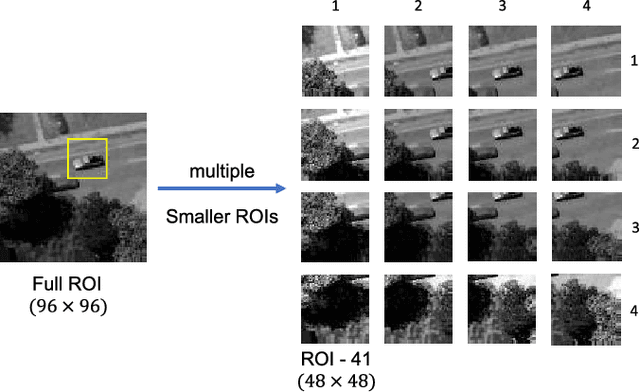

Abstract: Hyperspectral imaging holds enormous potential to improve the state of the art in aerial vehicle tracking at low spatial and temporal resolutions. Recently, adaptive multi-modal hyperspectral sensors have attracted growing interest due to their ability to record extended data quickly from aerial platforms. In this study, we apply popular concepts from traditional object tracking, namely (1) Kernelized Correlation Filters (KCF) and (2) Deep Convolutional Neural Network (CNN) features, to aerial tracking in the hyperspectral domain. We propose the Deep Hyperspectral Kernelized Correlation Filter based tracker (DeepHKCF) to efficiently track aerial vehicles using an adaptive multi-modal hyperspectral sensor. We address low temporal resolution by designing a single KCF-in-multiple Regions-of-Interest (ROIs) approach to cover a reasonably large area. To speed up deep convolutional feature extraction from multiple ROIs, we design an effective ROI mapping strategy. The proposed tracker can also be flexibly coupled with more advanced correlation filter trackers. The DeepHKCF tracker performs exceptionally well with the deep-feature setup on a synthetic hyperspectral video generated by the Digital Imaging and Remote Sensing Image Generation (DIRSIG) software. Additionally, we generate a large, synthetic, single-channel dataset using DIRSIG to perform vehicle classification on the Wide Area Motion Imagery (WAMI) platform. In this way, the high fidelity of the DIRSIG software is demonstrated and a large-scale aerial vehicle classification dataset is released to support studies on vehicle detection and tracking on the WAMI platform.
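For readers unfamiliar with KCF, the sketch below illustrates the standard single-channel Gaussian-kernel formulation: train a filter in the Fourier domain on one patch, then locate the target in a new patch by finding the peak of the response map. It is not the DeepHKCF tracker itself. It omits the deep CNN features, the multiple-ROI design and the ROI mapping strategy described in the abstract, and the function names and parameter values (`sigma`, `lam`, `bandwidth`) are illustrative assumptions.

```python
import numpy as np


def gaussian_kernel_correlation(x, z, sigma=0.5):
    """Gaussian kernel between z and every cyclic shift of x, via FFTs."""
    cross = np.fft.ifft2(np.conj(np.fft.fft2(x)) * np.fft.fft2(z)).real
    dist = (np.sum(x ** 2) + np.sum(z ** 2) - 2.0 * cross) / x.size
    # Clip tiny negative values caused by floating-point round-off.
    return np.exp(-np.maximum(dist, 0.0) / sigma ** 2)


def gaussian_target(shape, bandwidth=2.0):
    """Regression target: Gaussian peak at index (0, 0), wrapping cyclically."""
    rows = np.fft.fftfreq(shape[0]) * shape[0]  # 0, 1, ..., -2, -1
    cols = np.fft.fftfreq(shape[1]) * shape[1]
    rr, cc = np.meshgrid(rows, cols, indexing="ij")
    return np.exp(-(rr ** 2 + cc ** 2) / (2.0 * bandwidth ** 2))


def train(x, lam=1e-4, sigma=0.5):
    """Kernel ridge regression solved in the Fourier domain."""
    k = gaussian_kernel_correlation(x, x, sigma)
    return np.fft.fft2(gaussian_target(x.shape)) / (np.fft.fft2(k) + lam)


def detect(alpha_hat, x, z, sigma=0.5):
    """Return the cyclic shift of z relative to template x, plus the response map."""
    k = gaussian_kernel_correlation(x, z, sigma)
    response = np.fft.ifft2(alpha_hat * np.fft.fft2(k)).real
    peak = np.unravel_index(np.argmax(response), response.shape)
    return tuple(int(p) for p in peak), response
```

In this sketch, training on a patch `x` and calling `detect` on a cyclically shifted copy of `x` should place the response peak at that shift. A practical tracker would additionally apply a cosine window, handle multi-channel features and update the model over time.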
