AeroRIT: A New Scene for Hyperspectral Image Analysis

Dec 17, 2019

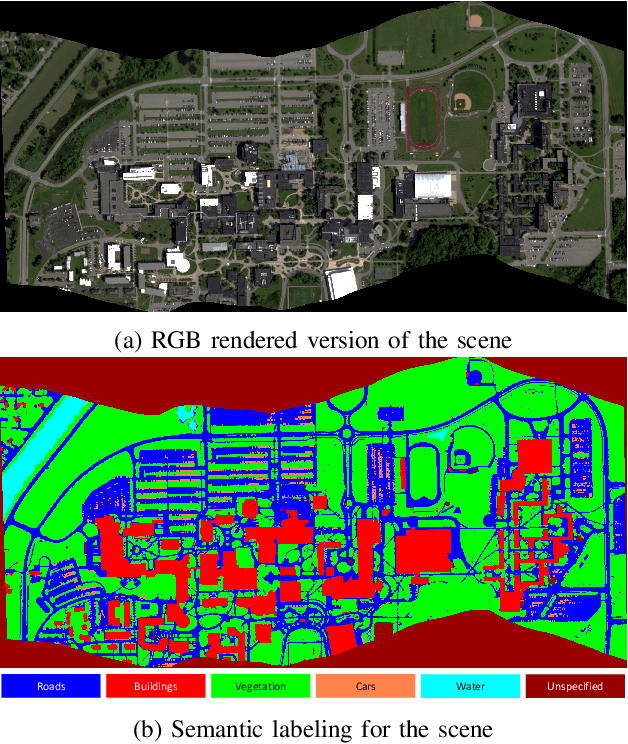

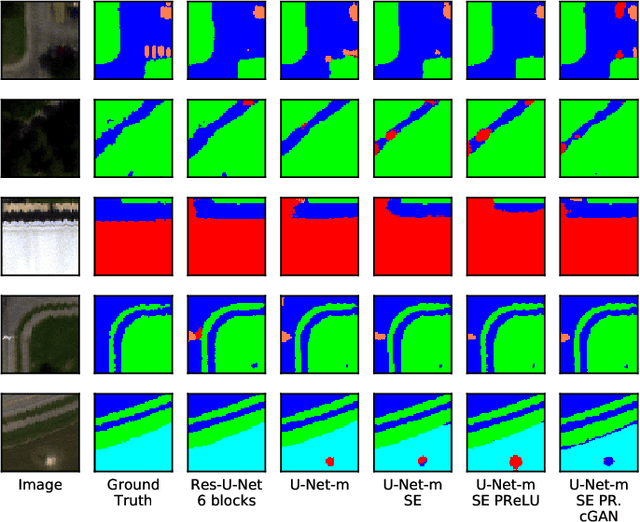

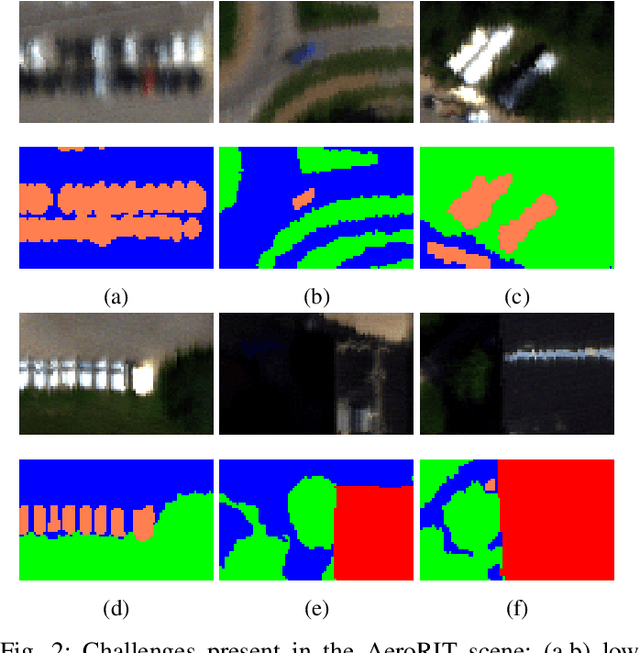

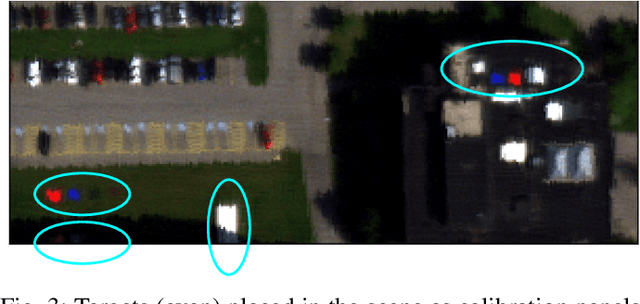

Hyperspectral imagery-oriented research, such as image super-resolution and image fusion, is often conducted on open-source datasets captured with point-and-shoot camera setups (ICVL, CAVE) that have a high signal-to-noise ratio. In contrast, spectral images captured from aircraft have low spatial resolution and suffer from greater noise interference caused by atmospheric conditions. This makes it difficult to extract contextual information from the captured data: convolutional neural networks are highly sensitive to noise, and slight atmospheric changes can produce a wide spread of spectral values for the same object. To understand the challenges posed by aerial spectral data, we collect and label a flight line over the university campus, AeroRIT, and explore the task of semantic segmentation. To the best of our knowledge, this is the first comprehensive large-scale hyperspectral scene with nearly seven million semantic annotations for identifying cars, roads and buildings. We compare the performance of three popular architectures (SegNet, U-Net and Res-U-Net) for scene understanding and object identification. To date, aerial hyperspectral image analysis has been restricted to small datasets that offer limited train/test splits. We believe AeroRIT, with its more complex object distribution, will help advance research in the field.