Matthew Forshaw

A New Self-organizing Interval Type-2 Fuzzy Neural Network for Multi-Step Time Series Prediction

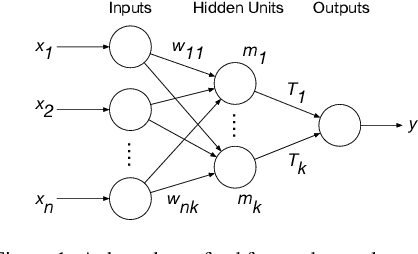

Jul 10, 2024

Abstract: This paper proposes a new self-organizing interval type-2 fuzzy neural network with multiple outputs (SOIT2FNN-MO) for multi-step time series prediction. Differing from the traditional six-layer IT2FNN, a nine-layer network is developed to improve prediction accuracy, uncertainty handling and model interpretability. First, a new co-antecedent layer and a modified consequent layer are devised to improve the interpretability of the fuzzy model for multi-step predictions. Second, a new transformation layer is designed to address the potential issue of vanishing rule firing strength caused by high-dimensional inputs. Third, a new link layer is proposed to build temporal connections between multi-step predictions. Furthermore, a two-stage self-organizing mechanism is developed to automatically generate the fuzzy rules: the first stage creates the rule base from scratch and performs the initial optimization, while the second stage fine-tunes all network parameters. Finally, various simulations are carried out on chaotic and microgrid time series prediction problems, demonstrating the superiority of our approach in terms of prediction accuracy, uncertainty handling and model interpretability.

Insights from the Use of Previously Unseen Neural Architecture Search Datasets

Apr 02, 2024

Abstract: The boundless possibility of neural networks which can be used to solve a problem -- each with different performance -- leads to a situation where a Deep Learning expert is required to identify the best neural network. This goes against the hope of removing the need for experts. Neural Architecture Search (NAS) offers a solution to this by automatically identifying the best architecture. However, to date, NAS work has focused on a small set of datasets which we argue are not representative of real-world problems. We introduce eight new datasets created for a series of NAS Challenges: AddNIST, Language, MultNIST, CIFARTile, Gutenberg, Isabella, GeoClassing, and Chesseract. These datasets and challenges are developed to direct attention to issues in NAS development and to encourage authors to consider how their models will perform on datasets unknown to them at development time. We present experimentation using standard Deep Learning methods as well as the best results from challenge participants.

Predicting the Performance of a Computing System with Deep Networks

Feb 27, 2023

Abstract: Predicting the performance and energy consumption of computing hardware is critical for many modern applications. This will inform procurement decisions, deployment decisions, and autonomic scaling. Existing approaches to understanding the performance of hardware largely focus on benchmarking -- leveraging standardised workloads which seek to be representative of an end-user's needs. Two key challenges are present: benchmark workloads may not be representative of an end-user's workload, and benchmark scores are not easily obtained for all hardware. Within this paper, we demonstrate the potential to build Deep Learning models to predict benchmark scores for unseen hardware. We undertake our evaluation with the openly available SPEC 2017 benchmark results. We evaluate three different networks, one fully-connected network along with two Convolutional Neural Networks (one bespoke and one ResNet inspired), and demonstrate impressive $R^2$ scores of 0.96, 0.98 and 0.94 respectively.
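For readers unfamiliar with the metric quoted above, the following is a minimal sketch of how an $R^2$ (coefficient of determination) score is commonly computed. It is not taken from the paper; the function name `r2_score` and the sample values are illustrative assumptions.

```python
def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot.

    Assumes y_true is not constant (otherwise SS_tot is zero).
    """
    mean_true = sum(y_true) / len(y_true)
    # Residual sum of squares: error of the model's predictions.
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    # Total sum of squares: error of always predicting the mean.
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Hypothetical benchmark scores, purely for illustration.
perfect = r2_score([1.0, 2.0, 3.0], [1.0, 2.0, 3.0])
mean_only = r2_score([1.0, 2.0, 3.0], [2.0, 2.0, 2.0])
```

A score of 1 means the predictions match the targets exactly, while a score of 0 means the model does no better than always predicting the mean of the targets.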

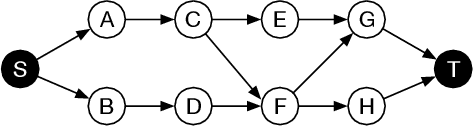

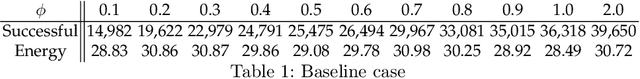

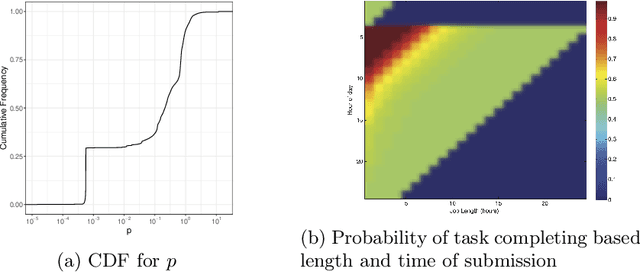

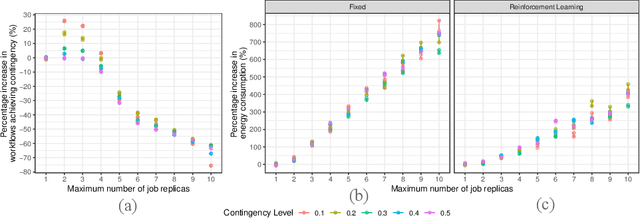

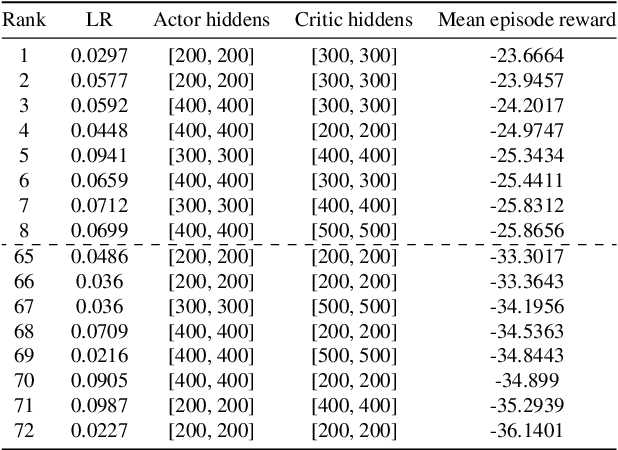

Analysis of Reinforcement Learning for determining task replication in workflows

Sep 14, 2022

Abstract: Executing workflows on volunteer computing resources where individual tasks may be forced to relinquish their resource for the resource's primary use leads to unpredictability and often significantly increases execution time. Task replication is one approach that can ameliorate this challenge. This comes at the expense of a potentially significant increase in system load and energy consumption. We propose the use of Reinforcement Learning (RL) such that a system may 'learn' the 'best' number of replicas to run to increase the number of workflows which complete promptly whilst minimising the additional workload on the system when replicas are not beneficial. We show, through simulation, that we can save 34% of the energy consumption using RL compared to a fixed number of replicas with only a 4% decrease in workflows achieving a pre-defined overhead bound.

Long-term Reproducibility for Neural Architecture Search

Jul 18, 2022

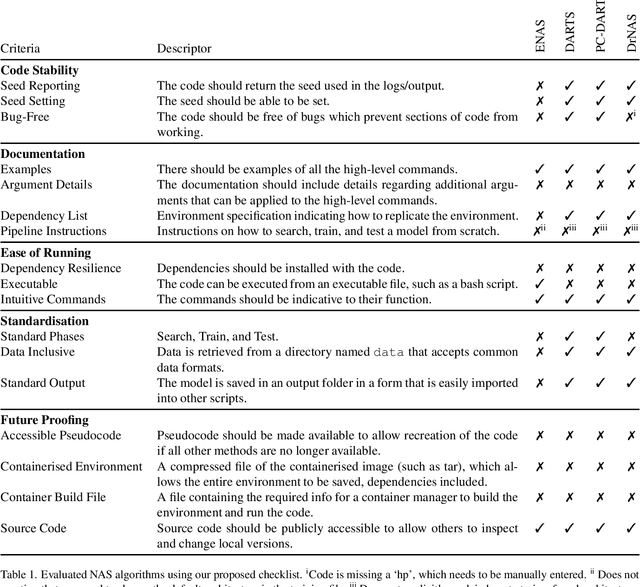

Abstract: It is a sad reflection of modern academia that code is often ignored after publication -- there is no academic 'kudos' for bug fixes / maintenance. Code is often unavailable or, if available, contains bugs, is incomplete, or relies on out-of-date / unavailable libraries. This has a significant impact on reproducibility and general scientific progress. Neural Architecture Search (NAS) is no exception to this, and some prior work has addressed its reproducibility. However, we argue that this work does not consider long-term reproducibility issues. We therefore propose a checklist for long-term NAS reproducibility. We evaluate our checklist against common NAS approaches and propose how these approaches can retrospectively be made more long-term reproducible.

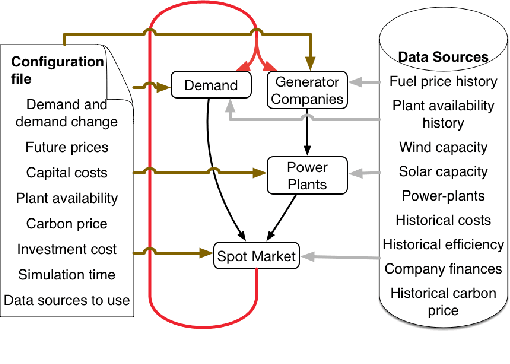

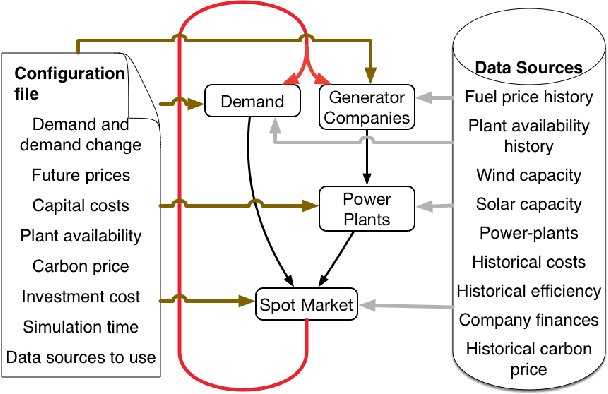

Machine learning applications for electricity market agent-based models: A systematic literature review

Jun 05, 2022

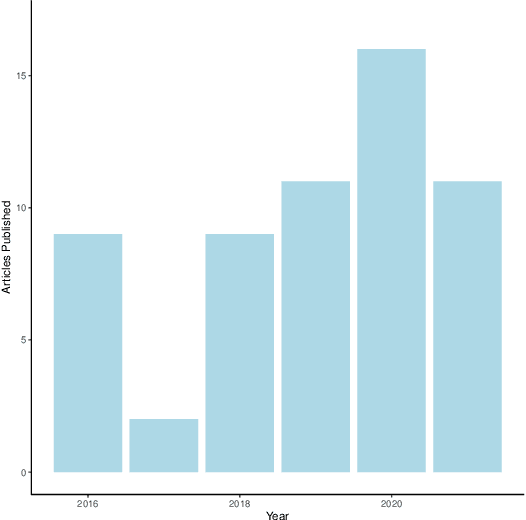

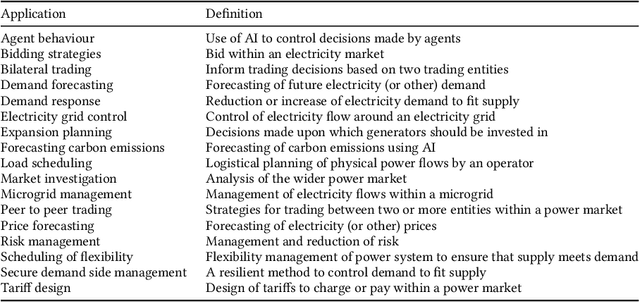

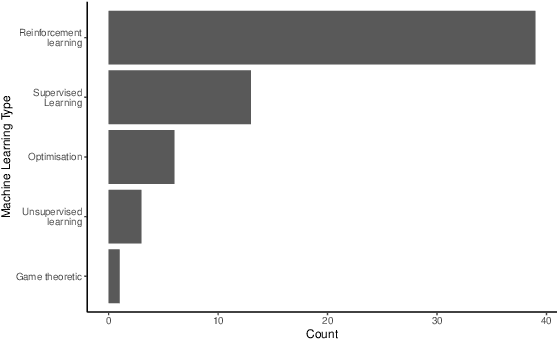

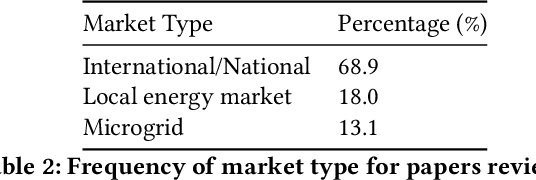

Abstract: The electricity market has a vital role to play in the decarbonisation of the energy system. However, the electricity market is made up of many different variables and data inputs, which sometimes behave in ways that cannot be predicted a priori. It has therefore been suggested that agent-based simulations be used to better understand the dynamics of the electricity market. Agent-based models provide the opportunity to integrate machine learning and artificial intelligence to add intelligence, make better forecasts and control the power market in better and more efficient ways. In this systematic literature review, we review 55 papers published between 2016 and 2021 which focus on machine learning applied to agent-based electricity market models. We find that research clusters around popular topics, such as bidding strategies. However, there exists a long tail of different research applications that could benefit from the intensive research carried out on the more investigated applications.

Optimizing a domestic battery and solar photovoltaic system with deep reinforcement learning

Sep 10, 2021

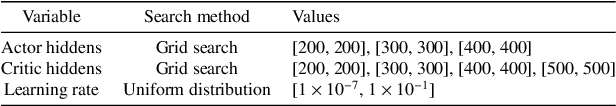

Abstract: Falling costs of batteries and solar PV systems have led to a high uptake of solar battery home systems. In this work, we use the deep deterministic policy gradient algorithm to optimise the charging and discharging behaviour of a battery within such a system. Our approach uses a continuous action space for charging and discharging the battery, and can function well in a stochastic environment. We show good performance of this algorithm by lowering the expenditure of a single household on electricity to almost \$1 AUD for large batteries across selected weeks within a year.

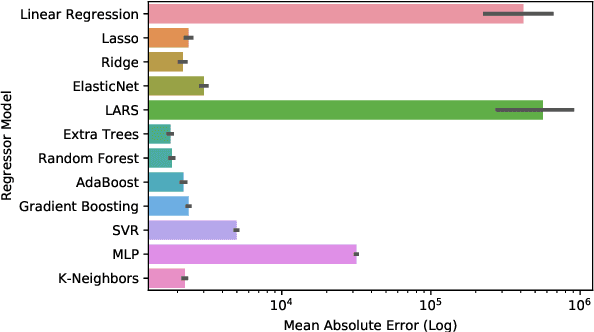

The impact of online machine-learning methods on long-term investment decisions and generator utilization in electricity markets

Mar 07, 2021

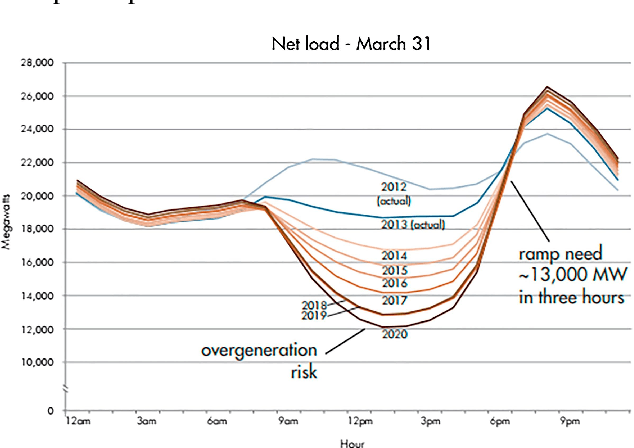

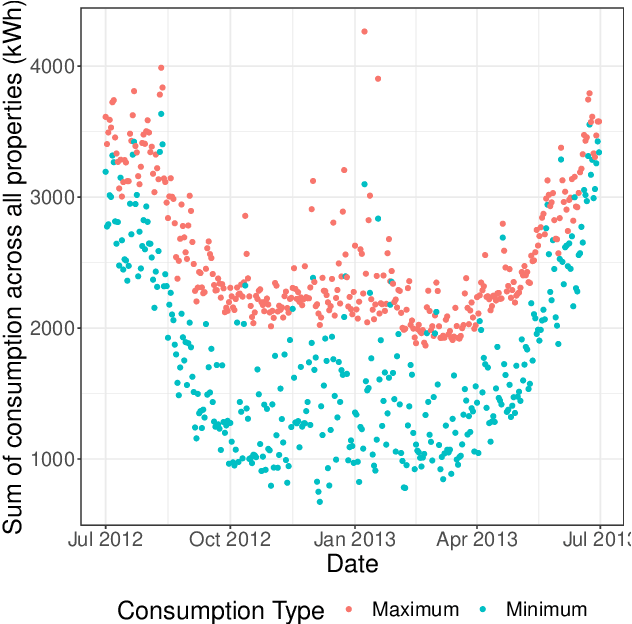

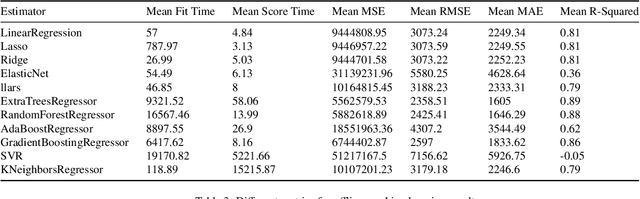

Abstract: Electricity supply must be matched with demand at all times. This helps reduce the chance of load frequency control issues and of electricity blackouts. To gain a better understanding of the load that is likely to be required over the next 24h, estimations under uncertainty are needed. This is especially difficult in a decentralized electricity market with many micro-producers which are not under central control. In this paper, we investigate the impact of eleven offline learning and five online learning algorithms to predict the electricity demand profile over the next 24h. We achieve this through integration within the long-term agent-based model, ElecSim. Through the prediction of electricity demand profile over the next 24h, we can simulate the predictions made for a day-ahead market. Once we have made these predictions, we sample from the residual distributions and perturb the electricity market demand using the simulation, ElecSim. This enables us to understand the impact of errors on the long-term dynamics of a decentralized electricity market. We show we can reduce the mean absolute error by 30% using an online algorithm when compared to the best offline algorithm, whilst reducing the tendered national grid reserve required. This reduction in national grid reserves leads to savings in costs and emissions. We also show that large errors in prediction accuracy have a disproportionate effect on investments made over a 17-year time frame, as well as on the electricity mix.

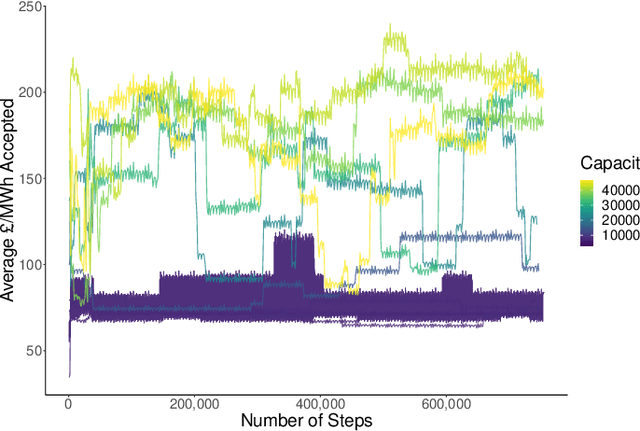

Exploring market power using deep reinforcement learning for intelligent bidding strategies

Nov 08, 2020

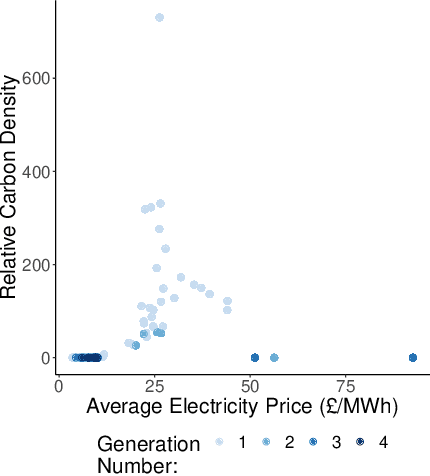

Abstract: Decentralized electricity markets are often dominated by a small set of generator companies who control the majority of the capacity. In this paper, we explore the effect that the total electricity capacity controlled by a single generator company, or a group of generator companies, can have on the average electricity price. We demonstrate this through the use of ElecSim, a simulation of a country-wide energy market. We develop a strategic agent, representing a generation company, which uses a deep deterministic policy gradient reinforcement learning algorithm to bid in a uniform pricing electricity market. A uniform pricing market is one where all players are paid the highest accepted price. ElecSim is parameterized to the United Kingdom for the year 2018. This work can help inform policy on how to best regulate a market to ensure that the price of electricity remains competitive. We find that capacity has an impact on the average electricity price in a single year. If any single generator company, or a collaborating group of generator companies, controls more than ~11% of generation capacity and bids strategically, prices begin to increase by ~25%. The values of ~25% and ~11% may vary between market structures and countries. For instance, different load profiles may favour a particular type of generator or a different distribution of generation capacity. Once the capacity controlled by a generator company which bids strategically is higher than ~35%, prices increase exponentially. We observe that the use of a market cap of approximately double the average market price has the effect of significantly decreasing this effect and maintaining a competitive market. A fair and competitive electricity market provides value to consumers and enables a more competitive economy through the utilisation of electricity by both industry and consumers.
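The uniform pricing rule described in this abstract (all accepted players are paid the highest accepted price) can be illustrated with a minimal sketch. This is not ElecSim code; the function `clear_uniform_price`, the bid tuple layout, and the example bids are hypothetical, and the sketch assumes simple merit-order dispatch with no network or ramping constraints.

```python
def clear_uniform_price(bids, demand_mw):
    """Clear a uniform pricing market by merit order.

    bids: list of (generator_name, price_per_mwh, capacity_mw) tuples.
    Returns (clearing_price, accepted), where accepted is a list of
    (generator_name, dispatched_mw). Every accepted generator is paid
    the clearing price, i.e. the highest accepted bid price.
    If total capacity is below demand, demand is only partly met.
    """
    accepted = []
    remaining = demand_mw
    clearing_price = None
    # Accept the cheapest bids first until demand is covered.
    for name, price, capacity in sorted(bids, key=lambda b: b[1]):
        if remaining <= 0:
            break
        dispatched = min(capacity, remaining)
        accepted.append((name, dispatched))
        clearing_price = price  # the last accepted bid sets the price
        remaining -= dispatched
    return clearing_price, accepted


# Hypothetical bids, purely for illustration.
example_bids = [("A", 30.0, 50), ("B", 45.0, 50), ("C", 80.0, 50)]
price, dispatch = clear_uniform_price(example_bids, demand_mw=80)
```

In this example generator A is paid the same price as the marginal generator B, which is why a strategic bidder controlling enough capacity to set the marginal bid can raise the price received by every accepted generator.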

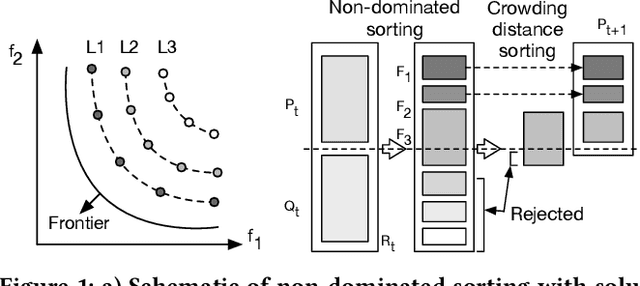

Optimizing carbon tax for decentralized electricity markets using an agent-based model

May 28, 2020

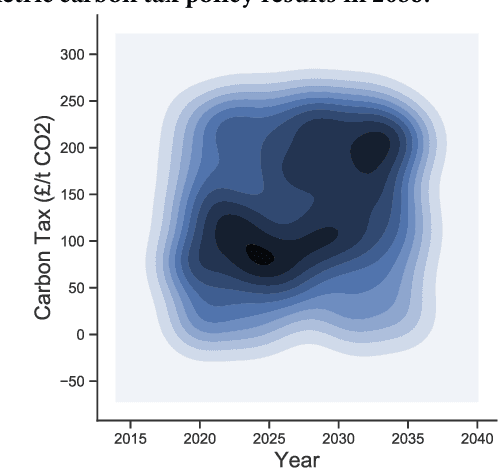

Abstract: Averting the effects of anthropogenic climate change requires a transition from fossil fuels to low-carbon technology. A way to achieve this is to decarbonize the electricity grid. However, further efforts must be made in other fields such as transport and heating for full decarbonization. This would reduce carbon emissions due to electricity generation, and also help to decarbonize other sources such as automotive and heating by enabling a low-carbon alternative. Carbon taxes have been shown to be an efficient way to aid in this transition. In this paper, we demonstrate how to find optimal carbon tax policies through a genetic algorithm approach, using the electricity market agent-based model ElecSim. To achieve this, we use the NSGA-II genetic algorithm to minimize average electricity price and relative carbon intensity of the electricity mix. We demonstrate that it is possible to find a range of carbon taxes to suit differing objectives. Our results show that we are able to minimize electricity cost to below £10/MWh as well as carbon intensity to zero in every case. In terms of the optimal carbon tax strategy, we found that an increasing strategy between 2020 and 2035 was preferable. Each of the Pareto-front optimal tax strategies is above £81/tCO2 for every year. The mean carbon tax strategy was £240/tCO2.
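The notion of a Pareto front over two minimized objectives (here, electricity price and carbon intensity) can be illustrated with a minimal sketch. This is only the non-dominated filtering step, not NSGA-II itself and not the paper's code; the function names and the candidate values are hypothetical.

```python
def dominates(a, b):
    """True if a is no worse than b in every objective and strictly
    better in at least one (all objectives are minimized)."""
    return (all(x <= y for x, y in zip(a, b))
            and any(x < y for x, y in zip(a, b)))


def pareto_front(points):
    """Return the points that no other point dominates."""
    return [p for p in points
            if not any(dominates(q, p) for q in points)]


# Hypothetical (price, carbon intensity) pairs, purely for illustration.
candidates = [(10, 5), (8, 7), (12, 4), (11, 6), (9, 9)]
front = pareto_front(candidates)
```

Each point on the front represents a different trade-off between the two objectives, which is consistent with the abstract's remark that a range of carbon taxes can suit differing objectives.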
