Matthew Choi

Large Language Models on Lexical Semantic Change Detection: An Evaluation

Dec 10, 2023

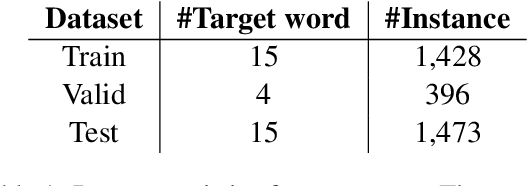

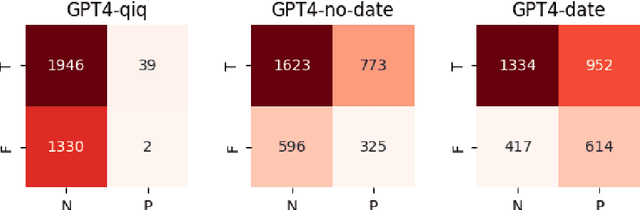

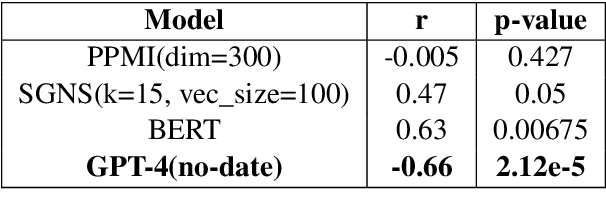

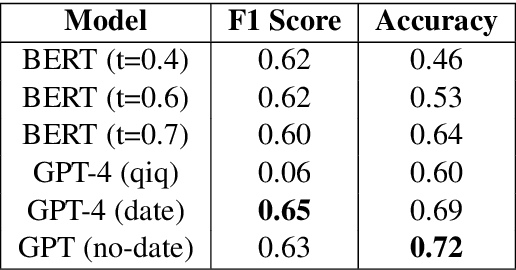

Abstract: Lexical Semantic Change Detection stands out as one of the few areas where Large Language Models (LLMs) have not been extensively involved. Traditional methods like PPMI and SGNS remain prevalent in research, alongside newer BERT-based approaches. Although LLMs now cover most natural language processing domains, there is a notable scarcity of literature concerning their application in this specific area. In this work, we seek to bridge this gap by introducing LLMs into the domain of Lexical Semantic Change Detection. Our work presents novel prompting solutions and a comprehensive evaluation that spans all three generations of language models, contributing to the exploration of LLMs in this research area.

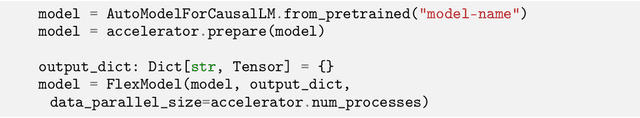

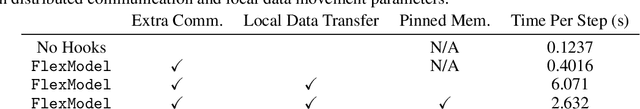

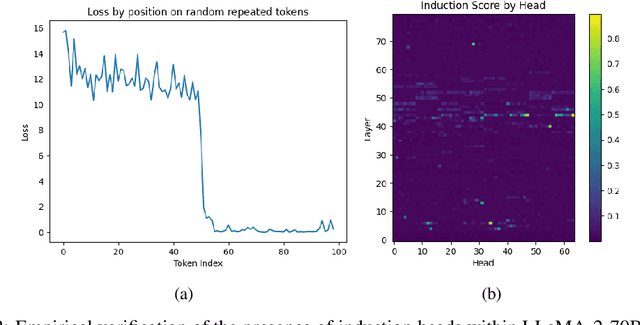

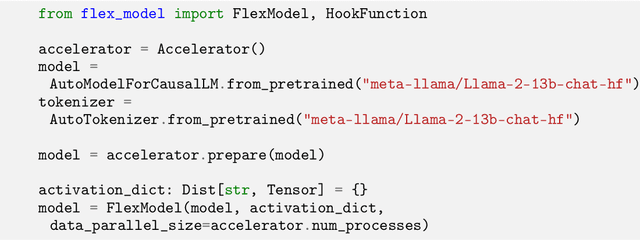

FlexModel: A Framework for Interpretability of Distributed Large Language Models

Dec 05, 2023

Abstract: With the growth of large language models, now incorporating billions of parameters, the hardware prerequisites for their training and deployment have seen a corresponding increase. Although existing tools facilitate model parallelization and distributed training, deeper model interactions, crucial for interpretability and responsible AI techniques, still demand thorough knowledge of distributed computing. This often hinders contributions from researchers with machine learning expertise but limited distributed computing background. Addressing this challenge, we present FlexModel, a software package providing a streamlined interface for engaging with models distributed across multi-GPU and multi-node configurations. The library is compatible with existing model distribution libraries and encapsulates PyTorch models. It exposes user-registerable HookFunctions to facilitate straightforward interaction with distributed model internals, bridging the gap between distributed and single-device model paradigms. Primarily, FlexModel enhances accessibility by democratizing model interactions and promotes more inclusive research in the domain of large-scale neural networks. The package is available at https://github.com/VectorInstitute/flex_model.
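For readers unfamiliar with hook-based access to model internals, the single-device pattern that the abstract contrasts with the distributed case can be sketched with plain PyTorch. This is not the FlexModel API: it uses only `torch.nn.Module.register_forward_hook`, and the toy model, the `captured` dictionary, and the `save_activation` function are hypothetical names chosen for illustration.

```python
import torch
import torch.nn as nn

# Hypothetical toy model standing in for a much larger network.
model = nn.Sequential(nn.Linear(4, 8), nn.ReLU(), nn.Linear(8, 2))

# Illustrative container for activations grabbed by the hook.
captured = {}

def save_activation(module, inputs, output):
    # Store a detached copy so the hook does not keep the autograd graph alive.
    captured["hidden"] = output.detach().clone()

# Plain PyTorch forward hook on the ReLU layer (not a FlexModel HookFunction).
handle = model[1].register_forward_hook(save_activation)

x = torch.randn(3, 4)
y = model(x)

# Remove the hook once the activation has been captured.
handle.remove()
```

On one device this is enough to read an intermediate activation. When the layer is sharded across GPUs or nodes, each process only sees its own shard, which is the gap the abstract says FlexModel is meant to bridge.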

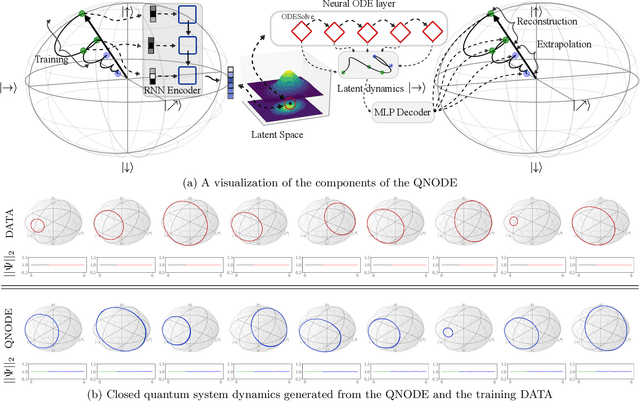

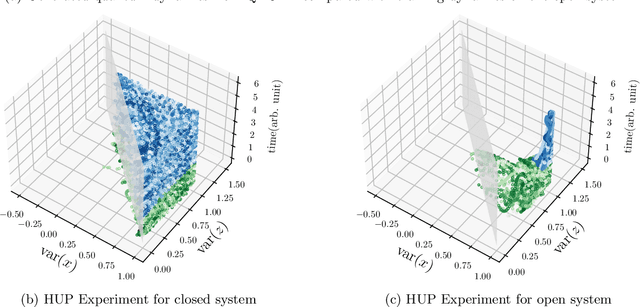

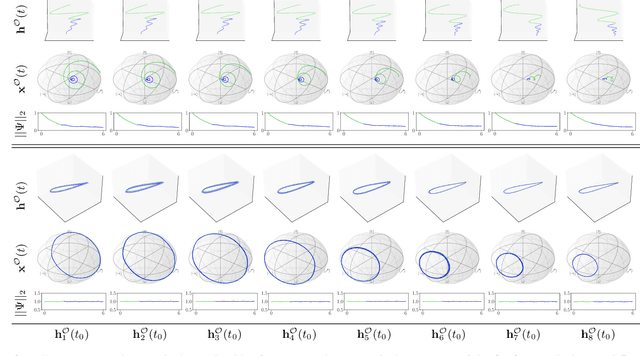

Learning quantum dynamics with latent neural ODEs

Oct 20, 2021

Abstract: The core objective of machine-assisted scientific discovery is to learn physical laws from experimental data without prior knowledge of the systems in question. In the area of quantum physics, making progress towards these goals is significantly more challenging due to the curse of dimensionality as well as the counter-intuitive nature of quantum mechanics. Here, we present the QNODE, a latent neural ODE trained on dynamics from closed and open quantum systems. The QNODE can learn to generate quantum dynamics, including extrapolations outside of its training region, that satisfy the von Neumann and time-local Lindblad master equations for closed and open quantum systems, respectively. Furthermore, the QNODE rediscovers quantum mechanical laws such as Heisenberg's uncertainty principle in a totally data-driven way, without constraints or guidance. Additionally, we show that trajectories that are generated from the QNODE and are close in its latent space have similar quantum dynamics while preserving the physics of the training system.
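For reference, the two master equations named in the abstract have the following standard textbook forms. The abstract does not write them out, so the notation here is an assumption: $\rho$ is the density matrix, $H$ the Hamiltonian, $L_k$ the jump operators, and $\gamma_k(t)$ the possibly time-dependent decay rates of the time-local form.

```latex
% von Neumann equation (closed system)
\frac{d\rho}{dt} = -\frac{i}{\hbar}\,[H, \rho]

% Time-local Lindblad master equation (open system)
\frac{d\rho}{dt} = -\frac{i}{\hbar}\,[H, \rho]
  + \sum_k \gamma_k(t) \left( L_k \rho L_k^\dagger
  - \frac{1}{2} \left\{ L_k^\dagger L_k, \rho \right\} \right)
```

Setting every $\gamma_k(t)$ to zero in the second equation recovers the first, which is why the closed-system dynamics are a special case of the open-system ones.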
