Martin Robert

Multi-Objective Algorithms for Learning Open-Ended Robotic Problems

Nov 11, 2024

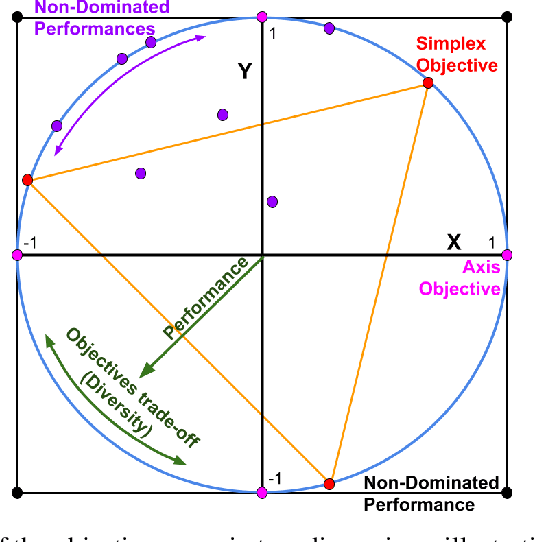

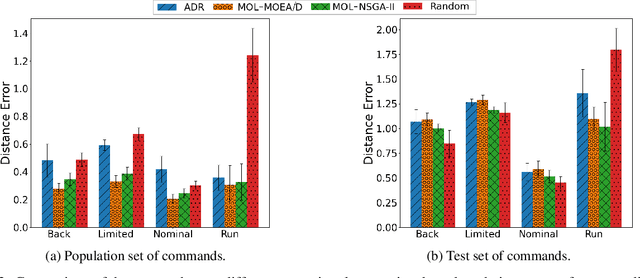

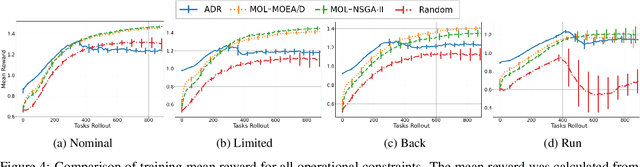

Abstract: Quadrupedal locomotion is a complex, open-ended problem vital to expanding the reach of autonomous vehicles. Traditional reinforcement learning approaches often fall short due to training instability and sample inefficiency. We propose a novel method, which we call Multi-Objective Learning (MOL), that uses multi-objective evolutionary algorithms as an automatic curriculum learning mechanism. Our approach enhances the learning process by projecting velocity commands into an objective space and optimizing for both performance and diversity. Tested within the MuJoCo physics simulator, our method demonstrates superior stability and adaptability compared to baseline approaches. In difficult scenarios, it made 19% and 44% fewer errors than our best baseline algorithm under a uniform and a tailored evaluation, respectively. This work introduces a robust framework for training quadrupedal robots, promising significant advancements in robotic locomotion and open-ended robotic problems.

Tree bark re-identification using a deep-learning feature descriptor

Dec 06, 2019

Abstract: The ability to visually re-identify objects is a fundamental capability in vision systems. Oftentimes, it relies on collections of visual signatures based on descriptors, such as Scale Invariant Feature Transform (SIFT) or Speeded Up Robust Features (SURF). However, these traditional descriptors were designed for a certain domain of surface appearances and geometries (limited relief). Consequently, highly textured surfaces such as tree bark pose a challenge to them. In turn, this makes it more difficult to use trees as identifiable landmarks for navigational purposes (robotics) or to track felled lumber along a supply chain (logistics). We thus propose to use data-driven descriptors trained on bark images for tree surface re-identification. To this effect, we collected a large dataset containing 2,400 bark images with strong illumination changes, annotated by surface and with the ability to pixel-align them. We used this dataset to sample from more than 2 million 64x64 pixel patches to train our novel local descriptors DeepBark and SqueezeBark. Our DeepBark method has shown a clear advantage over the hand-crafted descriptors SIFT and SURF. Furthermore, we demonstrated that DeepBark can reach a Precision@1 of 99.8% in a database of 7,900 images with only 11 relevant images. Our work thus suggests that re-identifying tree surfaces in a challenging context is possible, while making public a new dataset.
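For readers unfamiliar with the Precision@1 metric quoted in the abstract, the following is a minimal sketch of how such a score is commonly computed: each query is matched to its nearest database entry, and the score is the fraction of queries whose top match carries the correct label. The function name `precision_at_1`, the toy 2-D descriptors, and the `tree_*` labels are all hypothetical, and the Euclidean nearest-neighbour rule is an assumption; this is not the authors' evaluation pipeline.

```python
import math


def precision_at_1(queries, database):
    """Fraction of queries whose nearest database entry has the same label.

    Both arguments are lists of (descriptor, surface_id) pairs. This is a
    hypothetical helper for illustration, not code from the paper.
    """
    hits = 0
    for q_desc, q_id in queries:
        # Assumption: similarity is plain Euclidean distance between descriptors.
        _, best_id = min(database, key=lambda entry: math.dist(q_desc, entry[0]))
        hits += best_id == q_id
    return hits / len(queries)


# Toy data: three database surfaces and three queries, one of which
# lies closest to the wrong surface.
database = [((0.0, 0.0), "tree_a"), ((1.0, 1.0), "tree_b"), ((5.0, 5.0), "tree_c")]
queries = [((0.1, -0.1), "tree_a"), ((0.9, 1.2), "tree_b"), ((0.4, 0.4), "tree_c")]

score = precision_at_1(queries, database)
print(f"Precision@1 = {score:.3f}")
```

In this toy example two of the three queries retrieve the correct surface first. With only 11 relevant images among 7,900, as in the abstract, a random top match would almost always be wrong, which is why a high Precision@1 is a demanding result.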
