Martin Bach

2D Car Detection in Radar Data with PointNets

Apr 17, 2019

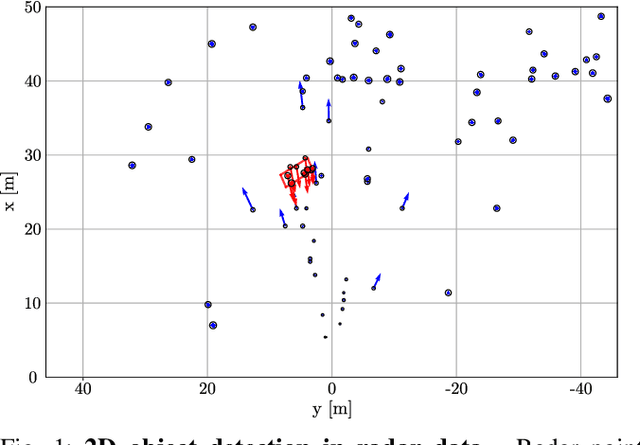

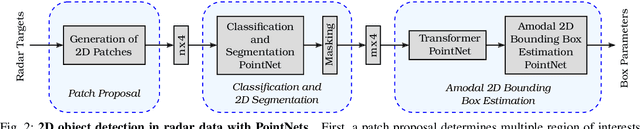

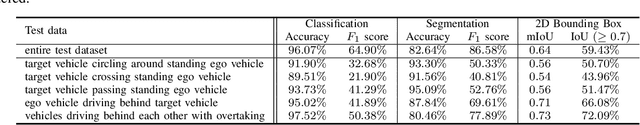

Abstract: For many automated driving functions, highly accurate perception of the vehicle environment is a crucial prerequisite. Modern high-resolution radar sensors generate multiple radar targets per object, which makes these sensors particularly suitable for the 2D object detection task. This work presents an approach that detects object hypotheses from sparse radar data alone using PointNets. The methods presented in the literature so far perform either object classification or bounding box estimation. In contrast, this method performs classification together with bounding box estimation using a single radar sensor. To this end, PointNets are adapted to radar data, performing 2D object classification with segmentation and 2D bounding box regression in order to estimate an amodal bounding box. The algorithm is evaluated on an automatically created dataset which consists of various realistic driving maneuvers. The results show the great potential of object detection in high-resolution radar data using PointNets.

Object Detection on Dynamic Occupancy Grid Maps Using Deep Learning and Automatic Label Generation

Jan 30, 2018

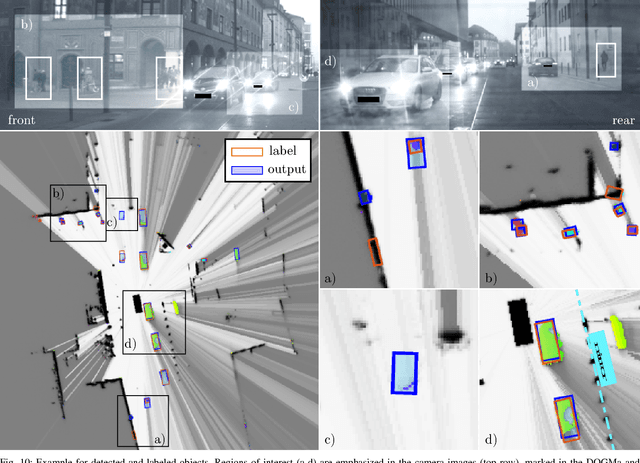

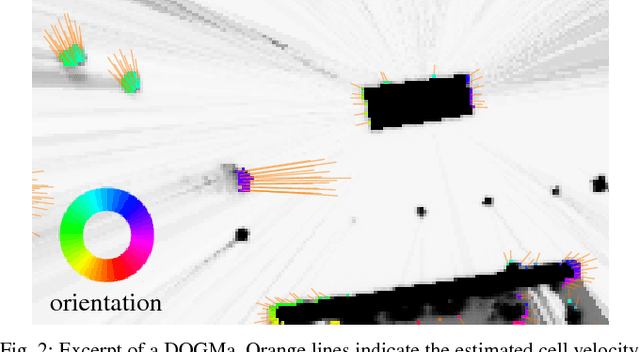

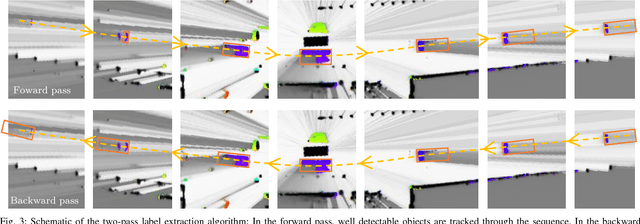

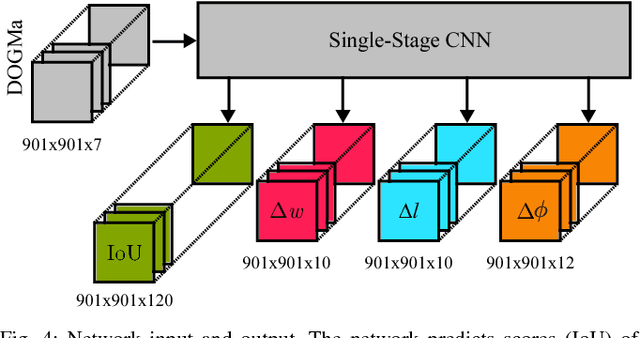

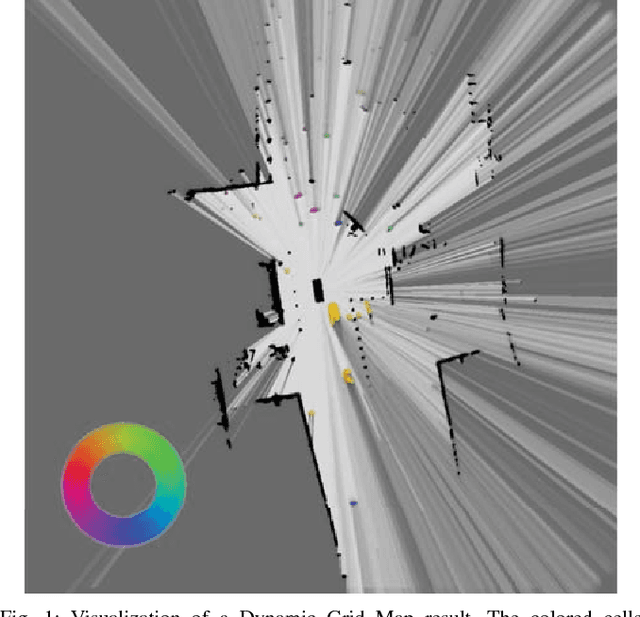

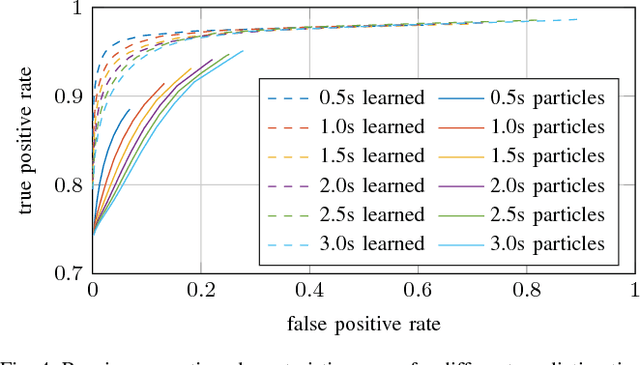

Abstract: We tackle the problem of object detection and pose estimation in a shared space downtown environment. For perception, multiple laser scanners with 360° coverage were fused in a dynamic occupancy grid map (DOGMa). A single-stage deep convolutional neural network is trained to provide object hypotheses comprising shape, position, orientation and an existence score from a single input DOGMa. Furthermore, an algorithm for offline object extraction was developed to automatically label several hours of training data. The algorithm is based on a two-pass trajectory extraction, forward and backward in time. As is typical for engineered algorithms, the automatic label generation suffers from misdetections, which makes hard negative mining impractical. Therefore, we propose a loss function counteracting the high imbalance between mostly static background and extremely rare dynamic grid cells. Experiments indicate that the trained network has good generalization capabilities, since it detects objects occasionally lost by the label algorithm. Evaluation reaches an average precision (AP) of 75.9%.

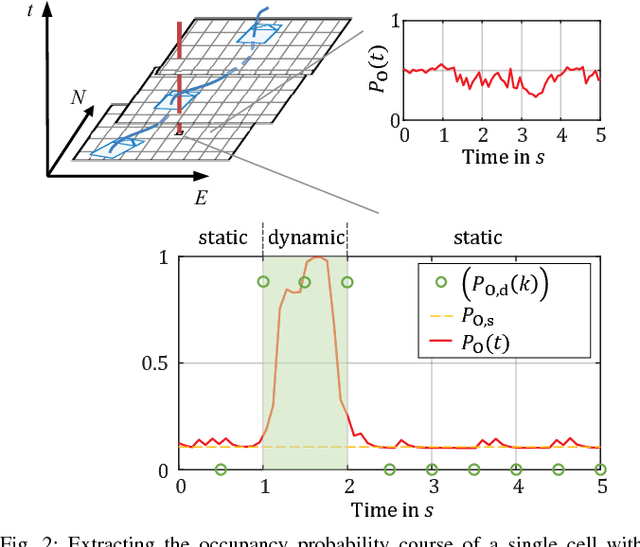

Dynamic Occupancy Grid Prediction for Urban Autonomous Driving: A Deep Learning Approach with Fully Automatic Labeling

Nov 07, 2017

Abstract: Long-term situation prediction plays a crucial role in the development of intelligent vehicles. A major challenge still to overcome is the prediction of complex downtown scenarios with multiple road users, e.g., pedestrians, bikes, and motor vehicles, interacting with each other. This contribution tackles this challenge by combining a Bayesian filtering technique for environment representation with machine learning as a long-term predictor. More specifically, a dynamic occupancy grid map is used as input to a deep convolutional neural network. This yields the advantage of using spatially distributed velocity estimates from a single time step for prediction, rather than a raw data sequence, alleviating common problems in dealing with input time series from multiple sensors. Furthermore, convolutional neural networks inherently use context information, enabling the implicit modeling of road user interaction. Pixel-wise balancing is applied in the loss function to counteract the extreme imbalance between static and dynamic cells. One of the major advantages is the unsupervised learning character due to fully automatic label generation. The presented algorithm is trained and evaluated on multiple hours of recorded sensor data and compared to a Monte Carlo simulation.
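
To illustrate what pixel-wise balancing in a loss function can look like, here is a minimal sketch of a class-balanced binary cross-entropy over a grid of cells. It is not the loss used in the paper: the abstract does not state the exact weighting, so the inverse-frequency weights, the function name `balanced_pixelwise_bce`, and the binary static/dynamic labeling are all assumptions made for illustration.

```python
import math


def balanced_pixelwise_bce(pred, target, eps=1e-7):
    """Class-balanced binary cross-entropy over a 2D grid (illustrative only).

    pred:   rows of predicted probabilities that a cell is dynamic, in [0, 1]
    target: rows of labels, 0 for a static cell and 1 for a dynamic cell
    """
    flat_pred = [p for row in pred for p in row]
    flat_target = [t for row in target for t in row]

    n_total = len(flat_target)
    n_dynamic = sum(flat_target)
    n_static = n_total - n_dynamic

    # Assumed weighting: inverse class frequency, so that both classes
    # contribute equally to the loss regardless of how rare one of them is.
    w_dynamic = n_total / (2 * n_dynamic) if n_dynamic else 0.0
    w_static = n_total / (2 * n_static) if n_static else 0.0

    total = 0.0
    for p, t in zip(flat_pred, flat_target):
        p = min(max(p, eps), 1 - eps)  # clamp to avoid log(0)
        if t == 1:
            total += -w_dynamic * math.log(p)
        else:
            total += -w_static * math.log(1 - p)
    return total / n_total


# One dynamic cell among three static cells.
target = [[0, 0], [0, 1]]
missed_dynamic = [[0.1, 0.1], [0.1, 0.1]]  # network predicts "all static"
false_alarm = [[0.9, 0.1], [0.1, 0.9]]     # finds the object, one false positive

loss_missed = balanced_pixelwise_bce(missed_dynamic, target)
loss_false_alarm = balanced_pixelwise_bce(false_alarm, target)
```

With these weights, predicting "all static" is penalized more heavily than a prediction that finds the dynamic cell at the cost of one false positive, which is the behavior one wants when dynamic cells are rare. An unweighted loss would reward the trivial all-static prediction far more as the share of static cells grows.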
