Mark H. M. Winands

Asymmetric regularization mechanism for GAN training with Variational Inequalities

Jan 20, 2026

Abstract: We formulate the training of generative adversarial networks (GANs) as a Nash equilibrium seeking problem. To stabilize the training process and find a Nash equilibrium, we propose an asymmetric regularization mechanism based on the classic Tikhonov step and on a novel zero-centered gradient penalty. Under smoothness and a local identifiability condition induced by a Gauss-Newton Gramian, we obtain explicit Lipschitz and (strong)-monotonicity constants for the regularized operator. These constants ensure last-iterate linear convergence of a single-call Extrapolation-from-the-Past (EFTP) method. Empirical simulations on an academic example show that, even when strong monotonicity cannot be achieved, the asymmetric regularization is enough to converge to an equilibrium and stabilize the trajectory.
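As a rough illustration of the single-call idea, the sketch below runs Extrapolation-from-the-Past on the toy bilinear game min_x max_y x*y with a Tikhonov term added to the game operator. The toy game, the function names, the step size, and the per-player weights `lam_x` and `lam_y` are all assumptions made for this example. The sketch does not reproduce the paper's asymmetric mechanism or its zero-centered gradient penalty.

```python
import math

def regularized_operator(x, y, lam_x=0.1, lam_y=0.0):
    """Operator of min_x max_y x*y plus Tikhonov terms.

    lam_x and lam_y are hypothetical weights chosen for illustration.
    """
    return (y + lam_x * x, -x + lam_y * y)

def eftp(x, y, step=0.1, iters=2000, operator=regularized_operator):
    """Extrapolation-from-the-Past: one operator call per iteration."""
    # Initialise the stored "past" evaluation at the starting point.
    gx, gy = operator(x, y)
    for _ in range(iters):
        # Extrapolate using the evaluation kept from the previous iteration.
        hx, hy = x - step * gx, y - step * gy
        # The only operator call of this iteration, at the extrapolated point.
        gx, gy = operator(hx, hy)
        # Update the iterate with the fresh evaluation.
        x, y = x - step * gx, y - step * gy
    return x, y

x, y = eftp(1.0, 1.0)
print("distance to the equilibrium (0, 0):", math.hypot(x, y))
```

The point of the single call is that the extrapolation step reuses the evaluation from the previous iteration, whereas the classical extragradient method evaluates the operator twice per iteration.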

Variational Quantum Algorithms for Particle Track Reconstruction

Nov 14, 2025

Abstract: Quantum Computing is a rapidly developing field with the potential to tackle the increasing computational challenges faced in high-energy physics. In this work, we explore the potential and limitations of variational quantum algorithms in solving the particle track reconstruction problem. We present an analysis of two distinct formulations for identifying straight-line tracks in a multilayer detection system, inspired by the LHCb vertex detector. The first approach is formulated as a ground-state energy problem, while the second approach is formulated as a system of linear equations. This work addresses one of the main challenges when dealing with variational quantum algorithms on general problems, namely designing an expressive and efficient quantum ansatz working on tracking events with fixed detector geometry. For this purpose, we employed a quantum architecture search method based on Monte Carlo Tree Search to design the quantum circuits for different problem sizes. We provide experimental results to test our approach on both formulations for different problem sizes in terms of performance and computational cost.

Generalized Proof-Number Monte-Carlo Tree Search

Jun 16, 2025

Abstract: This paper presents Generalized Proof-Number Monte-Carlo Tree Search: a generalization of recently proposed combinations of Proof-Number Search (PNS) with Monte-Carlo Tree Search (MCTS), which use (dis)proof numbers to bias UCB1-based Selection strategies towards parts of the search that are expected to be easily (dis)proven. We propose three core modifications of prior combinations of PNS with MCTS. First, we track proof numbers per player. This reduces code complexity in the sense that we no longer need disproof numbers, and generalizes the technique to be applicable to games with more than two players. Second, we propose and extensively evaluate different methods of using proof numbers to bias the selection strategy, achieving strong performance with strategies that are simpler to implement and compute. Third, we merge our technique with Score Bounded MCTS, enabling the algorithm to prove and leverage upper and lower bounds on scores - as opposed to only proving wins or not-wins. Experiments demonstrate substantial performance increases, reaching the range of 80% for 8 out of the 11 tested board games.

Towards Explaining Monte-Carlo Tree Search by Using Its Enhancements

Jun 16, 2025

Abstract: Typically, research on Explainable Artificial Intelligence (XAI) focuses on black-box models within the context of a general policy in a known, specific domain. This paper advocates for the need for knowledge-agnostic explainability applied to the subfield of XAI called Explainable Search, which focuses on explaining the choices made by intelligent search techniques. It proposes Monte-Carlo Tree Search (MCTS) enhancements as a solution to obtaining additional data and providing higher-quality explanations while remaining knowledge-free, and analyzes the most popular enhancements in terms of the specific types of explainability they introduce. So far, no other research has considered the explainability of MCTS enhancements. We present a proof-of-concept that demonstrates the advantages of utilizing enhancements.

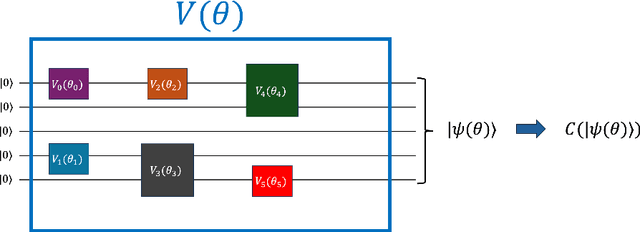

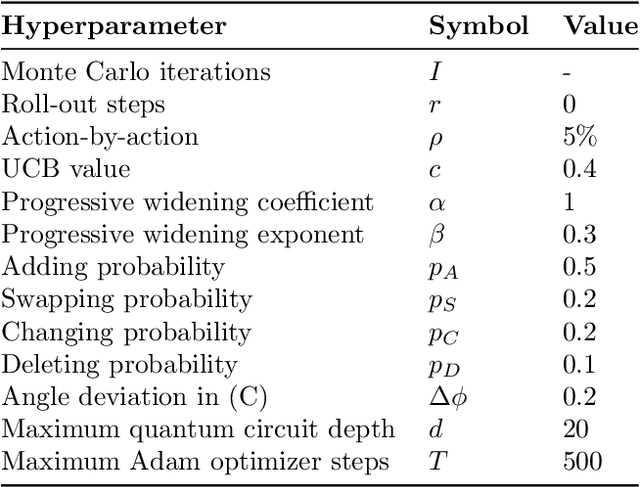

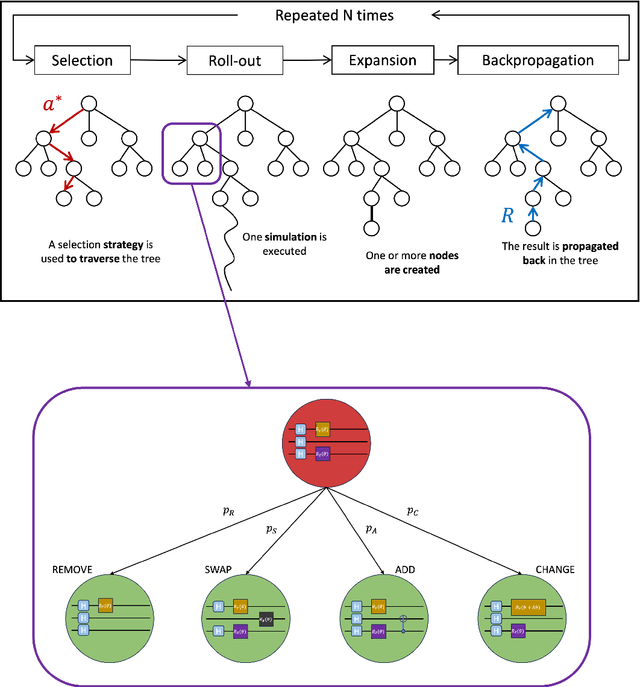

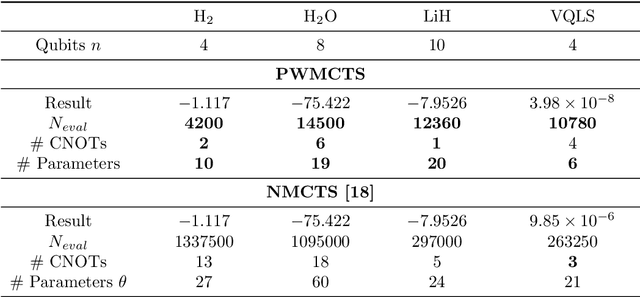

Quantum Circuit Design using a Progressive Widening Monte Carlo Tree Search

Feb 06, 2025

Abstract: The performance of Variational Quantum Algorithms (VQAs) strongly depends on the choice of the parameterized quantum circuit to optimize. One of the biggest challenges in VQAs is designing quantum circuits tailored to the particular problem and to the quantum hardware. This article proposes a gradient-free Monte Carlo Tree Search (MCTS) technique to automate the process of quantum circuit design. It introduces a novel formulation of the action space based on a sampling scheme and a progressive widening technique to explore the space dynamically. When testing our MCTS approach on the domain of random quantum circuits, MCTS approximates unstructured circuits under different values of stabilizer Rényi entropy. It turns out that MCTS manages to approximate the benchmark quantum states independently from their degree of nonstabilizerness. Next, our technique exhibits robustness across various application domains, including quantum chemistry and systems of linear equations. Compared to previous MCTS research, our technique reduces the number of quantum circuit evaluations by a factor of 10 to 100 while achieving equal or better results. In addition, the resulting quantum circuits have up to three times fewer CNOT gates.
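Progressive widening is commonly implemented as a cap on the number of children a node may hold, growing sublinearly with its visit count. The sketch below shows one common form of that rule, where a node with N visits may hold at most ceil(c * N^alpha) children. The function names and the constants `c` and `alpha` are assumptions for illustration, and the abstract does not state which variant or parameter values the paper uses.

```python
import math

def child_limit(num_visits, c=1.0, alpha=0.5):
    """Maximum number of children allowed after num_visits visits.

    c and alpha are hypothetical tuning constants.
    """
    return math.ceil(c * max(num_visits, 1) ** alpha)

def may_expand(num_children, num_visits, c=1.0, alpha=0.5):
    """True if the node may sample and add one more action as a child."""
    return num_children < child_limit(num_visits, c, alpha)

# The cap grows slowly, so new sampled actions are admitted only as a node
# accumulates visits.
for visits in (1, 4, 16, 64):
    print(visits, child_limit(visits))
```

When `may_expand` is false, a search would pick among the existing children with its usual selection rule instead of sampling a new action.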

Environment Descriptions for Usability and Generalisation in Reinforcement Learning

Dec 22, 2024

Abstract: The majority of current reinforcement learning (RL) research involves training and deploying agents in environments that are implemented by engineers in general-purpose programming languages and more advanced frameworks such as CUDA or JAX. This makes the application of RL to novel problems of interest inaccessible to small organisations or private individuals with insufficient engineering expertise. This position paper argues that, to enable more widespread adoption of RL, it is important for the research community to shift focus towards methodologies where environments are described in user-friendly domain-specific or natural languages. Aside from improving the usability of RL, such language-based environment descriptions may also provide valuable context and boost the ability of trained agents to generalise to unseen environments within the set of all environments that can be described in any language of choice.

Anytime Sequential Halving in Monte-Carlo Tree Search

Nov 11, 2024

Abstract: Monte-Carlo Tree Search (MCTS) typically uses multi-armed bandit (MAB) strategies designed to minimize cumulative regret, such as UCB1, as its selection strategy. However, in the root node of the search tree, it is more sensible to minimize simple regret. Previous work has proposed using Sequential Halving as the selection strategy in the root node, as, in theory, it performs better with respect to simple regret. However, Sequential Halving requires a budget of iterations to be predetermined, which is often impractical. This paper proposes an anytime version of the algorithm, which can be halted at any arbitrary time and still return a satisfactory result, while being designed such that it approximates the behavior of Sequential Halving. Empirical results in synthetic MAB problems and ten different board games demonstrate that the algorithm's performance is competitive with Sequential Halving and UCB1 (and their analogues in MCTS).
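For context, the sketch below shows the baseline (non-anytime) Sequential Halving on a multi-armed bandit, which makes clear why the budget has to be fixed up front: it is divided evenly across roughly log2(K) rounds before any arm is pulled. The function name, the `pull` callback, and the choice to keep cumulative statistics across rounds are assumptions for this example. The anytime variant proposed in the paper is not shown.

```python
import math
import random

def sequential_halving(pull, num_arms, budget):
    """Return the arm that survives repeated halving of the candidate set.

    pull(arm) is a hypothetical callback returning one sampled reward.
    """
    arms = list(range(num_arms))
    totals = [0.0] * num_arms
    counts = [0] * num_arms
    rounds = max(1, math.ceil(math.log2(num_arms)))
    for _ in range(rounds):
        if len(arms) == 1:
            break
        # Split this round's share of the fixed budget evenly over survivors.
        pulls_per_arm = max(1, budget // (len(arms) * rounds))
        for arm in arms:
            for _ in range(pulls_per_arm):
                totals[arm] += pull(arm)
                counts[arm] += 1
        # Keep the better half (rounded up), ranked by empirical mean.
        arms.sort(key=lambda a: totals[a] / counts[a], reverse=True)
        arms = arms[: math.ceil(len(arms) / 2)]
    return arms[0]

# Usage with Bernoulli arms whose success probabilities are made up.
random.seed(0)
probs = [0.2, 0.5, 0.8, 0.4]
best = sequential_halving(lambda a: float(random.random() < probs[a]), 4, 400)
print("selected arm:", best)
```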

Enhancements for Real-Time Monte-Carlo Tree Search in General Video Game Playing

Jul 03, 2024

Abstract: General Video Game Playing (GVGP) is a field of Artificial Intelligence where agents play a variety of real-time video games that are unknown in advance. This limits the use of domain-specific heuristics. Monte-Carlo Tree Search (MCTS) is a search technique for game playing that does not rely on domain-specific knowledge. This paper discusses eight enhancements for MCTS in GVGP: Progressive History, N-Gram Selection Technique, Tree Reuse, Breadth-First Tree Initialization, Loss Avoidance, Novelty-Based Pruning, Knowledge-Based Evaluations, and Deterministic Game Detection. Some of these are known from existing literature, and are either extended or introduced in the context of GVGP, and some are novel enhancements for MCTS. Most enhancements are shown to provide statistically significant increases in win percentages when applied individually. When combined, they increase the average win percentage over sixty different games from 31.0% to 48.4% in comparison to a vanilla MCTS implementation, approaching a level that is competitive with the best agents of the GVG-AI competition in 2015.

* Green Open Access version of conference paper published in 2016

Towards a Characterisation of Monte-Carlo Tree Search Performance in Different Games

Jun 13, 2024

Abstract: Many enhancements to Monte-Carlo Tree Search (MCTS) have been proposed over almost two decades of general game playing and other artificial intelligence research. However, our ability to characterise and understand which variants work well or poorly in which games is still lacking. This paper describes work on an initial dataset that we have built to make progress towards such an understanding: 268,386 plays among 61 different agents across 1494 distinct games. We describe a preliminary analysis and work on training predictive models on this dataset, as well as lessons learned and future plans for a new and improved version of the dataset.

Proof Number Based Monte-Carlo Tree Search

Mar 16, 2023

Abstract: This paper proposes a new game search algorithm, PN-MCTS, that combines Monte-Carlo Tree Search (MCTS) and Proof-Number Search (PNS). These two algorithms have been successfully applied for decision making in a range of domains. We define three areas where the additional knowledge provided by the proof and disproof numbers gathered in MCTS trees might be used: final move selection, solving subtrees, and the UCT formula. We test all possible combinations on different time settings, playing against vanilla UCT MCTS on several games: Lines of Action ($7 \times 7$ and $8 \times 8$), MiniShogi, Knightthrough, Awari, and Gomoku. Furthermore, we extend this new algorithm to properly address games with draws, like Awari, by adding an additional layer of PNS on top of the MCTS tree. The experiments show that PN-MCTS confidently outperforms MCTS in 5 out of 6 game domains (all except Gomoku), achieving win rates up to 96.2% for Lines of Action.
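The proof and disproof numbers mentioned above follow the standard Proof-Number Search backup rules: at an OR node the proof number is the minimum over children and the disproof number is the sum, and at an AND node the roles are swapped. The sketch below applies those rules to a small tree. The `Node` class and `backup` function are hypothetical names for this example, and the way PN-MCTS stores or uses these numbers inside an MCTS tree is not shown.

```python
from dataclasses import dataclass, field
from typing import List

INF = float("inf")

@dataclass
class Node:
    is_or: bool                      # True for OR nodes (prover to move)
    children: List["Node"] = field(default_factory=list)
    pn: float = 1                    # proof number; unexpanded leaves start at 1
    dn: float = 1                    # disproof number; unexpanded leaves start at 1

def backup(node):
    """Recompute proof and disproof numbers bottom-up; leaves keep their values."""
    if not node.children:
        return
    for child in node.children:
        backup(child)
    pns = [child.pn for child in node.children]
    dns = [child.dn for child in node.children]
    if node.is_or:
        node.pn, node.dn = min(pns), sum(dns)
    else:
        node.pn, node.dn = sum(pns), min(dns)

# OR root with two AND children, each holding two unexpanded leaves.
root = Node(True, [Node(False, [Node(True), Node(True)]) for _ in range(2)])
root.children[0].children[0].pn = 0      # mark one leaf as proven
root.children[0].children[0].dn = INF
backup(root)
print(root.pn, root.dn)
```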
