Jakub Kowalski

Regular Games -- an Automata-Based General Game Playing Language

Nov 13, 2025

Abstract: We propose a new General Game Playing (GGP) system called Regular Games (RG). The main goal of RG is to be both computationally efficient and convenient for game design. The system consists of several languages. The core component is a low-level language that defines the rules by a finite automaton. It is minimal with only a few mechanisms, which makes it easy for automatic processing (by agents, analysis, optimization, etc.). The language is universal for the class of all finite turn-based games with imperfect information. Higher-level languages are introduced for game design (by humans or Procedural Content Generation), which are eventually translated to a low-level language. RG generates faster forward models than the current state of the art, beating other GGP systems (Regular Boardgames, Ludii) in terms of efficiency. Additionally, RG's ecosystem includes an editor with LSP, automaton visualization, benchmarking tools, and a debugger of game description transformations.

Generalized Proof-Number Monte-Carlo Tree Search

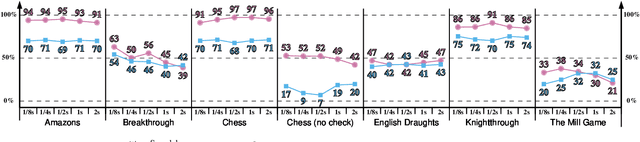

Jun 16, 2025

Abstract: This paper presents Generalized Proof-Number Monte-Carlo Tree Search: a generalization of recently proposed combinations of Proof-Number Search (PNS) with Monte-Carlo Tree Search (MCTS), which use (dis)proof numbers to bias UCB1-based Selection strategies towards parts of the search that are expected to be easily (dis)proven. We propose three core modifications of prior combinations of PNS with MCTS. First, we track proof numbers per player. This reduces code complexity in the sense that we no longer need disproof numbers, and generalizes the technique to be applicable to games with more than two players. Second, we propose and extensively evaluate different methods of using proof numbers to bias the selection strategy, achieving strong performance with strategies that are simpler to implement and compute. Third, we merge our technique with Score Bounded MCTS, enabling the algorithm to prove and leverage upper and lower bounds on scores - as opposed to only proving wins or not-wins. Experiments demonstrate substantial performance increases, reaching the range of 80% for 8 out of the 11 tested board games.

Towards Explaining Monte-Carlo Tree Search by Using Its Enhancements

Jun 16, 2025

Abstract: Typically, research on Explainable Artificial Intelligence (XAI) focuses on black-box models within the context of a general policy in a known, specific domain. This paper advocates for the need for knowledge-agnostic explainability applied to the subfield of XAI called Explainable Search, which focuses on explaining the choices made by intelligent search techniques. It proposes Monte-Carlo Tree Search (MCTS) enhancements as a solution to obtaining additional data and providing higher-quality explanations while remaining knowledge-free, and analyzes the most popular enhancements in terms of the specific types of explainability they introduce. So far, no other research has considered the explainability of MCTS enhancements. We present a proof-of-concept that demonstrates the advantages of utilizing enhancements.

Fast and Knowledge-Free Deep Learning for General Game Playing

Dec 21, 2023

Abstract: We develop a method of adapting the AlphaZero model to General Game Playing (GGP) that focuses on faster model generation and requires less knowledge to be extracted from the game rules. The dataset generation uses MCTS playing instead of self-play; only the value network is used, and attention layers replace the convolutional ones. This allows us to abandon any assumptions about the action space and board topology. We implement the method within the Regular Boardgames GGP system and show that we can build models outperforming the UCT baseline for most games efficiently.

Pathway: a fast and flexible unified stream data processing framework for analytical and Machine Learning applications

Jul 12, 2023

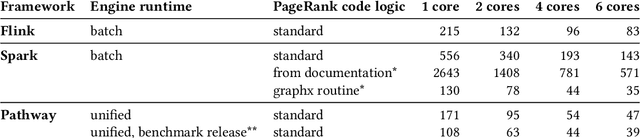

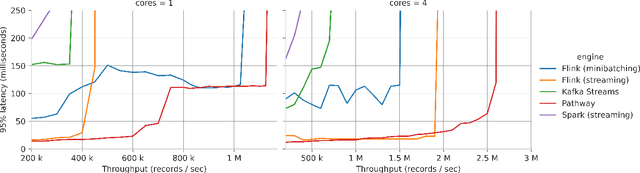

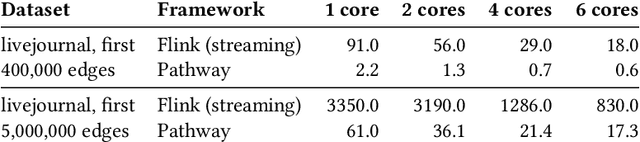

Abstract: We present Pathway, a new unified data processing framework that can run workloads on both bounded and unbounded data streams. The framework was created with the original motivation of resolving challenges faced when analyzing and processing data from the physical economy, including streams of data generated by IoT and enterprise systems. These required rapid reaction while calling for the application of advanced computation paradigms (machine-learning-powered analytics, contextual analysis, and other elements of complex event processing). Pathway is equipped with a Table API tailored for Python and Python/SQL workflows, and is powered by a distributed incremental dataflow in Rust. We describe the system and present benchmarking results which demonstrate its capabilities in both batch and streaming contexts, where it surpasses state-of-the-art industry frameworks. We also discuss streaming use cases handled by Pathway which cannot be easily resolved with state-of-the-art industry frameworks, such as streaming iterative graph algorithms (PageRank, etc.).

Summarizing Strategy Card Game AI Competition

May 19, 2023

Abstract: This paper concludes five years of AI competitions based on Legends of Code and Magic (LOCM), a small Collectible Card Game (CCG), designed with the goal of supporting research and algorithm development. The game was used in a number of events, including Community Contests on the CodinGame platform, and Strategy Card Game AI Competition at the IEEE Congress on Evolutionary Computation and IEEE Conference on Games. LOCM has been used in a number of publications related to areas such as game tree search algorithms, neural networks, evaluation functions, and CCG deckbuilding. We present the rules of the game, the history of organized competitions, and a listing of the participants and their approaches, as well as some general advice on organizing AI competitions for the research community. Although the COG 2022 edition was announced to be the last one, the game remains available and can be played using an online leaderboard arena.

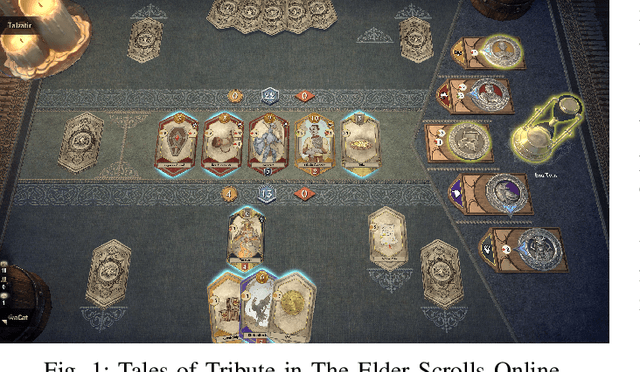

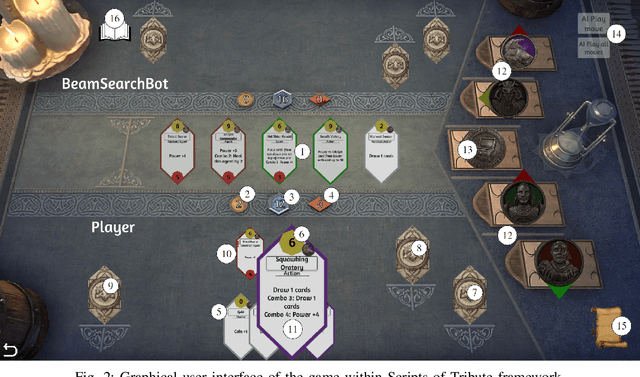

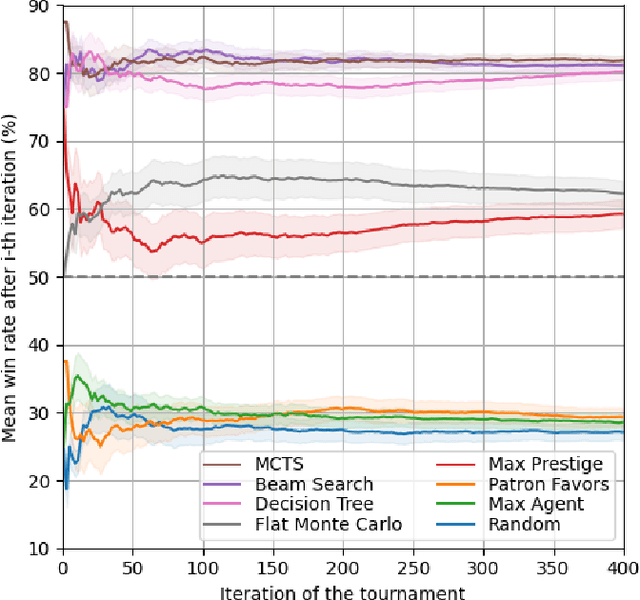

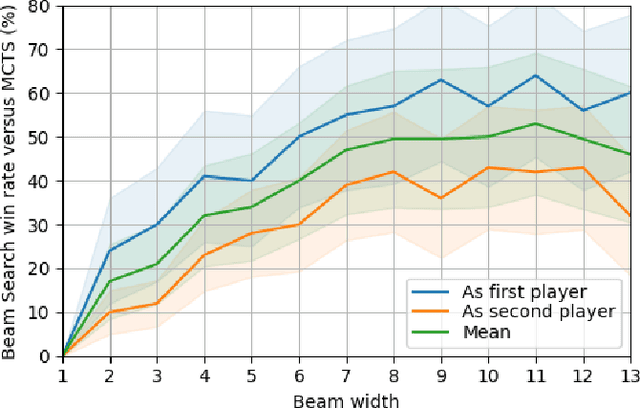

Introducing Tales of Tribute AI Competition

May 14, 2023

Abstract: This paper presents a new AI challenge, the Tales of Tribute AI Competition (TOTAIC), based on a two-player deck-building card game released with the High Isle chapter of The Elder Scrolls Online. Currently, there is no other AI competition covering the Collectible Card Game (CCG) genre, and there has never been one that targets a deck-building game. Thus, apart from the usual CCG-related obstacles to overcome, like randomness, hidden information, and a large branching factor, a successful approach additionally requires long-term planning and versatility. The game can be tackled with multiple approaches, including classic adversarial search, single-player planning, and Neural Networks-based algorithms. This paper introduces the competition framework, describes the rules of the game, and presents the results of a tournament between sample AI agents. The first edition of TOTAIC is hosted at the IEEE Conference on Games 2023.

Proof Number Based Monte-Carlo Tree Search

Mar 16, 2023

Abstract: This paper proposes a new game search algorithm, PN-MCTS, that combines Monte-Carlo Tree Search (MCTS) and Proof-Number Search (PNS). These two algorithms have been successfully applied for decision making in a range of domains. We define three areas where the additional knowledge provided by the proof and disproof numbers gathered in MCTS trees might be used: final move selection, solving subtrees, and the UCT formula. We test all possible combinations on different time settings, playing against vanilla UCT MCTS on several games: Lines of Action ($7 \times 7$ and $8 \times 8$), MiniShogi, Knightthrough, Awari, and Gomoku. Furthermore, we extend this new algorithm to properly address games with draws, like Awari, by adding an additional layer of PNS on top of the MCTS tree. The experiments show that PN-MCTS confidently outperforms MCTS in 5 out of 6 game domains (all except Gomoku), achieving win rates up to 96.2% for Lines of Action.
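
To make the third area (the UCT formula) more concrete, here is a minimal sketch of a selection step that adds a proof-number bonus to standard UCB1. The rank-based bonus, the weight `c_pn`, and every name in the code are assumptions made for illustration; the abstract does not state the formula used in the paper.

```python
import math
from dataclasses import dataclass


@dataclass
class Child:
    name: str
    visits: int
    total_reward: float  # sum of simulation results, each in [0, 1]
    proof_number: int    # smaller means expected to be easier to prove


def ucb1(child, parent_visits, c=math.sqrt(2)):
    """Standard UCB1 value; unvisited children are tried first."""
    if child.visits == 0:
        return math.inf
    mean = child.total_reward / child.visits
    return mean + c * math.sqrt(math.log(parent_visits) / child.visits)


def pn_bonus(children):
    """Hypothetical rank-based bonus in [0, 1]; the smallest proof number gets 1."""
    distinct = sorted({ch.proof_number for ch in children})
    if len(distinct) == 1:
        return {ch.name: 0.0 for ch in children}
    rank = {pn: i for i, pn in enumerate(distinct)}
    top = len(distinct) - 1
    return {ch.name: 1.0 - rank[ch.proof_number] / top for ch in children}


def select(children, c=math.sqrt(2), c_pn=0.5):
    """Pick the child maximizing UCB1 plus the weighted proof-number bonus."""
    parent_visits = sum(ch.visits for ch in children)
    bonus = pn_bonus(children)
    return max(
        children,
        key=lambda ch: ucb1(ch, parent_visits, c) + c_pn * bonus[ch.name],
    )


children = [
    Child("a", visits=10, total_reward=5.0, proof_number=5),
    Child("b", visits=10, total_reward=5.0, proof_number=1),
]
chosen = select(children)
```

In this toy example both children have identical UCB1 values, so the proof-number bonus alone decides the selection in favor of `b`; setting `c_pn=0` recovers plain UCB1.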

Developing a Successful Bomberman Agent

Mar 17, 2022

Abstract: In this paper, we study AI approaches to successfully play a 2-4 player, full-information Bomberman variant published on the CodinGame platform. We compare the behavior of three search algorithms: Monte Carlo Tree Search, Rolling Horizon Evolution, and Beam Search. We present various enhancements that improve the agents' strength, concerning search, opponent prediction, game state evaluation, and game engine encoding. Our top agent variant is based on a Beam Search with a low-level bit-based state representation and an evaluation function relying heavily on pruning unpromising states based on simulation-based estimation of survival. It reached the top position among the 2,300 AI agents submitted on the CodinGame arena.

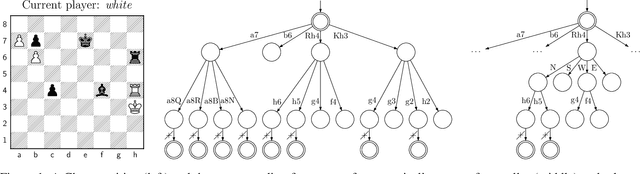

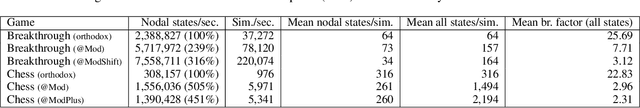

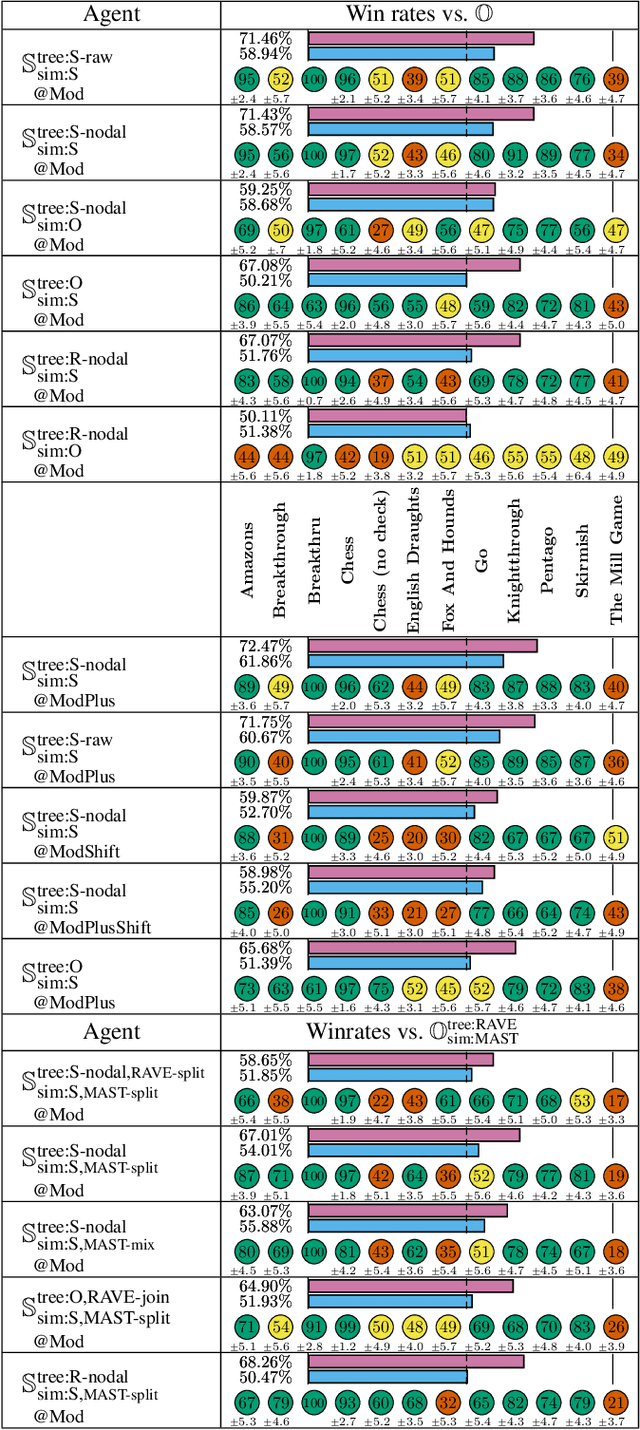

Split Moves for Monte-Carlo Tree Search

Dec 14, 2021

Abstract: In many games, moves consist of several decisions made by the player. These decisions can be viewed as separate moves, which is already a common practice in multi-action games for efficiency reasons. Such division of a player move into a sequence of simpler / lower level moves is called *splitting*. So far, split moves have been applied only in the aforementioned straightforward cases, and furthermore, there was almost no study revealing their impact on agents' playing strength. Taking the knowledge-free perspective, we aim to answer how to effectively use split moves within Monte-Carlo Tree Search (MCTS) and what the practical impact of split design on agents' strength is. This paper proposes a generalization of MCTS that works with arbitrarily split moves. We design several variations of the algorithm and try to measure the impact of split moves separately on efficiency, quality of MCTS, simulations, and action-based heuristics. The tests are carried out on a set of board games and performed using the Regular Boardgames General Game Playing formalism, where split strategies of different granularity can be automatically derived based on an abstract description of the game. The results give an overview of the behavior of agents using split design in different ways. We conclude that split design can be greatly beneficial for single- as well as multi-action games.
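
The idea of splitting can be illustrated with a toy sketch in which a board move "pick a piece, then pick its destination" is divided into two lower-level decisions. The move list, the square names, and the helper functions are hypothetical; this is not the derivation procedure used in Regular Boardgames.

```python
from collections import defaultdict

# Hypothetical joint moves: (piece_square, destination_square).
JOINT_MOVES = [
    ("a1", "a2"), ("a1", "b2"),
    ("c1", "c2"), ("c1", "b2"), ("c1", "d2"),
]


def split_moves(joint_moves):
    """Group joint moves by their first decision: piece -> list of destinations."""
    tree = defaultdict(list)
    for piece, dest in joint_moves:
        tree[piece].append(dest)
    return dict(tree)


def joint_from_split(tree):
    """Rebuild the joint moves by walking every two-step decision sequence."""
    return [(piece, dest) for piece, dests in tree.items() for dest in dests]


tree = split_moves(JOINT_MOVES)
first_level = len(tree)                              # choices for the first decision
second_level = max(len(d) for d in tree.values())    # worst-case second decision
```

With the split, a search node here branches into 2 piece choices followed by at most 3 destination choices, instead of 5 joint moves at once, while the set of reachable joint moves stays the same.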
