Radosław Miernik

Regular Games -- an Automata-Based General Game Playing Language

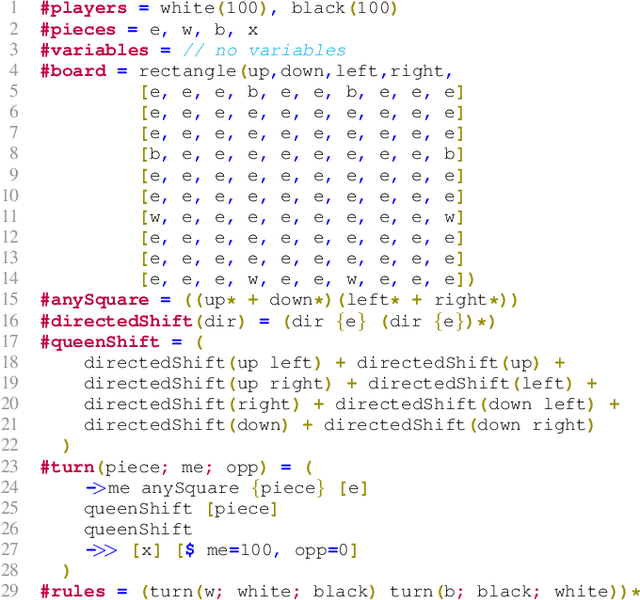

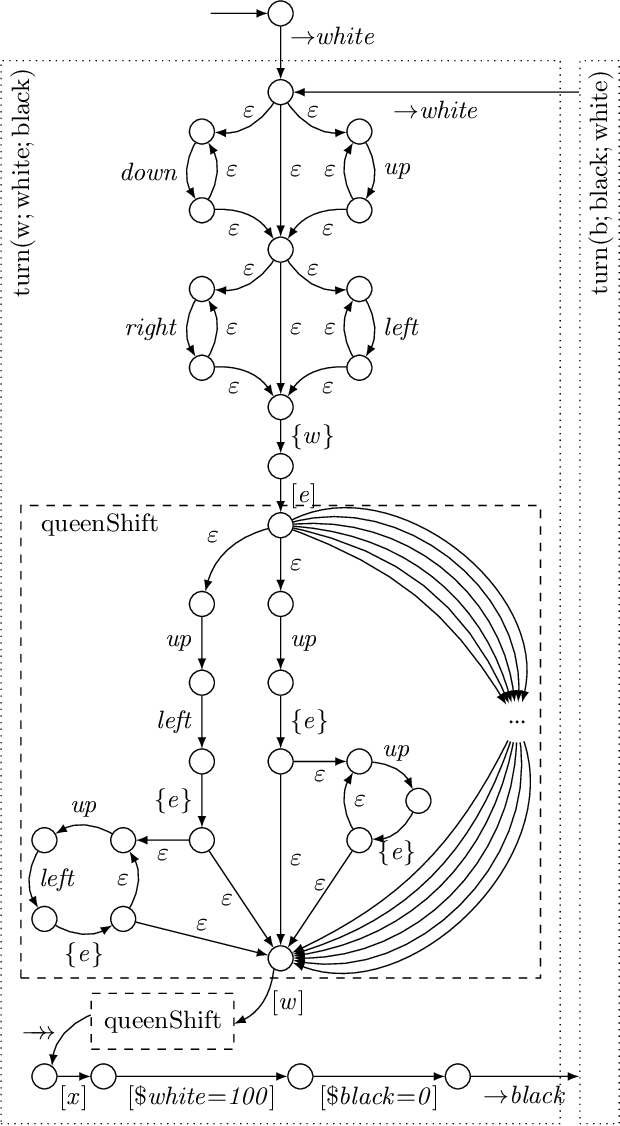

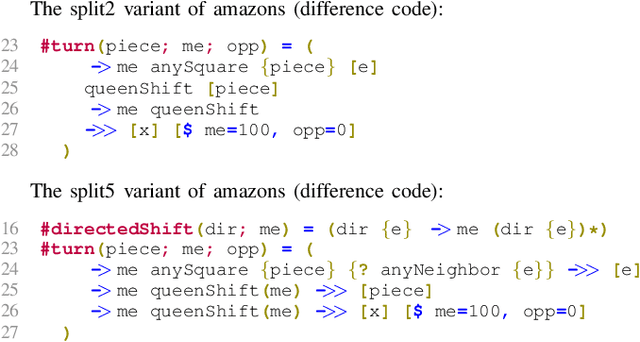

Nov 13, 2025

Abstract:We propose a new General Game Playing (GGP) system called Regular Games (RG). The main goal of RG is to be both computationally efficient and convenient for game design. The system consists of several languages. The core component is a low-level language that defines the rules by a finite automaton. It is minimal with only a few mechanisms, which makes it easy for automatic processing (by agents, analysis, optimization, etc.). The language is universal for the class of all finite turn-based games with imperfect information. Higher-level languages are introduced for game design (by humans or Procedural Content Generation), which are eventually translated to a low-level language. RG generates faster forward models than the current state of the art, beating other GGP systems (Regular Boardgames, Ludii) in terms of efficiency. Additionally, RG's ecosystem includes an editor with LSP, automaton visualization, benchmarking tools, and a debugger of game description transformations.

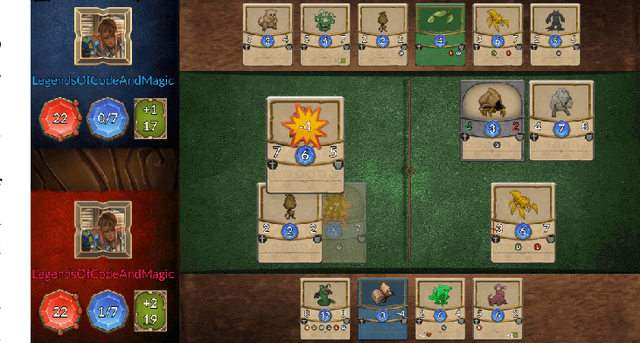

Summarizing Strategy Card Game AI Competition

May 19, 2023

Abstract:This paper concludes five years of AI competitions based on Legends of Code and Magic (LOCM), a small Collectible Card Game (CCG), designed with the goal of supporting research and algorithm development. The game was used in a number of events, including Community Contests on the CodinGame platform, and the Strategy Card Game AI Competition at the IEEE Congress on Evolutionary Computation and IEEE Conference on Games. LOCM has been used in a number of publications related to areas such as game tree search algorithms, neural networks, evaluation functions, and CCG deckbuilding. We present the rules of the game, the history of organized competitions, and a listing of the participants and their approaches, as well as some general advice on organizing AI competitions for the research community. Although the COG 2022 edition was announced to be the last one, the game remains available and can be played using an online leaderboard arena.

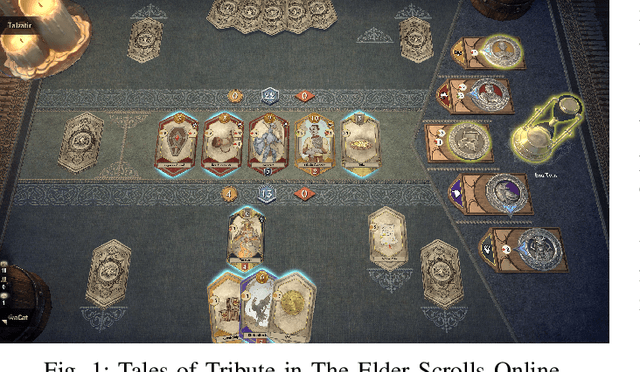

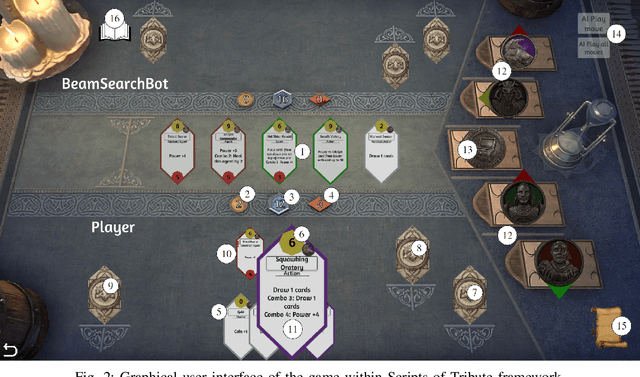

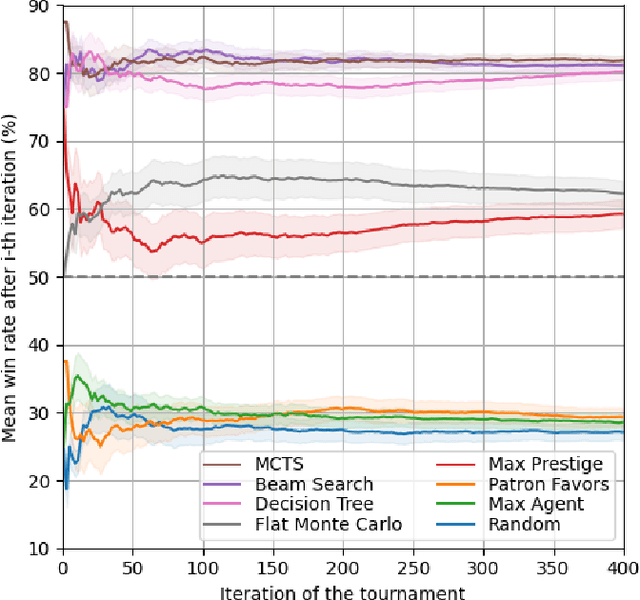

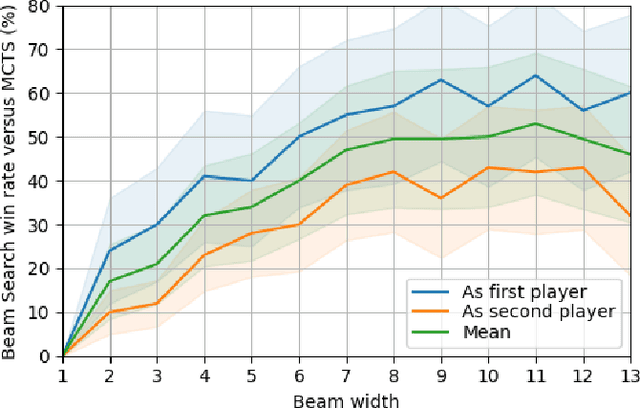

Introducing Tales of Tribute AI Competition

May 14, 2023

Abstract:This paper presents a new AI challenge, the Tales of Tribute AI Competition (TOTAIC), based on a two-player deck-building card game released with the High Isle chapter of The Elder Scrolls Online. Currently, there is no other AI competition covering the Collectible Card Games (CCG) genre, and there has never been one that targets a deck-building game. Thus, apart from the usual CCG-related obstacles to overcome, like randomness, hidden information, and a large branching factor, a successful approach additionally requires long-term planning and versatility. The game can be tackled with multiple approaches, including classic adversarial search, single-player planning, and Neural Networks-based algorithms. This paper introduces the competition framework, describes the rules of the game, and presents the results of a tournament between sample AI agents. The first edition of TOTAIC is hosted at the IEEE Conference on Games 2023.

Developing a Successful Bomberman Agent

Mar 17, 2022

Abstract:In this paper, we study AI approaches to successfully playing a 2-4 player, full-information Bomberman variant published on the CodinGame platform. We compare the behavior of three search algorithms: Monte Carlo Tree Search, Rolling Horizon Evolution, and Beam Search. We present various enhancements that improve the agents' strength, concerning search, opponent prediction, game state evaluation, and game engine encoding. Our top agent variant is based on Beam Search with a low-level bit-based state representation and an evaluation function that relies heavily on pruning unpromising states using a simulation-based estimate of survival. It reached first place among the 2,300 AI agents submitted to the CodinGame arena.

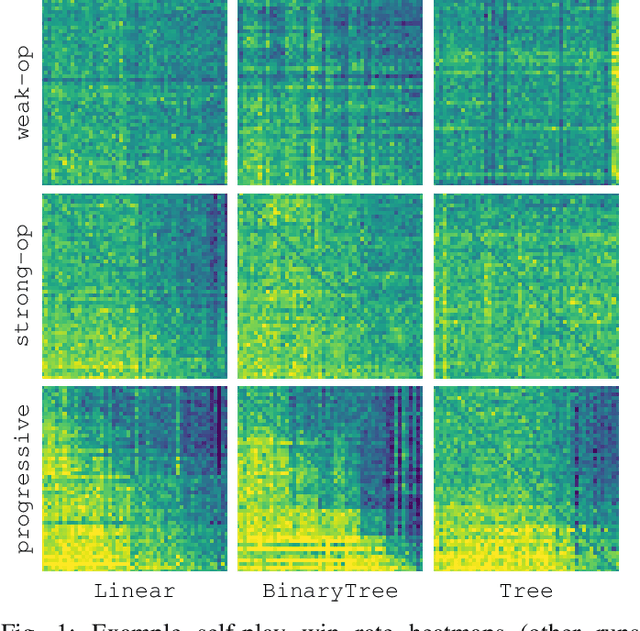

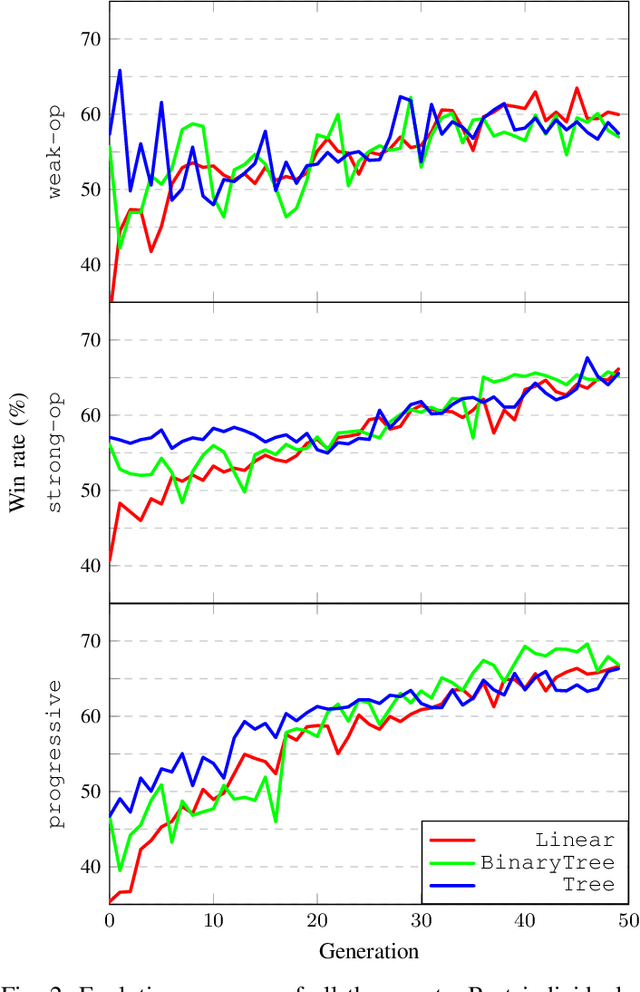

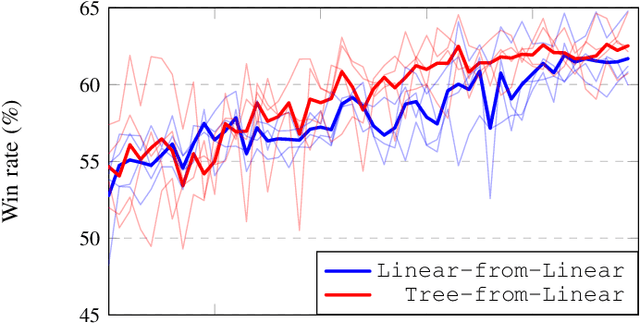

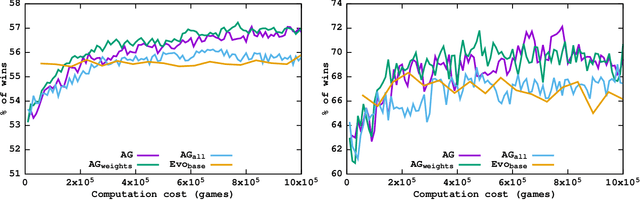

Evolving Evaluation Functions for Collectible Card Game AI

May 03, 2021

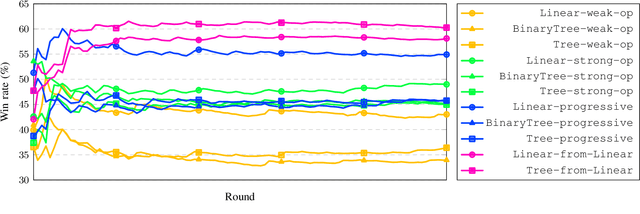

Abstract:In this work, we presented a study regarding two important aspects of evolving feature-based game evaluation functions: the choice of genome representation and the choice of opponent used to test the model. We compared three representations: one simpler and more limited, based on a vector of weights used in a linear combination of predefined game features, and two more complex, based on binary and n-ary trees. In addition, we investigated the influence of fitness defined as a simulation-based function that plays against a fixed weak opponent, a fixed strong opponent, or the best individual from the previous population. As a testbed, we chose the recently popular domain of digital collectible card games. We encoded our experiments in a programming game, Legends of Code and Magic, used in the Strategy Card Game AI Competition. However, as the problems stated are of a general nature, we are convinced that our observations are applicable in other domains as well.
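
The simplest representation mentioned above, a weight vector used in a linear combination of game features, can be sketched as follows. This is only an illustration: the function name and the three example features are hypothetical and are not taken from the paper.

```python
from typing import Sequence


def linear_evaluation(weights: Sequence[float], features: Sequence[float]) -> float:
    """Score a game state as the weighted sum of its feature values."""
    if len(weights) != len(features):
        raise ValueError("weights and features must have the same length")
    return sum(w * f for w, f in zip(weights, features))


# Hypothetical features: own health, opponent health, cards in hand.
example_weights = [1.0, -1.0, 0.5]
example_features = [30.0, 25.0, 4.0]
example_score = linear_evaluation(example_weights, example_features)
```

In an evolutionary setting, the weight vector would play the role of the genome, while the feature extraction stays fixed.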

Efficient Reasoning in Regular Boardgames

Jun 15, 2020

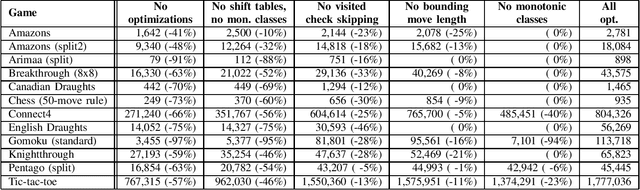

Abstract:We present the technical side of reasoning in the Regular Boardgames (RBG) language -- a universal General Game Playing (GGP) formalism for the class of finite deterministic games with perfect information, encoding rules in the form of regular expressions. RBG serves as a research tool that aims to aid in the development of generalized algorithms for knowledge inference, analysis, generation, learning, and playing games. In all these tasks, both generality and efficiency are important. In the first part, this paper describes optimizations used by the RBG compiler. The impact of these optimizations ranges from a 1.7-fold to as much as a 33-fold efficiency improvement when measuring the number of possible game playouts per second. Then, we perform an in-depth efficiency comparison with three other modern GGP systems (GDL, Ludii, Ai Ai). We also include our own highly optimized game-specific reasoners to provide a point of reference for the maximum speed. Our experiments show that RBG is currently the fastest among the abstract general game playing languages, and its efficiency can be competitive with common interface-based systems that rely on handcrafted game-specific implementations. Finally, we discuss some issues and methodology of computing benchmarks like this.

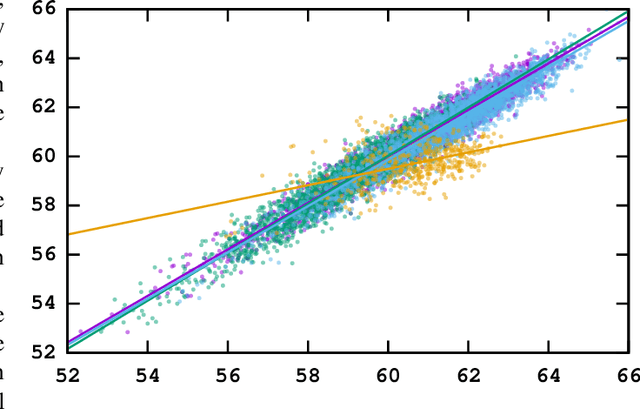

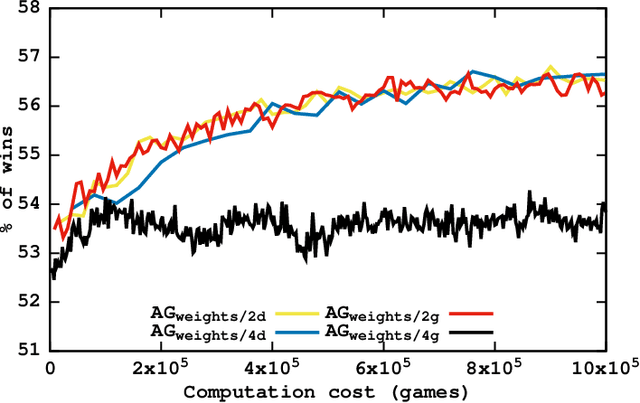

Evolutionary Approach to Collectible Card Game Arena Deckbuilding using Active Genes

Jan 05, 2020

Abstract:In this paper, we evolve a card-choice strategy for the arena mode of Legends of Code and Magic, a programming game inspired by popular collectible card games like Hearthstone or TES: Legends. In the arena game mode, before each match, a player has to construct a deck by choosing cards one by one from previously unknown options. Such a scenario is difficult from the optimization point of view: not only is the fitness function non-deterministic, but its value, even for a given problem instance, is impossible to calculate directly and can only be estimated with simulation-based approaches. We propose a variant of the evolutionary algorithm that uses the concept of an active gene to restrict the range of the operators to generation-specific subsequences of the genotype. Thus, we batched the learning process and constrained evolutionary updates only to the cards relevant for the particular draft, without forgetting the knowledge from the previous tests. We developed and tested various implementations of this idea, investigating their performance by taking into account the computational cost of each variant. The performed experiments show that some of the introduced active-genes algorithms tend to learn faster and produce statistically better draft policies than the compared methods.
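
The idea of restricting an operator to the active genes can be illustrated with a minimal sketch. It assumes, purely for illustration, that the genotype maps card identifiers to priority weights and that mutation is Gaussian; the function name and parameters are hypothetical, and the paper's actual operators may differ.

```python
import random
from typing import Dict, Iterable, Optional


def mutate_active_genes(
    genome: Dict[int, float],
    active_cards: Iterable[int],
    sigma: float = 0.1,
    rng: Optional[random.Random] = None,
) -> Dict[int, float]:
    """Return a copy of the genome where only active genes are perturbed."""
    rng = rng or random.Random()
    active = set(active_cards)
    return {
        card: weight + rng.gauss(0.0, sigma) if card in active else weight
        for card, weight in genome.items()
    }


# Cards 2 and 3 appeared in the current draft, so only they may change.
parent = {1: 0.5, 2: 0.2, 3: 0.9, 4: 0.1}
child = mutate_active_genes(parent, active_cards=[2, 3], rng=random.Random(0))
```

Genes for cards outside the current draft are copied unchanged, which is how this sketch reflects keeping the knowledge gathered in earlier tests.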
