Evolutionary Approach to Collectible Card Game Arena Deckbuilding using Active Genes

Jan 05, 2020

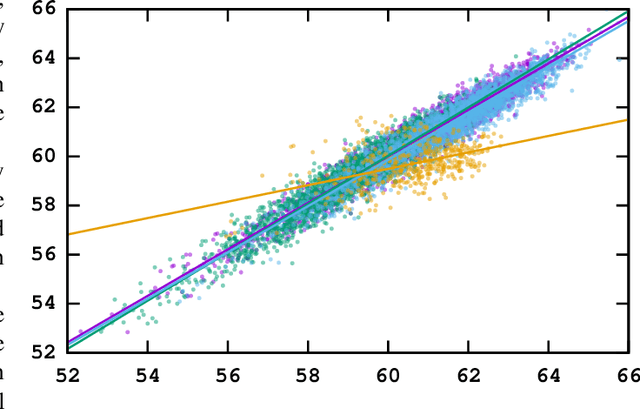

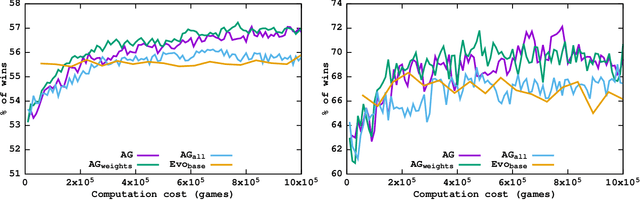

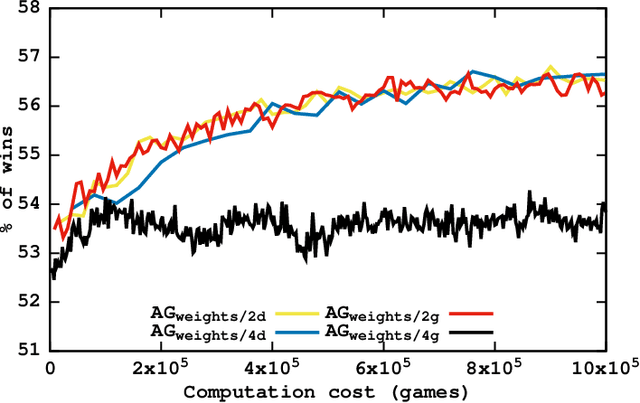

In this paper, we evolve a card-choice strategy for the arena mode of Legends of Code and Magic, a programming game inspired by popular collectible card games such as Hearthstone and TES: Legends. In the arena game mode, before each match, a player must construct a deck by choosing cards one by one from previously unknown options. Such a scenario is difficult from the optimization point of view: not only is the fitness function non-deterministic, but its value, even for a given problem instance, cannot be calculated directly and can only be estimated with simulation-based approaches. We propose a variant of the evolutionary algorithm that uses the concept of an active gene to restrict the range of the operators to generation-specific subsequences of the genotype. Thus, we batch the learning process and constrain evolutionary updates to the cards relevant for the particular draft, without forgetting the knowledge from previous tests. We developed and tested various implementations of this idea, investigating their performance while taking into account the computational cost of each variant. Our experiments show that some of the introduced active-genes algorithms tend to learn faster and produce statistically better draft policies than the compared methods.
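To make the active-gene idea more concrete, the following is a minimal sketch and not the authors' implementation. It assumes a genotype that holds one weight per card, a greedy policy that picks the highest-weighted card among the offered options, and a placeholder fitness that stands in for simulated matches. The pool size, draft length, number of options per pick, and all function names (`random_draft`, `active_genes`, `mutate_active`, `noisy_fitness`, `evolve`) are illustrative assumptions; the paper studies several variants whose details are not given in the abstract.

```python
import random

# Assumed sizes, chosen for illustration only.
NUM_CARDS = 160        # assumed card-pool size
DRAFT_TURNS = 30       # assumed number of picks per draft
OPTIONS_PER_TURN = 3   # assumed number of cards offered per pick

# Hidden per-card value used only by the placeholder fitness below.
_hidden = random.Random(0)
TRUE_VALUE = [_hidden.random() for _ in range(NUM_CARDS)]


def random_draft(rng):
    """Generate one draft: a list of turns, each offering a few card ids."""
    return [rng.sample(range(NUM_CARDS), OPTIONS_PER_TURN)
            for _ in range(DRAFT_TURNS)]


def active_genes(draft):
    """Indices of the genes (cards) that actually appear in this draft."""
    return sorted({card for turn in draft for card in turn})


def pick_deck(genotype, draft):
    """Greedy policy: each turn, take the offered card with the highest weight."""
    return [max(turn, key=lambda c: genotype[c]) for turn in draft]


def mutate_active(genotype, active, rng, rate=0.2, sigma=0.1):
    """Copy the genotype and perturb active genes only; others stay untouched."""
    child = list(genotype)
    for i in active:
        if rng.random() < rate:
            child[i] += rng.gauss(0.0, sigma)
    return child


def noisy_fitness(genotype, draft, rng):
    """Hypothetical stand-in for simulation-based evaluation:
    summed hidden card values of the drafted deck plus Gaussian noise."""
    deck = pick_deck(genotype, draft)
    return sum(TRUE_VALUE[c] for c in deck) + rng.gauss(0.0, 1.0)


def evolve(generations=20, pop_size=10, seed=1):
    """Each generation uses one fresh draft and mutates only its active genes."""
    rng = random.Random(seed)
    population = [[rng.random() for _ in range(NUM_CARDS)]
                  for _ in range(pop_size)]
    for _ in range(generations):
        draft = random_draft(rng)          # generation-specific draft
        active = active_genes(draft)       # genes the operators may touch
        ranked = sorted(population,
                        key=lambda g: noisy_fitness(g, draft, rng),
                        reverse=True)
        parents = ranked[: pop_size // 2]
        children = [mutate_active(rng.choice(parents), active, rng)
                    for _ in range(pop_size - len(parents))]
        population = parents + children
    return population
```

Because weights of cards absent from the current draft are copied unchanged, whatever was learned about them in earlier generations is carried forward, which is the property the abstract describes as not forgetting knowledge from previous tests.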
