Chiara F. Sironi

Enhancements for Real-Time Monte-Carlo Tree Search in General Video Game Playing

Jul 03, 2024

Abstract: General Video Game Playing (GVGP) is a field of Artificial Intelligence where agents play a variety of real-time video games that are unknown in advance. This limits the use of domain-specific heuristics. Monte-Carlo Tree Search (MCTS) is a search technique for game playing that does not rely on domain-specific knowledge. This paper discusses eight enhancements for MCTS in GVGP: Progressive History, N-Gram Selection Technique, Tree Reuse, Breadth-First Tree Initialization, Loss Avoidance, Novelty-Based Pruning, Knowledge-Based Evaluations, and Deterministic Game Detection. Some of these are known from existing literature and are either extended or introduced in the context of GVGP; others are novel enhancements for MCTS. Most enhancements are shown to provide statistically significant increases in win percentage when applied individually. When combined, they increase the average win percentage over sixty different games from 31.0% to 48.4% compared with a vanilla MCTS implementation, approaching a level that is competitive with the best agents of the GVG-AI competition in 2015.

* Green Open Access version of conference paper published in 2016
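The abstract measures its enhancements against "a vanilla MCTS implementation". As a rough illustration of what such a baseline usually looks like, here is a minimal sketch of the four MCTS phases (selection, expansion, simulation, backpropagation) with UCB1-style (UCT) selection. It is not the paper's agent and includes none of the eight enhancements; the toy game (`ACTIONS`, `DEPTH`, `TARGET`, `reward`) and the exploration constant `c` are hypothetical assumptions chosen only to keep the example self-contained.

```python
import math
import random
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

# Hypothetical toy game (assumption, not from the paper): pick one bit per turn.
ACTIONS = (0, 1)
DEPTH = 4                # the game ends after DEPTH moves
TARGET = (1, 0, 1, 1)    # hypothetical "ideal" move sequence


def reward(seq: Tuple[int, ...]) -> float:
    # Fraction of positions that match TARGET, in [0, 1].
    return sum(a == b for a, b in zip(seq, TARGET)) / DEPTH


@dataclass
class Node:
    state: Tuple[int, ...]                  # moves played so far
    parent: Optional["Node"] = None
    children: Dict[int, "Node"] = field(default_factory=dict)
    visits: int = 0
    total: float = 0.0                      # sum of backpropagated rewards


def uct(child: Node, parent_visits: int, c: float) -> float:
    # UCB1: mean reward (exploitation) plus an exploration bonus.
    if child.visits == 0:
        return math.inf
    exploit = child.total / child.visits
    explore = c * math.sqrt(math.log(parent_visits) / child.visits)
    return exploit + explore


def mcts(iterations: int, c: float = math.sqrt(2), seed: int = 0) -> Node:
    rng = random.Random(seed)
    root = Node(state=())
    for _ in range(iterations):
        node = root
        # 1. Selection: descend while the node is non-terminal and fully expanded.
        while len(node.state) < DEPTH and len(node.children) == len(ACTIONS):
            parent = node
            node = max(parent.children.values(),
                       key=lambda ch: uct(ch, parent.visits, c))
        # 2. Expansion: add one untried child of a non-terminal node.
        if len(node.state) < DEPTH:
            untried = [a for a in ACTIONS if a not in node.children]
            action = rng.choice(untried)
            child = Node(state=node.state + (action,), parent=node)
            node.children[action] = child
            node = child
        # 3. Simulation: uniformly random playout to the end of the game.
        seq = list(node.state)
        while len(seq) < DEPTH:
            seq.append(rng.choice(ACTIONS))
        r = reward(tuple(seq))
        # 4. Backpropagation: update statistics on the path back to the root.
        while node is not None:
            node.visits += 1
            node.total += r
            node = node.parent
    return root


def best_action(root: Node) -> int:
    # Play the most-visited root action ("robust child").
    return max(root.children, key=lambda a: root.children[a].visits)


if __name__ == "__main__":
    tree = mcts(2000)
    print(best_action(tree))
```

Under these assumptions, the most-visited root action should be `1`, the first bit of `TARGET`. In a real-time GVGP setting, the fixed iteration count would typically be replaced by a per-move time budget.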

Ludii - The Ludemic General Game System

May 16, 2019

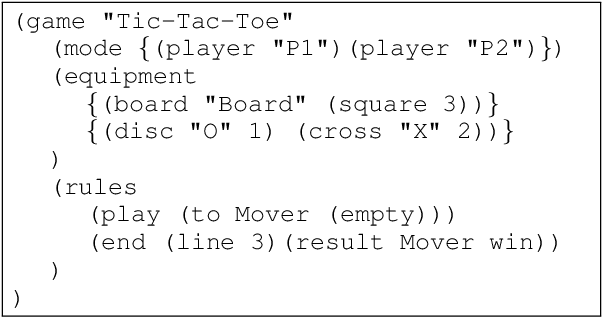

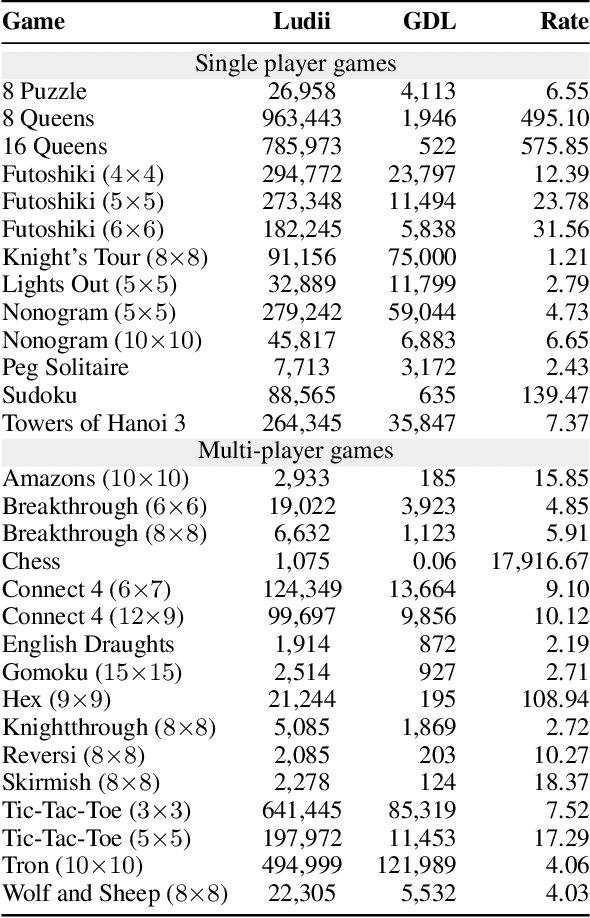

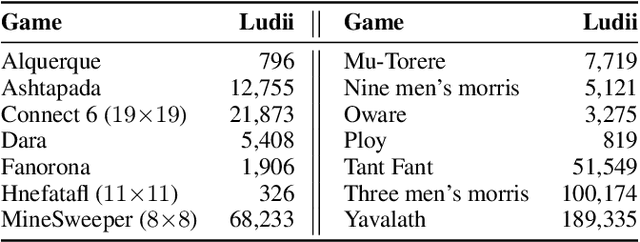

Abstract: While current General Game Playing (GGP) systems facilitate useful research in Artificial Intelligence (AI) for game playing, they are often somewhat specialized and computationally inefficient. In this paper, we describe an initial version of a "ludemic" general game system called Ludii, which has the potential to provide an efficient tool for AI researchers as well as game designers, historians, educators and practitioners in related fields. Ludii defines games as structures of ludemes, i.e. high-level, easily understandable game concepts. We establish the foundations of Ludii by outlining its main benefits: generality, extensibility, understandability and efficiency. Experimentally, Ludii outperforms one of the most efficient Game Description Language (GDL) reasoners, based on a propositional network, for all available games in the Tiltyard GGP repository.