Marina Monti

SHREC 2021: Track on Skeleton-based Hand Gesture Recognition in the Wild

Jun 21, 2021

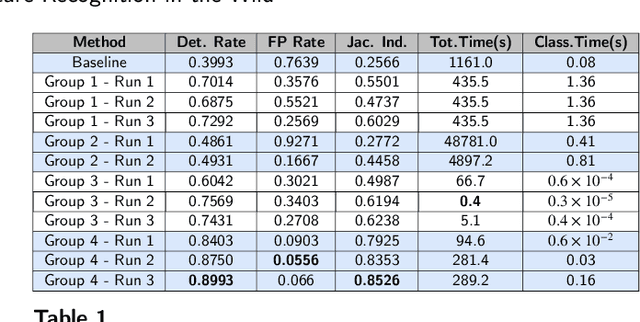

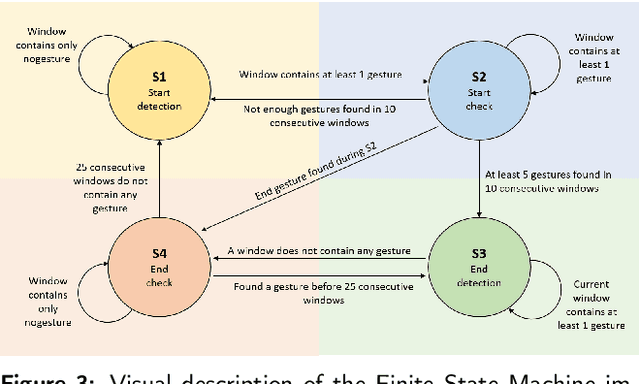

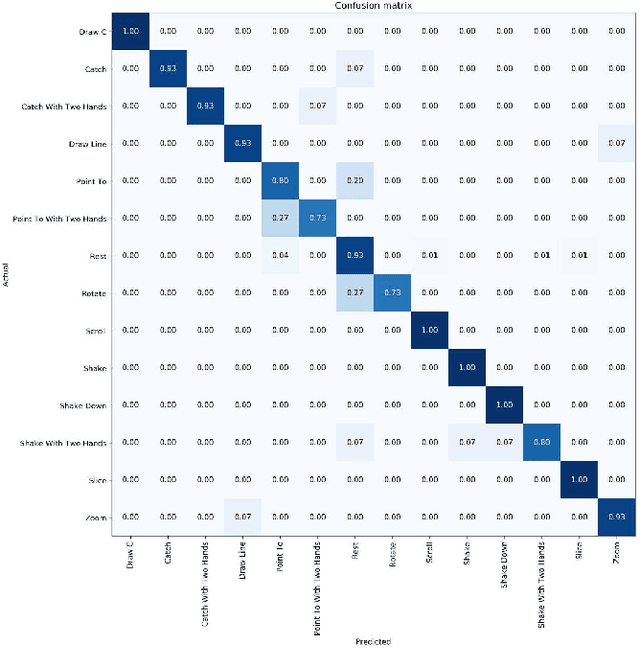

Abstract: Gesture recognition is a fundamental tool to enable novel interaction paradigms in a variety of application scenarios such as Mixed Reality environments, touchless public kiosks, entertainment systems, and more. Hand gestures can nowadays be recognized directly from the stream of hand skeletons estimated by software provided by low-cost trackers (Ultraleap) and MR headsets (Hololens, Oculus Quest) or by video processing software modules (e.g. Google Mediapipe). Despite recent advances in gesture and action recognition from skeletons, it is unclear how well current state-of-the-art techniques perform in a real-world scenario on a wide set of heterogeneous gestures, as many benchmarks do not test online recognition and use limited dictionaries. This motivated the proposal of the SHREC 2021: Track on Skeleton-based Hand Gesture Recognition in the Wild. For this contest, we created a novel dataset of heterogeneous gestures of different types and durations. These gestures have to be found inside sequences in an online recognition scenario. This paper presents the results of the contest, showing the performance of the techniques proposed by four research groups on this challenging task, compared with a simple baseline method.

3D dynamic hand gestures recognition using the Leap Motion sensor and convolutional neural networks

Mar 11, 2020

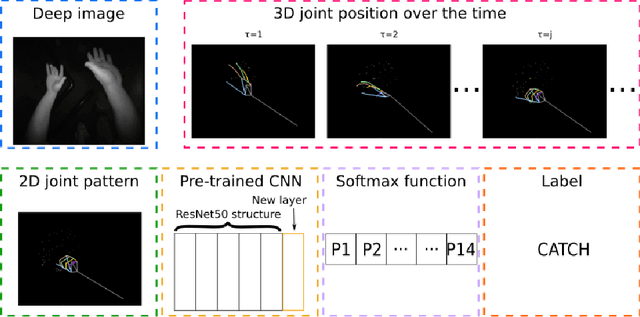

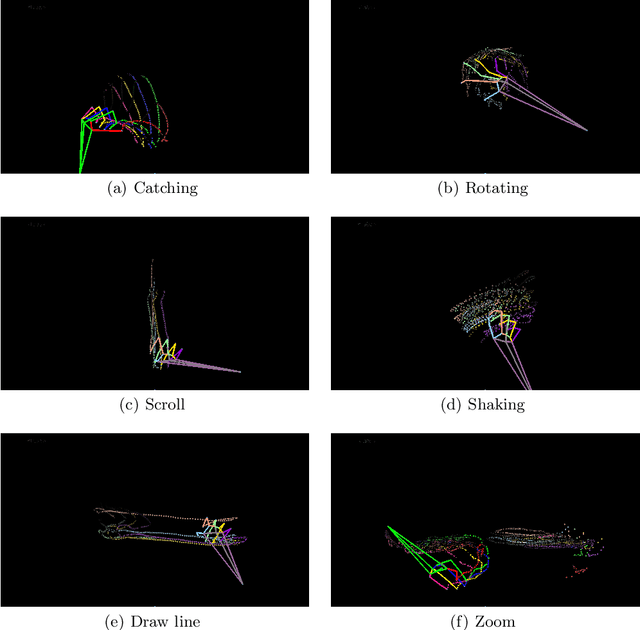

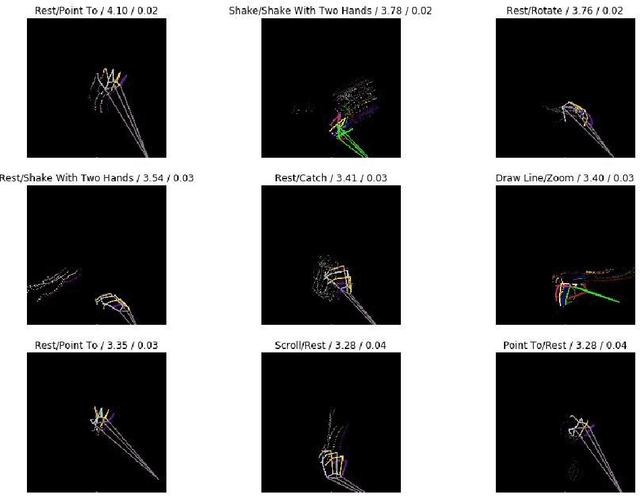

Abstract: Defining methods for the automatic understanding of gestures is of paramount importance in many application contexts, including Virtual Reality applications, for creating more natural and easy-to-use human-computer interaction methods. In this paper, we present a method for the recognition of a set of non-static gestures acquired through the Leap Motion sensor. The acquired gesture information is converted into color images, where the variation of hand joint positions during the gesture is projected onto a plane and temporal information is represented by the color intensity of the projected points. The gestures are classified using a deep Convolutional Neural Network (CNN). A modified version of the popular ResNet-50 architecture is adopted, obtained by removing the last fully connected layer and adding a new layer with as many neurons as the considered gesture classes. The method has been successfully applied to the existing reference dataset, and preliminary tests have already been performed on the real-time recognition of dynamic gestures performed by users.
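
The trajectory-to-image step described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function name `render_gesture`, the choice of the XY plane, the square image size, the single intensity channel, and the linear time-to-intensity ramp are all assumptions made for illustration. It expects at least one frame containing at least one joint.

```python
def render_gesture(frames, size=32):
    """Project a gesture onto a plane and encode time as pixel intensity.

    frames: list of frames, each a list of (x, y, z) joint positions.
    Returns a size x size grid of intensities in [0, 1].
    Hypothetical helper: plane, size, and intensity ramp are assumptions.
    """
    # Project onto the XY plane by dropping z (assumed choice of plane).
    xs = [p[0] for frame in frames for p in frame]
    ys = [p[1] for frame in frames for p in frame]
    min_x, min_y = min(xs), min(ys)

    # One scale for both axes so the trajectory keeps its aspect ratio.
    span = max(max(xs) - min_x, max(ys) - min_y) or 1.0

    image = [[0.0] * size for _ in range(size)]
    n = len(frames)
    for t, frame in enumerate(frames):
        # Later frames are drawn brighter: intensity grows linearly with time.
        intensity = (t + 1) / n
        for x, y, _z in frame:
            col = min(size - 1, int((x - min_x) / span * (size - 1) + 0.5))
            row = min(size - 1, int((y - min_y) / span * (size - 1) + 0.5))
            # Keep the brightest (most recent) value written to each pixel.
            image[row][col] = max(image[row][col], intensity)
    return image
```

An image produced this way would then be passed to the CNN classifier. Mapping time to a full color scale rather than a single intensity channel is an equally plausible reading of the abstract.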
