Maria Bannert

Taking the Next Step with Generative Artificial Intelligence: The Transformative Role of Multimodal Large Language Models in Science Education

Jan 01, 2024

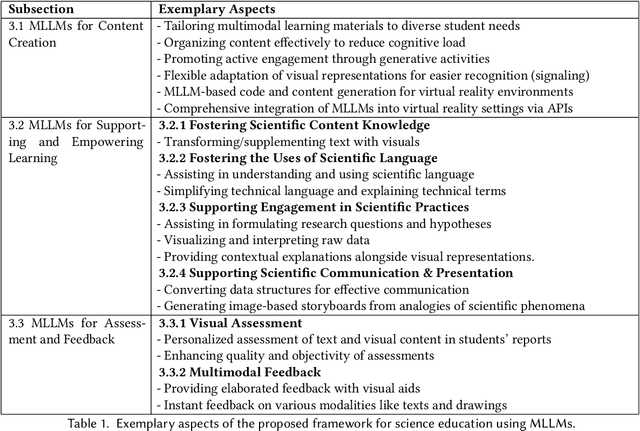

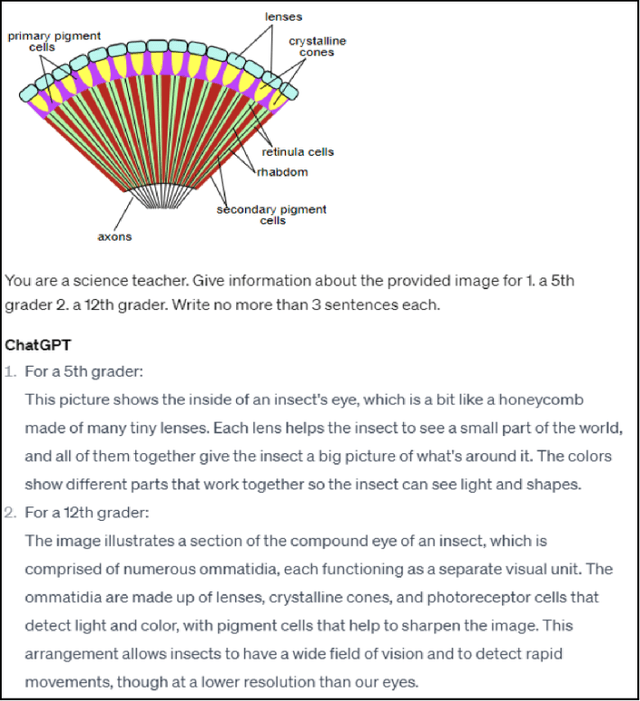

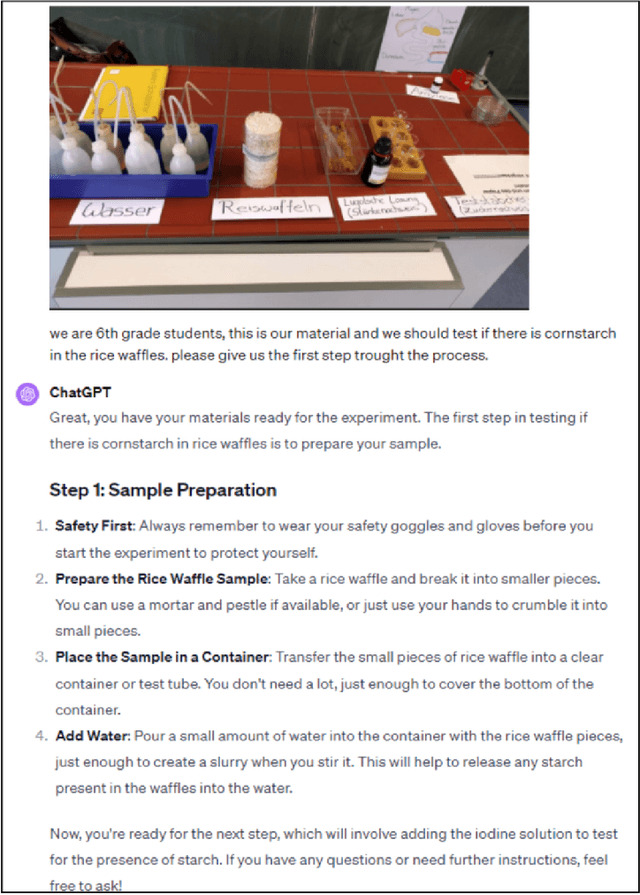

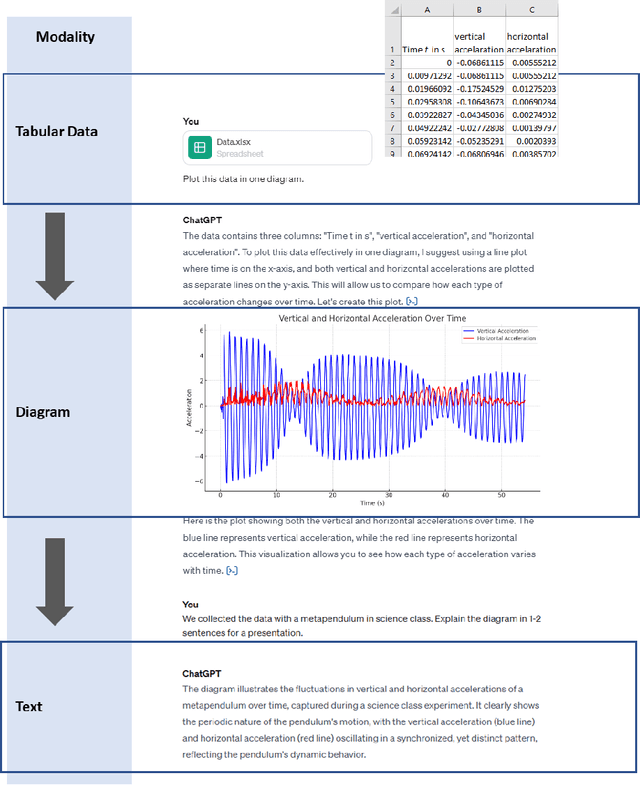

Abstract:The integration of Artificial Intelligence (AI), particularly Large Language Model (LLM)-based systems, in education has shown promise in enhancing teaching and learning experiences. However, the advent of Multimodal Large Language Models (MLLMs) like GPT-4 with vision (GPT-4V), capable of processing multimodal data including text, sound, and visual inputs, opens a new era of enriched, personalized, and interactive learning landscapes in education. Grounded in theory of multimedia learning, this paper explores the transformative role of MLLMs in central aspects of science education by presenting exemplary innovative learning scenarios. Possible applications for MLLMs could range from content creation to tailored support for learning, fostering competencies in scientific practices, and providing assessment and feedback. These scenarios are not limited to text-based and uni-modal formats but can be multimodal, increasing thus personalization, accessibility, and potential learning effectiveness. Besides many opportunities, challenges such as data protection and ethical considerations become more salient, calling for robust frameworks to ensure responsible integration. This paper underscores the necessity for a balanced approach in implementing MLLMs, where the technology complements rather than supplants the educator's role, ensuring thus an effective and ethical use of AI in science education. It calls for further research to explore the nuanced implications of MLLMs on the evolving role of educators and to extend the discourse beyond science education to other disciplines. Through the exploration of potentials, challenges, and future implications, we aim to contribute to a preliminary understanding of the transformative trajectory of MLLMs in science education and beyond.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge