Margaret Burnett

"Over-the-Hood" AI Inclusivity Bugs and How 3 AI Product Teams Found and Fixed Them

Oct 21, 2025

Abstract: While much research has shown the presence of AI's "under-the-hood" biases (e.g., algorithmic, training data, etc.), what about "over-the-hood" inclusivity biases: barriers in user-facing AI products that disproportionately exclude users with certain problem-solving approaches? Recent research has begun to report the existence of such biases -- but what do they look like, how prevalent are they, and how can developers find and fix them? To find out, we conducted a field study with 3 AI product teams, to investigate what kinds of AI inclusivity bugs exist uniquely in user-facing AI products, and whether/how AI product teams might harness an existing (non-AI-oriented) inclusive design method to find and fix them. The teams' work resulted in identifying 6 types of AI inclusivity bugs arising 83 times, fixes covering 47 of these bug instances, and a new variation of the GenderMag inclusive design method, GenderMag-for-AI, that is especially effective at detecting certain kinds of AI inclusivity bugs.

Intersectionality Goes Analytical: Taming Combinatorial Explosion Through Type Abstraction

Jan 25, 2022

Abstract: HCI researchers' and practitioners' awareness of intersectionality has been expanding, producing knowledge, recommendations, and prototypes for supporting intersectional populations. However, doing intersectional HCI work is uniquely expensive: it leads to a combinatorial explosion of empirical work (expense 1), and little of the work on one intersectional population can be leveraged to serve another (expense 2). In this paper, we explain how representations employed by certain analytical design methods correspond to type abstractions, and use that correspondence to identify a (de)compositional model in which a population's diverse identity properties can be joined and split. We formally prove the model's correctness, and show how it enables HCI designers to harness existing analytical HCI methods for use on new intersectional populations of interest. We illustrate through four design use-cases, how the model can reduce the amount of expense 1 and enable designers to leverage prior work to new intersectional populations, addressing expense 2.

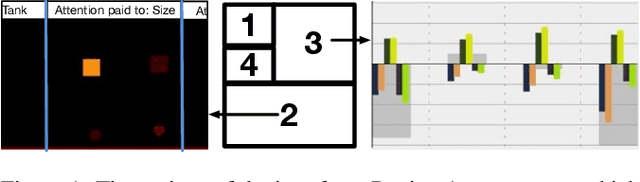

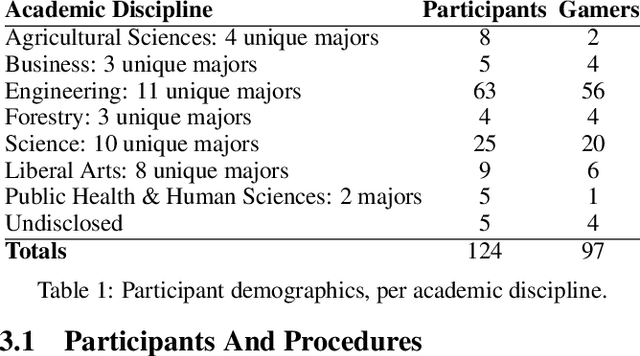

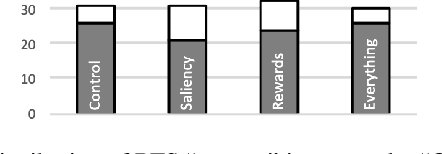

Explaining Reinforcement Learning to Mere Mortals: An Empirical Study

Mar 22, 2019

Abstract: We present a user study to investigate the impact of explanations on non-experts' understanding of reinforcement learning (RL) agents. We investigate both a common RL visualization, saliency maps (the focus of attention), and a more recent explanation type, reward-decomposition bars (predictions of future types of rewards). We designed a 124 participant, four-treatment experiment to compare participants' mental models of an RL agent in a simple Real-Time Strategy (RTS) game. Our results show that the combination of both saliency and reward bars were needed to achieve a statistically significant improvement in mental model score over the control. In addition, our qualitative analysis of the data reveals a number of effects for further study.
