Marcus De Carvalho

Reinforced Continual Learning for Graphs

Sep 04, 2022

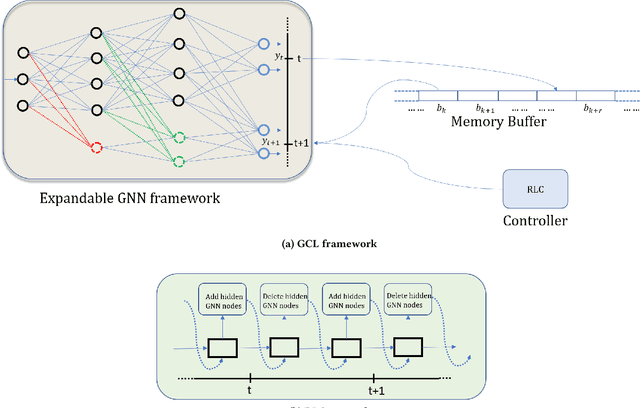

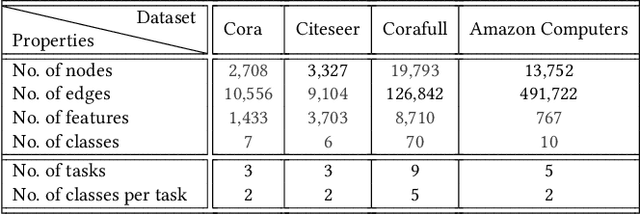

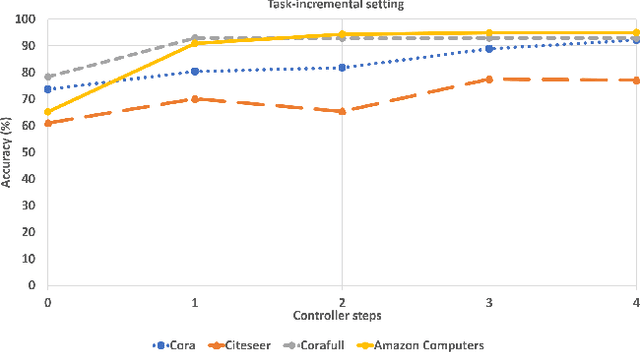

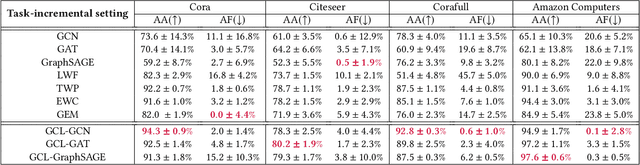

Abstract: Graph Neural Networks (GNNs) have become the backbone for a myriad of tasks pertaining to graphs and similar topological data structures. While many works address node and graph classification/regression, they mostly deal with a single task. Continual learning on graphs is largely unexplored, and existing graph continual learning approaches are limited to task-incremental learning scenarios. This paper proposes a graph continual learning strategy that combines architecture-based and memory-based approaches. The structural learning strategy is driven by reinforcement learning: a controller network is trained to determine an optimal number of nodes to add to or prune from the base network when new tasks are observed, thus ensuring sufficient network capacity. The parameter learning strategy builds on the Dark Experience Replay method to cope with catastrophic forgetting. Our approach is numerically validated on several graph continual learning benchmark problems in both task-incremental and class-incremental learning settings. Compared to recently published works, our approach demonstrates improved performance in both settings. The implementation code can be found at https://github.com/codexhammer/gcl.
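
As a rough illustration of the replay idea the abstract refers to, the sketch below shows a Dark Experience Replay style loss in plain Python: cross-entropy on the current batch plus a penalty that keeps the model's logits on buffered samples close to the logits recorded when those samples were stored. It is not taken from the paper's repository. The names `ReservoirBuffer`, `der_loss`, `alpha` and `replay_size` are hypothetical, the model is assumed to be any callable returning a list of logits, and the penalty is averaged per logit here as a simplifying assumption.

```python
import math
import random


class ReservoirBuffer:
    """Fixed-size memory filled by reservoir sampling (hypothetical helper).

    Each entry keeps an input together with the logits the model produced
    for it at insertion time.
    """

    def __init__(self, capacity, seed=0):
        self.capacity = capacity
        self.items = []
        self.seen = 0
        self.rng = random.Random(seed)

    def add(self, x, logits):
        self.seen += 1
        if len(self.items) < self.capacity:
            self.items.append((x, list(logits)))
        else:
            # Replace a stored entry with probability capacity / seen.
            j = self.rng.randrange(self.seen)
            if j < self.capacity:
                self.items[j] = (x, list(logits))

    def sample(self, k):
        return self.rng.sample(self.items, min(k, len(self.items)))


def cross_entropy(logits, label):
    """Numerically stable softmax cross-entropy for one sample."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(v - m) for v in logits))
    return log_z - logits[label]


def der_loss(model, batch, buffer, alpha=0.5, replay_size=2):
    """Current-task cross-entropy plus alpha times a logit-matching penalty.

    model: callable mapping an input to a list of logits (assumption).
    batch: list of (input, label) pairs from the current task.
    """
    ce = sum(cross_entropy(model(x), y) for x, y in batch) / len(batch)
    replay = buffer.sample(replay_size)
    if not replay:
        return ce
    penalty = 0.0
    for x, old_logits in replay:
        new_logits = model(x)
        penalty += sum((a - b) ** 2 for a, b in zip(new_logits, old_logits)) / len(old_logits)
    return ce + alpha * penalty / len(replay)
```

In a real training loop the loss would be differentiated with respect to the model parameters; the sketch only shows how the two terms are combined.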

Autonomous Cross Domain Adaptation under Extreme Label Scarcity

Sep 04, 2022

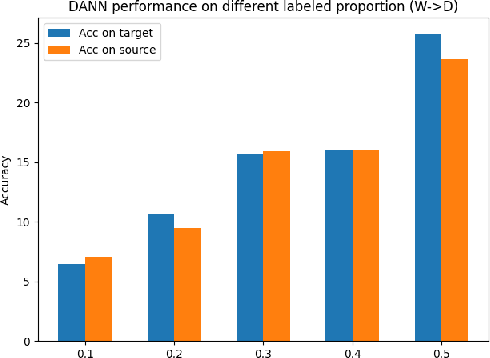

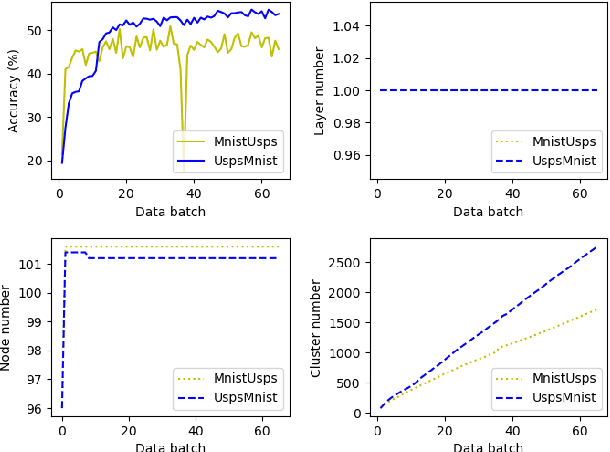

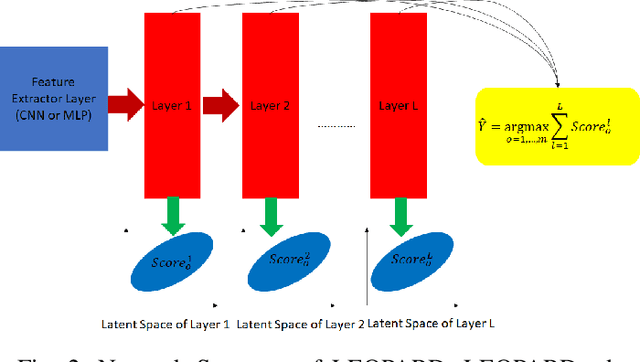

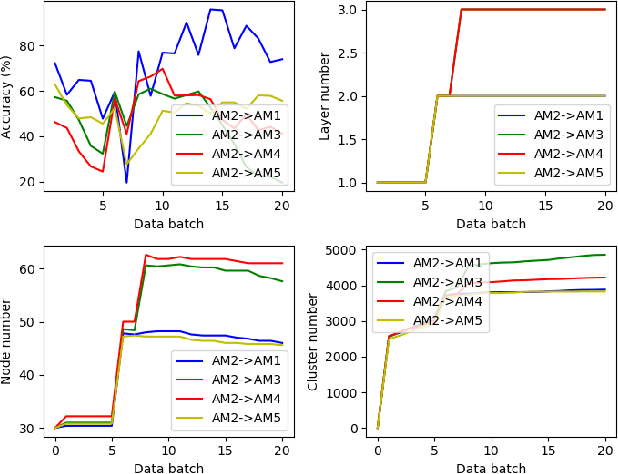

Abstract: Cross-domain multistream classification is a challenging problem that calls for fast domain adaptation to handle different but related streams in never-ending and rapidly changing environments. Although existing multistream classifiers assume no labelled samples in the target stream, they still incur expensive labelling costs because they require fully labelled samples from the source stream. This paper addresses the problem of extreme label shortage in cross-domain multistream classification, where only very few labelled samples of the source stream are provided before the process runs. Our solution, Learning Streaming Process from Partial Ground Truth (LEOPARD), is built upon a flexible deep clustering network whose hidden nodes, layers and clusters are added and removed dynamically with respect to varying data distributions. The deep clustering strategy is underpinned by a simultaneous feature learning and clustering technique that leads to clustering-friendly latent spaces. The domain adaptation strategy relies on adversarial domain adaptation, where a feature extractor is trained to fool a domain classifier that distinguishes source from target streams. Our numerical study demonstrates the efficacy of LEOPARD, which delivers improved performance compared to prominent algorithms in 15 of 24 cases. The source code of LEOPARD is shared at https://github.com/wengweng001/LEOPARD.git to enable further study.
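
To make the "fool the domain classifier" idea concrete, here is a minimal scalar sketch of one adversarial update, assuming the common gradient-reversal formulation. The abstract does not say which adversarial scheme LEOPARD uses, so this is an illustrative assumption rather than the authors' method. The function `adversarial_step` and the parameters `w`, `v`, `lr` and `lam` are hypothetical: `w` is a one-parameter feature extractor, `v` a one-parameter domain classifier, and `domain` is 1 for a source sample and 0 for a target sample.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def adversarial_step(w, v, x, domain, lr=0.1, lam=1.0):
    """One adversarial update on a single sample (toy scalar model).

    The domain classifier descends the domain loss, while the feature
    extractor ascends it (reversed gradient) so that its features become
    harder to attribute to a domain.
    """
    feature = w * x
    p = sigmoid(v * feature)  # predicted probability of "source"
    loss = -(domain * math.log(p) + (1 - domain) * math.log(1.0 - p))

    dz = p - domain           # gradient of the loss w.r.t. the pre-sigmoid logit
    grad_v = dz * feature     # gradient w.r.t. the classifier weight
    grad_w = dz * v * x       # gradient w.r.t. the extractor weight

    v_new = v - lr * grad_v         # classifier: ordinary gradient descent
    w_new = w + lr * lam * grad_w   # extractor: reversed gradient, scaled by lam
    return w_new, v_new, loss
```

The factor `lam` trades off how strongly the extractor is pushed toward domain-invariant features; in a full model this term would be combined with the clustering and classification objectives.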
