Marcos A. M. Vieira

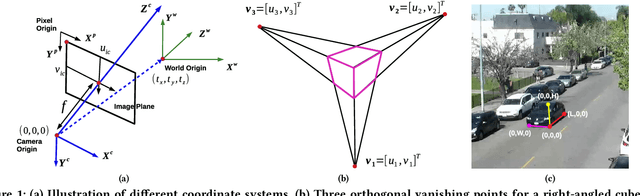

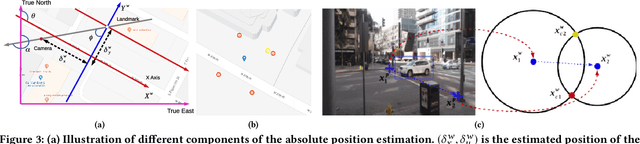

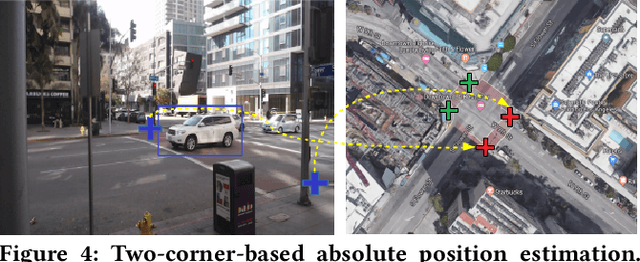

On Localizing a Camera from a Single Image

Mar 24, 2020

Abstract: Public cameras often have limited metadata describing their attributes. A key missing attribute is the camera's precise location, which would make it possible to pinpoint the location of events seen by the camera. In this paper, we explore the following question: under what conditions is it possible to estimate the location of a camera from a single image taken by that camera? We show that, using a judicious combination of projective geometry, neural networks, and crowd-sourced annotations from human workers, it is possible to position 95% of the images in our test data set to within 12 m. This performance is two orders of magnitude better than PoseNet, a state-of-the-art neural network that, when trained on a large corpus of images in an area, can estimate the pose of a single image. Finally, we show that the camera's inferred position and intrinsic parameters can help design a number of virtual sensors, all of which are reasonably accurate.
