Marcin Kowalczyk

High Throughput Event Filtering: The Interpolation-based DIF Algorithm Hardware Architecture

Jun 06, 2025

Abstract: In recent years, there has been rapid development in the field of event vision. It manifests itself both on the technical side, as better and better event sensors are available, and on the algorithmic side, as more and more applications of this technology are proposed and scientific papers are published. However, the data stream from these sensors typically contains a significant amount of noise, which varies depending on factors such as the degree of illumination in the observed scene or the temperature of the sensor. We propose a hardware architecture of the Distance-based Interpolation with Frequency Weights (DIF) filter and implement it on an FPGA chip. To evaluate the algorithm and compare it with other solutions, we have prepared a new high-resolution event dataset, which we are also releasing to the community. Our architecture achieved a throughput of 403.39 million events per second (MEPS) for a sensor resolution of 1280 x 720 and 428.45 MEPS for a resolution of 640 x 480. The average values of the Area Under the Receiver Operating Characteristic (AUROC) index ranged from 0.844 to 0.999, depending on the dataset, which is comparable to the state-of-the-art filtering solutions, but with much higher throughput and better operation over a wide range of noise levels.

Learning from Noise: Enhancing DNNs for Event-Based Vision through Controlled Noise Injection

Jun 04, 2025

Abstract: Event-based sensors offer significant advantages over traditional frame-based cameras, especially in scenarios involving rapid motion or challenging lighting conditions. However, event data frequently suffers from considerable noise, negatively impacting the performance and robustness of deep learning models. Traditionally, this problem has been addressed by applying filtering algorithms to the event stream, but this may also remove some relevant data. In this paper, we propose a novel noise-injection training methodology designed to enhance the robustness of neural networks against varying levels of event noise. Our approach introduces controlled noise directly into the training data, enabling models to learn noise-resilient representations. We have conducted extensive evaluations of the proposed method using multiple benchmark datasets (N-Caltech101, N-Cars, and Mini N-ImageNet) and various network architectures, including Convolutional Neural Networks, Vision Transformers, Spiking Neural Networks, and Graph Convolutional Networks. Experimental results show that our noise-injection training strategy achieves stable performance over a range of noise intensities, consistently outperforms event-filtering techniques, and achieves the highest average classification accuracy, making it a viable alternative to traditional event-data filtering methods in an object classification system. Code: https://github.com/vision-agh/DVS_Filtering
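The idea of introducing controlled noise into event training data can be illustrated with a minimal sketch. The abstract does not specify the noise model, so everything here is an assumption: the function name `inject_noise`, the `(t, x, y, polarity)` tuple layout, and the choice of noise that is uniform in time, position, and polarity. It is not taken from the linked repository.

```python
import random

def inject_noise(events, n_noise, width, height, seed=0):
    """Return events plus n_noise uniformly random events, sorted by timestamp.

    events: non-empty list of (t, x, y, polarity) tuples (hypothetical layout).
    """
    rng = random.Random(seed)
    t_min = min(e[0] for e in events)
    t_max = max(e[0] for e in events)
    # Assumed noise model: uniform in time, pixel position, and polarity.
    noise = [
        (rng.uniform(t_min, t_max),
         rng.randrange(width),
         rng.randrange(height),
         rng.randrange(2))
        for _ in range(n_noise)
    ]
    # Merge and restore chronological order, as event streams are time-ordered.
    return sorted(events + noise, key=lambda e: e[0])

clean = [(0.0, 1, 1, 1), (10.0, 2, 2, 0)]
noisy = inject_noise(clean, n_noise=5, width=4, height=4)
```

Varying `n_noise` per training sample would be one way to expose a model to a range of noise intensities; how the authors control the noise level is not stated in the abstract.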

Utilisation of Vision Systems and Digital Twin for Maintaining Cleanliness in Public Spaces

Nov 08, 2024

Abstract: Nowadays, the increasing demand for maintaining high cleanliness standards in public spaces results in the search for innovative solutions. The deployment of CCTV systems equipped with modern cameras and software enables not only real-time monitoring of the cleanliness status but also automatic detection of impurities and optimisation of cleaning schedules. The Digital Twin technology allows for the creation of a virtual model of the space, facilitating the simulation, training, and testing of cleanliness management strategies before implementation in the real world. In this paper, we present the utilisation of advanced vision surveillance systems and the Digital Twin technology in cleanliness management, using a railway station as an example. The Digital Twin was created based on an actual 3D model in the Nvidia Omniverse Isaac Sim simulator. A litter detector, bin occupancy level detector, stain segmentation, and a human detector (including the cleaning crew) along with their movement analysis were implemented. A preliminary assessment was conducted, and potential modifications for further enhancement and future development of the system were identified.

Fast-moving object counting with an event camera

Dec 16, 2022

Abstract: This paper proposes the use of an event camera as a component of a vision system that enables counting of fast-moving objects - in this case, falling corn grains. Cameras of this type transmit information about the change in brightness of individual pixels and are characterised by low latency, no motion blur, correct operation in different lighting conditions, as well as very low power consumption. The proposed counting algorithm processes events in real time. The operation of the solution was demonstrated on a stand consisting of a chute with a vibrating feeder, which allowed the number of falling grains to be adjusted. The objective of the control system with a PID controller was to maintain a constant average number of falling objects. The proposed solution was subjected to a series of tests to verify that the developed method operates correctly. The results confirm the validity of using an event camera to count small, fast-moving objects, along with the associated wide range of potential industrial applications.

Hardware architecture for high throughput event visual data filtering with matrix of IIR filters algorithm

Jul 02, 2022

Abstract: Neuromorphic vision is a rapidly growing field with numerous applications in the perception systems of autonomous vehicles. Unfortunately, due to the sensors' working principle, there is a significant amount of noise in the event stream. In this paper we present a novel algorithm based on an IIR filter matrix for filtering this type of noise and a hardware architecture that allows its acceleration using an SoC FPGA. Our method has a very good filtering efficiency for uncorrelated noise - over 99% of noisy events are removed. It has been tested on several event datasets with added random noise. We designed the hardware architecture in such a way as to reduce the utilisation of the FPGA's internal BRAM resources. This enabled a very low latency and a throughput of up to 385.8 million events per second (MEPS). The proposed hardware architecture was verified in simulation and in hardware on the Xilinx Zynq Ultrascale+ MPSoC chip on the Mercury+ XU9 module with the Mercury+ ST1 base board.

A Connected Component Labelling algorithm for multi-pixel per clock cycle video stream

May 20, 2021

Abstract: This work describes the hardware implementation of a connected component labelling (CCL) module in reprogrammable logic. The main novelty of the design is the "full", i.e. without any simplifications, support of a 4 pixel per clock format (4 ppc) and real-time processing of a 4K/UltraHD video stream (3840 x 2160 pixels) at 60 frames per second. To achieve this, a special labelling method was designed, together with a functionality that stops the input data stream in order to process pixel groups which require writing more than one merger into the equivalence table. The proposed module was verified in simulation and in hardware on the Xilinx Zynq Ultrascale+ MPSoC chip on the ZCU104 evaluation board.
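A short calculation shows why a multi-pixel-per-clock format matters at this resolution. The sketch below uses only the figures from the abstract and counts active pixels alone; it ignores blanking intervals, so the real pixel clock of a 4K video link is higher than this lower bound. The variable names are illustrative.

```python
WIDTH, HEIGHT = 3840, 2160   # 4K/UltraHD resolution from the abstract
FPS = 60                     # frames per second
PPC = 4                      # pixels processed per clock cycle

# Active pixels that must be processed every second.
pixels_per_second = WIDTH * HEIGHT * FPS

# Lower bound on the clock frequency, ignoring blanking intervals.
min_clock_hz = pixels_per_second / PPC

print(f"{pixels_per_second / 1e6:.3f} Mpixel/s")    # 497.664 Mpixel/s
print(f"{min_clock_hz / 1e6:.3f} MHz at {PPC} ppc")  # 124.416 MHz at 4 ppc
```

At one pixel per clock the same stream would need a clock of roughly 500 MHz for the active pixels alone, which is the motivation for processing four pixels in parallel.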

Exploration of Hardware Acceleration Methods for an XNOR Traffic Signs Classifier

Apr 06, 2021

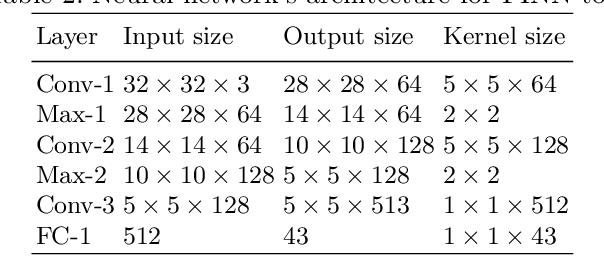

Abstract: Deep learning algorithms are a key component of many state-of-the-art vision systems, especially as Convolutional Neural Networks (CNN) outperform most solutions in terms of accuracy. To apply such algorithms in real-time applications, one has to address the challenges of memory and computational complexity. To deal with the first issue, we use networks with reduced precision, specifically a binary neural network (also known as XNOR). To satisfy the computational requirements, we propose to use highly parallel and low-power FPGA devices. In this work, we explore the possibility of accelerating XNOR networks for traffic sign classification. The trained binary networks are implemented on the ZCU 104 development board, equipped with a Zynq UltraScale+ MPSoC device, using two different approaches. Firstly, we propose a custom HDL accelerator for XNOR networks, which enables inference at almost 450 fps. Even better results are obtained with the second method - the Xilinx FINN accelerator - which processes input images at around 550 fps. Both approaches provide over 96% accuracy on the test set.
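The reason binary networks map well onto FPGA logic is that a dot product of two {-1, +1} vectors reduces to an XNOR followed by a population count. The following is a minimal software sketch of that general identity, not of the accelerators described in the abstract; the helper names `encode` and `binary_dot` and the bit encoding (1 for +1, 0 for -1) are assumptions made for illustration.

```python
def encode(values):
    """Pack a list of +1/-1 values into an int: bit i is 1 where values[i] == +1."""
    bits = 0
    for i, v in enumerate(values):
        if v == 1:
            bits |= 1 << i
    return bits

def binary_dot(a_bits, b_bits, n):
    """Dot product of two n-element {-1, +1} vectors given in packed form."""
    mask = (1 << n) - 1
    # XNOR marks positions where the signs agree; count them (popcount).
    matches = bin(~(a_bits ^ b_bits) & mask).count("1")
    # Each agreement contributes +1 and each disagreement -1.
    return 2 * matches - n

a = [1, -1, 1, 1]
b = [1, 1, -1, 1]
result = binary_dot(encode(a), encode(b), len(a))  # 1 - 1 - 1 + 1 = 0
```

In hardware the multiply-accumulate units of a conventional layer are replaced by XNOR gates and a popcount tree, which is what keeps both memory use and logic cost low.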
