Hubert Szolc

Vision-based automatic fruit counting with UAV

Mar 17, 2025

Abstract: The use of unmanned aerial vehicles (UAVs) for smart agriculture is becoming increasingly popular. This is evidenced by recent scientific works, as well as the various competitions organised on this topic. Therefore, in this work we present a system for automatic fruit counting using UAVs. To detect the fruit, our solution uses a vision algorithm that processes streams from an RGB camera and a depth sensor using classical image operations. Our system also allows the planning and execution of flight trajectories, taking into account the minimisation of flight time and distance covered. We tested the proposed solution in simulation and obtained an average score of 87.27/100 points from a total of 500 missions. We also submitted it to the UAV Competition organised as part of the ICUAS 2024 conference, where we achieved an average score of 84.83/100 points, placing 6th in a field of 23 teams and advancing to the finals.

Utilisation of Vision Systems and Digital Twin for Maintaining Cleanliness in Public Spaces

Nov 08, 2024

Abstract: Nowadays, the increasing demand for maintaining high cleanliness standards in public spaces results in the search for innovative solutions. The deployment of CCTV systems equipped with modern cameras and software enables not only real-time monitoring of the cleanliness status but also automatic detection of impurities and optimisation of cleaning schedules. The Digital Twin technology allows for the creation of a virtual model of the space, facilitating the simulation, training, and testing of cleanliness management strategies before implementation in the real world. In this paper, we present the utilisation of advanced vision surveillance systems and the Digital Twin technology in cleanliness management, using a railway station as an example. The Digital Twin was created based on an actual 3D model in the Nvidia Omniverse Isaac Sim simulator. A litter detector, bin occupancy level detector, stain segmentation, and a human detector (including the cleaning crew) along with their movement analysis were implemented. A preliminary assessment was conducted, and potential modifications for further enhancement and future development of the system were identified.

Tangled Program Graphs as an alternative to DRL-based control algorithms for UAVs

Nov 08, 2024

Abstract: Deep reinforcement learning (DRL) is currently the most popular AI-based approach to autonomous vehicle control. An agent, trained for this purpose in simulation, can interact with the real environment with human-level performance. Despite very good results in terms of selected metrics, this approach has some significant drawbacks: high computational requirements and low explainability. Because of that, a DRL-based agent cannot be used in some control tasks, especially when safety is the key issue. Therefore, we propose to use Tangled Program Graphs (TPGs) as an alternative to deep reinforcement learning in control-related tasks. In this approach, input signals are processed by simple programs that are combined in a graph structure. As a result, TPGs are less computationally demanding and their actions can be explained based on the graph structure. In this paper, we present our studies on the use of TPGs as an alternative to DRL in control-related tasks. In particular, we consider the problem of navigating an unmanned aerial vehicle (UAV) through an unknown environment based solely on the on-board LiDAR sensor. The results of our work show promising prospects for the use of TPGs in control-related tasks.
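
To make the idea of simple programs combined in a graph more concrete, the following is a minimal sketch of how a TPG-style policy could pick an action. It is not the authors' implementation: the `Learner`, `Team`, and `act` names, the hand-written bidding functions, and the three-sector LiDAR observation `[left, front, right]` are all assumptions made for illustration. Each team asks its programs for a bid, follows the highest bidder, and either emits an atomic action or moves on to another team. The sketch also assumes every team can reach at least one atomic action.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple, Union


@dataclass
class Learner:
    name: str                                  # label used to explain the decision
    bid: Callable[[Sequence[float]], float]    # simple program producing a bid
    action: Union[str, "Team"]                 # atomic action or pointer to a team


@dataclass
class Team:
    learners: List[Learner]


def act(root: Team, observation: Sequence[float]) -> Tuple[str, List[str]]:
    """Follow the highest bidders from the root team until an atomic action."""
    team, visited, trace = root, set(), []
    while True:
        visited.add(id(team))
        # Ignore learners that point back to an already visited team (no cycles).
        candidates = [
            l for l in team.learners
            if isinstance(l.action, str) or id(l.action) not in visited
        ]
        best = max(candidates, key=lambda l: l.bid(observation))
        trace.append(best.name)
        if isinstance(best.action, str):
            return best.action, trace
        team = best.action


# Hypothetical graph: observation = [left, front, right] distances in metres.
avoid = Team([
    Learner("turn_left", lambda o: o[0], "left"),
    Learner("turn_right", lambda o: o[2], "right"),
])
root = Team([
    Learner("go_forward", lambda o: o[1], "forward"),
    Learner("obstacle_ahead", lambda o: 2.0, avoid),
])

print(act(root, [3.0, 0.5, 1.0]))
```

The returned trace lists the programs that won at each team, which is one way the graph structure can be used to explain an action.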

LiDAR-based drone navigation with reinforcement learning

Jul 26, 2023

Abstract: Reinforcement learning is of increasing importance in the field of robot control, and simulation plays a key role in this process. For unmanned aerial vehicles (UAVs, drones), the number of published scientific papers involving this approach is also increasing. In this work, an autonomous drone control system was prepared to fly forward (according to its coordinate system) and pass the trees encountered in a forest based on data from a rotating LiDAR sensor. The Proximal Policy Optimization (PPO) algorithm, an example of reinforcement learning (RL), was used to prepare it. A custom simulator in the Python language was developed for this purpose. The Gazebo environment, integrated with the Robot Operating System (ROS), was also used to test the resulting control algorithm. Finally, the prepared solution was implemented on the Nvidia Jetson Nano eGPU and verified in real test scenarios. During them, the drone successfully completed the set task and was able to repeatably avoid trees and fly through the forest.
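
For readers unfamiliar with PPO, the sketch below shows the standard clipped surrogate objective that the algorithm maximises. It is a generic illustration, not code from the paper: the function name, the plain-list inputs, and the default `clip_eps=0.2` are assumptions. Each ratio is the probability of an action under the new policy divided by its probability under the old one, and each advantage estimates how much better the action was than expected.

```python
def ppo_clipped_objective(ratios, advantages, clip_eps=0.2):
    """Mean clipped surrogate objective (to be maximised) over a batch."""
    total = 0.0
    for ratio, advantage in zip(ratios, advantages):
        # Keep the probability ratio inside [1 - eps, 1 + eps].
        clipped = max(1.0 - clip_eps, min(1.0 + clip_eps, ratio))
        # Take the more pessimistic of the unclipped and clipped terms.
        total += min(ratio * advantage, clipped * advantage)
    return total / len(ratios)


print(ppo_clipped_objective([1.5, 0.5], [1.0, -1.0]))
```

Clipping removes the incentive to move the policy far from the one that collected the data, which keeps each update small.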

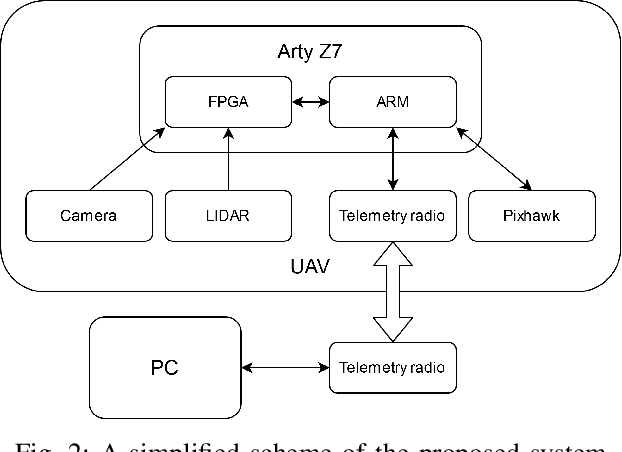

Hardware-in-the-loop simulation of a UAV autonomous landing algorithm implemented in SoC FPGA

Jul 25, 2022

Abstract: This paper presents a system for hardware-in-the-loop (HiL) simulation of unmanned aerial vehicle (UAV) control algorithms implemented on heterogeneous SoC FPGA computing platforms. The AirSim simulator running on a PC and an Arty Z7 development board with a Zynq SoC chip from AMD Xilinx were used. Communication was carried out via a serial USB link. An application for autonomous landing on a specially marked landing strip was selected as a case study. A landing site detection algorithm was implemented on the Zynq SoC platform. This allowed a 1280 x 720 @ 60 fps video stream to be processed in real time. The tests performed showed that the system works correctly and that there are no delays that could negatively affect the stability of the control. The proposed concept is characterised by relative simplicity and low implementation cost. At the same time, it can be applied to test various types of high-level perception and control algorithms for UAVs implemented on embedded platforms. We provide the developed code on GitHub, which includes both Python scripts running on the PC and C code running on the Arty Z7.

A simple vision-based navigation and control strategy for autonomous drone racing

Apr 20, 2021

Abstract: In this paper, we present a control system that allows a drone to fly autonomously through a series of gates marked with ArUco tags. A simple and low-cost DJI Tello EDU quad-rotor platform was used. Based on the API provided by the manufacturer, we have created a Python application that enables communication with the drone over WiFi, realises drone positioning based on visual feedback, and generates control commands. Two control strategies were proposed, compared, and critically analysed. In addition, the accuracy of the positioning method used was measured. The application was evaluated on a laptop computer (about 40 fps) and an Nvidia Jetson TX2 embedded GPU platform (about 25 fps). We provide the developed code on GitHub.
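
As a rough illustration of control based on visual feedback, the sketch below turns the pixel position of a detected gate marker into yaw and climb commands with a proportional rule. It is not one of the two strategies from the paper: the function name, the gain, the 960 x 720 image size, and the command range of -100 to 100 are assumptions, and marker detection itself is left out.

```python
def gate_tracking_command(marker_center, image_size=(960, 720), gain=0.25, limit=100):
    """Proportional yaw/climb commands that steer the marker to the image centre."""
    cx, cy = marker_center
    width, height = image_size
    err_x = cx - width / 2     # positive: marker is right of centre
    err_y = height / 2 - cy    # positive: marker is above centre (image y grows downward)

    def clamp(value):
        # Saturate the command to the assumed range [-limit, limit].
        return max(-limit, min(limit, value))

    return clamp(gain * err_x), clamp(gain * err_y)


print(gate_tracking_command((580, 360)))
```

A marker 100 pixels to the right of the image centre yields a positive yaw command and no climb, so the drone would turn toward the gate while holding its altitude.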

Optimisation of a Siamese Neural Network for Real-Time Energy Efficient Object Tracking

Jul 01, 2020

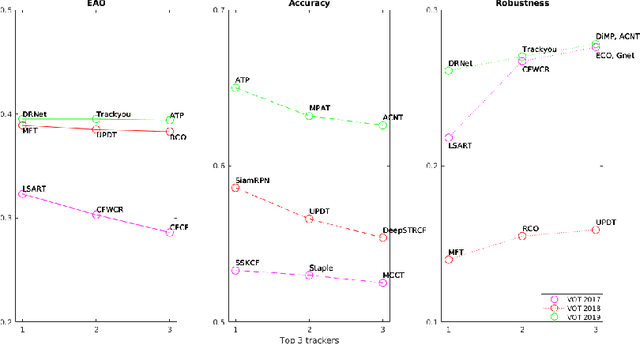

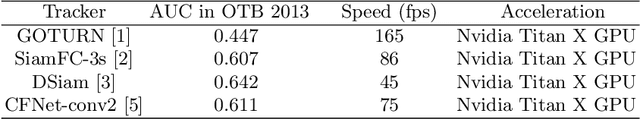

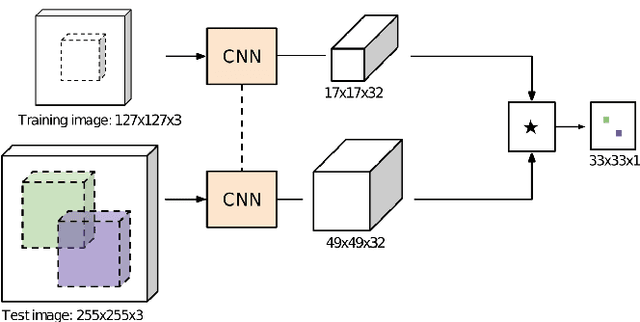

Abstract: In this paper, research on optimising visual object tracking using a Siamese neural network for embedded vision systems is presented. It was assumed that the solution should operate in real time, preferably for a high-resolution video stream, with the lowest possible energy consumption. To meet these requirements, techniques such as the reduction of computational precision and pruning were considered. Brevitas, a tool dedicated to the optimisation and quantisation of neural networks for FPGA implementation, was used. A number of training scenarios were tested with varying levels of optimisation, from integer uniform quantisation with 16 bits to ternary and binary networks. Next, the influence of these optimisations on the tracking performance was evaluated. It was possible to reduce the size of the convolutional filters by up to 10 times relative to the original network. The obtained results indicate that quantisation can significantly reduce the memory and computational complexity of the proposed network while still enabling precise tracking, thus allowing its use in embedded vision systems. Moreover, quantisation of weights positively affects network training by decreasing overfitting.
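
The three quantisation levels mentioned above can be illustrated with a few lines of plain Python. This is a sketch of the general techniques only; it does not use the Brevitas API, and the function names, the symmetric scaling scheme, and the ternary threshold are assumptions chosen for clarity.

```python
def quantize_uniform(weights, bits):
    """Symmetric uniform quantisation: snap each weight to a signed integer grid."""
    qmax = 2 ** (bits - 1) - 1                  # largest representable integer level
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    return [round(w / scale) * scale for w in weights]


def quantize_ternary(weights, threshold):
    """Map each weight to -1, 0, or +1; small weights (|w| <= threshold) become 0."""
    return [0 if abs(w) <= threshold else (1 if w > 0 else -1) for w in weights]


def quantize_binary(weights):
    """Map each weight to its sign; zero is treated as +1 by convention here."""
    return [1 if w >= 0 else -1 for w in weights]


weights = [0.9, -0.05, -0.6]
print(quantize_uniform(weights, bits=16))
print(quantize_ternary(weights, threshold=0.1))
print(quantize_binary(weights))
```

Fewer bits per weight mean less memory and simpler arithmetic, at the cost of a coarser approximation of the original values.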

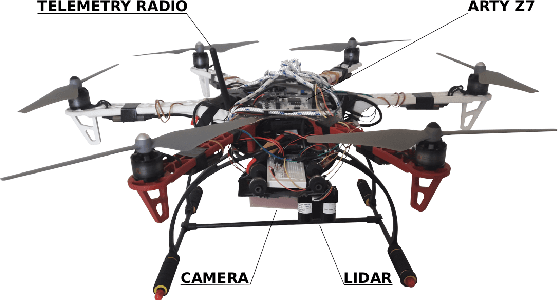

Vision based hardware-software real-time control system for autonomous landing of an UAV

Apr 24, 2020

Abstract: In this paper, we present a vision-based hardware-software control system enabling autonomous landing of a multirotor unmanned aerial vehicle (UAV). It allows the detection of a marked landing pad in real time for a 1280 x 720 @ 60 fps video stream. In addition, a LiDAR sensor is used to measure the altitude above ground. A heterogeneous Zynq SoC device is used as the computing platform. The solution was tested on a number of sequences and the landing pad was detected with 96% accuracy. This research shows that a reprogrammable heterogeneous computing system is a good solution for UAVs because it enables real-time data stream processing with relatively low energy consumption.
