Marc Goutier

Transferring Domain Knowledge with (X)AI-Based Learning Systems

Jun 03, 2024

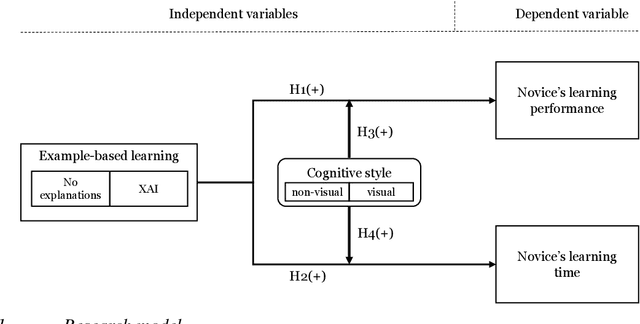

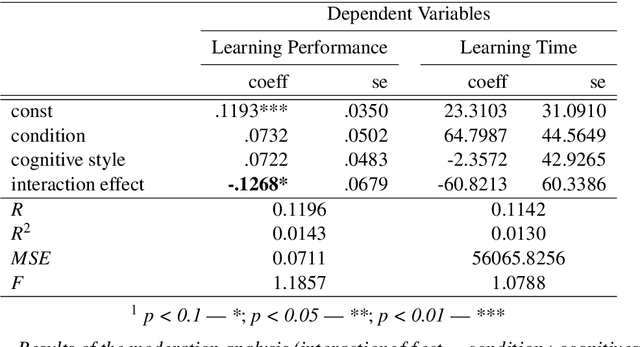

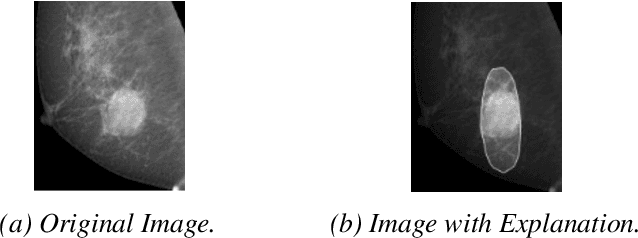

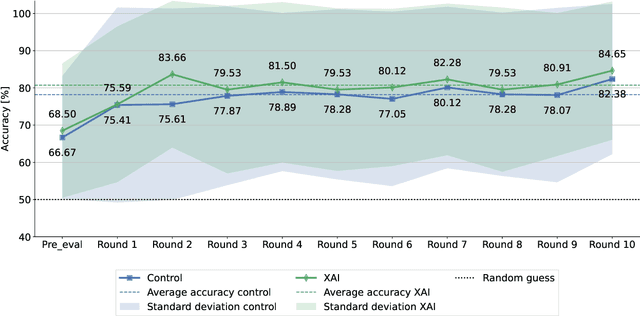

Abstract: In numerous high-stakes domains, training novices via conventional learning systems does not suffice. To impart tacit knowledge, experts' hands-on guidance is imperative. However, training novices by experts is costly and time-consuming, increasing the need for alternatives. Explainable artificial intelligence (XAI) has conventionally been used to make black-box artificial intelligence systems interpretable. In this work, we utilize XAI as an alternative: An (X)AI system is trained on experts' past decisions and is then employed to teach novices by providing examples coupled with explanations. In a study with 249 participants, we measure the effectiveness of such an approach for a classification task. We show that (X)AI-based learning systems are able to induce learning in novices and that their cognitive styles moderate learning. Thus, we take the first steps to reveal the impact of XAI on human learning and point AI developers to future options to tailor the design of (X)AI-based learning systems.

Training Novices: The Role of Human-AI Collaboration and Knowledge Transfer

Jul 01, 2022

Abstract: Across a multitude of work environments, expert knowledge is imperative for humans to conduct tasks with high performance and ensure business success. These humans possess task-specific expert knowledge (TSEK) and hence, represent subject matter experts (SMEs). However, not only demographic changes but also personnel downsizing strategies lead and will continue to lead to departures of SMEs within organizations, which constitutes the challenge of how to retain that expert knowledge and train novices to keep the competitive advantage elicited by that expert knowledge. SMEs training novices is time- and cost-intensive, which intensifies the need for alternatives. Human-AI collaboration (HAIC) poses a way out of this dilemma, facilitating alternatives to preserve expert knowledge and teach it to novices for tasks conducted by SMEs beforehand. In this workshop paper, we (1) propose a framework on how HAIC can be utilized to train novices on particular tasks, (2) illustrate the role of explicit and tacit knowledge in this training process via HAIC, and (3) outline a preliminary experiment design to assess the ability of AI systems in HAIC to act as a trainer to transfer TSEK to novices who do not possess prior TSEK.

Human vs. supervised machine learning: Who learns patterns faster?

Nov 30, 2020

Abstract: The capabilities of supervised machine learning (SML), especially compared to human abilities, are being discussed in scientific research and in the usage of SML. This study provides an answer to how learning performance differs between humans and machines when there is limited training data. We have designed an experiment in which 44 humans and three different machine learning algorithms identify patterns in labeled training data and have to label instances according to the patterns they find. The results show a high dependency between performance and the underlying patterns of the task. Whereas humans perform relatively similarly across all patterns, machines show large performance differences for the various patterns in our experiment. After seeing 20 instances in the experiment, human performance does not improve anymore, which we relate to theories of cognitive overload. Machines learn more slowly but can reach the same level as humans, or may even outperform them, in 2 of the 4 patterns used. However, machines need more instances compared to humans for the same results. The performance of machines is comparably lower for the other 2 patterns due to the difficulty of combining input features.

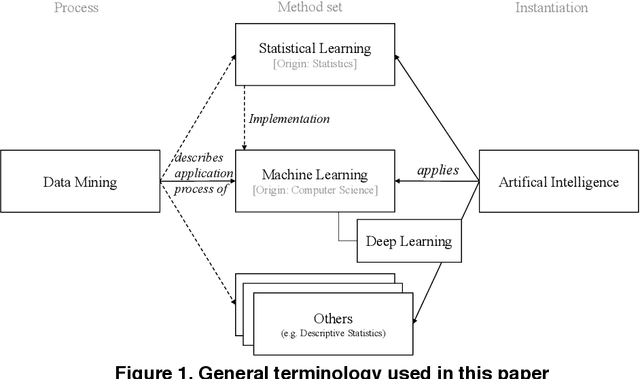

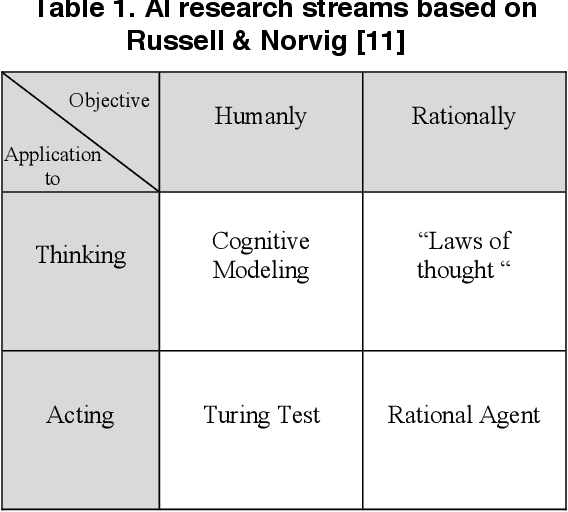

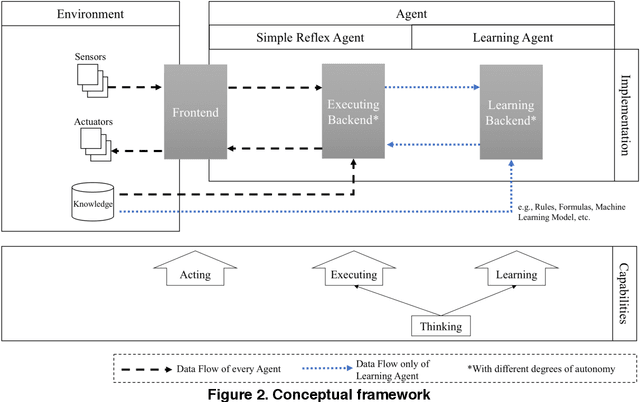

Machine Learning in Artificial Intelligence: Towards a Common Understanding

Mar 27, 2020

Abstract: The application of "machine learning" and "artificial intelligence" has become popular within the last decade. Both terms are frequently used in science and media, sometimes interchangeably, sometimes with different meanings. In this work, we aim to clarify the relationship between these terms and, in particular, to specify the contribution of machine learning to artificial intelligence. We review relevant literature and present a conceptual framework which clarifies the role of machine learning in building (artificial) intelligent agents. Hence, we seek to provide more terminological clarity and a starting point for (interdisciplinary) discussions and future research.
