Marc Badger

Multi-view Tracking, Re-ID, and Social Network Analysis of a Flock of Visually Similar Birds in an Outdoor Aviary

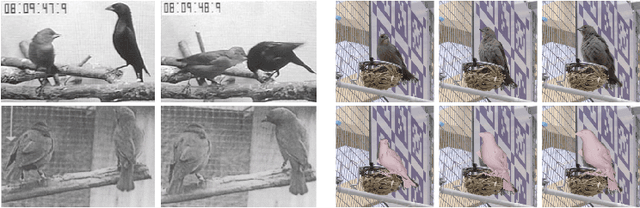

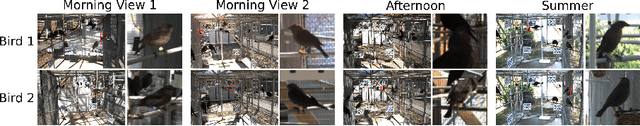

Dec 01, 2022

Abstract: The ability to capture detailed interactions among individuals in a social group is foundational to our study of animal behavior and neuroscience. Recent advances in deep learning and computer vision are driving rapid progress in methods that can record the actions and interactions of multiple individuals simultaneously. Many social species, such as birds, however, live deeply embedded in a three-dimensional world. This world introduces additional perceptual challenges such as occlusions, orientation-dependent appearance, large variation in apparent size, and poor sensor coverage for 3D reconstruction, that are not encountered by applications studying animals that move and interact only on 2D planes. Here we introduce a system for studying the behavioral dynamics of a group of songbirds as they move throughout a 3D aviary. We study the complexities that arise when tracking a group of closely interacting animals in three dimensions and introduce a novel dataset for evaluating multi-view trackers. Finally, we analyze captured ethogram data and demonstrate that social context affects the distribution of sequential interactions between birds in the aviary.

Birds of a Feather: Capturing Avian Shape Models from Images

May 19, 2021

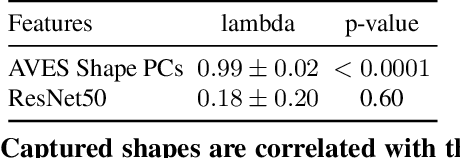

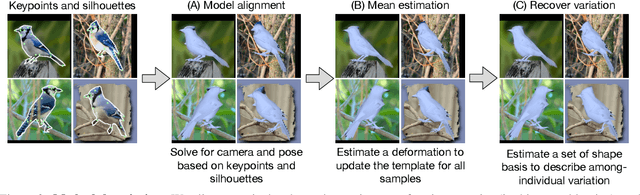

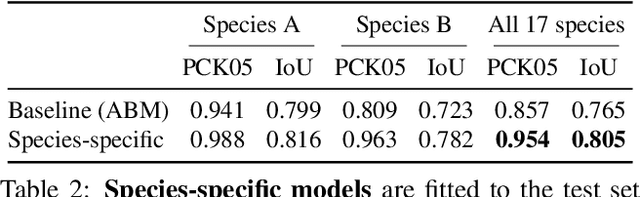

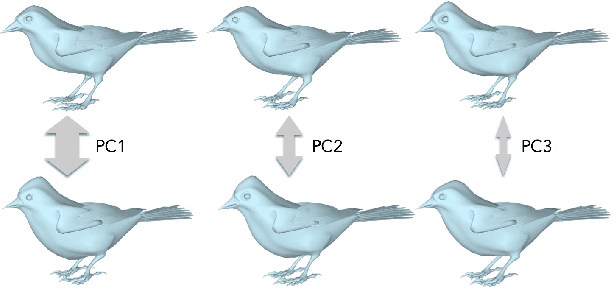

Abstract: Animals are diverse in shape, but building a deformable shape model for a new species is not always possible due to the lack of 3D data. We present a method to capture new species using an articulated template and images of that species. In this work, we focus mainly on birds. Although birds represent almost twice the number of species as mammals, no accurate shape model is available. To capture a novel species, we first fit the articulated template to each training sample. By disentangling pose and shape, we learn a shape space that captures variation both among species and within each species from image evidence. We learn models of multiple species from the CUB dataset, and contribute new species-specific and multi-species shape models that are useful for downstream reconstruction tasks. Using a low-dimensional embedding, we show that our learned 3D shape space better reflects the phylogenetic relationships among birds than learned perceptual features.

3D Bird Reconstruction: a Dataset, Model, and Shape Recovery from a Single View

Aug 13, 2020

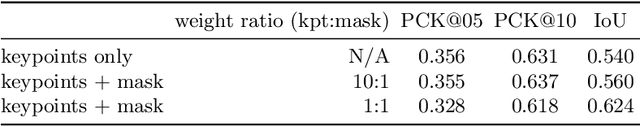

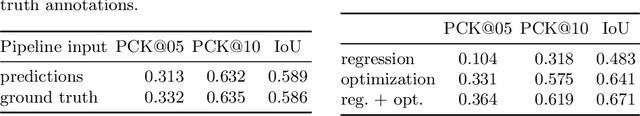

Abstract: Automated capture of animal pose is transforming how we study neuroscience and social behavior. Movements carry important social cues, but current methods are not able to robustly estimate pose and shape of animals, particularly for social animals such as birds, which are often occluded by each other and objects in the environment. To address this problem, we first introduce a model and multi-view optimization approach, which we use to capture the unique shape and pose space displayed by live birds. We then introduce a pipeline and experiments for keypoint, mask, pose, and shape regression that recovers accurate avian postures from single views. Finally, we provide extensive multi-view keypoint and mask annotations collected from a group of 15 social birds housed together in an outdoor aviary. The project website with videos, results, code, mesh model, and the Penn Aviary Dataset can be found at https://marcbadger.github.io/avian-mesh.
