Manuel Silva

All for One, and One for All: UrbanSyn Dataset, the third Musketeer of Synthetic Driving Scenes

Dec 19, 2023

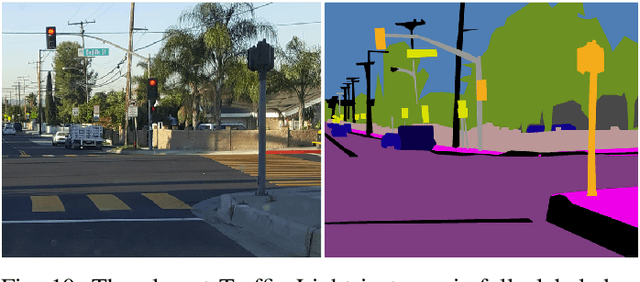

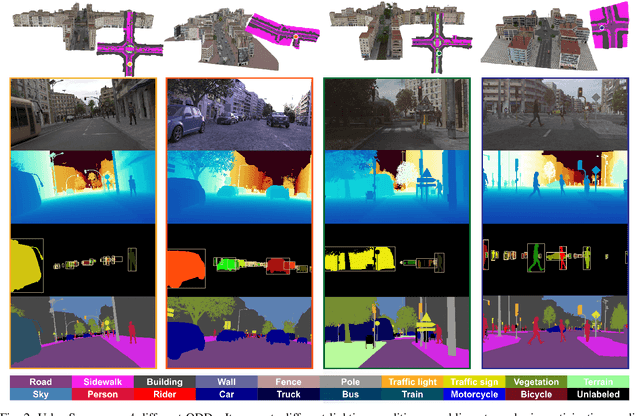

Abstract: We introduce UrbanSyn, a photorealistic dataset acquired through semi-procedurally generated synthetic urban driving scenarios. Developed using high-quality geometry and materials, UrbanSyn provides pixel-level ground truth, including depth, semantic segmentation, and instance segmentation with object bounding boxes and occlusion degree. It complements the GTAV and Synscapes datasets to form what we call the 'Three Musketeers'. We demonstrate the value of the Three Musketeers in unsupervised domain adaptation for image semantic segmentation. Results on the real-world datasets Cityscapes, Mapillary Vistas, and BDD100K establish new benchmarks, largely attributed to UrbanSyn. We make UrbanSyn openly and freely accessible (www.urbansyn.org).

Low-Resource White-Box Semantic Segmentation of Supporting Towers on 3D Point Clouds via Signature Shape Identification

Jun 13, 2023

Abstract: Research in 3D semantic segmentation has been increasing performance metrics, like the IoU, by scaling model complexity and computational resources, leaving behind researchers and practitioners who (1) cannot access the necessary resources and (2) need transparency on the model decision mechanisms. In this paper, we propose SCENE-Net, a low-resource white-box model for 3D point cloud semantic segmentation. SCENE-Net identifies signature shapes on the point cloud via group equivariant non-expansive operators (GENEOs), providing intrinsic geometric interpretability. Our training time on a laptop is 85 min, and our inference time is 20 ms. SCENE-Net has 11 trainable geometrical parameters and requires less data than black-box models. SCENE-Net offers robustness to noisy labeling and data imbalance and has comparable IoU to state-of-the-art methods. With this paper, we release a 40 000 km labeled dataset of rural terrain point clouds and our code implementation.
