Jose L. Gómez

Divide, Ensemble and Conquer: The Last Mile on Unsupervised Domain Adaptation for On-Board Semantic Segmentation

Jun 27, 2024

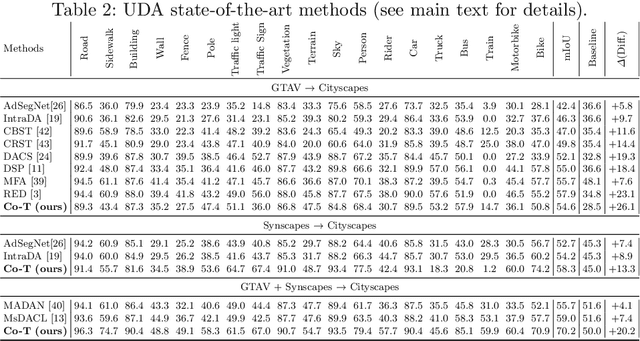

Abstract: The last mile of unsupervised domain adaptation (UDA) for semantic segmentation is the challenge of solving the syn-to-real domain gap. Recent UDA methods have progressed significantly, yet they often rely on strategies customized for synthetic single-source datasets (e.g., GTA5), which limits their generalization to multi-source datasets. Conversely, synthetic multi-source datasets hold promise for advancing the last mile of UDA but remain underutilized in current research. Thus, we propose DEC, a flexible UDA framework for multi-source datasets. Following a divide-and-conquer strategy, DEC simplifies the task by grouping semantic classes into categories, training a model for each category, and fusing their outputs with an ensemble model trained exclusively on synthetic datasets to obtain the final segmentation mask. DEC can integrate with existing UDA methods, achieving state-of-the-art performance on Cityscapes, BDD100K, and Mapillary Vistas, and significantly narrowing the syn-to-real domain gap.
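The divide-and-fuse idea in the abstract above can be illustrated with a small sketch. Everything here is an assumption made for illustration: the class grouping in `CATEGORIES`, the per-pixel `(label, confidence)` format, and the names `fuse_pixel` and `fuse_mask` are hypothetical. In particular, the abstract says the fusion is done by an ensemble model trained on synthetic data; the sketch replaces that learned model with a simple highest-confidence rule.

```python
from typing import Dict, List, Tuple

# Hypothetical grouping of semantic classes into categories.
# The abstract does not list the actual categories used by DEC.
CATEGORIES: Dict[str, List[str]] = {
    "background": ["road", "sky", "building"],
    "vehicles": ["car", "bus"],
    "humans": ["person", "rider"],
}

# One category model's output for one pixel:
# (a class from its category, or "other"; confidence in [0, 1]).
Prediction = Tuple[str, float]


def fuse_pixel(predictions: Dict[str, Prediction]) -> str:
    """Stand-in fusion rule: keep the most confident in-category class.

    This is NOT the learned ensemble model described in the abstract;
    it only shows where per-category outputs are merged.
    """
    best_label, best_conf = "unknown", 0.0
    for category, (label, conf) in predictions.items():
        if label == "other":
            continue  # this category model makes no claim about the pixel
        if label not in CATEGORIES[category]:
            raise ValueError(f"{label!r} is not a class of {category!r}")
        if conf > best_conf:
            best_label, best_conf = label, conf
    return best_label


def fuse_mask(masks: Dict[str, List[List[Prediction]]]) -> List[List[str]]:
    """Fuse per-category prediction grids into one segmentation mask."""
    names = list(masks)
    height = len(masks[names[0]])
    width = len(masks[names[0]][0])
    return [
        [fuse_pixel({n: masks[n][r][c] for n in names}) for c in range(width)]
        for r in range(height)
    ]


# Toy 1x2 image: the first pixel is claimed by "background",
# the second by "vehicles".
toy_masks = {
    "background": [[("road", 0.9), ("other", 0.8)]],
    "vehicles": [[("other", 0.7), ("car", 0.6)]],
    "humans": [[("other", 0.9), ("other", 0.9)]],
}
fused = fuse_mask(toy_masks)
```

Splitting the label space this way lets each model solve a smaller problem; the cost is that a separate step must resolve pixels that several category models claim, or that none of them claim.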

All for One, and One for All: UrbanSyn Dataset, the third Musketeer of Synthetic Driving Scenes

Dec 19, 2023

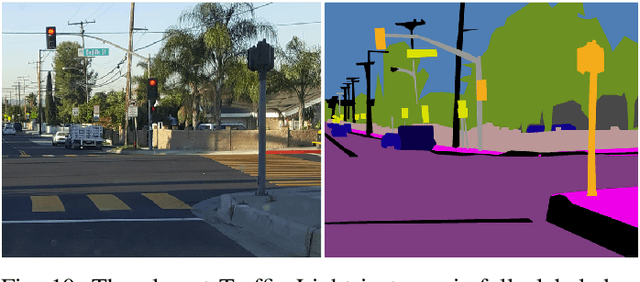

Abstract: We introduce UrbanSyn, a photorealistic dataset acquired through semi-procedurally generated synthetic urban driving scenarios. Developed using high-quality geometry and materials, UrbanSyn provides pixel-level ground truth, including depth, semantic segmentation, and instance segmentation with object bounding boxes and occlusion degree. It complements the GTAV and Synscapes datasets to form what we call the 'Three Musketeers'. We demonstrate the value of the Three Musketeers in unsupervised domain adaptation for image semantic segmentation. Results on the real-world datasets Cityscapes, Mapillary Vistas, and BDD100K establish new benchmarks, largely attributed to UrbanSyn. We make UrbanSyn openly and freely accessible (www.urbansyn.org).

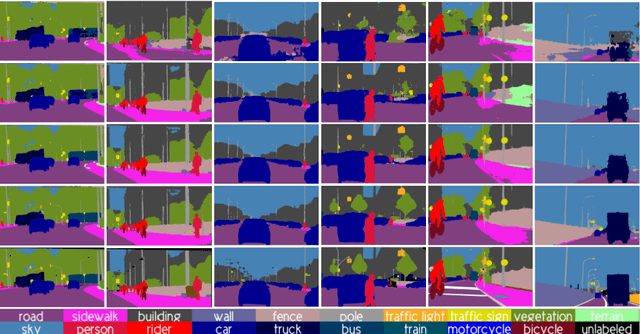

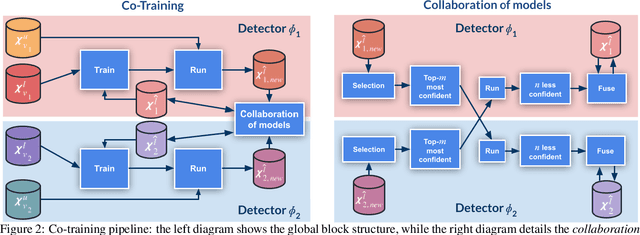

Co-Training for Unsupervised Domain Adaptation of Semantic Segmentation Models

May 31, 2022

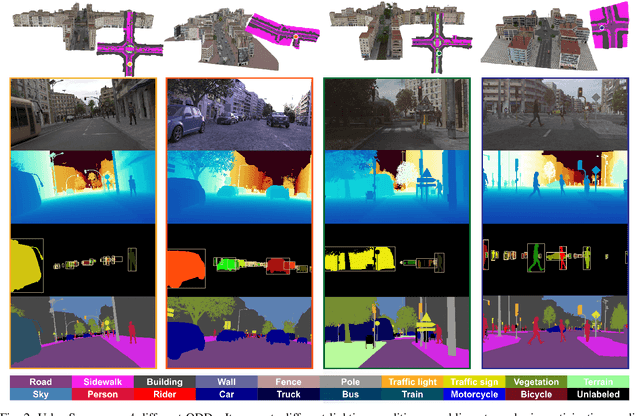

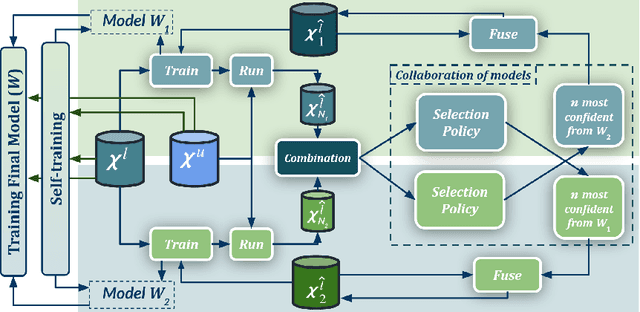

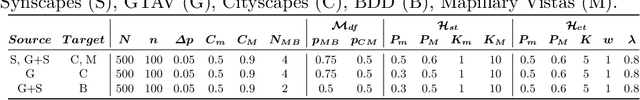

Abstract: Semantic image segmentation is addressed by training deep models. Since supervised training suffers from the curse of human-based image labeling, using synthetic images with automatically generated ground truth together with unlabeled real-world images is a promising alternative. This implies addressing an unsupervised domain adaptation (UDA) problem. In this paper, we propose a new co-training process for synth-to-real UDA of semantic segmentation models. First, we design a self-training procedure which provides two initial models. Then, we keep training these models in a collaborative manner to obtain the final model. The overall process treats the deep models as black boxes and drives their collaboration at the level of pseudo-labeled target images, i.e., neither modifying loss functions nor explicit feature alignment is required. We test our proposal on standard synthetic and real-world datasets. Our co-training shows improvements of 15-20 percentage points of mIoU over baselines, thus establishing new state-of-the-art results.
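Collaboration "at the level of pseudo-labeled target images" can be pictured with a minimal sketch in which two black-box models exchange only their confident predictions. The confidence threshold, the `IGNORE` label convention, and the function names `pseudo_labels` and `exchange` are assumptions for illustration; the abstract does not state how pseudo-labels are selected or exchanged.

```python
from typing import List, Tuple

IGNORE = -1  # assumed convention: pixels with this label are skipped in training

# One model's output for one pixel: (predicted class id, confidence in [0, 1]).
Pixel = Tuple[int, float]


def pseudo_labels(preds: List[Pixel], threshold: float) -> List[int]:
    """Keep confident predictions as labels and mark the rest as IGNORE."""
    return [label if conf >= threshold else IGNORE for label, conf in preds]


def exchange(
    preds_a: List[Pixel], preds_b: List[Pixel], threshold: float = 0.9
) -> Tuple[List[int], List[int]]:
    """Return (targets_for_a, targets_for_b).

    Each model receives the other's pseudo-labels, so the models interact
    only through labeled target images, never through losses or features.
    """
    return pseudo_labels(preds_b, threshold), pseudo_labels(preds_a, threshold)


# Toy target image with two pixels, as seen by hypothetical models A and B.
preds_a = [(0, 0.95), (1, 0.50)]
preds_b = [(0, 0.60), (2, 0.99)]
targets_for_a, targets_for_b = exchange(preds_a, preds_b)
```

Because only labels cross between the two models, either one could be swapped for a different architecture without touching the exchange step, which is the sense in which the models are treated as black boxes.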

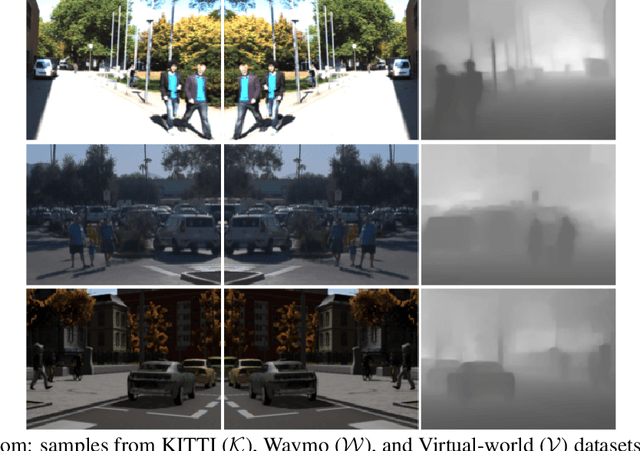

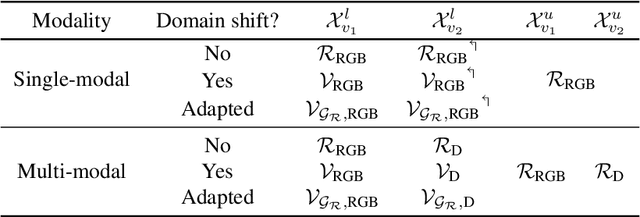

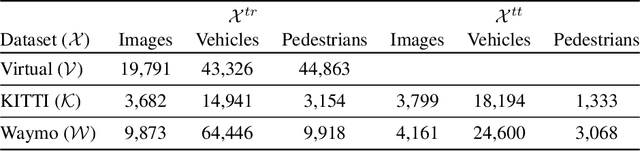

Co-training for Deep Object Detection: Comparing Single-modal and Multi-modal Approaches

Apr 23, 2021

Abstract: Top-performing computer vision models are powered by convolutional neural networks (CNNs). Training an accurate CNN highly depends on both the raw sensor data and their associated ground truth (GT). Collecting such GT is usually done through human labeling, which is time-consuming and does not scale as we wish. This data labeling bottleneck may be intensified by domain shifts among image sensors, which could force per-sensor data labeling. In this paper, we focus on the use of co-training, a semi-supervised learning (SSL) method, for obtaining self-labeled object bounding boxes (BBs), i.e., the GT to train deep object detectors. In particular, we assess the goodness of multi-modal co-training by relying on two different views of an image, namely, appearance (RGB) and estimated depth (D). Moreover, we compare appearance-based single-modal co-training with multi-modal co-training. Our results suggest that in a standard SSL setting (no domain shift, a small amount of human-labeled data) and under virtual-to-real domain shift (many virtual-world labeled data, no human-labeled data), multi-modal co-training outperforms single-modal co-training. In the latter case, when GAN-based domain translation is performed, both co-training modalities are on par, at least when using an off-the-shelf depth estimation model not specifically trained on the translated images.
