Manolis Tsiknakis

Synthetic Data Blueprint (SDB): A modular framework for the statistical, structural, and graph-based evaluation of synthetic tabular data

Dec 16, 2025

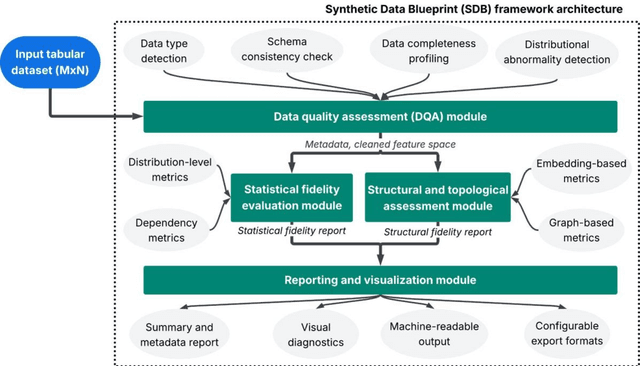

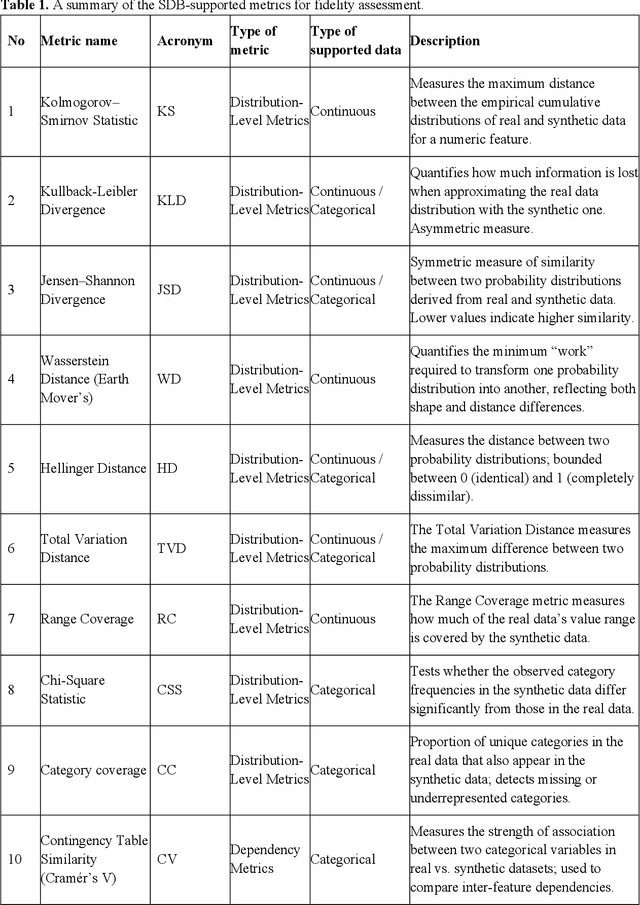

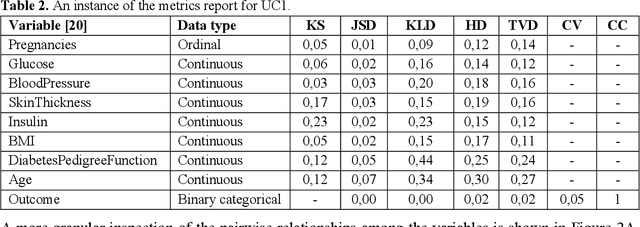

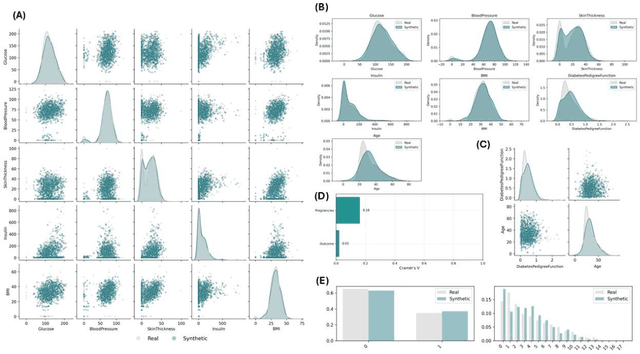

Abstract: In the rapidly evolving era of Artificial Intelligence (AI), synthetic data are widely used to accelerate innovation while preserving privacy and enabling broader data accessibility. However, the evaluation of synthetic data remains fragmented across heterogeneous metrics, ad-hoc scripts, and incomplete reporting practices. To address this gap, we introduce Synthetic Data Blueprint (SDB), a modular Python-based library to quantitatively and visually assess the fidelity of synthetic tabular data. SDB supports: (i) automated feature-type detection, (ii) distributional and dependency-level fidelity metrics, (iii) graph- and embedding-based structure preservation scores, and (iv) a rich suite of data visualization schemas. To demonstrate the breadth, robustness, and domain-agnostic applicability of SDB, we evaluated the framework across three real-world use cases that differ substantially in scale, feature composition, statistical complexity, and downstream analytical requirements. These include: (i) healthcare diagnostics, (ii) socioeconomic and financial modelling, and (iii) cybersecurity and network traffic analysis. These use cases reveal how SDB can address diverse data fidelity assessment challenges, ranging from mixed-type clinical variables to high-cardinality categorical attributes and high-dimensional telemetry signals, while offering consistent, transparent, and reproducible benchmarking across heterogeneous domains.

Efficient Pain Recognition via Respiration Signals: A Single Cross-Attention Transformer Multi-Window Fusion Pipeline

Jul 30, 2025

Abstract: Pain is a complex condition affecting a large portion of the population. Accurate and consistent evaluation is essential for individuals experiencing pain, and it supports the development of effective and advanced management strategies. Automatic pain assessment systems provide continuous monitoring and support clinical decision-making, aiming to reduce distress and prevent functional decline. This study has been submitted to the Second Multimodal Sensing Grand Challenge for Next-Gen Pain Assessment (AI4PAIN). The proposed method introduces a pipeline that leverages respiration as the input signal and incorporates a highly efficient cross-attention transformer alongside a multi-windowing strategy. Extensive experiments demonstrate that respiration is a valuable physiological modality for pain assessment. Moreover, experiments revealed that compact and efficient models, when properly optimized, can achieve strong performance, often surpassing larger counterparts. The proposed multi-window approach effectively captures both short-term and long-term features, as well as global characteristics, thereby enhancing the model's representational capacity.

Multi-Representation Diagrams for Pain Recognition: Integrating Various Electrodermal Activity Signals into a Single Image

Jul 30, 2025

Abstract: Pain is a multifaceted phenomenon that affects a substantial portion of the population. Reliable and consistent evaluation benefits those experiencing pain and underpins the development of effective and advanced management strategies. Automatic pain-assessment systems deliver continuous monitoring, inform clinical decision-making, and aim to reduce distress while preventing functional decline. By incorporating physiological signals, these systems provide objective, accurate insights into an individual's condition. This study has been submitted to the Second Multimodal Sensing Grand Challenge for Next-Gen Pain Assessment (AI4PAIN). The proposed method introduces a pipeline that leverages electrodermal activity signals as the input modality. Multiple representations of the signal are created, rendered as waveforms, and jointly visualized within a single multi-representation diagram. Extensive experiments incorporating various processing and filtering techniques, along with multiple representation combinations, demonstrate the effectiveness of the proposed approach. It consistently yields comparable, and in several cases superior, results to traditional fusion methods, establishing it as a robust alternative for integrating different signal representations or modalities.

Tiny-BioMoE: a Lightweight Embedding Model for Biosignal Analysis

Jul 30, 2025

Abstract: Pain is a complex and pervasive condition that affects a significant portion of the population. Accurate and consistent assessment is essential for individuals suffering from pain, as well as for developing effective management strategies in a healthcare system. Automatic pain assessment systems enable continuous monitoring, support clinical decision-making, and help minimize patient distress while mitigating the risk of functional deterioration. Leveraging physiological signals offers objective and precise insights into a person's state, and their integration in a multimodal framework can further enhance system performance. This study has been submitted to the Second Multimodal Sensing Grand Challenge for Next-Gen Pain Assessment (AI4PAIN). The proposed approach introduces Tiny-BioMoE, a lightweight pretrained embedding model for biosignal analysis. Trained on 4.4 million biosignal image representations and consisting of only 7.3 million parameters, it serves as an effective tool for extracting high-quality embeddings for downstream tasks. Extensive experiments involving electrodermal activity, blood volume pulse, respiratory signals, peripheral oxygen saturation, and their combinations highlight the model's effectiveness across diverse modalities in automatic pain recognition tasks. The model's architecture (code) and weights are available at https://github.com/GkikasStefanos/Tiny-BioMoE.

A Deep Learning approach for Depressive Symptoms assessment in Parkinson's disease patients using facial videos

May 05, 2025

Abstract: Parkinson's disease (PD) is a neurodegenerative disorder that manifests with motor and non-motor symptoms. Depressive symptoms are prevalent in PD, affecting up to 45% of patients. They are often underdiagnosed due to overlapping motor features, such as hypomimia. This study explores deep learning (DL) models (ViViT, Video Swin Tiny, and 3D CNN-LSTM with attention layers) to assess the presence and severity of depressive symptoms, as detected by the Geriatric Depression Scale (GDS), in PD patients through facial video analysis. The same parameters were assessed in a secondary analysis taking into account whether patients were one hour after (ON-medication state) or 12 hours without (OFF-medication state) dopaminergic medication. Using a dataset of 1,875 videos from 178 patients, the Video Swin Tiny model achieved the highest performance, with up to 94% accuracy and 93.7% F1-score in binary classification (presence or absence of depressive symptoms), and 87.1% accuracy with an 85.4% F1-score in multiclass tasks (absence, mild, or severe depressive symptoms).

PainFormer: a Vision Foundation Model for Automatic Pain Assessment

May 02, 2025

Abstract: Pain is a manifold condition that impacts a significant percentage of the population. Accurate and reliable pain evaluation for people who are suffering is crucial to developing effective and advanced pain management protocols. Automatic pain assessment systems provide continuous monitoring and support decision-making processes, ultimately aiming to alleviate distress and prevent functional decline. This study introduces PainFormer, a vision foundation model based on multi-task learning principles trained simultaneously on 14 tasks/datasets with a total of 10.9 million samples. Functioning as an embedding extractor for various input modalities, the foundation model provides feature representations to the Embedding-Mixer, a transformer-based module that performs the final pain assessment. Extensive experiments employing behavioral modalities (RGB, synthetic thermal, and estimated depth videos) and physiological modalities (ECG, EMG, GSR, and fNIRS) revealed that PainFormer effectively extracts high-quality embeddings from diverse input modalities. The proposed framework is evaluated on two pain datasets, BioVid and AI4Pain, and directly compared to 73 different methodologies documented in the literature. Experiments conducted in unimodal and multimodal settings demonstrate state-of-the-art performance across modalities and pave the way toward general-purpose models for automatic pain assessment.

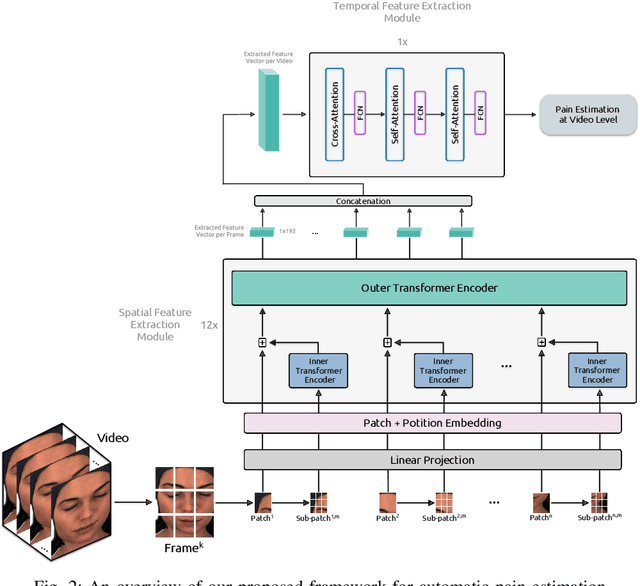

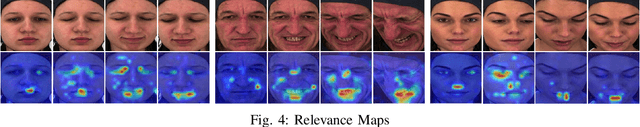

A Full Transformer-based Framework for Automatic Pain Estimation using Videos

Dec 19, 2024

Abstract: The automatic estimation of pain is essential in designing an optimal pain management system that offers reliable assessment and reduces the suffering of patients. In this study, we present a novel full transformer-based framework consisting of a Transformer in Transformer (TNT) model and a Transformer leveraging cross-attention and self-attention blocks. Using videos from the BioVid database, we demonstrate state-of-the-art performance, showing the efficacy, efficiency, and generalization capability of the framework across all the primary pain estimation tasks.

Synthetic Thermal and RGB Videos for Automatic Pain Assessment utilizing a Vision-MLP Architecture

Jul 29, 2024

Abstract: Pain assessment is essential in developing optimal pain management protocols to alleviate suffering and prevent functional decline in patients. Consequently, reliable and accurate automatic pain assessment systems are essential for continuous and effective patient monitoring. This study presents synthetic thermal videos generated by Generative Adversarial Networks, integrates them into the pain recognition pipeline, and evaluates their efficacy. A framework consisting of a Vision-MLP and a Transformer-based module is utilized, employing RGB and synthetic thermal videos in unimodal and multimodal settings. Experiments conducted on facial videos from the BioVid database demonstrate the effectiveness of synthetic thermal videos and underline their potential advantages.

Twins-PainViT: Towards a Modality-Agnostic Vision Transformer Framework for Multimodal Automatic Pain Assessment using Facial Videos and fNIRS

Jul 29, 2024

Abstract: Automatic pain assessment plays a critical role in advancing healthcare and optimizing pain management strategies. This study has been submitted to the First Multimodal Sensing Grand Challenge for Next-Gen Pain Assessment (AI4PAIN). The proposed multimodal framework utilizes facial videos and fNIRS and presents a modality-agnostic approach, alleviating the need for domain-specific models. The method employs a dual ViT configuration and adopts waveform representations for the fNIRS, as well as for the extracted embeddings from the two modalities. It achieves an accuracy of 46.76% in the multilevel pain assessment task, demonstrating the efficacy of the approach.

Multi-task Neural Networks for Pain Intensity Estimation using Electrocardiogram and Demographic Factors

Jul 28, 2024

Abstract: Pain is a complex phenomenon that is manifested and expressed by patients in various forms. Its immediate and objective recognition is of great importance for a reliable and unbiased healthcare system. In this work, we analyze electrocardiography signals, revealing variations in pain perception among different demographic groups. We exploit this insight by introducing a novel multi-task neural network for automatic pain estimation that utilizes the age and gender information of each individual, and show its advantages compared to other approaches.
